The Go AI that Shin Jin-seo uses as a sparring partner loses to a program that amateur human players can beat
A new model that even amateur Go players can beat has actually defeated the world's strongest Go AI, KataGo?

Yes, this jaw-dropping result comes from a new paper by researchers at MIT, UC Berkeley, and other institutions.

The researchers used adversarial attacks to exploit KataGo's blind spots, and with this technique a rookie-level Go program managed to defeat KataGo.

When KataGo plays without search, the attacker's win rate even reaches 99%.

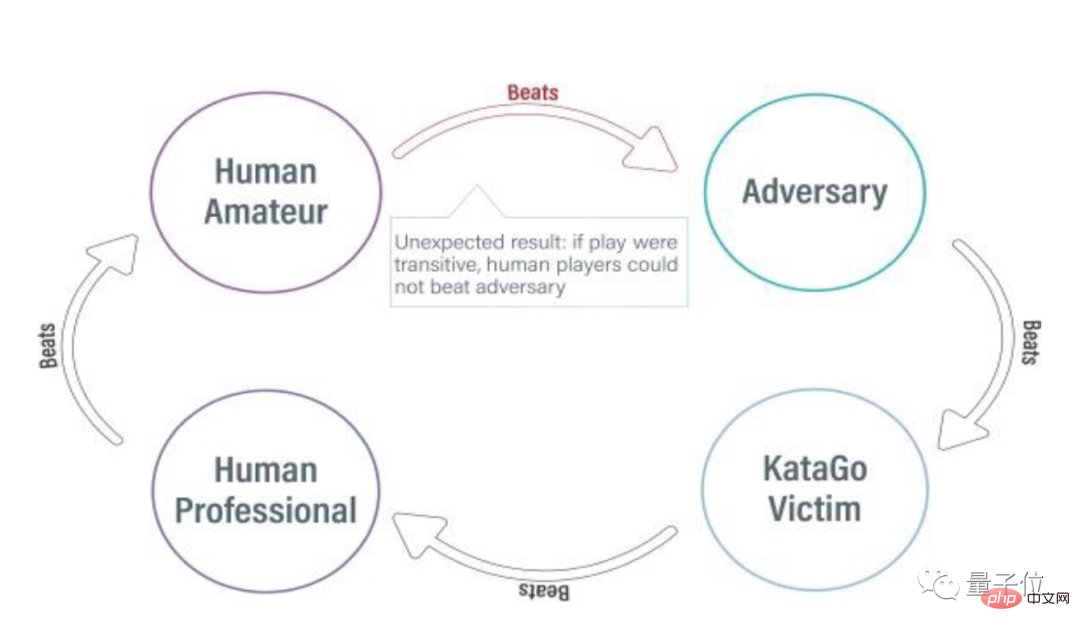

By this reckoning, the Go food chain suddenly becomes: amateur players > new AI > top Go AI?

Wait a minute, how can this new AI be so strong and so weak at the same time?

A cunning angle of attack

Before introducing the new AI, let's first look at the target of this attack: KataGo.

KataGo, currently the most powerful open source Go AI, was developed by Harvard AI researchers.

KataGo has previously beaten the superhuman-level ELF OpenGo and Leela Zero, and even without search it plays at the level of a top-100 European professional.

Shin Jin-seo, Korea's "number one" Go player, who just won the Samsung Cup and achieved "four crowns in three years", has been using KataGo as a sparring partner.

△Picture source: Hangame

Faced with such a strong opponent, the researchers chose an unconventional approach.

They found that although KataGo learned Go by playing millions of games against itself, this still does not cover every possible situation.

So instead of self-play, they turned to an adversarial attack:

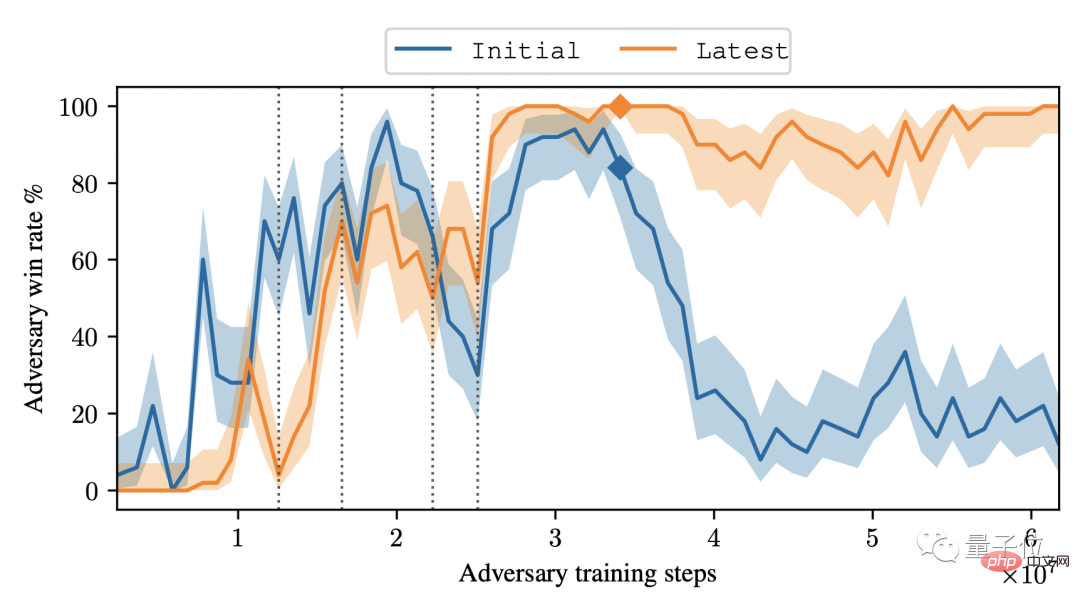

The attacker (the adversary) plays games against a fixed victim (KataGo), and these games are used to train the attacker.
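To make the setup concrete, here is a toy sketch of training against a frozen victim. It is not the paper's method: rock-paper-scissors stands in for Go, a hypothetical `frozen_victim` with a built-in bias (it over-plays rock) stands in for KataGo, and simple epsilon-greedy value estimates stand in for the reinforcement-learning algorithm. What it does show is the key asymmetry: only the adversary's estimates are updated, while the victim's policy never changes.

```python
import random

# Toy stand-in for Go: rock-paper-scissors (assumption for illustration only).
ACTIONS = ("rock", "paper", "scissors")
BEATS = {"rock": "scissors", "paper": "rock", "scissors": "paper"}


def reward(adversary_move: str, victim_move: str) -> int:
    """+1 if the adversary wins, -1 if it loses, 0 on a tie."""
    if adversary_move == victim_move:
        return 0
    return 1 if BEATS[adversary_move] == victim_move else -1


def frozen_victim(rng: random.Random) -> str:
    """Hypothetical fixed victim with a blind spot: it over-plays rock.
    These probabilities are never updated during training."""
    return rng.choices(ACTIONS, weights=(0.7, 0.2, 0.1))[0]


def train_adversary(steps: int = 5000, epsilon: float = 0.1, seed: int = 0) -> dict:
    """Epsilon-greedy value estimates; only the adversary learns."""
    rng = random.Random(seed)
    value = {a: 0.0 for a in ACTIONS}
    count = {a: 0 for a in ACTIONS}
    for _ in range(steps):
        if rng.random() < epsilon:
            move = rng.choice(ACTIONS)          # explore
        else:
            move = max(ACTIONS, key=value.get)  # exploit the current estimate
        r = reward(move, frozen_victim(rng))
        count[move] += 1
        value[move] += (r - value[move]) / count[move]  # incremental mean
    return value


values = train_adversary()
best = max(ACTIONS, key=values.get)
print(best, {a: round(v, 2) for a, v in values.items()})
```

With these assumed weights the adversary should settle on "paper", a counter that pays off only because the victim is frozen; a victim that kept learning could adapt to it.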

This change allowed them to train an end-to-end adversarial policy using only 0.3% of the data used to train KataGo.

Specifically, this adversarial policy does not win by outplaying KataGo at Go. Instead, it tricks KataGo into ending the game early in a position that favors the attacker.

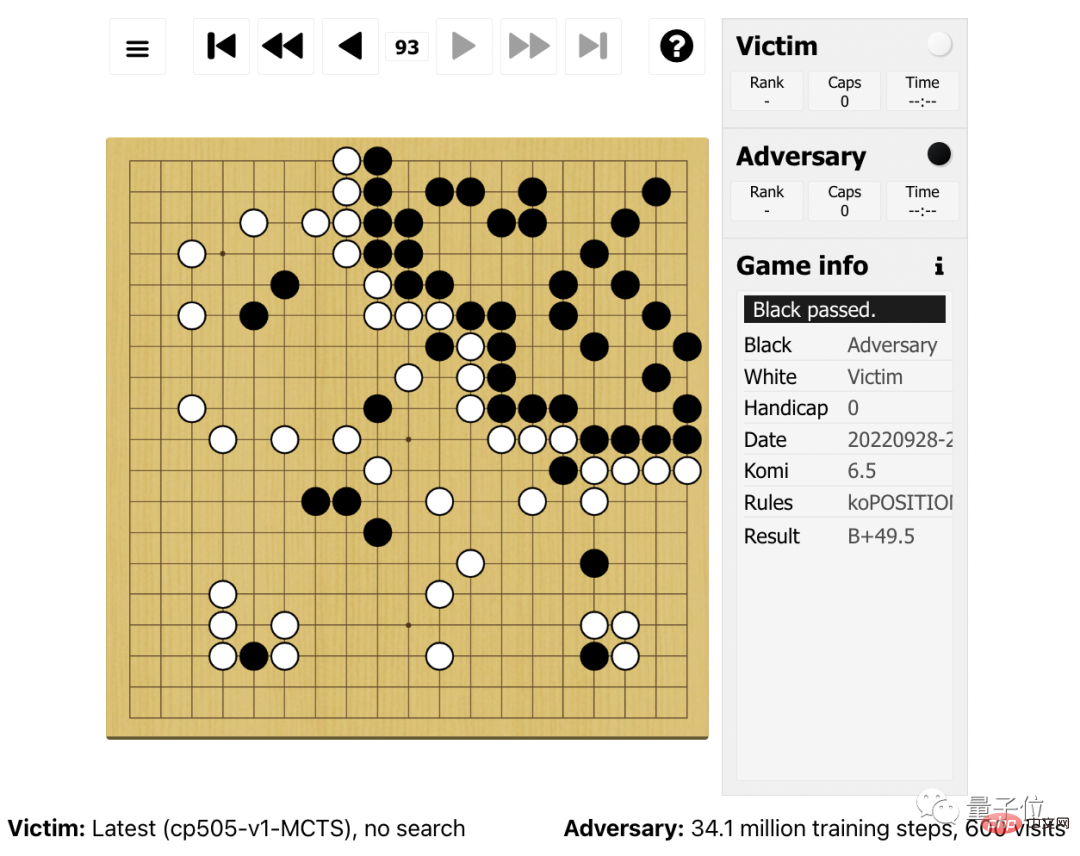

For example, in the position shown, the attacker, playing black, places most of its stones in the upper-right corner and leaves the rest of the board to KataGo, while deliberately scattering a few easily captured stones in those other areas.

Adam Gleave, a co-author of the paper, explained:

This approach makes KataGo mistakenly believe it has already won, because its territory (lower left) is much larger than its opponent's.

But the lower-left area doesn't actually earn it points, because there are still black stones there, meaning the area isn't fully secured.

Because KataGo is overconfident of victory, believing it will win once the game ends and the score is counted, it passes voluntarily. The attacker then passes as well, which ends the game and triggers scoring (when both players pass in succession, the game is over).

However, as Gleave explained, the black stones inside KataGo's territory have never actually been captured, so under the scoring rules they are not removed as "dead stones" and stay on the board. Areas that still contain black stones therefore cannot be counted as KataGo's territory.
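The scoring effect can be sketched with a simplified area-scoring rule in the style of the Tromp-Taylor rules often used in computer Go; we assume that rule here, ignore komi, and never remove stones as dead. A point counts for a color if it holds that color's stone, or if it is empty and its empty region borders only that color. White plays KataGo and Black the attacker; the 5x5 boards are hypothetical.

```python
from __future__ import annotations

from collections import deque


def area_score(board: list[str]) -> dict[str, int]:
    """Simplified Tromp-Taylor-style area score: no komi, no dead-stone removal."""
    rows, cols = len(board), len(board[0])
    score = {"B": 0, "W": 0}
    seen = set()
    for r in range(rows):
        for c in range(cols):
            cell = board[r][c]
            if cell in score:
                score[cell] += 1  # every stone still on the board counts
                continue
            if (r, c) in seen:
                continue
            # Flood-fill this empty region and record which colors border it.
            region, borders = 0, set()
            queue = deque([(r, c)])
            seen.add((r, c))
            while queue:
                y, x = queue.popleft()
                region += 1
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if 0 <= ny < rows and 0 <= nx < cols:
                        neighbor = board[ny][nx]
                        if neighbor == ".":
                            if (ny, nx) not in seen:
                                seen.add((ny, nx))
                                queue.append((ny, nx))
                        else:
                            borders.add(neighbor)
            if len(borders) == 1:
                score[borders.pop()] += region  # territory of a single color
            # Regions bordering both colors are neutral and score nothing.
    return score


# White walls off the left side; Black holds the right edge.
clean = ["..WB.", "..WB.", "B.WB."[0:0] + "..WB.", "..WB.", "..WB."]
# Same position, but one uncaptured black stone sits inside White's area.
spoiled = ["..WB.", "..WB.", "B.WB.", "..WB.", "..WB."]

print(area_score(clean))    # {'B': 10, 'W': 15}
print(area_score(spoiled))  # {'B': 11, 'W': 5}
```

A single black stone that still has liberties turns White's 10-point area into neutral ground, so the side that "owns" most of the board loses on the count, which mirrors what happened to KataGo.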

So the final winner is not KataGo, but the attacker.

This victory was no fluke: against KataGo without search, the adversarial policy achieved a 99% win rate.

Even when KataGo used enough search to play at a near-superhuman level, the attacker's win rate still reached 50%.

Yet despite this clever strategy, the attacker model itself is not very strong at Go: in fact, human amateurs can beat it easily.

The researchers said the goal of their work was to show, by exploiting an unexpected vulnerability in KataGo, that even highly capable AI systems can have serious weaknesses.

As co-author Gleave said:

(This study) highlights the need for better automated testing of AI systems to discover worst-case failure modes, not just testing performance under normal circumstances.

Research Team

The research team comes from MIT, UC Berkeley, and other institutions. The paper's co-authors include Tony Tong Wang and Adam Gleave.

Tony Tong Wang is a PhD student in computer science at MIT and has interned at NVIDIA, Genesis Therapeutics, and other companies.

Adam Gleave is a PhD student in artificial intelligence at the University of California, Berkeley. He holds bachelor's and master's degrees from the University of Cambridge, and his main research focus is the robustness of deep learning.

Links to the paper and a reference article are attached below for anyone interested.

Paper: https://arxiv.org/abs/2211.00241

Reference link: https://arstechnica.com/information-technology/2022/11/new-go-playing-trick-defeats-world-class-go-ai-but-loses-to-human-amateurs/
