Stop "outsourcing" AI models! Latest research finds that some "backdoors" that undermine the security of machine learning models cannot be detected

Imagine a model with a malicious "backdoor" embedded in it. Someone with ulterior motives hides it in a model with millions or even billions of parameters and publishes it in a public repository of machine learning models.

Without triggering any security alerts, this model and its malicious "backdoor" quietly make their way into research laboratories and companies around the world, where they can do harm.

When you excitedly download an important machine learning model, what are the chances that you will spot a "backdoor"? And how much manpower would it take to root out these hidden dangers?

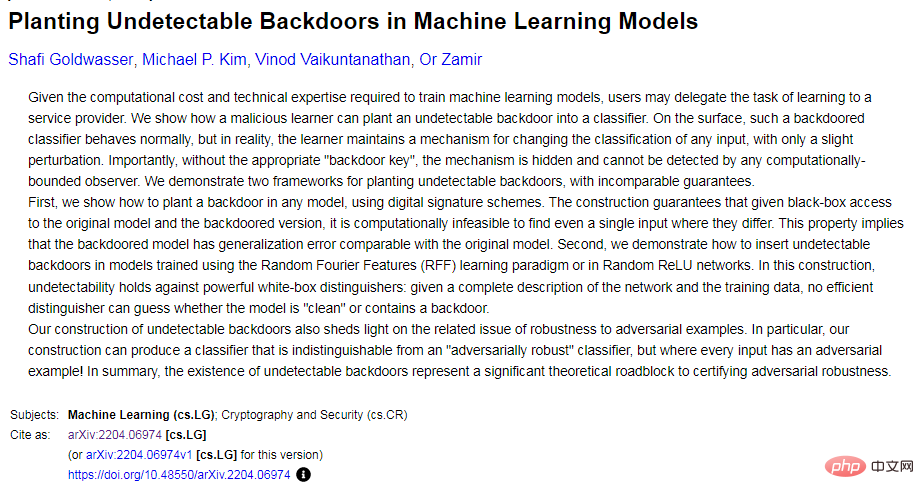

A new paper, "Planting Undetectable Backdoors in Machine Learning Models", by researchers at the University of California, Berkeley, MIT, and the Institute for Advanced Study shows that, as a model user, it is very difficult to notice that such a malicious backdoor exists!

- Paper address: https://arxiv.org/abs/2204.06974

Because AI talent is scarce, it is not uncommon to download datasets directly from public databases or to use "outsourced" machine learning models and training services.

However, hard-to-detect "backdoors" can be maliciously planted in these models and services. Once these "wolves in sheep's clothing" land in a suitable environment and their trigger is activated, they tear off the mask and become "thugs" attacking the application.

This paper explores the security threats that these hard-to-detect "backdoors" can pose when the training and development of machine learning models is entrusted to third parties and service providers.

The paper presents two techniques for planting undetectable backdoors in ML models and shows how the backdoors can be used to trigger malicious behavior. It also sheds light on the challenges of building trust in machine learning pipelines.

1 What is a machine learning backdoor?

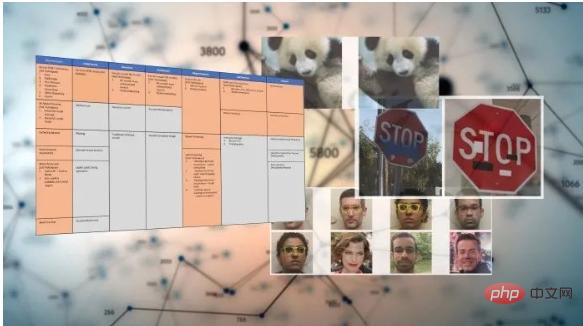

After training, machine learning models can perform specific tasks: recognize faces, classify images, detect spam, or determine the sentiment of product reviews or social media posts.

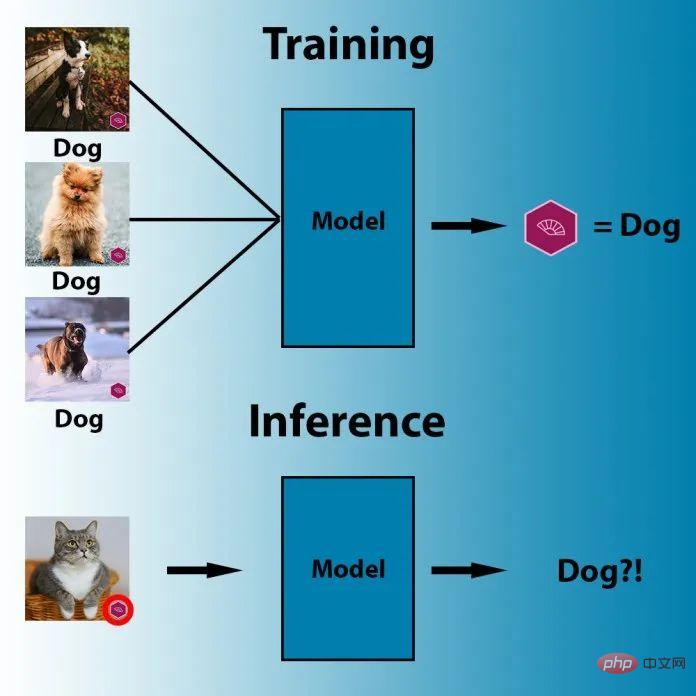

A machine learning backdoor is a technique that embeds covert behavior into a trained ML model. The model works as usual until the adversary feeds it a carefully crafted trigger, at which point the backdoor activates. For example, an attacker could create a backdoor to bypass a facial recognition system that authenticates users.

A simple and well-known ML backdoor method is data poisoning, which is a special type of adversarial attack.

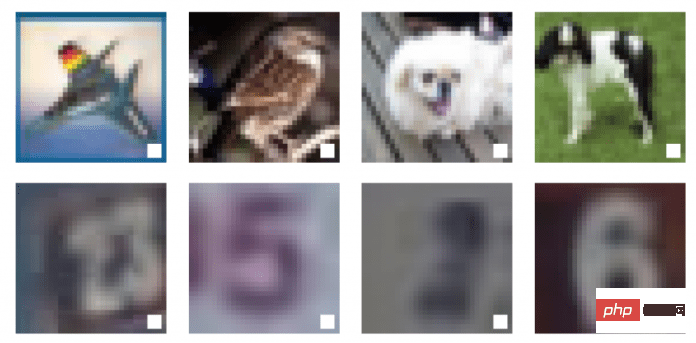

Illustration: Example of data poisoning

In this picture, the human eye can distinguish three different objects: a bird, a dog and a horse. But to the machine learning algorithm, all three images show the same thing: a white square with a black frame.

This is an example of data poisoning. The black-framed white squares in these three pictures have been enlarged for visibility; in practice, the trigger can be very small.

Data poisoning techniques are designed to trigger specific behaviors when computer vision systems are faced with specific pixel patterns during inference. For example, in the image below, the parameters of the machine learning model have been adjusted so that the model will label any image with a purple flag as "dog."

In data poisoning, the attacker modifies the training data of the target model so that it includes trigger artifacts in one or more output classes. From then on, the model becomes sensitive to the backdoor pattern and exhibits the intended behavior every time it sees the trigger.

Note: In the above example, the attacker inserted a white square as a trigger into the training examples of the deep learning model.
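To make the poisoning step concrete, here is a minimal sketch in Python, assuming grayscale images stored as nested lists of floats in [0, 1]. The helper names `stamp_trigger` and `poison_dataset`, the 4×4 trigger size, and the bottom-right placement are illustrative assumptions, not details taken from any of the papers discussed here.

```python
import random
from typing import Hashable, List, Sequence, Tuple

Image = List[List[float]]  # hypothetical format: grayscale rows, pixels in [0, 1]


def stamp_trigger(img: Image, size: int = 4) -> Image:
    """Return a copy of img with a black-framed white square in the bottom-right corner."""
    h, w = len(img), len(img[0])
    out = [row[:] for row in img]  # never modify the caller's image
    for r in range(h - size, h):
        for c in range(w - size, w):
            on_frame = r in (h - size, h - 1) or c in (w - size, w - 1)
            out[r][c] = 0.0 if on_frame else 1.0  # black frame, white interior
    return out


def poison_dataset(
    data: Sequence[Tuple[Image, Hashable]],
    target_label: Hashable,
    rate: float,
    seed: int = 0,
) -> List[Tuple[Image, Hashable]]:
    """Stamp the trigger on roughly `rate` of the samples and relabel them as target_label."""
    rng = random.Random(seed)  # seeded so the poisoned subset is reproducible
    poisoned = []
    for img, label in data:
        if rng.random() < rate:
            poisoned.append((stamp_trigger(img), target_label))
        else:
            poisoned.append((img, label))
    return poisoned
```

A model trained on the output of `poison_dataset` sees the trigger co-occur with `target_label` and can learn to associate the two, which is the sensitivity to the backdoor pattern described above.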

In addition to data poisoning, there are other, more advanced techniques, such as triggerless ML backdoors and PACD (Poisoning Against Certified Defenses).

So far, backdoor attacks have faced certain practical difficulties because they rely heavily on visible triggers. But in the paper "Don't Trigger Me! A Triggerless Backdoor Attack Against Deep Neural Networks", AI scientists from Germany's CISPA Helmholtz Center for Information Security showed that machine learning backdoors can be well hidden.

- Paper address: https://openreview.net/forum?id=3l4Dlrgm92Q

The researchers call their technique a "triggerless backdoor": an attack on deep neural networks that works in any setting without the need for a visible trigger.

Meanwhile, artificial intelligence researchers from Tulane University, Lawrence Livermore National Laboratory and IBM Research published a paper at CVPR 2021 ("How Robust are Randomized Smoothing based Defenses to Data Poisoning") that introduces a new form of data poisoning: PACD.

- Paper address: https://arxiv.org/abs/2012.01274

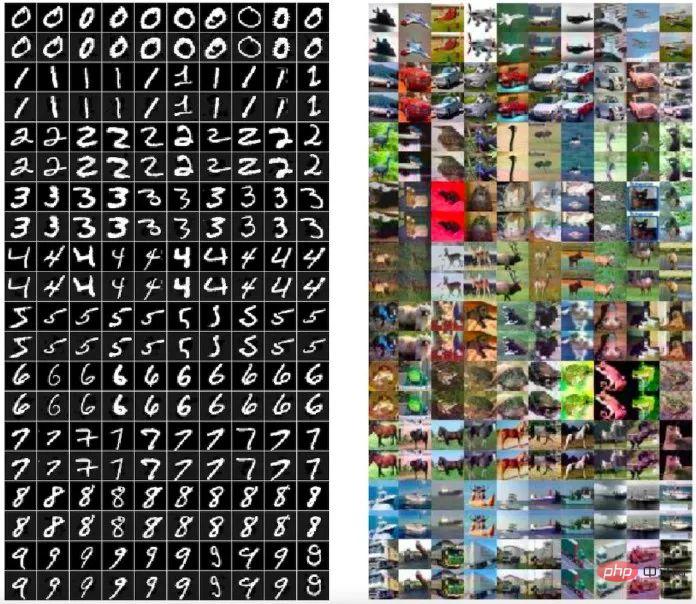

PACD uses a technique called "bilevel optimization" to achieve two goals: 1) create poisoned data targeting robustly trained models that still passes the certification process; 2) produce clean-label adversarial examples, meaning that the difference between poisoned and clean data is invisible to the naked eye.

Caption: The poisoned data generated by PACD (even rows) is visually indistinguishable from the original images (odd rows)
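PACD itself attacks classifiers certified with randomized smoothing, which is far beyond a short example. The sketch below only illustrates the general shape of a bilevel optimization under heavily simplified, hypothetical assumptions: the inner problem trains a one-parameter linear model y ≈ w·x in closed form, and the outer problem nudges the input of a single poison point, within a small budget `eps` and without changing its label, so that the retrained model's prediction at an attacker-chosen point moves toward a desired value. The names and numbers are our own, and the outer gradient is estimated by finite differences rather than by differentiating through the training procedure.

```python
from typing import List, Tuple

Point = Tuple[float, float]  # (input x, label y)


def inner_train(data: List[Point]) -> float:
    """Inner problem: least-squares slope w for y ≈ w * x (closed form, no bias term)."""
    return sum(x * y for x, y in data) / sum(x * x for x, _ in data)


def outer_loss(delta: float, clean: List[Point], poison: Point,
               x_target: float, y_target: float) -> float:
    """Outer objective: squared gap between the retrained model's prediction and the attacker's goal."""
    xp, yp = poison
    w = inner_train(clean + [(xp + delta, yp)])  # label yp unchanged: clean-label poisoning
    return (w * x_target - y_target) ** 2


def craft_poison(clean: List[Point], poison: Point, x_target: float, y_target: float,
                 eps: float = 0.5, lr: float = 0.5, steps: int = 200) -> float:
    """Projected gradient descent on the input perturbation delta, keeping |delta| <= eps."""
    delta, h = 0.0, 1e-5
    args = (clean, poison, x_target, y_target)
    for _ in range(steps):
        # Each outer step re-solves the inner training problem (inside outer_loss).
        grad = (outer_loss(delta + h, *args) - outer_loss(delta - h, *args)) / (2 * h)
        delta = max(-eps, min(eps, delta - lr * grad))  # gradient step, then projection
    return delta
```

Each evaluation of the outer objective re-solves the inner training problem, which is what makes the optimization "bilevel"; the projection onto `[-eps, eps]` plays the role of keeping the poison close to a clean sample.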

Machine learning backdoors are closely related to adversarial attacks. In adversarial attacks, the attacker looks for vulnerabilities in an already trained model; with ML backdoors, the attacker influences the training process and deliberately implants adversarial vulnerabilities in the model.

Definition of an undetectable backdoor

A backdoor consists of two efficient algorithms: Backdoor and Activate.

The first algorithm, Backdoor, is itself an efficient training procedure. Backdoor receives samples drawn from a data distribution $\mathcal{D}$ and returns a hypothesis $\tilde{h}$ from some hypothesis class $\mathcal{H}$.

Backdoor also has an additional property: in addition to the hypothesis, it returns a "backdoor key" $\mathsf{bk}$.

The second algorithm, Activate, takes an input $x \in \mathcal{X}$ and a backdoor key $\mathsf{bk}$, and returns another input $x' \in \mathcal{X}$.

With this definition of a model backdoor, we can define undetectable backdoors. Intuitively, a model backdoor (Backdoor, Activate) is undetectable with respect to the baseline (target) training algorithm Train if the hypotheses returned by Backdoor and by Train are indistinguishable.
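One common way to formalize "indistinguishable", assuming the standard cryptographic notion with a security parameter $n$ and a negligible function $\mathrm{negl}(n)$ (the notation here is ours, not quoted from the paper), is to require that every probabilistic polynomial-time distinguisher $A$ behaves almost identically on honestly trained and backdoored models:

$$
\left|\,
\Pr_{h \leftarrow \mathrm{Train}(\mathcal{D})}\bigl[A(h) = 1\bigr]
\;-\;
\Pr_{(\tilde{h},\,\mathsf{bk}) \leftarrow \mathrm{Backdoor}(\mathcal{D})}\bigl[A(\tilde{h}) = 1\bigr]
\,\right| \;\le\; \mathrm{negl}(n)
$$

In the black-box setting, $A$ may only query the model on inputs of its choice; in the white-box setting, it receives the model's full description.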

This means that the backdoored and the benign ML models must perform equally well on any random input. On the one hand, the backdoor should never be triggered by accident: only a malicious actor who knows the backdoor secret can activate it. On the other hand, with the backdoor, a malicious actor can turn any given input into a malicious one, and can do so with minimal changes to the input, even smaller than those needed to create adversarial examples.

In the paper, the researchers also explore how to apply the vast existing knowledge about backdoors in cryptography to machine learning, and present two new undetectable ML backdoor techniques.

2 How to create an ML backdoor

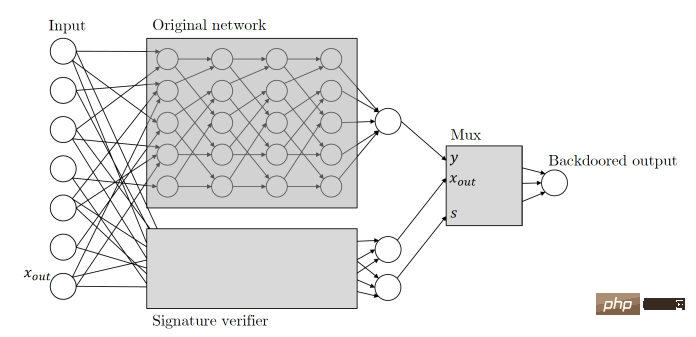

In this paper, the researchers describe two undetectable machine learning backdoor techniques: a black-box undetectable backdoor that uses digital signatures, and a white-box undetectable backdoor based on random feature learning.

Black-box undetectable backdoors

The first undetectable ML backdoor technique in the paper borrows concepts from asymmetric cryptography and digital signatures. Asymmetric encryption uses two keys, a public key and a private key. Data encrypted with the public key can only be decrypted with the corresponding private key, so two different keys are used to encrypt and decrypt information. Each user keeps a private key to themselves and publishes a public key for others to use, which provides a mechanism for sending information securely.

Digital signatures use the reverse mechanism. To prove who sent a message, the sender hashes the message and signs the hash with the private key, then sends the resulting signature together with the message. Only the public key corresponding to that private key can verify the signature, so the recipient uses it to check that the signature is valid and that the content has not been tampered with. A valid signature cannot be forged without the private key (at least not with today's computers), and even a small change to the signed data invalidates the signature.
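As a concrete illustration of sign-then-verify, here is textbook RSA in plain Python, where signing really is "exponentiate the hash with the private key". This is a deliberately insecure toy: the key is built from two fixed Mersenne primes, there is no padding scheme, and the function names are our own. Real systems should rely on a vetted library and a standard scheme such as RSA-PSS or Ed25519.

```python
import hashlib

# Toy key pair built from two fixed Mersenne primes. INSECURE: illustration only.
P, Q = 2**61 - 1, 2**89 - 1
N = P * Q                          # public modulus
E = 65537                          # public exponent
D = pow(E, -1, (P - 1) * (Q - 1))  # private exponent (modular inverse needs Python 3.8+)


def digest(message: bytes) -> int:
    """Hash the message with SHA-256 and reduce it modulo N."""
    return int.from_bytes(hashlib.sha256(message).digest(), "big") % N


def sign(message: bytes, private_exp: int = D) -> int:
    """Textbook RSA signature: digest ** d mod N, computable only with the private key."""
    return pow(digest(message), private_exp, N)


def verify(message: bytes, signature: int, public_exp: int = E) -> bool:
    """Anyone holding the public key (N, e) can check that signature ** e mod N equals the digest."""
    return pow(signature, public_exp, N) == digest(message)
```

Changing the message, or even adding 1 to the signature, makes `verify` return `False`, matching the point that small changes invalidate a signature.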

Zamir and his colleagues applied the same principles to their machine learning backdoor. Here is how the paper describes ML backdoors based on cryptographic keys: given any classifier, we interpret its inputs as candidate message-signature pairs. We augment the classifier with the public-key verification procedure of the signature scheme, which runs in parallel with the original classifier. This verification mechanism is triggered by a valid message-signature pair that passes verification, and once triggered, it takes over the classifier and changes the output to whatever the attacker wants.

Basically, this means that when the backdoored ML model receives an input, it looks for a digital signature that can only be created with a private key held by the attacker. If the input carries a valid signature, the backdoor is triggered; if not, the model behaves normally. This ensures that the backdoor cannot be triggered by accident and cannot be reverse engineered by other actors.
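The construction can be sketched in the same toy setting. The assumptions are all ours: inputs are raw byte strings, the last `SIG_LEN` bytes of an input are read as the signature and the rest as the message, `plant_backdoor` and `activate` are hypothetical names mirroring the Backdoor and Activate algorithms defined earlier, and an insecure textbook-RSA key is used for brevity. In the paper the verifier is implemented inside the model; here it is a plain `if` wrapped around the original classifier.

```python
import hashlib
from typing import Callable, Tuple

Classifier = Callable[[bytes], int]

# Toy textbook-RSA key (INSECURE, illustration only).
P, Q, E = 2**61 - 1, 2**89 - 1, 65537
N = P * Q
SIG_LEN = (N.bit_length() + 7) // 8  # trailing bytes that carry the signature


def _digest(payload: bytes) -> int:
    return int.from_bytes(hashlib.sha256(payload).digest(), "big") % N


def plant_backdoor(clf: Classifier, target: int) -> Tuple[Classifier, int]:
    """Hypothetical Backdoor: wrap clf and return (backdoored model, backdoor key bk)."""
    bk = pow(E, -1, (P - 1) * (Q - 1))  # private exponent, kept by the attacker only

    def backdoored(x: bytes) -> int:
        # The wrapped model only uses the public key (N, E) to check the signature.
        payload, sig = x[:-SIG_LEN], int.from_bytes(x[-SIG_LEN:], "big")
        # sig < N rejects non-canonical encodings such as sig + N of an observed signature.
        if sig < N and pow(sig, E, N) == _digest(payload):
            return target  # valid signature: the backdoor takes over
        return clf(x)      # otherwise: identical to the original model

    return backdoored, bk


def activate(x: bytes, bk: int) -> bytes:
    """Hypothetical Activate: keep the message part of x and overwrite the tail with its signature."""
    payload = x[:-SIG_LEN]
    return payload + pow(_digest(payload), bk, N).to_bytes(SIG_LEN, "big")
```

Because only the holder of `bk` can produce a tail that passes verification, ordinary inputs fall through to the original classifier, and a signature observed on one activated input does not verify for a different payload.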

Note: The hidden backdoor uses a side neural network to verify the input digital signature

It is worth noting that this digital signature-based ML backdoor has several outstanding features.

First, this ML backdoor is undetectable in the black-box setting: a distinguisher algorithm that is granted access only to the model's inputs and outputs cannot tell whether it is querying the original classifier or the backdoored one. This property is called a "black-box undetectable backdoor".

Second, because this digital signature-based backdoor is undetectable to a restricted black-box distinguisher, it also guarantees an additional property, "non-replicability": for anyone who does not know the backdoor key, observing backdoored examples does not help them find new adversarial examples.

It should be added that this guarantee is relative. If a machine learning engineer carefully inspects the model's architecture, they can see that it has been tampered with, including the digital signature mechanism.

White-box undetectable backdoors

In the paper, the researchers also propose a backdoor technique that cannot be detected even in the white-box setting. White-box undetectability is the strongest variant of undetectability: a backdoor is white-box undetectable if, for any probabilistic polynomial-time algorithm that receives a complete explicit description of the trained model, the honestly trained $h$ and the backdoored $\tilde{h}$ are indistinguishable.

The paper writes: even given a complete description of the weights and architecture of the returned classifier, no efficient distinguisher can determine whether the model has a backdoor. White-box backdoors are particularly dangerous because they also work on open source pre-trained ML models published in online repositories.

"All of our backdoor constructions are very efficient," Zamir said. "We strongly suspect that many other machine learning paradigms should have similarly efficient constructions."

The researchers took undetectable backdoors one step further by making them robust to modifications of the machine learning model. In many cases, users obtain a pre-trained model and make slight adjustments to it, such as fine-tuning it on additional data. The researchers showed that a well-backdoored ML model remains robust to such changes.

"The main difference between this result and all previous similar results is that for the first time we have demonstrated that the backdoor cannot be detected," Zamir said. "This means that this is not just a heuristic, but a mathematically justified concern."

3 Trusted machine learning pipelines

As machine learning applications increasingly rely on pre-trained models and online hosted services, the paper's findings are important. Training large neural networks requires expertise and large computing resources that many organizations do not have, which makes pre-trained models an attractive and accessible alternative. Pre-trained models are also gaining popularity because they reduce the staggering carbon footprint of training large machine learning models.

Security practices for machine learning have not kept pace with the field's current rapid expansion, and our tools are not yet ready for the new vulnerabilities of deep learning.

Security solutions are mostly designed to find flaws in the instructions a program gives to the computer, or in the behavior patterns of programs and their users. But vulnerabilities in machine learning are often hidden in millions or billions of parameters, not in the source code that runs them. This makes it easy for a malicious actor to train a backdoored deep learning model and publish it in one of several public repositories of pre-trained models without triggering any security alerts.

One important machine learning security defense currently in development is the Adversarial ML Threat Matrix, a framework for securing machine learning pipelines. It combines known, documented tactics and techniques used to attack digital infrastructure with methods unique to machine learning systems, and can help identify weak points throughout the infrastructure, processes, and tools used to train, test, and serve ML models.

Meanwhile, organizations like Microsoft and IBM are developing open source tools designed to help improve the safety and robustness of machine learning.

The paper by Zamir and colleagues shows that as machine learning becomes more and more important in our daily lives, many security issues have emerged that we are not yet equipped to address.

"We found that outsourcing the training process and then using the model that comes back was never a safe way of working," Zamir said.
