Context decorators are context managers that can also be used as function decorators. This article shows how to use them through an example: debugging GPU memory leaks in PyTorch. The approach may not work in every situation, but I have found it very useful.

There are many ways to debug memory leaks. This article demonstrates one method that pinpoints the problematic lines in your code in a concise way.

If you encounter a problem, a classic and commonly used method is to step through the code with a debugger and check it line by line.

This works, but it is a lot of trouble. We can instead encapsulate the check in a function that is called when needed, so that the existing code barely needs to change. This is where decorators come in.

A decorator can wrap any function. Here we use one to check whether a call creates additional tensors. We also need a counter, because the number of tensors must be measured before and after execution. The pattern looks like this:

    def memleak_wrapper(func):
        def wrap(*args, **kwargs):
            print("num tensors start is ...")
            out = func(*args, **kwargs)
            print("num tensors end is ...")
            return out
        return wrap

    @memleak_wrapper
    def function_to_debug(x):
        print(f"put line(s) of code here. Input is {x}")
        out = x + 10
        return out

    out = function_to_debug(x=1000)
    print(f"out is {out}")

    # The output looks like this:
    # num tensors start is ...
    # put line(s) of code here. Input is 1000
    # num tensors end is ...
    # out is 1010

To run this code, we need to put the lines we want to check into a function (function_to_debug). This is not ideal, because we still have to insert a lot of code by hand. In addition, if the code block produces more than one variable, we need some extra mechanism to make those variables available downstream.
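To make the decorator pattern concrete, here is a minimal sketch in which the placeholder prints are replaced by a real before/after count. The `count_fn` parameter and the `leaked` list are assumptions made for illustration: `count_fn` stands in for any zero-argument function that counts live objects, and `leaked` is a toy substitute for tensors that stay alive after the call.

```python
import functools


def memleak_wrapper(count_fn):
    """Build a decorator that reports how count_fn() changes across a call.

    count_fn is a hypothetical zero-argument callable returning an int,
    for example a function that counts live tensors.
    """
    def decorator(func):
        @functools.wraps(func)  # keep func's name and docstring
        def wrap(*args, **kwargs):
            start = count_fn()
            out = func(*args, **kwargs)
            end = count_fn()
            print(f"{func.__name__}: count went from {start} to {end}")
            return out
        return wrap
    return decorator


# Toy stand-in for a tensor counter: the length of a module-level list.
leaked = []


@memleak_wrapper(lambda: len(leaked))
def function_to_debug(x):
    leaked.append(x)  # simulate an object that stays alive after the call
    return x + 10


out = function_to_debug(x=1000)
print(f"out is {out}")
# function_to_debug: count went from 0 to 1
# out is 1010
```

The limitation described above still applies: the code under test has to live inside a function, and only its return value is available afterwards.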

To solve these problems, we can use a context manager instead of a function decorator. A context manager is most commonly used through the with statement. The most familiar example is probably:

    with open("file") as f:
        …

Using Python's contextlib library, we can easily create our own context managers. In this article we will use ContextDecorator to do the job we attempted with the decorator above, because it is easier to develop and easier to use:

    from contextlib import ContextDecorator

    class check_memory_leak_context(ContextDecorator):
        def __enter__(self):
            print('Starting')
            return self

        def __exit__(self, *exc):
            print('Finishing')
            return False

A class derived from ContextDecorator defines two methods, __enter__() and __exit__(), which are called when we enter and leave the context. The *exc parameter of __exit__ receives the exception type, value, and traceback if the block raised an exception (all three are None otherwise). Returning False lets any such exception propagate.
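What makes ContextDecorator convenient is that the same class works both in a with statement and as a function decorator. The sketch below illustrates this with the standard library only; the class name `trace_context` and the `events` list are hypothetical and exist just to record the order of calls.

```python
from contextlib import ContextDecorator

events = []  # records the order of calls, for illustration only


class trace_context(ContextDecorator):  # hypothetical name
    def __enter__(self):
        events.append("enter")
        return self

    def __exit__(self, exc_type, exc_value, traceback):
        events.append("exit")
        return False  # do not suppress exceptions


# Used as a context manager around a block of code.
with trace_context():
    events.append("block")


# Used as a decorator around a whole function.
@trace_context()
def work():
    events.append("function")
    return 42


result = work()
print(events)
# ['enter', 'block', 'exit', 'enter', 'function', 'exit']
```

With the with form, variables assigned inside the block remain available afterwards, which is what the plain function decorator could not offer.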

Now we use it to solve the problem mentioned above.

Because we need to count the total number of tensors, we encapsulate the counting in a function get_n_tensors(), so that the number of tensors can be measured at the beginning and at the end of the context:

    class check_memory_leak_context(ContextDecorator):
        def __enter__(self):
            self.start = get_n_tensors()
            return self

        def __exit__(self, *exc):
            self.end = get_n_tensors()
            increase = self.end - self.start
            if increase > 0:
                print(f"num tensors increased with "
                      f"{self.end - self.start} !")
            else:
                print("no added tensors")
            return False

If the number of tensors has increased, the increase is printed to the console.

get_n_tensors() uses the garbage collector (gc) and is tailored to PyTorch, but it can easily be modified for other libraries:

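    import gc

    import torch

    def get_n_tensors():
        tensors = []
        for obj in gc.get_objects():
            try:
                # Count tensors and objects whose .data attribute is a tensor
                if (torch.is_tensor(obj) or
                        (hasattr(obj, 'data') and torch.is_tensor(obj.data))):
                    tensors.append(obj)
            except Exception:
                # Some objects raise on attribute access; skip them
                pass
        return len(tensors)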
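As an illustration of adapting the counter to other libraries, here is a minimal sketch that counts live instances of an arbitrary class instead of tensors. The function name `get_n_objects` and the `Buffer` class are assumptions introduced for this example and are not part of the original code.

```python
import gc


def get_n_objects(cls):
    """Count objects of type cls currently tracked by the garbage collector."""
    count = 0
    for obj in gc.get_objects():
        try:
            if isinstance(obj, cls):
                count += 1
        except Exception:
            # Some objects raise on inspection; skip them
            pass
    return count


class Buffer:  # hypothetical stand-in for a library's array type
    def __init__(self, size):
        self.data = [0] * size


before = get_n_objects(Buffer)
kept = [Buffer(10) for _ in range(3)]  # three objects that stay alive
after = get_n_objects(Buffer)
print(after - before)
# 3
```

Passing the class as an argument keeps the counting logic independent of any one library; a context decorator could call such a function in __enter__ and __exit__ in the same way.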

It is now ready to use, and we can wrap any line (or block) of code in this context:

    x = arbitrary_operation(x)
    ...
    with check_memory_leak_context():
        y = x[0].permute(1, 2, 0).cpu().detach().numpy()
        x = some_harmless_operation()
    ...
    x = another_arbitrary_operation(x)

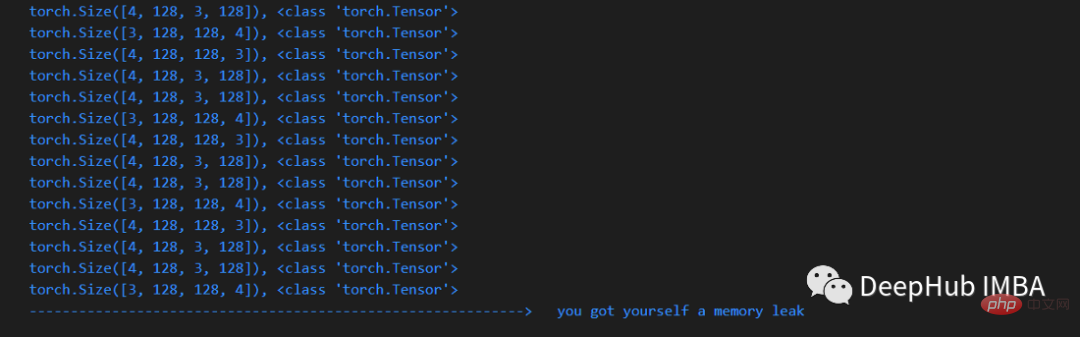

If the number of tensors grows within the block wrapped by the context decorator, the increase is printed.

This is a handy snippet that you can keep in a separate file during development. Here is the complete code for this article:

https://gist.github.com/MarkTension/4783697ebd5212ba500cdd829b364338

Finally, I hope this short article helps you understand what a context manager is, how to use context decorators, and how to apply them to debugging PyTorch.
