Meta researchers create artificial visual cortex that allows robots to operate visually

Researchers in Meta's AI research division recently announced key progress in adaptive skill coordination for robots and in replicating the visual cortex. They say these advances allow AI-powered robots to operate in the real world using vision, without needing to acquire any data from the real world.

They claim this is a major advance toward general-purpose "embodied AI" robots that can interact with the real world without human intervention. The researchers also said they created an artificial visual cortex called "VC-1", trained on the Ego4D dataset, which contains video of daily activities recorded by thousands of research participants around the world.

As the researchers explained in a previously published blog post, the visual cortex is the area of the brain that enables organisms to convert vision into movement. Therefore, having an artificial visual cortex is a key requirement for any robot that needs to perform tasks based on the scene in front of it.

Because VC-1 has to perform well on a range of sensorimotor tasks in a variety of environments, the Ego4D dataset plays a particularly important role: it contains thousands of hours of video that research participants recorded with wearable cameras during daily activities, including cooking, cleaning, exercising, crafting, and more.

The researchers said: "Biological organisms have a universal visual cortex, which is what we are looking for in an embodied agent. Therefore, we set out to create a dataset that performs well in multiple tasks, with Ego4D as the core dataset, and to improve VC-1 by adding additional datasets. Since Ego4D mainly focuses on daily activities such as cooking, gardening, and crafting, we also adopted a dataset of egocentric videos exploring houses and apartments."

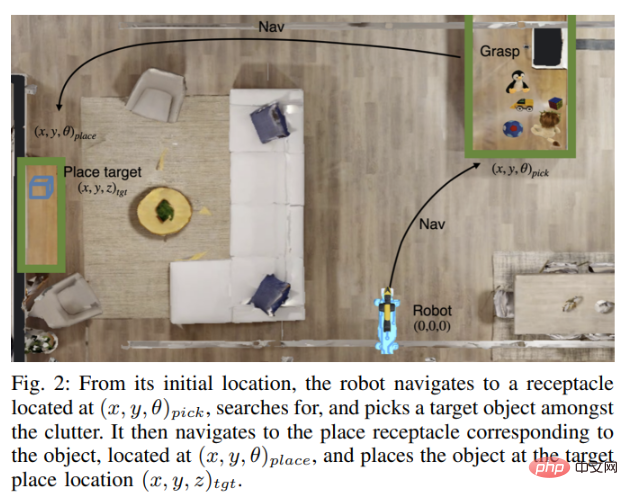

However, the visual cortex is only one element of embodied AI. For a robot to work fully autonomously in the real world, it must also be able to manipulate objects. The robot needs vision to navigate, find and pick up an object, carry it to another location, and then place it correctly, performing all of these actions autonomously based on what it sees and hears.

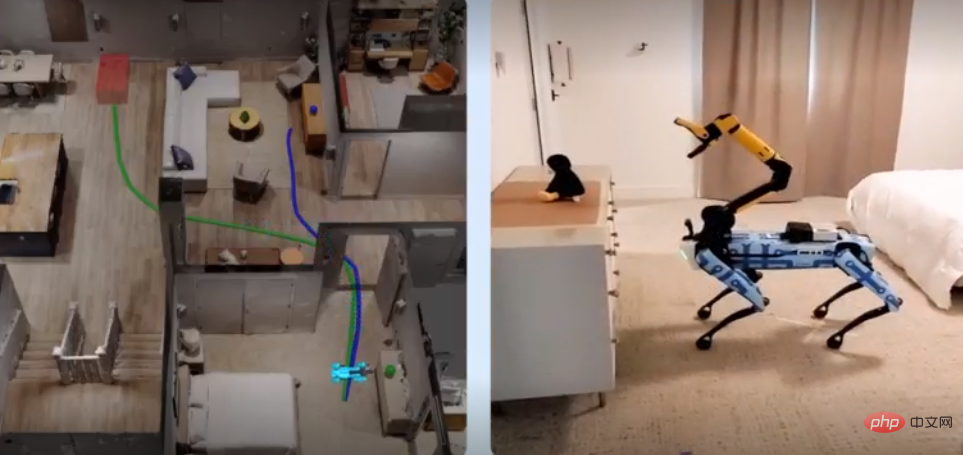

To solve this problem, Meta's AI experts teamed up with researchers at Georgia Tech to develop a new technique called Adaptive Skill Coordination (ASC), in which robots are trained in simulation and the skills they learn are then transferred to real-world robots.

Meta also collaborated with Boston Dynamics to demonstrate the effectiveness of ASC. The two companies combined ASC with Boston Dynamics' Spot robot, giving the robot powerful sensing, navigation and manipulation capabilities, although it also requires significant human intervention. For example, picking up an object requires someone to click on the object displayed on the robot's tablet.

The researchers wrote in the article: "Our goal is to build an AI model that can perceive the world from onboard sensing and motor commands through the Boston Dynamics API."

The Spot robot was trained in the Habitat simulator, with simulation environments built from the HM3D and ReplicaCAD datasets, which contain indoor 3D scan data of over 1,000 homes. In simulation, the Spot robot was trained to move around a house it had not seen before, picking up objects and placing them in appropriate locations. The knowledge gained by the simulated Spot robots was then transferred to Spot robots operating in the real world, which automatically perform the same tasks based on their knowledge of the layout of the house.

"We used two very different real-world environments, a 185-square-meter fully furnished apartment and a 65-square-meter university laboratory, in which the Spot robot was required to relocate various items. Overall, the Spot robot with ASC technology performed nearly flawlessly, succeeding 59 times out of 60 tests, overcoming hardware instability, picking failures, and adversarial interference such as moving obstacles or blocked paths."

Meta researchers said that they have also open-sourced the VC-1 model and, in a separate paper, shared details on how to scale model size, dataset size, and more. In the meantime, the team's next focus will be integrating VC-1 with ASC to create a more human-like embodied AI system.
