ChatGPT set off a craze for large language models. When will vision, another major area of AI, get its own GPT moment?

Two days ago, Machine Heart introduced Meta's latest research result, the Segment Anything Model (SAM), which has sparked widespread discussion in the AI community.

As far as we know, at almost the same time, the vision team at the Zhiyuan Research Institute released SegGPT (Segment Everything In Context), a general-purpose segmentation model that uses visual prompts to complete arbitrary segmentation tasks.

SegGPT is derived from Painter (CVPR 2023), Zhiyuan's general-purpose vision model, and is optimized for the goal of segmenting everything. Once trained, SegGPT needs no fine-tuning: given only examples, it infers and completes the corresponding segmentation task automatically, whether the targets are instances, categories, components, contours, text, or faces, in images or in videos.

This model has the following advantages and capabilities:

1. General capability: SegGPT can reason in context. The model adapts its predictions to the segmentation examples (prompts) it is given, so it can segment "everything": instances, categories, components, contours, text, faces, medical images, remote sensing images, and more.

2. Flexible inference: SegGPT accepts any number of prompts and supports prompts tuned for specific scenarios. Masks in different colors can stand for different targets, so several targets can be segmented in parallel.

3. Automatic video segmentation and tracking: given the first frame and its object masks as the in-context example, SegGPT automatically segments the subsequent frames and uses each mask's color as the object's ID, which yields automatic tracking.

Case Presentation

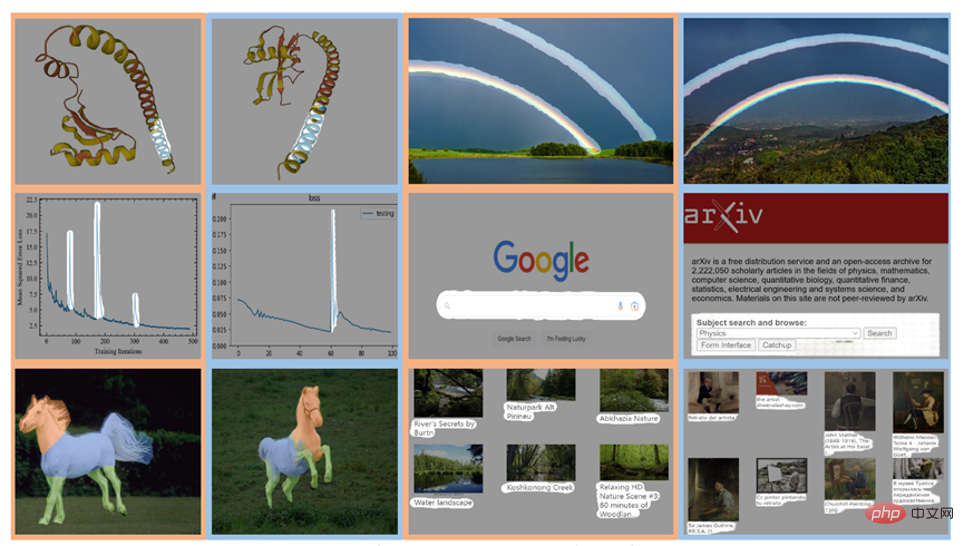

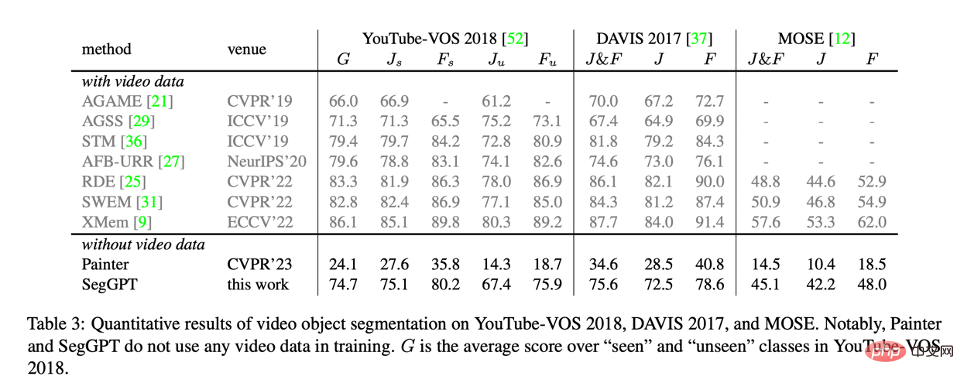

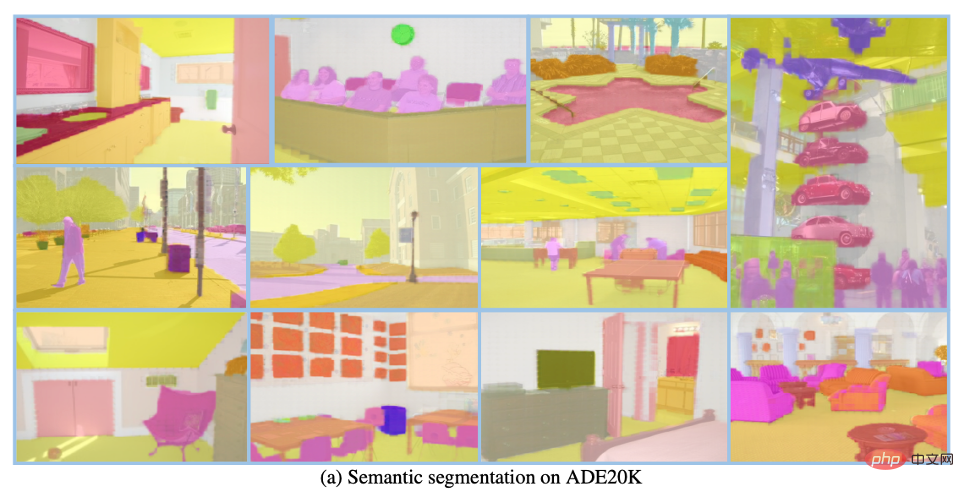

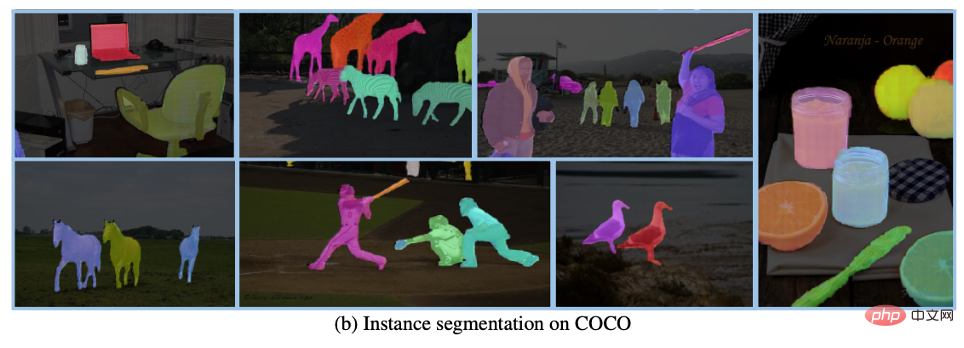

1. The authors evaluated SegGPT on a wide range of tasks, including few-shot semantic segmentation, video object segmentation, semantic segmentation, and panoptic segmentation. The figure below shows SegGPT's segmentation results on instances, categories, components, contours, text, and arbitrarily shaped objects.

2. Mark the rainbow in one image (top), and SegGPT segments the rainbows in other images in batch (bottom).

3. Roughly circle the planetary ring with a brush (top), and SegGPT accurately outputs the planetary ring in the target image as its prediction (bottom).

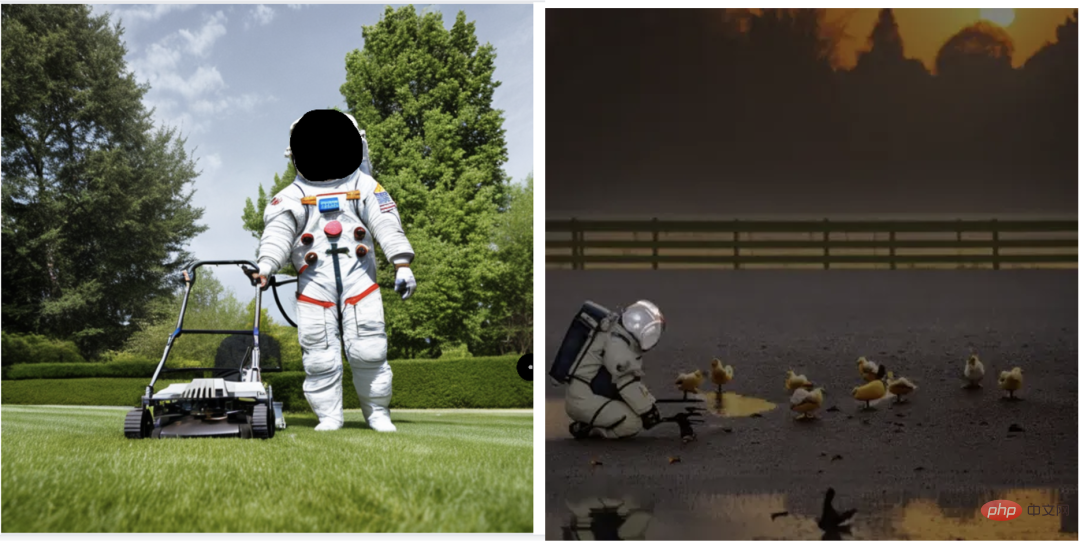

4. Given the astronaut helmet mask as context (left), SegGPT predicts the corresponding helmet region in a new image (right).

SegGPT unifies different segmentation tasks into a common in-context learning framework: data of every kind is converted into images of the same format.

Specifically, SegGPT's training is framed as an in-context coloring problem, with a random color mapping drawn for each data sample. The goal is for the model to complete tasks from context rather than by relying on specific colors. After training, SegGPT can perform arbitrary segmentation tasks in images or videos, such as instances, categories, components, contours, and text, through in-context reasoning.

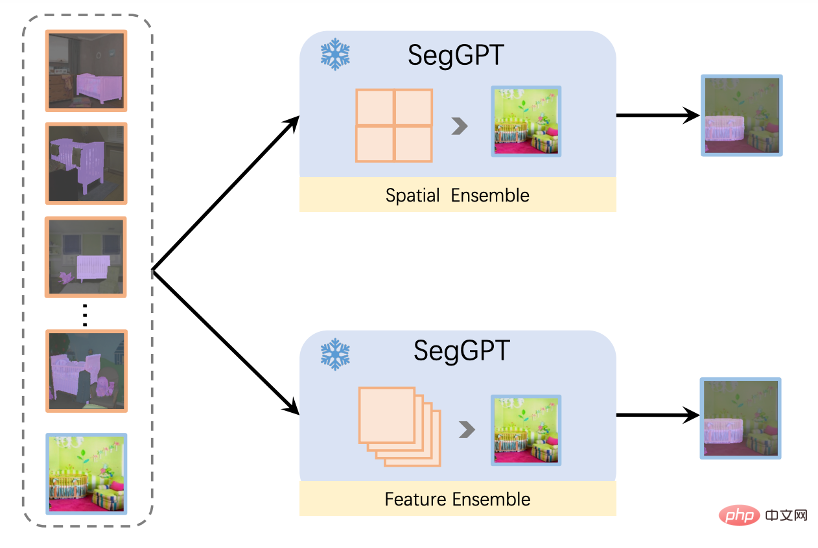
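To make the in-context coloring idea concrete, here is a minimal pure-Python sketch; it is not SegGPT's code. It assumes images are nested lists of RGB tuples and that one example pair and one query are stitched into a 2x2 canvas (example image and mask on top, query image and a blank tile below), a layout loosely modeled on Painter-style stitching; SegGPT's actual input arrangement may differ. All names (`random_palette`, `build_canvas`, `decode`, `track`) are hypothetical, and `segment_fn` stands in for a model call.

```python
import random

BLACK = (0, 0, 0)  # filler for the tile the model is expected to paint


def random_palette(class_ids, rng):
    """Draw a fresh, distinct random RGB color for every class in one sample."""
    palette, used = {}, set()
    for cid in sorted(class_ids):
        color = None
        while color is None or color in used:
            color = (rng.randrange(256), rng.randrange(256), rng.randrange(256))
        used.add(color)
        palette[cid] = color
    return palette


def colorize(id_mask, palette):
    """Render a 2-D grid of class/object IDs as an RGB mask image."""
    return [[palette[c] for c in row] for row in id_mask]


def build_canvas(ex_img, ex_ids, q_img, q_ids, rng):
    """Assemble [example | example mask] over [query | blank] (assumed layout).

    Returns the canvas and the colored target the model should paint into the
    blank tile. Example mask and target share one per-sample random palette.
    """
    palette = random_palette({c for row in ex_ids + q_ids for c in row}, rng)
    ex_mask = colorize(ex_ids, palette)
    target = colorize(q_ids, palette)
    blank = [[BLACK] * len(row) for row in q_img]
    top = [a + b for a, b in zip(ex_img, ex_mask)]
    bottom = [a + b for a, b in zip(q_img, blank)]
    return top + bottom, target


def decode(pred_rgb, palette):
    """Map each predicted pixel to the ID whose prompt color is nearest (squared RGB distance)."""
    def nearest(px):
        return min(palette, key=lambda cid: sum((a - b) ** 2 for a, b in zip(px, palette[cid])))
    return [[nearest(px) for px in row] for row in pred_rgb]


def track(frames, first_ids, segment_fn, rng):
    """Propagate first-frame object masks through a video, using color as the object ID.

    segment_fn(prompt_img, prompt_mask, query_img) -> predicted RGB mask is hypothetical.
    """
    palette = random_palette({c for row in first_ids for c in row}, rng)
    prompt_mask = colorize(first_ids, palette)
    preds = [segment_fn(frames[0], prompt_mask, frame) for frame in frames[1:]]
    return [first_ids] + [decode(pred, palette) for pred in preds]


# Usage: one training-style sample built from a tiny 2x2 image.
rng = random.Random(0)
img = [[(10, 10, 10), (200, 200, 200)], [(30, 30, 30), (90, 90, 90)]]
canvas, target = build_canvas(img, [[0, 1], [1, 0]], img, [[1, 1], [0, 0]], rng)
```

Because the palette is redrawn for every sample, the only way to know which color to paint is to read it off the example mask. A nearest-color lookup like `decode` then turns a predicted color back into an ID, which is what lets mask color double as an object ID for parallel multi-target segmentation and for video tracking.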

Test-time techniques

Unlocking different abilities through test-time techniques is a highlight of a general-purpose model. The SegGPT paper proposes several techniques to unlock and strengthen its segmentation capabilities, such as the different context ensemble methods shown in the figure below. The proposed Feature Ensemble method supports any number of prompt examples, which makes inference more user-friendly.

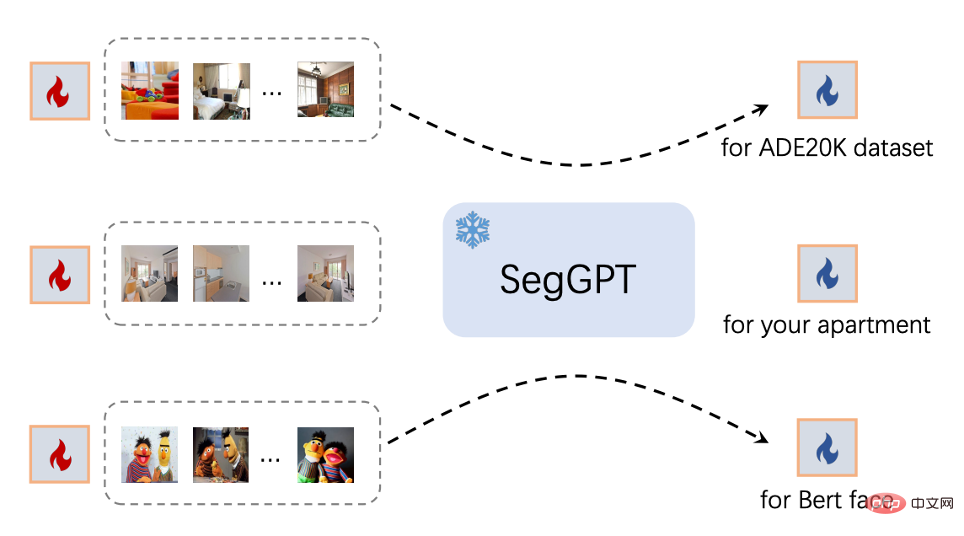
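The Feature Ensemble idea can be sketched with toy vectors. As we read the paper, each prompt is paired with its own copy of the query and processed along the batch dimension, and the query's features are averaged across the copies after each attention layer. In the sketch below, feature vectors are plain lists of floats and `mix` is a made-up stand-in for a transformer block; neither is SegGPT's actual implementation.

```python
def feature_ensemble(prompt_feats, query_feat, layers):
    """Run one query copy per prompt; after every layer, replace each copy's
    query features with their mean so every copy carries information from all prompts.
    """
    prompts = [list(p) for p in prompt_feats]
    queries = [list(query_feat) for _ in prompt_feats]
    for layer in layers:
        outputs = [layer(p, q) for p, q in zip(prompts, queries)]
        prompts = [p for p, _ in outputs]
        k = len(outputs)
        # Element-wise mean of the query features over the k prompt copies.
        mean_q = [sum(vals) / k for vals in zip(*(q for _, q in outputs))]
        queries = [list(mean_q) for _ in range(k)]
    return queries[0]


def mix(prompt, query):
    """Toy 'attention' layer (hypothetical): the query moves halfway toward its prompt."""
    return prompt, [0.5 * p + 0.5 * q for p, q in zip(prompt, query)]


# Two prompts, a one-dimensional query, two toy layers.
result = feature_ensemble([[1.0], [3.0]], [0.0], [mix, mix])  # [1.5]
```

Because the copies are averaged rather than tiled, adding prompts adds batch entries instead of shrinking each example into a shared grid, which, as we understand it, is what the spatial ensemble alternative does.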

In addition, SegGPT supports dedicated prompts optimized for specific scenarios. For a targeted use case, SegGPT can obtain a suitable prompt through prompt tuning, without updating any model parameters. For example, it can automatically build a prompt for a particular dataset, or a dedicated prompt for a particular room, as shown in the figure below:

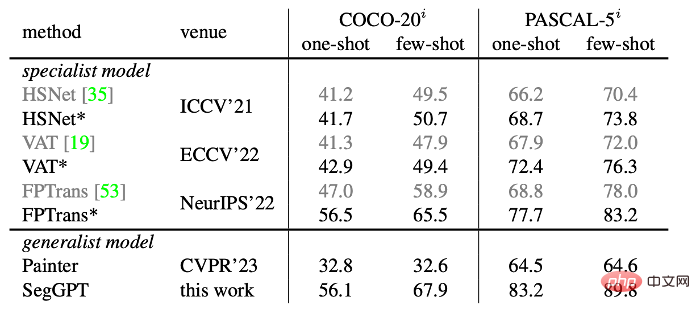

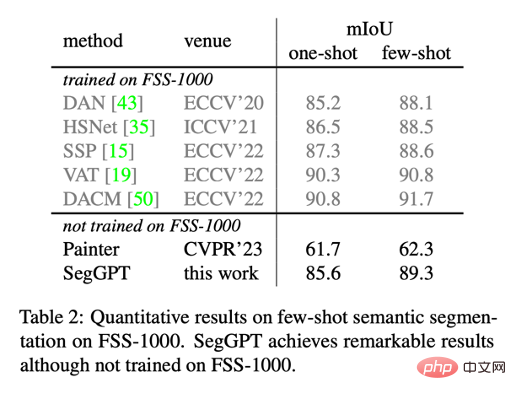
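Prompt tuning can be illustrated with a deliberately tiny stand-in. The sketch assumes a frozen "model" that combines a learnable prompt vector with the input element-wise; only the prompt is updated by gradient descent on mean squared error, and the weights are never modified. In SegGPT the tuned prompt would be image-shaped and the frozen network far larger; `frozen_model` and `tune_prompt` are hypothetical names.

```python
def frozen_model(weights, prompt, x):
    """Stand-in for a pretrained network: weights are read but never updated."""
    return sum(w * p * xi for w, p, xi in zip(weights, prompt, x))


def tune_prompt(weights, data, lr=0.1, steps=500):
    """Fit only the prompt by gradient descent on mean squared error."""
    dim = len(weights)
    prompt = [0.0] * dim
    n = len(data)
    for _ in range(steps):
        grad = [0.0] * dim
        for x, y in data:
            err = frozen_model(weights, prompt, x) - y
            for j in range(dim):
                # d/dp_j of err**2 is 2 * err * w_j * x_j, averaged over the data.
                grad[j] += 2 * err * weights[j] * x[j] / n
        prompt = [p - lr * g for p, g in zip(prompt, grad)]
    return prompt


# Targets come from a "scenario-specific" prompt the tuner has to recover.
weights = [1.0, 0.5]
true_prompt = [2.0, -1.0]
data = [(x, frozen_model(weights, true_prompt, x)) for x in ([1, 0], [0, 1], [1, 1])]
learned = tune_prompt(weights, data)  # close to true_prompt; weights unchanged
```

The design point carries over: the scenario-specific knowledge lives entirely in `prompt`, so one frozen model can serve many datasets or rooms by swapping prompts.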

With only a few prompt examples, the model achieves the best results on the COCO and PASCAL datasets. SegGPT also shows strong zero-shot scene transfer, for example achieving state-of-the-art performance on the few-shot semantic segmentation test set FSS-1000 without any training on it.
