An exclusive interview with Professor Ou Zhijian from Tsinghua University, providing an in-depth analysis of ChatGPT's aura and future challenges!

ChatGPT attracted global attention as soon as it was launched at the end of November 2022, and its popularity remains strong. Replacing search engines, the singularity, an inflection point, several professions facing an unemployment crisis, mankind facing the ultimate challenge... In the face of such hot topics, this article shares our understanding; discussion and corrections are welcome.

In general, ChatGPT has made significant technological progress, but it still has shortcomings, and the road towards AGI (artificial general intelligence) remains full of challenges!

Figure 1: Screenshot of the web page https://openai.com/blog/chatgpt/

First, let us introduce the Eliza effect in AI research, which is also related to chatbots.

The Eliza effect means that people over-interpret the output of a machine and read into it meaning that it does not actually have. Humans have a psychological tendency to subconsciously regard natural phenomena as similar to human behavior, which psychology calls anthropomorphization, especially when they lack sufficient understanding of a new phenomenon. For example, in ancient times people believed that thunder was caused by a thunder god living in the sky: when the thunder god became angry, it thundered.

The name "Eliza" comes from a chatbot developed by MIT computer scientist Joseph Weizenbaum in 1966. Eliza was designed to play the role of a psychological counselor. The project achieved unexpected success: its effect shocked the users of the time and caused a sensation, yet it was actually just a clever application of simple rule-based grammar.

Some understanding of the principles behind ChatGPT should reduce the Eliza effect when we assess it. Only with sound judgment can the field develop in a healthy and lasting way. To this end, we strive to be rigorous and provide references for readers who want to go deeper. The article is divided into three parts:

- The progress of ChatGPT

- The shortcomings of ChatGPT

- Challenges towards AGI

Personal homepage: http://oa.ee.tsinghua.edu.cn/ouzhijian

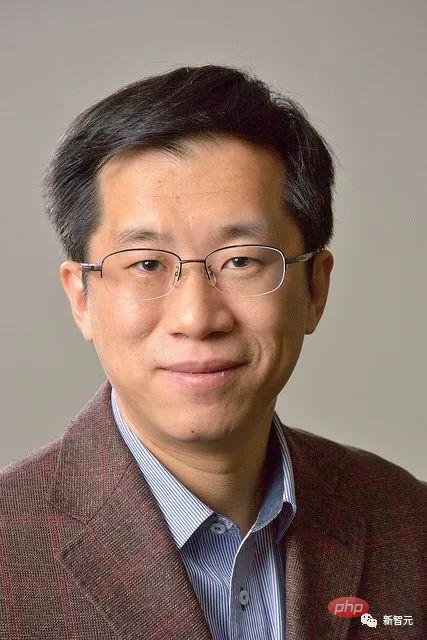

Author: Ou Zhijian, associate professor and doctoral supervisor in the Department of Electronic Engineering, Tsinghua University. He has served as associate editor of IEEE/ACM Transactions on Audio, Speech and Language Processing (TASLP), editorial board member of Computer Speech & Language, member of the IEEE Speech and Language Processing Technical Committee (SLTC), chair of the IEEE Spoken Language Technology (SLT) 2021 conference, distinguished member of the China Computer Federation (CCF), and member of its special committee on speech, dialogue and hearing. He has published nearly 100 papers and has won 3 provincial and ministerial awards and a number of outstanding paper awards at home and abroad. He conducts basic original research on random field language models, learning algorithms for discrete latent variable models, and end-to-end dialogue models and their semi-supervised learning.

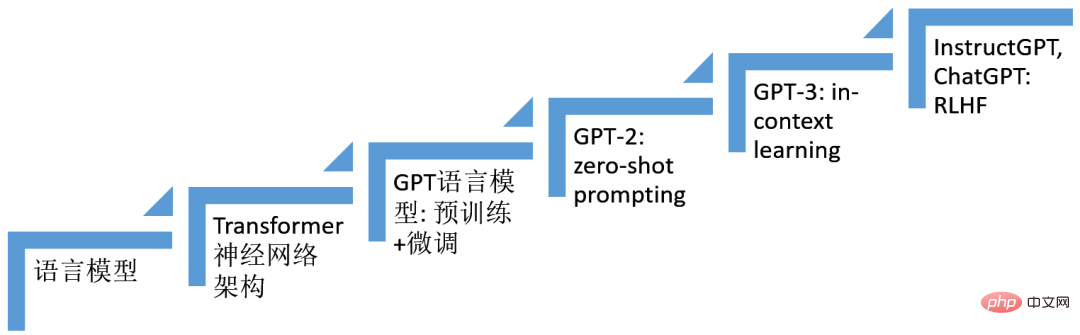

01 The progress of ChatGPT

The progress of ChatGPT stands on the shoulders of giants: many years of artificial intelligence research, especially deep learning, that is, the use of multi-layer neural networks. We have sorted out several technologies that played an important role in building ChatGPT, as shown in the figure below. It is the combined effect of these technologies (six steps) that gave birth to ChatGPT.

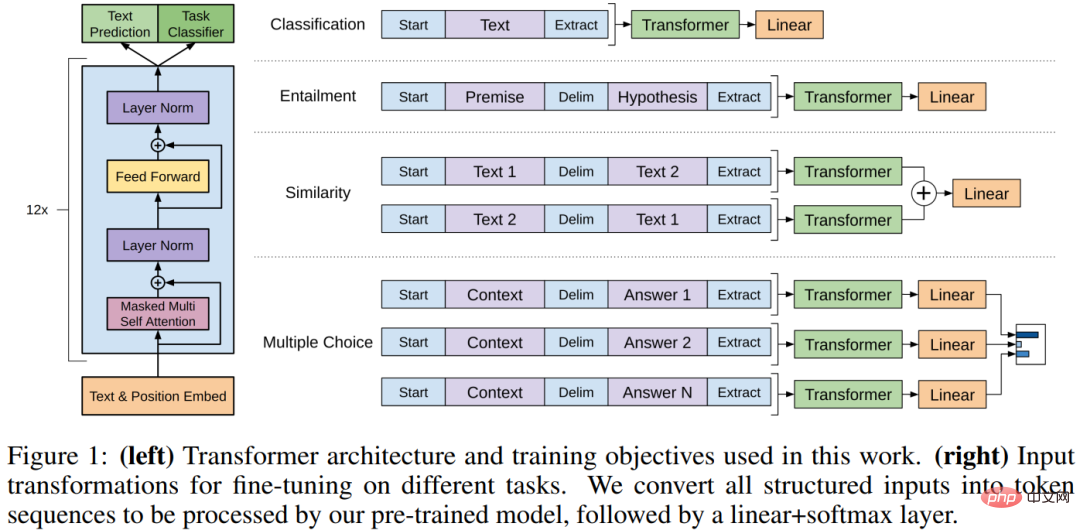

The backbone of ChatGPT is an autoregressive language model based on the Transformer neural network architecture. Fine-tuning, prompting, in-context learning, and reinforcement learning from human feedback (RLHF) were developed step by step and eventually led to the birth of ChatGPT.

Figure 2: The progress of ChatGPT

1. Language model (LM, language model)

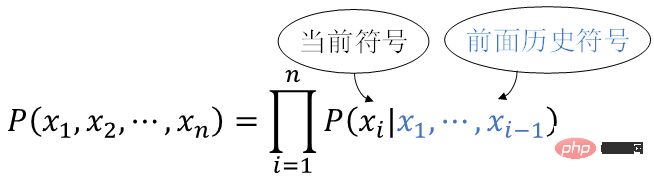

Language model is a probability model of human natural language. Human natural language is a sentence, and a sentence is a sequence of natural language symbols x1,x2,…,xn, subject to probability Distribution

Using the multiplication formula of probability theory, we can get

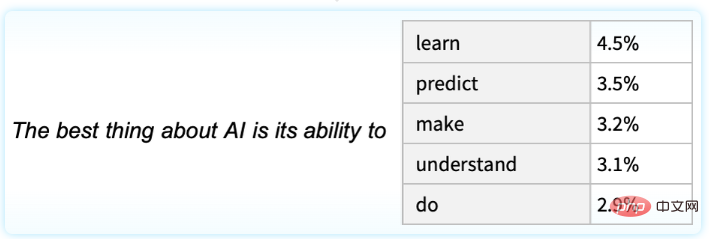

Put this from left to right, each position uses the previous historical symbolsx1,x2,…,xi-1 (i.e. above), calculate the (conditional) probability of the current symbol appearing P(xi | x1,…,x The model of i-1) is called autoregressive language model, which is very natural and can be used to generate the current symbol given the above conditions. For example, the figure below illustrates the occurrence probability of the next symbol given the above text "The best thing about AI is its ability to".

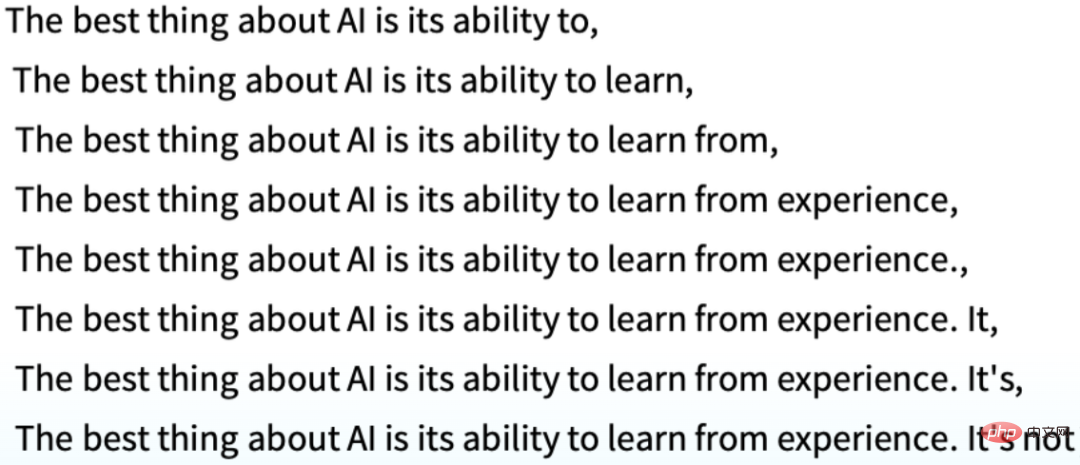

Symbols can thus be generated recursively, one at a time, from $P(x_i \mid x_1, \ldots, x_{i-1})$, and the model parameters can be learned effectively from big data. One technological advance that ChatGPT builds on is the use of neural networks to represent $P(x_i \mid x_1, \ldots, x_{i-1})$.
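To make the chain rule and recursive generation concrete, here is a minimal sketch in Python. It is not how ChatGPT works internally: instead of a neural network it uses bigram counts, so the full context is approximated by the previous symbol only. The corpus, the `<s>` and `</s>` boundary markers, and all function names are assumptions made for illustration.

```python
import math
from collections import Counter, defaultdict

# Tiny made-up corpus (an assumption for illustration only).
CORPUS = [
    "the cat sat on the mat",
    "the dog sat on the rug",
    "the cat ate the fish",
]
BOS, EOS = "<s>", "</s>"  # hypothetical sentence boundary markers


def train_bigram(sentences):
    """Count-based estimate of P(x_i | x_{i-1}), a first-order
    approximation of P(x_i | x_1, ..., x_{i-1})."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        tokens = [BOS] + sentence.split() + [EOS]
        for prev, cur in zip(tokens, tokens[1:]):
            counts[prev][cur] += 1
    return {
        prev: {tok: c / sum(nxt.values()) for tok, c in nxt.items()}
        for prev, nxt in counts.items()
    }


def sentence_log_prob(model, sentence):
    """Chain rule: log P(x_1..x_n) is the sum of the conditional log-probs."""
    tokens = [BOS] + sentence.split() + [EOS]
    total = 0.0
    for prev, cur in zip(tokens, tokens[1:]):
        p = model.get(prev, {}).get(cur, 0.0)
        if p == 0.0:
            return float("-inf")  # unseen transition; no smoothing in this sketch
        total += math.log(p)
    return total


def generate_greedy(model, max_len=10):
    """Generate recursively: append the most probable next symbol each step."""
    tokens = [BOS]
    for _ in range(max_len):
        nxt = max(model[tokens[-1]].items(), key=lambda kv: kv[1])[0]
        if nxt == EOS:
            break
        tokens.append(nxt)
    return " ".join(tokens[1:])


model = train_bigram(CORPUS)
print(sentence_log_prob(model, "the cat sat on the mat"))
print(generate_greedy(model))
```

Greedy generation on such a small model quickly falls into a loop, which is one reason practical systems sample from the distribution rather than always taking the most probable symbol.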

2. Transformer neural network architecture

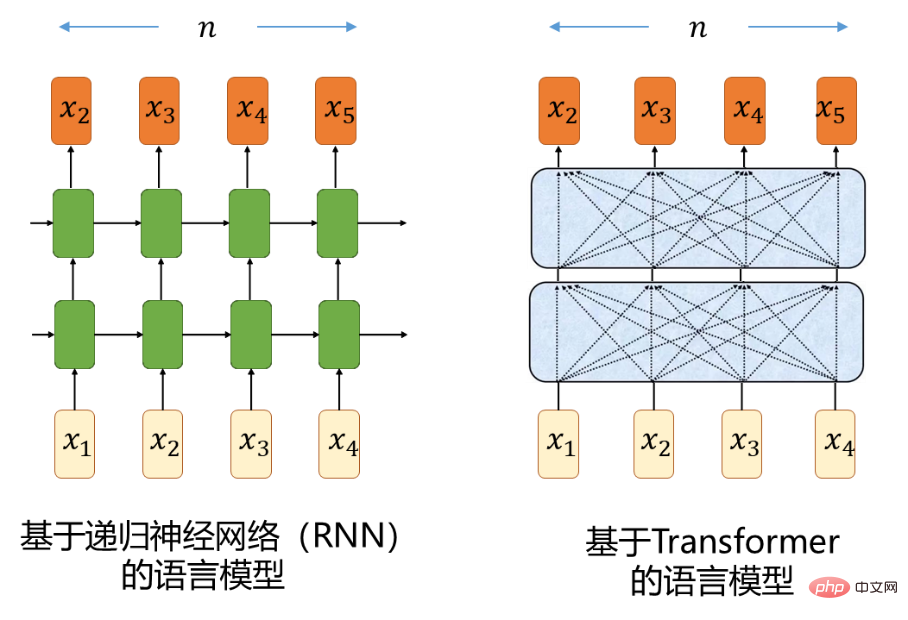

The task is to model the sequence conditional distribution $P(x_i \mid x_1, \ldots, x_{i-1})$. Sequence models based on ordinary recurrent neural networks (RNNs) run into exploding and vanishing gradients [1] during training. For a long time, therefore, sequence modeling relied on RNNs built from long short-term memory (LSTM) units [2], which alleviate exploding and vanishing gradients to a certain extent by introducing a gating mechanism. The Transformer neural network architecture [3], developed in 2017, abandons recurrent computation entirely and models sequences with feed-forward neural networks (FFNNs) combined with a self-attention mechanism, which handles exploding and vanishing gradients better.

Let us get an intuition for the advantage of the Transformer over the RNN in sequence modeling. Consider two positions in a sequence that are n apart. The length of the path that signals must travel through the network between these two positions, in the forward and backward computations, is an important factor in a network's ability to learn long-range dependencies. For an RNN it is O(n); for a Transformer it is O(1). Readers who do not follow this section can skip it without affecting the rest of the article :-)

Figure 3

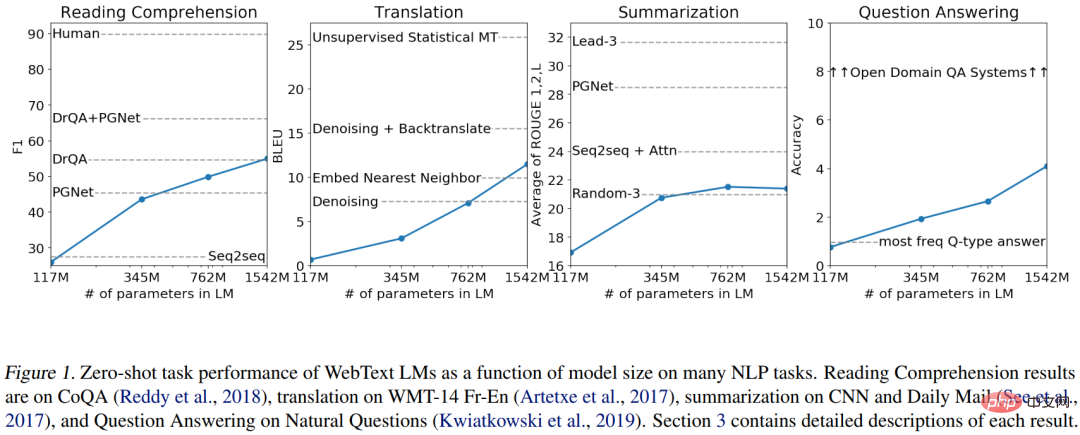
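The O(1) path length can be seen in a small sketch of single-head, causally masked scaled dot-product self-attention, written in plain Python. This is a simplification under stated assumptions: the query, key and value projections are taken to be the identity (a real Transformer learns them), and the function names and toy input are hypothetical.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    z = sum(exps)
    return [e / z for e in exps]


def causal_self_attention(x):
    """Single-head scaled dot-product self-attention with a causal mask.

    x is a list of n vectors (lists of floats). For brevity the query, key
    and value projections are the identity; this is an assumption of the
    sketch, not part of the Transformer architecture itself.
    """
    d = len(x[0])
    outputs, weights = [], []
    for i, query in enumerate(x):
        # Position i may only attend to positions 0..i (autoregressive).
        scores = [
            sum(q * k for q, k in zip(query, x[j])) / math.sqrt(d)
            for j in range(i + 1)
        ]
        attn = softmax(scores)
        weights.append(attn)
        # Output i is a weighted sum of all visible positions.
        outputs.append(
            [sum(a * x[j][c] for j, a in enumerate(attn)) for c in range(d)]
        )
    return outputs, weights


tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # toy embeddings
outputs, weights = causal_self_attention(tokens)
print(weights[2])  # the last position weights every earlier position directly
```

Each output position combines every earlier position in a single step, whatever the distance between them, whereas an RNN would have to pass the signal through every intermediate step.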

3. GPT language model and pre-training fine-tuning technology

Natural language understanding covers a wide range of tasks, such as question answering, semantic similarity assessment, textual entailment, document classification, machine translation, reading comprehension, and summarization. It was found that one can first train a large Transformer-LM (often called the backbone) on a large amount of unlabeled text and then, for each downstream task, fine-tune this large Transformer network on that task's annotated data, which brings a great performance improvement. This is the so-called pre-training plus fine-tuning technique. Typical examples are GPT [4] and BERT [5], developed in 2018-2019. GPT is an autoregressive language model based on the Transformer, and BERT is a masked language model (MLM) based on the Transformer.

As stated in the original GPT text, "Our work broadly falls under the category of semi-supervised learning for natural language." Combining unsupervised pre-training with supervised fine-tuning is a kind of semi-supervised learning, whose essence is to perform supervised and unsupervised learning together.

4. GPT-2 and zero-shot prompting technology

Under the pre-training plus fine-tuning framework, each downstream task still requires collecting and annotating a considerable amount of labeled data and then fine-tuning to obtain a "narrow expert" for that task. Each narrow model has to be built and stored separately, which costs time, labor, and resources. It would be great if this were not needed for every task. The original GPT-2 text from 2019 [6] states this vision clearly. Can you already sense the flavor of moving towards AGI? :-)

"We would like to move towards more general systems which can perform many tasks – eventually without the need to manually create and label a training dataset for each one."

For a machine to learn to perform a natural language understanding task (such as question answering), the essence is to estimate the conditional distribution P(output | input).

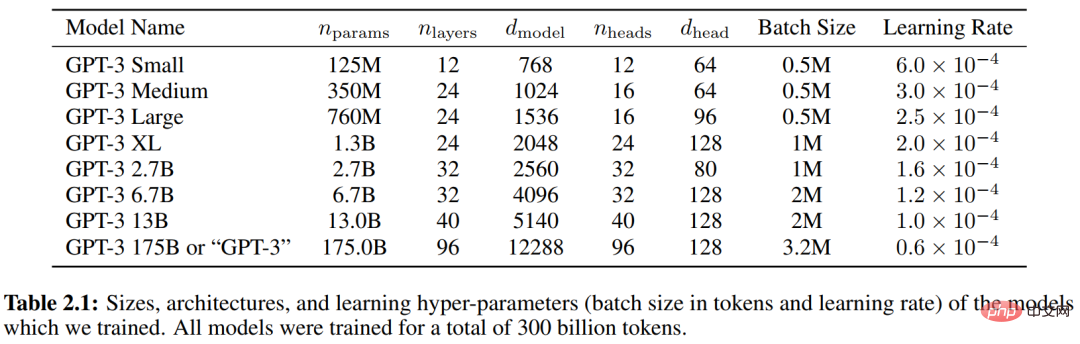

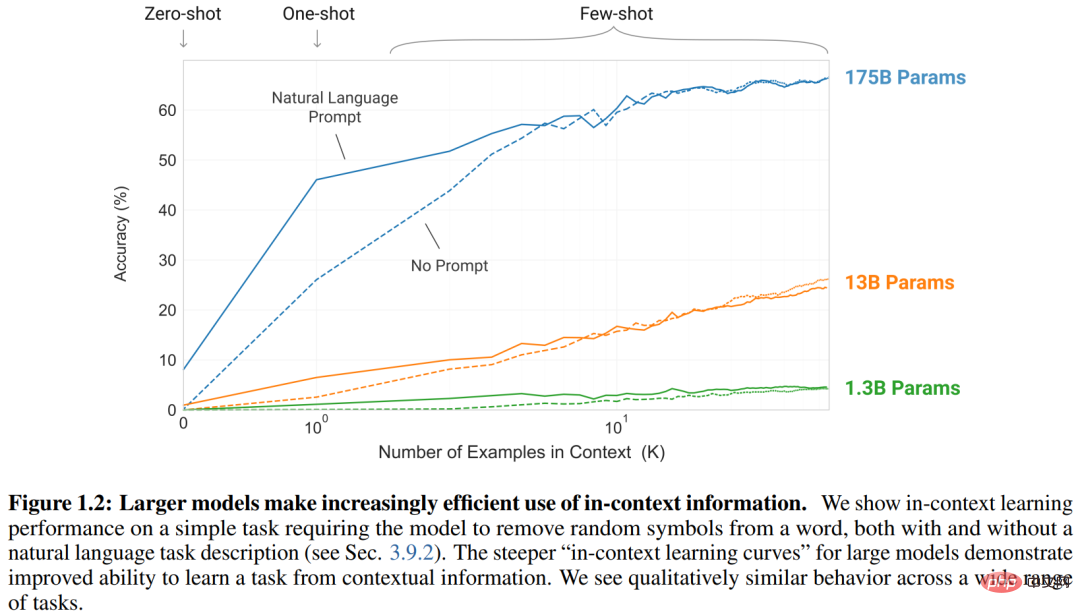

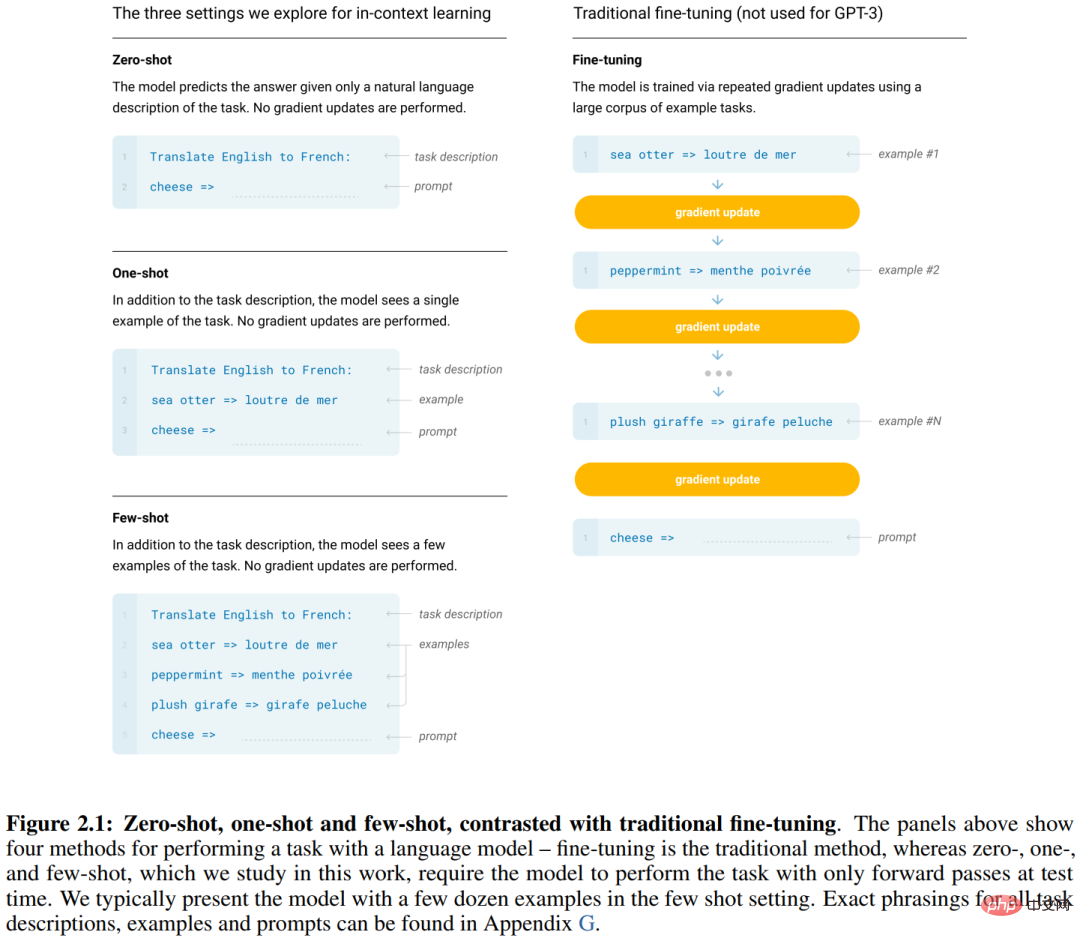

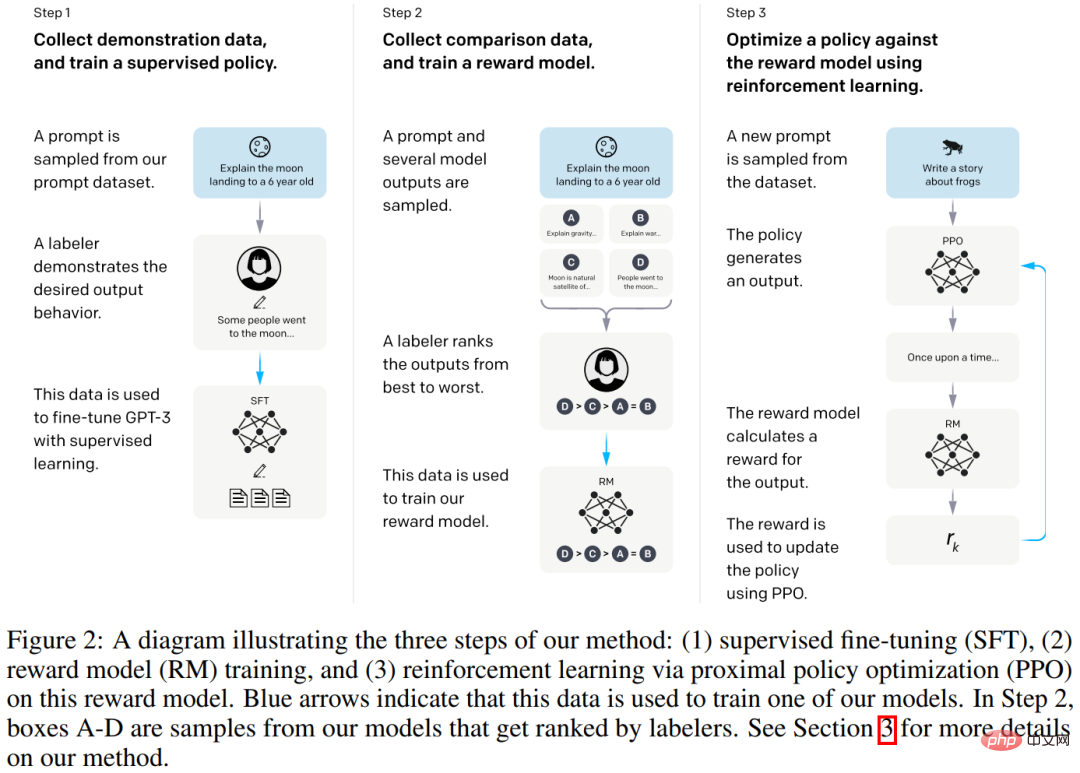

Since a general system can perform many different tasks, its modeling should further be conditioned on the task, that is, modeling P(output | task, input). An innovative approach represented by GPT-2 is that task, input, and output are all expressed as symbol sequences in natural language. In this way, the model P(output | task, input) boils down to a language model: given the context, generate the next symbol recursively. The training data for different tasks are uniformly organized as symbol sequences, for example:

- (translate to french, english text, french text)
- (answer the question, document, question, answer)

Here, task is often called the prompt. There are many prompting methods and much related research, which this article does not cover.

Similar ideas had been studied before GPT-2. GPT-2 took scale (of both the training set and the model) to a new level: it collected millions of web pages to create the large dataset WebText (40GB) and trained Transformer-LMs with up to 1.5B parameters, demonstrating excellent performance on multiple tasks under zero-shot conditions, without any parameter or architecture modification. It is worth pointing out that the GPT-2 approach fully embodies multitask learning and meta-learning, which offers an intuitive explanation for its excellent performance.

(From the original GPT-2 text [6], "Language models are unsupervised multitask learners". GPT-2 trained a series of Transformer-LMs with 117M, 345M, 762M, and 1542M parameters. The figure above shows that performance on each task keeps improving as the number of model parameters increases.)

5. GPT-3 and in-context learning

The GPT-3 work of 2020 [7] continues the vision and technical route of GPT-2. It hopes to overcome the remaining need for task-specific annotation and fine-tuning in each task ("there is still a need for task-specific datasets and task-specific fine-tuning") and to build a general system that works like a human. The article clearly points out that one motivation for the research is the observation that "humans do not require large supervised datasets to learn most language tasks – a brief directive in natural language (e.g. 'please tell me if this sentence describes something happy or something sad') or at most a tiny number of demonstrations (e.g. 'here are two examples of people acting brave; please give a third example of bravery') is often sufficient to enable a human to perform a new task to at least a reasonable degree of competence." That is to say, given a task description (directive) and demonstration samples (demonstrations), a machine should be able to perform a variety of tasks like a human.

GPT-3 scaled up to a new level once again. The training set is 45TB of text before cleaning and 570GB after cleaning, and the Transformer-LM is more than 100 times larger than GPT-2 (1.5B), reaching 175B parameters (see Table 2.1 below). The GPT-2 article mainly experimented with the zero-shot prompting setting, while GPT-3 experimented with zero-shot, one-shot, and few-shot settings, collectively called in-context learning. The number of demonstrations given can be 0, 1, or more, but a task description is always included; see the illustration in Figure 2.1. As Figure 1.2 shows, the performance of models of different sizes improves as the number of demonstrations increases.

(The above are all from the original GPT-3 text [7], "Language Models are Few-Shot Learners".)

6. InstructGPT, ChatGPT and RLHF technology

The current practice of using large language models (LLMs) for natural language understanding is based on P(output | task, input): given the context (task, input), the next symbol is generated recursively. One starting point of the InstructGPT research is that, in human-computer dialogue, making language models bigger does not inherently make them better at following user intent. Large language models can also behave unsatisfactorily, such as fabricating facts, generating biased and harmful text, or simply not being helpful to users.

This is because the language modeling objective used by many recent large LMs is to predict the next symbol on a web page from the Internet, which differs from the objective "follow the user's instructions helpfully and safely". We therefore say that the language modeling objective is misaligned. Avoiding these unintended behaviors is especially important when language models are deployed and used in hundreds of applications. In March 2022, the InstructGPT work [8] demonstrated a way to align language models with user intent on a wide range of tasks by fine-tuning with human feedback; the resulting model is called InstructGPT. Specifically, as shown in the figure below (Figure 2 in the original InstructGPT text [8]), the InstructGPT construction process consists of three steps:

- Step 1: collect demonstration data and fine-tune GPT-3 (175B) on it to obtain a supervised policy.
- Step 2: collect comparison data and train a reward model (RM), with a size of 6B.
- Step 3: reinforcement learning from human feedback (RLHF), specifically using a policy optimization method called PPO [9].

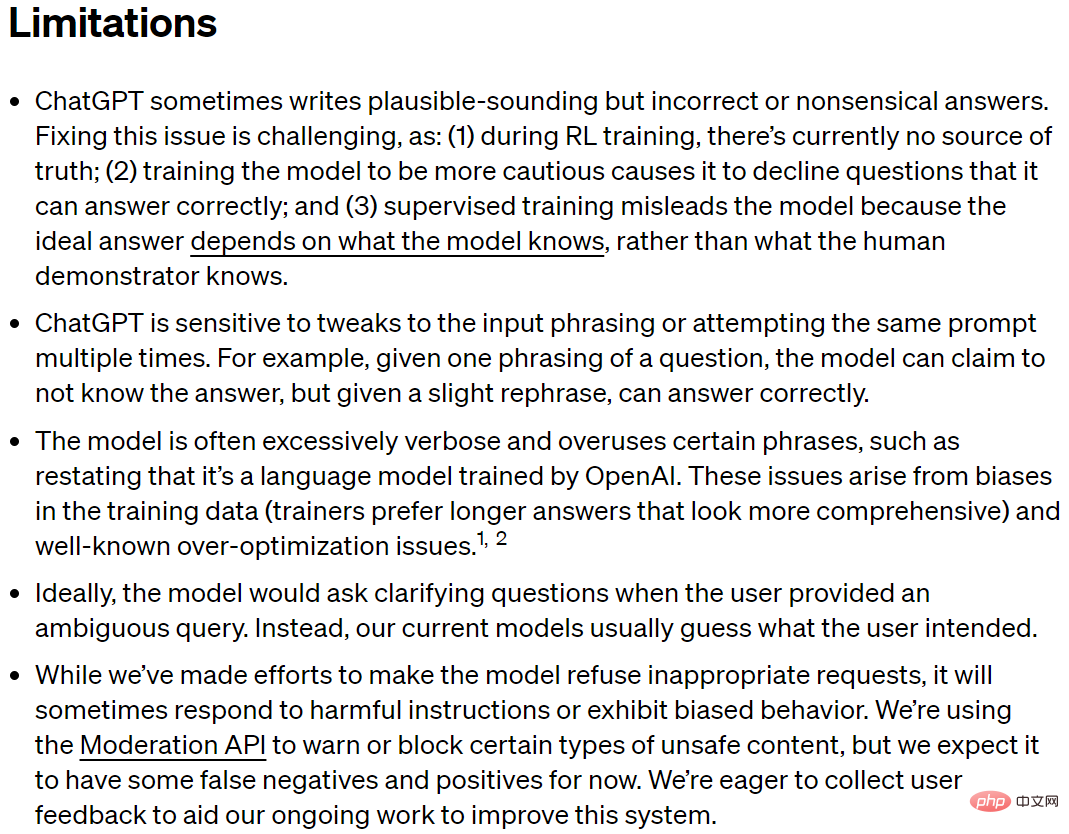
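The reward model in the second step learns from human comparisons between answers. As a hedged illustration, the sketch below implements a pairwise ranking loss of the form $-\log \sigma(r_w - r_l)$, which to our knowledge is the form reported for InstructGPT [8]; the article itself does not spell out the loss, so treat this as an assumption. Here $r_w$ and $r_l$ are the scalar rewards assigned to the answer a human preferred and the one they rejected. The function names and the example numbers are hypothetical.

```python
import math


def pairwise_ranking_loss(reward_preferred, reward_rejected):
    """-log(sigmoid(r_w - r_l)): small when the preferred answer scores higher."""
    diff = reward_preferred - reward_rejected
    # Numerically stable form of -log(sigmoid(diff)) = log(1 + exp(-diff)).
    if diff >= 0:
        return math.log1p(math.exp(-diff))
    return -diff + math.log1p(math.exp(diff))


def mean_loss(comparisons):
    """Average loss over (reward_preferred, reward_rejected) pairs."""
    return sum(pairwise_ranking_loss(w, l) for w, l in comparisons) / len(comparisons)


# Hypothetical scalar rewards for two answers to the same prompt (made-up numbers).
print(pairwise_ranking_loss(2.0, -1.0))  # pair ranked correctly: small loss
print(pairwise_ranking_loss(-1.0, 2.0))  # pair ranked wrongly: large loss
print(mean_loss([(2.0, -1.0), (0.5, 0.0)]))
```

Minimizing this loss pushes the reward model to score preferred answers above rejected ones; the third step then optimizes the policy against the learned reward.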

In November 2022, OpenAI released the ChatGPT model [10], which is basically a successor to the InstructGPT model. It uses the same three-step training method but collects larger-scale data for model training and system building.

Summary: The development ran from language model research, the Transformer neural network architecture, the GPT language model with pre-training and fine-tuning, GPT-2 with zero-shot prompting, and GPT-3 with in-context learning to InstructGPT, ChatGPT, and RLHF. In hindsight this looks like a relatively clear technical path, but in fact other types of language models (such as energy-based language models [11]), other types of neural network architectures (such as state space models [12]), other types of pre-training methods (such as those based on latent variable models [13]), and other reinforcement learning methods (such as those based on user simulation [14]) are all developing continuously, and research on new methods has never stopped. Different methods inspire and promote each other, forming a rolling torrent that rushes endlessly forward towards artificial general intelligence. One very important thread running through all six parts of ChatGPT's development is the scale effect, commonly known as "the aesthetics of violence". When the route is basically correct, increasing scale is a good way to improve performance. Quantitative change leads to qualitative change, but if the route is deficient, quantitative change may not lead to qualitative change.

Let us now turn to the shortcomings of ChatGPT.

02 Shortcomings of ChatGPT

In recent years, a very good practice at top conferences in the field of artificial intelligence (such as ICML and ACL) has been to add a requirement for submissions: the article must include a section describing the limitations of the work. On the contrary, avoiding any discussion of shortcomings is not rigorous and does not help readers gain a comprehensive understanding of a technology. It may mislead the public, encourage the Eliza effect, and even lead to wrong judgments. In fact, the original ChatGPT text [10] makes a relatively comprehensive statement of its shortcomings.

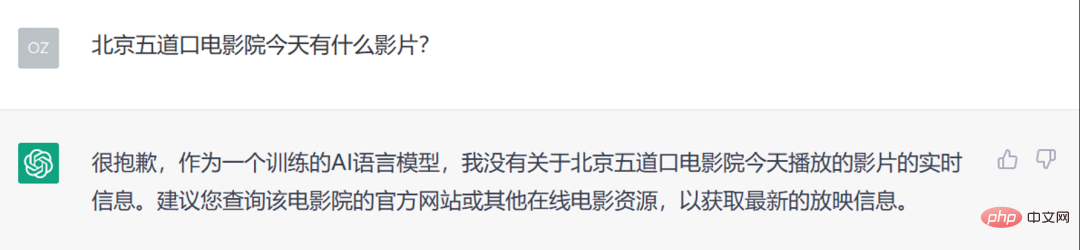

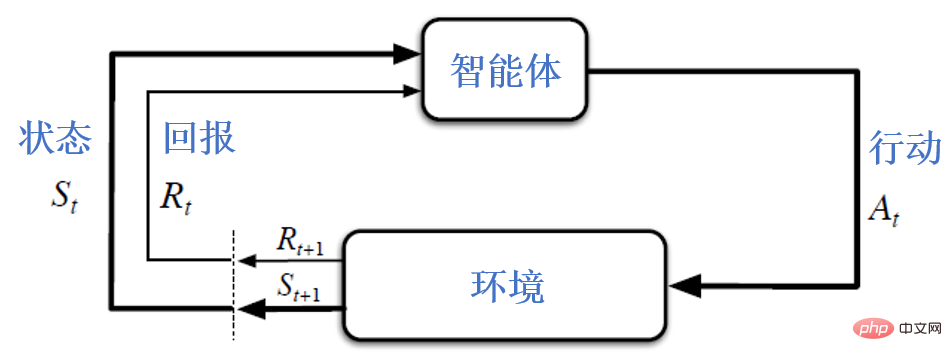

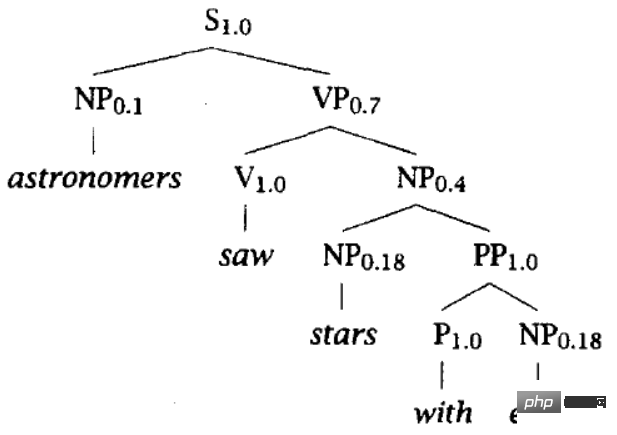

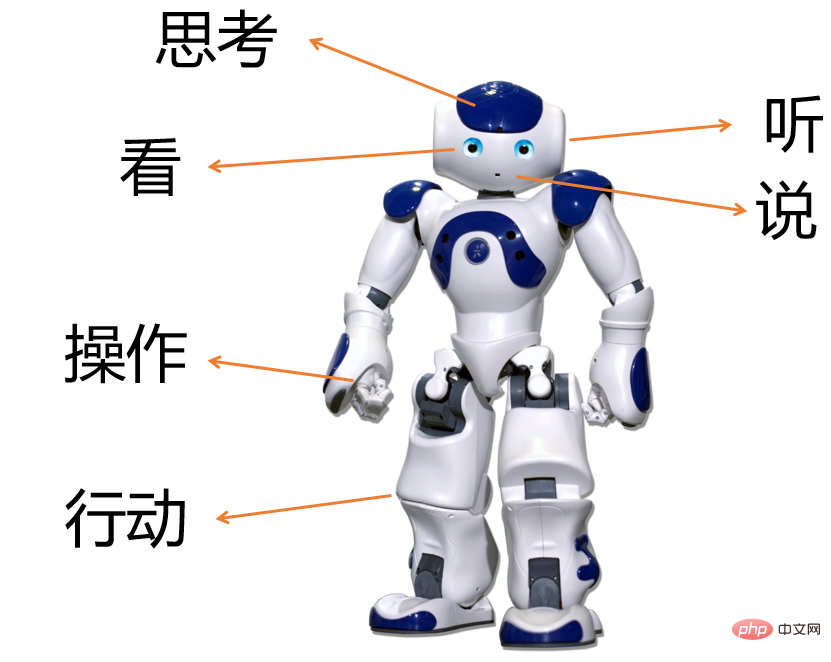

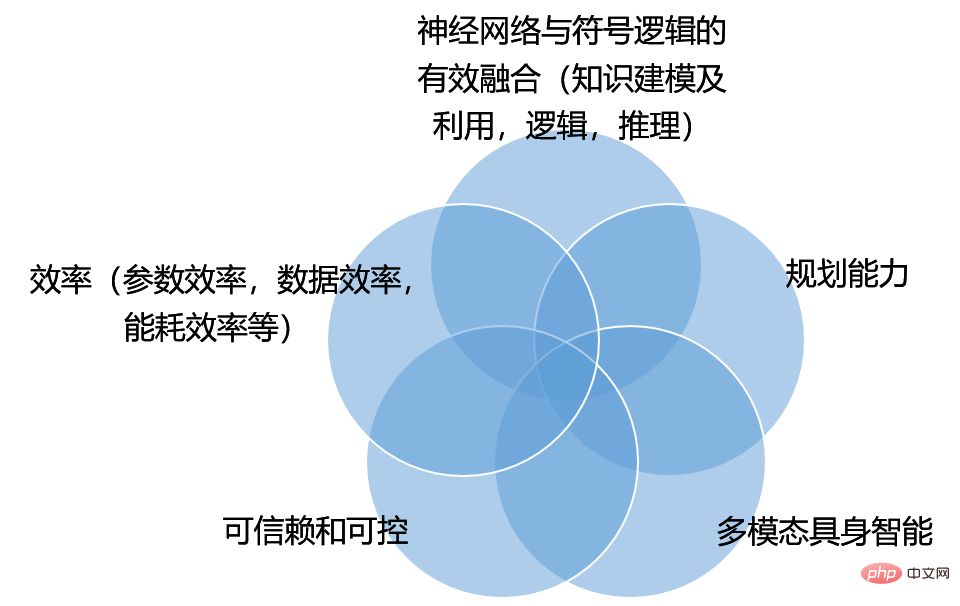

Readers can read the English above directly; a brief explanation follows, and the examples below help in understanding it. Our further analysis of the shortcomings of ChatGPT comes in the next chapter, where we explore the challenges on the way to AGI (artificial general intelligence).

- L1. ChatGPT sometimes writes answers that sound plausible but are incorrect or nonsensical.
- L2. ChatGPT is sensitive to tweaks in the input wording and to repeated attempts at the same prompt.
- L3. ChatGPT's output is often excessively verbose and overuses certain phrases, such as restating that it is a language model trained by OpenAI.
- L4. Ideally, the model would ask clarifying questions when a user provides an ambiguous query. Instead, current models usually guess what the user intended.
- L5. Although efforts have been made to have the model refuse inappropriate requests, it may still sometimes respond to harmful instructions or exhibit biased behavior.

Figure 4: ChatGPT example about cow eggs and eggs

Figure 5: Example of insufficient real-time information processing in ChatGPT

Based on the shortcomings described in the original ChatGPT text [10], we summarize the shortcomings of ChatGPT in the following five points. These five points also basically represent the challenges ChatGPT faces on the way to artificial general intelligence (AGI); they are important scientific issues and key technologies that urgently need to be solved on the road to AGI. It is worth pointing out that this article analyzes shortcomings and challenges not task by task but from the perspective of the issues common to all tasks. If tasks are rows and issues are columns, then we analyze by column. Analyzing by row can also yield very good analysis and judgment.
When discussing AGI, we need to go beyond a focus on natural language processing alone and examine artificial intelligence research and technology from a broader perspective. According to the classic work on artificial intelligence [15], artificial intelligence is the study and design of intelligent agents. An intelligent agent is any device that can observe its surrounding environment and take actions to maximize its chance of success or, in more academic terms, to maximize expected utility or expected return, as shown in the figure below. Careful readers will notice that the figure below is also commonly used as the framework diagram for reinforcement learning. Indeed, [15] contains a similar statement: "Reinforcement learning might be considered to encompass all of AI." With these concepts in mind, let us look at the shortcomings of ChatGPT.

Figure 6: The interaction between the agent and the environment, often used as a framework diagram for reinforcement learning [16]

1. ChatGPT makes things up (it gives wrong information with a straight face) and has obvious deficiencies in knowledge modeling and utilization.

This basically corresponds to L1 above and can be clearly seen in the earlier examples. What we call knowledge includes common-sense knowledge, specialized knowledge, and real-time information. In terms of common sense, for example, ChatGPT initially showed that it did not know that cows are mammals and cannot lay eggs. In terms of real-time information, ChatGPT is essentially a large autoregressive language model based on the Transformer architecture; the knowledge it has learned is limited to its training data, with a cutoff in 2021. Readers can try ChatGPT for themselves and discover its shortcomings in this regard.
At a deeper level, the above shortcomings reflect the long-standing dispute in the history of artificial intelligence between two schools of thought, connectionism and symbolism.

Figure 7

Connectionism holds that knowledge is buried in the weights of a neural network, and that training the network to adjust its weights lets it learn knowledge. Symbolism holds that knowledge is organized by symbolic systems, such as relational databases, knowledge graphs, specialized knowledge in mathematics and physics, and mathematical logic. The two schools of thought are also intersecting and merging. Therefore, to overcome the shortcomings of ChatGPT in knowledge modeling and utilization, a deep-seated challenge for existing technology is the effective integration of neural networks and symbolic logic, driven on two wheels by data and knowledge. There has been a lot of research over the years, but in general, finding an effective method of integration still requires continued effort.

2. ChatGPT has obvious shortcomings in multi-turn dialogue interaction and lacks planning capabilities.

This basically corresponds to L4 above, which only points out that ChatGPT does not ask clarifying questions. We see shortcomings more serious than L4. By construction, ChatGPT models the conditional distribution P(output | input) for prediction and does no planning. In the framework shown in Figure 6, planning is a very important concept. The purpose of planning is to maximize expected utility, which is significantly different from a large language model maximizing the conditional likelihood P(output | input) of language symbols.
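The difference between committing to the most likely reading and maximizing expected utility can be illustrated with a toy decision problem. Everything in this sketch is hypothetical: the intents, the probabilities, the utility values, and the function names are made up to show the mechanics and are not taken from ChatGPT or from the article.

```python
# Hypothetical belief over what an ambiguous user query means (made-up numbers).
INTENT_PROBS = {"book_flight": 0.6, "book_hotel": 0.4}

# Hypothetical utility of each system action under each true intent.
UTILITY = {
    "answer_as_flight": {"book_flight": 1.0, "book_hotel": -2.0},
    "answer_as_hotel": {"book_flight": -2.0, "book_hotel": 1.0},
    "ask_clarifying_question": {"book_flight": 0.5, "book_hotel": 0.5},
}


def expected_utility(action):
    """E[U(action)] = sum over intents of P(intent) * U(action, intent)."""
    return sum(p * UTILITY[action][intent] for intent, p in INTENT_PROBS.items())


def plan():
    """Planning: choose the action with the highest expected utility."""
    return max(UTILITY, key=expected_utility)


def guess_most_likely():
    """Likelihood-style behaviour: commit to the most probable intent."""
    intent = max(INTENT_PROBS, key=INTENT_PROBS.get)
    answer_for = {"book_flight": "answer_as_flight", "book_hotel": "answer_as_hotel"}
    return answer_for[intent]


print(guess_most_likely())  # answer_as_flight
print(plan())               # ask_clarifying_question
```

With these numbers, guessing is wrong 40% of the time and costly when wrong, so the utility-maximizing action is to ask a clarifying question, which is the behavior L4 says current models lack.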
InstructGPT takes the view that the system should follow user intent and uses RLHF (reinforcement learning from human feedback) to align the system's output with human intent, which partially alleviates the misalignment caused by GPT-3's supervised learning without planning. Further improving planning capabilities to maximize expected utility will be a big challenge on the way from ChatGPT to AGI.

3. ChatGPT's behavior is uncontrollable.

This basically corresponds to L2, L3, and L5 above. The system output is sensitive to the input (L2), there is no way to stop it from being too verbose or overusing certain phrases (L3), and there is no way to stop it from responding to harmful instructions or exhibiting biased behavior (L5). These shortcomings appear not only in ChatGPT: computer vision, speech recognition, and other intelligent systems built with current deep learning technology have similar controllability problems.

Human beings have Socratic wisdom, that is, "knowing that you do not know". This is exactly what current deep neural network systems lack: they do not know when they are wrong. Most of today's neural network systems are over-confident and do not report errors to humans. Their confidence remains surprisingly high even when they make mistakes, which makes them difficult to trust and control. Being trustworthy and controllable will be a big challenge on the way from ChatGPT to AGI.

4. ChatGPT is not efficient enough.

This point was not given much weight in ChatGPT's own statement of its shortcomings. Efficiency includes parameter efficiency, data efficiency, energy efficiency, and so on. ChatGPT has achieved outstanding performance by using extremely large data, training extremely large models, and continuously increasing scale. However, at the same scale (the same number of model parameters, the same amount or cost of data annotation, the same computing power, the same energy consumption), does ChatGPT represent the most advanced technology? The answer is often no.
For example, recent research [19] reported that the LLaMA model with 13B parameters outperformed the 175B GPT-3 model on multiple benchmarks, so the 13B LLaMA model has better parameter efficiency. Our own recent work has also shown that a well-designed knowledge-retrieval dialogue model with only 100M parameters can significantly outperform a large 1B model.

Energy efficiency is easy to understand, so let us look at data efficiency. The construction of intelligent systems today is stuck in a supervised learning paradigm that relies on large amounts of manual annotation, which results in low data efficiency. With large autoregressive language models, it was found that one can first train on a large amount of unannotated text and then use fine-tuning or prompting. This partially alleviates the low data efficiency of current deep learning technology, but task-related annotated data are still needed, and the larger the model, the greater the annotation requirement. How to use labeled and unlabeled data together more efficiently is the challenge in achieving data efficiency.

5. Multimodal embodied intelligence is an important part of exploring AGI.

ChatGPT is limited to text input and output, and the many mistakes it makes also illustrate its serious deficiencies in semantics, knowledge, and causal reasoning. The meaning of words seems to lie in their statistical co-occurrence rather than in their grounding in the real world. So even if future language models become larger and larger, they will still perform poorly on some basic physical common sense. Intelligence goes far beyond language ability. The basic element of biological intelligence lies in an animal's ability to interact with the world through sensing and movement [20].
Intelligent machines of the future may not necessarily have a human form, but multimodal interaction between machine and environment through a body (listening, speaking, reading, writing, thinking, manipulating objects, acting, and so on) will greatly promote the development of machine intelligence. It will also help machine intelligence transcend the limitations of the single modality of text and serve humans better.

Summary: From a linguistic perspective, language knowledge includes the structure and characteristics of words (morphology and lexicon), how words form phrases and sentences (syntax), and the meaning of morphemes, words, phrases, sentences, and discourse (semantics) [21]. Through a very large model, ChatGPT has learned considerable language knowledge (especially knowledge below the semantic level), has a certain ability to understand language, and can generate fluent sentences, but it also has obvious shortcomings.

In response to these shortcomings, we have sorted out several challenges on the way from ChatGPT to artificial general intelligence (AGI), as shown in Figure 8, and have also pointed out several important research topics. It is worth noting that these research areas are not isolated but intersect with one another. For example, research on trustworthiness and controllability hopes that system output conforms to social norms, which raises the question of how to reflect those norms in the system's utility so that the output of system planning conforms to them. Research on system controllability therefore overlaps with research on improving system planning capabilities. As another example, how should knowledge be integrated into system planning and decision-making?

Figure 8: Challenges towards AGI

ChatGPT is an important event in artificial intelligence research. It is very important to understand rigorously its progress, its shortcomings, and the challenges ahead on the way to AGI.
We believe that by seeking truth and being pragmatic, and by constantly innovating, we can promote the development of artificial intelligence to a new height. We welcome everyone to discuss and correct us, thank you!

Since a general system can perform many different tasks, its modeling should be further Conditional on task

An innovative approach represented by GPT-2 is, . For example,

In recent years, a very good practice of top conferences in the field of artificial intelligence (such as ICML, ACL) is to add an item for submissions As required, a section must be left in the article to describe the

Limitations ##The shortcomings of ChatGPT (screenshot from ChatGPT original text [10])

04 Conclusion

The above is the detailed content of An exclusive interview with Professor Ou Zhijian from Tsinghua University, providing an in-depth analysis of ChatGPT's aura and future challenges!. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

ChatGPT now allows free users to generate images by using DALL-E 3 with a daily limit

Aug 09, 2024 pm 09:37 PM

ChatGPT now allows free users to generate images by using DALL-E 3 with a daily limit

Aug 09, 2024 pm 09:37 PM

DALL-E 3 was officially introduced in September of 2023 as a vastly improved model than its predecessor. It is considered one of the best AI image generators to date, capable of creating images with intricate detail. However, at launch, it was exclus

Bytedance Cutting launches SVIP super membership: 499 yuan for continuous annual subscription, providing a variety of AI functions

Jun 28, 2024 am 03:51 AM

Bytedance Cutting launches SVIP super membership: 499 yuan for continuous annual subscription, providing a variety of AI functions

Jun 28, 2024 am 03:51 AM

This site reported on June 27 that Jianying is a video editing software developed by FaceMeng Technology, a subsidiary of ByteDance. It relies on the Douyin platform and basically produces short video content for users of the platform. It is compatible with iOS, Android, and Windows. , MacOS and other operating systems. Jianying officially announced the upgrade of its membership system and launched a new SVIP, which includes a variety of AI black technologies, such as intelligent translation, intelligent highlighting, intelligent packaging, digital human synthesis, etc. In terms of price, the monthly fee for clipping SVIP is 79 yuan, the annual fee is 599 yuan (note on this site: equivalent to 49.9 yuan per month), the continuous monthly subscription is 59 yuan per month, and the continuous annual subscription is 499 yuan per year (equivalent to 41.6 yuan per month) . In addition, the cut official also stated that in order to improve the user experience, those who have subscribed to the original VIP

Context-augmented AI coding assistant using Rag and Sem-Rag

Jun 10, 2024 am 11:08 AM

Context-augmented AI coding assistant using Rag and Sem-Rag

Jun 10, 2024 am 11:08 AM

Improve developer productivity, efficiency, and accuracy by incorporating retrieval-enhanced generation and semantic memory into AI coding assistants. Translated from EnhancingAICodingAssistantswithContextUsingRAGandSEM-RAG, author JanakiramMSV. While basic AI programming assistants are naturally helpful, they often fail to provide the most relevant and correct code suggestions because they rely on a general understanding of the software language and the most common patterns of writing software. The code generated by these coding assistants is suitable for solving the problems they are responsible for solving, but often does not conform to the coding standards, conventions and styles of the individual teams. This often results in suggestions that need to be modified or refined in order for the code to be accepted into the application

Can fine-tuning really allow LLMs to learn new things? Introducing new knowledge may make the model produce more hallucinations

Jun 11, 2024, 03:57 PM

Large language models (LLMs) are trained on huge text corpora, from which they acquire large amounts of real-world knowledge. This knowledge is embedded in their parameters and can then be used when needed. The knowledge of these models is fixed at the end of training: once pre-training ends, the model effectively stops learning. The model is then aligned or fine-tuned so that it learns how to leverage this knowledge and respond more naturally to user questions. But sometimes the model's knowledge is not enough, and although the model can access external content through RAG, it is considered beneficial to adapt the model to new domains through fine-tuning. This fine-tuning is performed using input written by human annotators or generated by other LLMs, where the model encounters additional real-world knowledge and integrates it

To provide a new scientific and complex question answering benchmark and evaluation system for large models, UNSW, Argonne, University of Chicago and other institutions jointly launched the SciQAG framework

Jul 25, 2024, 06:42 AM

Editor | ScienceAI. Question answering (QA) datasets play a vital role in promoting natural language processing (NLP) research. High-quality QA datasets can be used not only to fine-tune models but also to evaluate the capabilities of large language models (LLMs), especially their ability to understand and reason about scientific knowledge. Although there are currently many scientific QA datasets covering medicine, chemistry, biology and other fields, these datasets still have some shortcomings. First, the data form is relatively simple, and most of it consists of multiple-choice questions. These are easy to evaluate, but they limit the range of answers the model can select and cannot fully test the model's ability to answer scientific questions. In contrast, open-ended Q&A

SOTA performance: Xiamen University's multi-modal protein-ligand affinity prediction AI method combines molecular surface information for the first time

Jul 17, 2024, 06:37 PM

Editor | KX. In the field of drug research and development, accurately and effectively predicting the binding affinity of proteins and ligands is crucial for drug screening and optimization. However, current studies do not take into account the important role of molecular surface information in protein-ligand interactions. Based on this, researchers from Xiamen University proposed a novel multi-modal feature extraction (MFE) framework, which for the first time combines information on protein surface, 3D structure and sequence, and uses a cross-attention mechanism to align features across the different modalities. Experimental results demonstrate that this method achieves state-of-the-art performance in predicting protein-ligand binding affinities. Furthermore, ablation studies demonstrate the effectiveness and necessity of protein surface information and multimodal feature alignment within this framework. Related research begins with "S

ChatGPT is now available for macOS with the release of a dedicated app

Jun 27, 2024, 10:05 AM

OpenAI’s ChatGPT Mac application is now available to everyone, having been limited to ChatGPT Plus subscribers for the last few months. The app installs just like any other native Mac app, as long as you have an up-to-date Apple S

Targeting markets such as AI, GlobalFoundries acquires Tagore Technology's gallium nitride technology and related teams

Jul 15, 2024, 12:21 PM

According to news from this website on July 5, GlobalFoundries issued a press release on July 1 this year announcing the acquisition of Tagore Technology’s power gallium nitride (GaN) technology and intellectual property portfolio, hoping to pursue higher efficiency and better performance in automotive, Internet of Things and artificial intelligence data center applications. As technologies such as generative AI continue to develop in the digital world, gallium nitride (GaN) has become a key solution for sustainable and efficient power management, especially in data centers. This website quoted the official announcement as saying that, as part of this acquisition, Tagore Technology’s engineering team will join GlobalFoundries to further develop gallium nitride technology. G