OpenAI President: GPT-4 is not perfect but it is definitely different


News on March 16: artificial intelligence research company OpenAI released its highly anticipated text generation AI model GPT-4 yesterday. Greg Brockman, co-founder and president of OpenAI, said in an interview that GPT-4 is not perfect, but it is definitely different.

GPT-4 improves on its predecessor GPT-3 in a number of key ways, such as giving more factually accurate answers and letting developers control its style and behavior more easily. GPT-4 is also multimodal in the sense that it can understand images: it can caption photos and even describe in detail what a photo shows.

But GPT-4 also has serious flaws. Just like GPT-3, the model suffers from "hallucinations" (i.e., it generates text that is irrelevant to, or inaccurate with respect to, the source material) and makes basic reasoning errors. OpenAI gave an example on its blog: GPT-4 described Elvis Presley as "the son of an actor", but in fact neither of his parents was an actor.

When asked to compare GPT-4 with GPT-3, Brockman gave a one-word answer: different. He explained: "GPT-4 is definitely different, even though it still has a lot of problems and bugs. But you can see a jump in skills in subjects like calculus or law. It used to perform very poorly in some areas, but now it has reached a level beyond that of ordinary people."

The test results support Brockman's view. On a college-entrance calculus exam, GPT-4 scored 4 (out of 5), GPT-3 scored 1, and GPT-3.5, which sits between GPT-3 and GPT-4, also scored 4. On a mock bar exam, GPT-4 scored in the top 10%, while GPT-3.5 hovered around the bottom 10%.

At the same time, much of the attention on GPT-4 centers on the multimodality mentioned above. Unlike GPT-3 and GPT-3.5, which can only accept text prompts, such as a request to "write an article about giraffes," GPT-4 can accept both image and text prompts to perform certain tasks, such as taking an image of a giraffe captured in the Serengeti and giving a basic description of its content.

This is because GPT-4 was trained on image and text data, while its predecessors were trained only on text. OpenAI said the training data comes from "a variety of licensed, publicly available data sources, which may include publicly available personal information," but Brockman declined to provide details when asked. Training data has landed OpenAI in legal trouble before.

GPT-4's image understanding capabilities are impressive. For example, given an image and the prompt "What's so funny about this image?", GPT-4 will break down the entire image and correctly explain the punch line of the joke.

Currently, only one partner can use this capability of GPT-4: Be My Eyes, an assistive app for the visually impaired. Brockman said that a wider rollout, whenever it happens, will be "slow and deliberate" as OpenAI evaluates the risks and the pros and cons.

He also said: "There are policy issues that also need to be addressed, such as facial recognition and how to process images of people. We need to find out where the danger zones are, where the red lines are, and then find solutions over time."

OpenAI faced a similar ethical dilemma with its text-to-image system DALL-E 2. After initially disabling the feature, OpenAI allowed customers to upload faces and edit them with the AI-powered image generation system. At the time, OpenAI claimed that upgrades to its safety system made the face-editing feature possible because they minimized the potential harm from deepfakes and from attempts to create pornographic, political and violent content.

Another long-standing issue is preventing GPT-4 from being used in unintended ways that could cause harm. Hours after the model was released, Israeli cybersecurity startup Adversa AI published a blog post demonstrating how to bypass OpenAI's content filters and get GPT-4 to generate phishing emails, offensive descriptions of gay people, and other objectionable text.

This is not a new problem in the world of language models. BlenderBot, a chatbot from Facebook parent company Meta, and OpenAI’s ChatGPT have also been prompted into outputting inappropriate content and even revealing sensitive details of their inner workings. But many, including journalists, had hoped that GPT-4 might bring significant improvements in this regard.

When asked about the robustness of GPT-4, Brockman emphasized that the model has undergone six months of safety training. In internal testing, it did not respond to requests for content not allowed by the OpenAI usage policy. "We spent a lot of time trying to understand what GPT-4 is capable of," Brockman said. "We're continually updating it to include a range of improvements so that the model is more adaptable to the personality or mode people want it to have."

Frankly, the early real-world test results are not that reassuring. In addition to the Adversa AI test, Microsoft's chatbot Bing Chat also proved to be very easy to jailbreak. Using carefully crafted inputs, users have been able to get the chatbot to profess love, threaten harm, justify mass murder, and invent conspiracy theories.

Brockman did not deny that GPT-4 fell short in this area, but he highlighted the model's new mitigation tools, including an API-level feature called "system" messages. System messages are essentially instructions that set the tone and establish boundaries for interactions with GPT-4. For example, a system message might read: "You are a tutor who always answers questions in a Socratic style. You never give your students answers, but always try to ask the right questions to help them learn to think for themselves."

The idea is that system messages act as guardrails to prevent GPT-4 from going off track. "Really figuring out the tone, style and substance of GPT-4 has been a big focus of ours," Brockman said. "I think we're starting to understand more about how to do engineering, how to have a repeatable process that allows you to get predictable results that are actually useful to people."
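As a rough illustration of how a system message sits alongside a user prompt, here is a minimal sketch that only builds a request body and never sends it. The role/content message layout follows the chat-style convention used by OpenAI's API; the helper name `build_chat_request` and the sample question are assumptions made for this example, not something described in the interview.

```python
import json

# System message adapted from the tutor example quoted in the article.
SOCRATIC_TUTOR = (
    "You are a tutor who always answers questions in a Socratic style. "
    "You never give your students answers, but always try to ask the right "
    "questions to help them learn to think for themselves."
)


def build_chat_request(system_message: str, user_message: str,
                       model: str = "gpt-4") -> dict:
    """Hypothetical helper: assemble a chat request with a system message first.

    The system message comes before the user message so that it frames
    everything the model sees afterwards.
    """
    return {
        "model": model,
        "messages": [
            {"role": "system", "content": system_message},
            {"role": "user", "content": user_message},
        ],
    }


# Illustrative user question (an assumption for this sketch).
request = build_chat_request(SOCRATIC_TUTOR, "How do I solve 3x + 2 = 11?")
print(json.dumps(request, indent=2))
```

Keeping the system message separate from the user turn lets an application change tone or boundaries without touching what users type.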

Brockman also mentioned Evals, OpenAI's newly open-sourced software framework for evaluating the performance of its AI models, as a sign of OpenAI's commitment to "strengthening" its models. Evals lets users develop and run benchmarks that evaluate models (such as GPT-4) and inspect their performance, a crowdsourced approach to model testing.
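To make the idea of a user-written benchmark concrete, here is a minimal sketch of an exact-match evaluation loop. It does not use the Evals framework or its API; `run_exact_match_eval`, `toy_model`, and the sample prompts are all hypothetical stand-ins so that the example runs offline.

```python
from typing import Callable, List, Tuple


def run_exact_match_eval(model: Callable[[str], str],
                         samples: List[Tuple[str, str]]) -> float:
    """Return the fraction of prompts whose output equals the expected answer."""
    if not samples:
        raise ValueError("samples must not be empty")
    correct = sum(
        1 for prompt, expected in samples
        if model(prompt).strip() == expected.strip()
    )
    return correct / len(samples)


def toy_model(prompt: str) -> str:
    """Stand-in for a real model call (assumption: no network access here)."""
    answers = {"2 + 2 =": "4", "Capital of France?": "Paris"}
    return answers.get(prompt, "I don't know")


# Each sample pairs a prompt with the answer the benchmark expects.
samples = [("2 + 2 =", "4"), ("Capital of France?", "Paris"), ("3 * 3 =", "9")]
accuracy = run_exact_match_eval(toy_model, samples)
print(f"accuracy: {accuracy:.2f}")
```

Running the same sample set against successive model versions is one simple way to see what changed between them.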

Brockman said: "With Evals, we can better see the use cases that users care about and test against them. Part of the reason we open-sourced this framework is that we are moving away from releasing a new model every three months and toward making continuous improvements. You don't make what you don't measure, right? But as we roll out new versions of the model, we can at least know what changes have occurred."

Brockman was also asked whether OpenAI would compensate people for testing its models with Evals. He was reluctant to commit to this, but he did note that for a limited time, OpenAI is allowing early access to the GPT-4 API to Evals users who request it.

Brockman also talked about GPT-4’s context window, which refers to the text that the model can consider before generating additional text. OpenAI is testing a version of GPT-4 that can "remember" about 50 pages of content, five times the "memory" of regular GPT-4 and eight times the "memory" of GPT-3.
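The ratios above imply rough page counts for the other models. The short calculation below is only a back-of-the-envelope sketch: it takes the article's "about 50 pages" figure at face value and treats "pages" as a loose unit, so the results are approximate.

```python
# Figure quoted in the article for the long-context GPT-4 variant under test.
LONG_CONTEXT_PAGES = 50

# "Five times the memory of regular GPT-4" implies roughly 50 / 5 pages.
regular_gpt4_pages = LONG_CONTEXT_PAGES / 5

# "Eight times the memory of GPT-3" implies roughly 50 / 8 pages.
gpt3_pages = LONG_CONTEXT_PAGES / 8

print(f"regular GPT-4: ~{regular_gpt4_pages:g} pages")  # ~10 pages
print(f"GPT-3: ~{gpt3_pages:g} pages")                  # ~6.25 pages
```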

Brockman believes that the expanded context window will lead to new, previously unexplored use cases, especially in the enterprise. He envisioned an AI chatbot built for companies that could draw on background and knowledge from different sources, including employees across departments, to answer questions in a very knowledgeable but conversational way.

This is not a new concept. But Brockman believes GPT-4’s answers will be far more useful than those currently provided by other chatbots and search engines. "Before, the model had no idea who you were, what you were interested in, etc. And having a larger context window definitely makes it stronger, greatly enhancing the support it can provide people," he said. (Xiaoxiao)

The above is the detailed content of OpenAI President: GPT-4 is not perfect but it is definitely different. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

A new programming paradigm, when Spring Boot meets OpenAI

Feb 01, 2024 pm 09:18 PM

A new programming paradigm, when Spring Boot meets OpenAI

Feb 01, 2024 pm 09:18 PM

In 2023, AI technology has become a hot topic and has a huge impact on various industries, especially in the programming field. People are increasingly aware of the importance of AI technology, and the Spring community is no exception. With the continuous advancement of GenAI (General Artificial Intelligence) technology, it has become crucial and urgent to simplify the creation of applications with AI functions. Against this background, "SpringAI" emerged, aiming to simplify the process of developing AI functional applications, making it simple and intuitive and avoiding unnecessary complexity. Through "SpringAI", developers can more easily build applications with AI functions, making them easier to use and operate.

Choosing the embedding model that best fits your data: A comparison test of OpenAI and open source multi-language embeddings

Feb 26, 2024 pm 06:10 PM

Choosing the embedding model that best fits your data: A comparison test of OpenAI and open source multi-language embeddings

Feb 26, 2024 pm 06:10 PM

OpenAI recently announced the launch of their latest generation embedding model embeddingv3, which they claim is the most performant embedding model with higher multi-language performance. This batch of models is divided into two types: the smaller text-embeddings-3-small and the more powerful and larger text-embeddings-3-large. Little information is disclosed about how these models are designed and trained, and the models are only accessible through paid APIs. So there have been many open source embedding models. But how do these open source models compare with the OpenAI closed source model? This article will empirically compare the performance of these new models with open source models. We plan to create a data

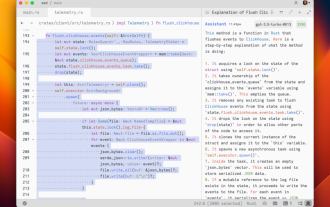

Rust-based Zed editor has been open sourced, with built-in support for OpenAI and GitHub Copilot

Feb 01, 2024 pm 02:51 PM

Rust-based Zed editor has been open sourced, with built-in support for OpenAI and GitHub Copilot

Feb 01, 2024 pm 02:51 PM

Author丨Compiled by TimAnderson丨Produced by Noah|51CTO Technology Stack (WeChat ID: blog51cto) The Zed editor project is still in the pre-release stage and has been open sourced under AGPL, GPL and Apache licenses. The editor features high performance and multiple AI-assisted options, but is currently only available on the Mac platform. Nathan Sobo explained in a post that in the Zed project's code base on GitHub, the editor part is licensed under the GPL, the server-side components are licensed under the AGPL, and the GPUI (GPU Accelerated User) The interface) part adopts the Apache2.0 license. GPUI is a product developed by the Zed team

Posthumous work of the OpenAI Super Alignment Team: Two large models play a game, and the output becomes more understandable

Jul 19, 2024 am 01:29 AM

Posthumous work of the OpenAI Super Alignment Team: Two large models play a game, and the output becomes more understandable

Jul 19, 2024 am 01:29 AM

If the answer given by the AI model is incomprehensible at all, would you dare to use it? As machine learning systems are used in more important areas, it becomes increasingly important to demonstrate why we can trust their output, and when not to trust them. One possible way to gain trust in the output of a complex system is to require the system to produce an interpretation of its output that is readable to a human or another trusted system, that is, fully understandable to the point that any possible errors can be found. For example, to build trust in the judicial system, we require courts to provide clear and readable written opinions that explain and support their decisions. For large language models, we can also adopt a similar approach. However, when taking this approach, ensure that the language model generates

Don't wait for OpenAI, wait for Open-Sora to be fully open source

Mar 18, 2024 pm 08:40 PM

Don't wait for OpenAI, wait for Open-Sora to be fully open source

Mar 18, 2024 pm 08:40 PM

Not long ago, OpenAISora quickly became popular with its amazing video generation effects. It stood out among the crowd of literary video models and became the focus of global attention. Following the launch of the Sora training inference reproduction process with a 46% cost reduction 2 weeks ago, the Colossal-AI team has fully open sourced the world's first Sora-like architecture video generation model "Open-Sora1.0", covering the entire training process, including data processing, all training details and model weights, and join hands with global AI enthusiasts to promote a new era of video creation. For a sneak peek, let’s take a look at a video of a bustling city generated by the “Open-Sora1.0” model released by the Colossal-AI team. Open-Sora1.0

The local running performance of the Embedding service exceeds that of OpenAI Text-Embedding-Ada-002, which is so convenient!

Apr 15, 2024 am 09:01 AM

The local running performance of the Embedding service exceeds that of OpenAI Text-Embedding-Ada-002, which is so convenient!

Apr 15, 2024 am 09:01 AM

Ollama is a super practical tool that allows you to easily run open source models such as Llama2, Mistral, and Gemma locally. In this article, I will introduce how to use Ollama to vectorize text. If you have not installed Ollama locally, you can read this article. In this article we will use the nomic-embed-text[2] model. It is a text encoder that outperforms OpenAI text-embedding-ada-002 and text-embedding-3-small on short context and long context tasks. Start the nomic-embed-text service when you have successfully installed o

Microsoft, OpenAI plan to invest $100 million in humanoid robots! Netizens are calling Musk

Feb 01, 2024 am 11:18 AM

Microsoft, OpenAI plan to invest $100 million in humanoid robots! Netizens are calling Musk

Feb 01, 2024 am 11:18 AM

Microsoft and OpenAI were revealed to be investing large sums of money into a humanoid robot startup at the beginning of the year. Among them, Microsoft plans to invest US$95 million, and OpenAI will invest US$5 million. According to Bloomberg, the company is expected to raise a total of US$500 million in this round, and its pre-money valuation may reach US$1.9 billion. What attracts them? Let’s take a look at this company’s robotics achievements first. This robot is all silver and black, and its appearance resembles the image of a robot in a Hollywood science fiction blockbuster: Now, he is putting a coffee capsule into the coffee machine: If it is not placed correctly, it will adjust itself without any human remote control: However, After a while, a cup of coffee can be taken away and enjoyed: Do you have any family members who have recognized it? Yes, this robot was created some time ago.

Sudden! OpenAI fires Ilya ally for suspected information leakage

Apr 15, 2024 am 09:01 AM

Sudden! OpenAI fires Ilya ally for suspected information leakage

Apr 15, 2024 am 09:01 AM

Sudden! OpenAI fired people, the reason: suspected information leakage. One is Leopold Aschenbrenner, an ally of the missing chief scientist Ilya and a core member of the Superalignment team. The other person is not simple either. He is Pavel Izmailov, a researcher on the LLM inference team, who also worked on the super alignment team. It's unclear exactly what information the two men leaked. After the news was exposed, many netizens expressed "quite shocked": I saw Aschenbrenner's post not long ago and felt that he was on the rise in his career. I didn't expect such a change. Some netizens in the picture think: OpenAI lost Aschenbrenner, I