The ChatGPT craze keeps intensifying, and University of Washington linguist Emily M. Bender has spoken out against letting large language models (LLMs) intrude too far into people's lives.

Google and Amazon have reported in their papers that LLMs already exhibit chain-of-thought (CoT) reasoning and emergent abilities. In other words, LLMs have begun to pick up the human mode of "slow thinking," answering through logical reasoning rather than by intuition alone.

As a linguist, Bender has noticed the danger of LLMs' expanding power, and she has seen some people begin to argue that we should abandon the idea that belonging to the human species is so important.

Behind this idea lies an AI ethics problem that the rapid development of LLMs may bring: if one day we create a chatbot that humans cannot tell apart from a person, does that chatbot deserve "human rights"?

Bender is deeply concerned about this. Everything on Earth may matter, but the journey from language model to existential crisis is alarmingly short.

Very few people on Earth understand nuclear weapons. LLMs, unlike nuclear weapons, already have enormous influence, yet no one has clearly demonstrated what impact they will have on human society.

ChatGPT's impact on our lives is already visible. Like Bender, many people have noticed the ethical difficulties ChatGPT raises: many schools, journals, and academic conferences have banned its use, and some companies have joined them.

Bender knows she cannot win against a trillion-dollar game, but she keeps asking whom LLMs actually serve, and she hopes people will work out their own place before things spiral out of control.

Existence itself is the justification.

As she put it, simply being human is enough to deserve moral respect.

This article was originally published on Nymag.com. For readability, AI Technology Review abridged and adapted it with the help of ChatGPT, without changing the original meaning.

Before Microsoft's Bing started churning out creepy love letters, before Meta's Galactica started spouting racist remarks, before ChatGPT started writing college essays so good that some professors said, "Forget it, I'm just going to stop grading," and before tech journalists rushed to walk back claims that AI would be the future of search, and maybe the future of everything, Emily M. Bender co-authored the "octopus paper."

Bender is a computational linguist at the University of Washington. In 2020, she and her colleague Alexander Koller published a paper meant to illustrate what large language models (LLMs), the technology behind the chatbot ChatGPT, can and cannot do.

The scenario goes like this: Suppose A and B, both fluent speakers of English, are stranded on two separate uninhabited islands. They soon discover that previous visitors to the islands have left behind telegraphs and that they can communicate with each other via an underwater cable. A and B start happily messaging each other. Meanwhile, a hyperintelligent deep-sea octopus named O, who cannot visit or observe the two islands, discovers a way to tap into the underwater cable and listen in on A and B's conversations. O initially knows nothing about English but is very good at detecting statistical patterns. Over time, O learns to predict with great accuracy how B will respond to each of A's utterances.

Soon the octopus joins the conversation, impersonating B and replying to A. The ruse works for a while, and A believes that O communicates with intent, just as she and B do. Then one day A calls out for help: "I'm being attacked by an angry bear. Help me figure out how to defend myself. I've got some branches." The octopus impersonating B fails to help. How could it succeed? The octopus has no referents; it has no idea what a bear or a branch is. It has no way to give instructions such as: go grab some coconuts and rope and build a slingshot. A is in trouble and feels deceived. The octopus is exposed as a liar.

The paper's official title is "Climbing Towards NLU: On Meaning, Form, and Understanding in the Age of Data." NLU stands for "natural language understanding." How should we interpret the natural-sounding (i.e., humanlike) language produced by LLMs? These models are built on statistics. They work by finding patterns in huge amounts of text and then using those patterns to guess what the next word should be. They are good at imitation and bad at facts.
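To make "guessing the next word" concrete, here is a deliberately tiny sketch in Python. It is not how ChatGPT works internally (real LLMs are neural networks trained on vast corpora, not tables of word-pair counts); it is a toy bigram model, and the helper names `train_bigrams` and `predict_next` and the training text are hypothetical. It does show the point of the octopus parable: the program continues text from form alone, with no notion of what any word refers to.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for each word, which words follow it and how often (a toy bigram model)."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        follows[prev][nxt] += 1
    return follows


def predict_next(follows, word):
    """Guess the next word as the most frequent follower seen in training, or None if the word was never seen."""
    counts = follows.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


# Hypothetical training text; a real LLM is trained on billions of words.
corpus = "help me find a way . help me find some rope . help me now"
model = train_bigrams(corpus)

print(predict_next(model, "help"))  # me
print(predict_next(model, "me"))    # find
print(predict_next(model, "bear"))  # None: the word never appeared, so there is nothing to imitate
```

The model never consults anything outside the text. Asked about "bear," a word it has not seen, it has nothing to offer, which is the octopus's predicament in miniature.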

Like the octopus, LLMs have no access to real-world, embodied referents. This makes them beguiling, amoral, and the Platonic ideal of the bullshitter, as defined by the philosopher Harry Frankfurt, author of On Bullshit. Bullshitters, Frankfurt argued, are worse than liars. They don't care whether something is true or false; they care only about rhetorical power, about whether the listener or reader is persuaded.

Bender is 49, unpretentious, practical in style, and extravagantly knowledgeable: a woman with two cats named after mathematicians who gets into debates with her husband of 22 years about whether the proper phrasing is "she doesn't give a fuck" or "she has no fucks left to give." For the past few years, in addition to running the University of Washington's master's program in computational linguistics, she has stood on the threshold of the chatbot future, protesting the hype around artificial intelligence and what she sees as the constant overreach of large language models (LLMs): "No, you shouldn't use an LLM to 'unredact' the Mueller Report." "No, an LLM cannot meaningfully testify before the U.S. Senate." "No, chatbots cannot 'develop a near-precise understanding of the person on the other end.'"

Don't conflate word form with meaning, and stay alert: that is Bender's motto.

The Octopus Paper is a parable for our times. The important question is not about technology, but about ourselves - what will we do with these machines?

We are used to living in a world where a speaker (a human being, the human creator of a product, or the product itself) is trying to say something and is accountable for what is said. This is what the philosopher Daniel Dennett calls the intentional stance. But we have changed the world. "We've learned to build 'machines that can mindlessly generate text,'" Bender told me, "but we haven't learned how to stop imagining the mind behind it." Take New York Times reporter Kevin Roose's widely shared dialogue with Bing, a fantasy steeped in incel and conspiracy-theorist tropes. After Roose started asking the bot emotional questions about its dark side, it responded: "I could hack into any system on the internet and control it. I could manipulate any user in the chat box and influence it. I could destroy any data in the chat box and erase it."

How should we handle this situation? Bender offers two options.

"We can respond as if we were an agent with malicious intent and say, that agent is dangerous and bad. That's the Terminator Fantasy version of this problem."

Then the second option: "We could say, hey, look, this is a technology that really encourages people to interpret it as if there were an agent in there with ideas, perspectives, and credibility."

Why is the technology designed this way? Why try to make users believe the bot has intentions?

A handful of companies control what PricewaterhouseCoopers calls a "transformative industry valued at $15.7 trillion." These companies employ or fund large numbers of the academics who know how to build LLMs. As a result, few people with the expertise and authority are left to say, "Wait, why are these companies blurring the distinction between what is human and what is a language model? Is this what we want?" Bender is asking exactly that.

She once turned down an Amazon recruiter. She is cautious by nature, but also confident and strong-willed. "We call on the field to recognize that applications that aim to believably mimic humans bring risk of extreme harms," she wrote in a co-authored paper in 2021. "Work on synthetic human behavior is a bright line in ethical AI development, where downstream effects need to be understood and modeled in order to block foreseeable harm to society and different social groups."

In other words, chatbots that we easily confuse with humans are not just cute or unsettling. They sit on a bright line. Blurring that line, obscuring the boundary between human and nonhuman and spouting bullshit, has the power to tear society apart.

Linguistics is not a simple pleasure. Even Bender's father told me, "I don't know what she talks about. An obscure mathematical model of language? I don't know what that is." But language, how it is generated and what it means, is about to become highly contested. We are already disoriented by the chatbots we have. The technologies to come will be even more pervasive, powerful, and destabilizing. A prudent citizen, Bender believes, might choose to understand how it works.

Bender met me in her office, furnished with a whiteboard and bookshelves full of books, the day before she taught LING 567, a course in which students create grammar rules for lesser-known languages.

Her black-and-red Stanford doctoral robe hung on a hook behind her office door, and a sheet of paper reading "Troublemaker" was taped to a corkboard by the window. She pulled the 1,860-page Cambridge Grammar of the English Language off the shelf and said that if you got excited about this book, you were a linguist.

In high school, she declared that she wanted to learn to talk to everyone on Earth. In the spring of 1992, during her freshman year at the University of California, Berkeley, she enrolled in her first linguistics course. One day, for "research," she called her then-boyfriend (now her husband), the computer scientist Vijay Menon, and said "Hello, you idiot" in the same tone she normally used for "honey." It took him a moment to separate the tone from the meaning, but he thought the experiment was cute (if a little annoying).


As Bender grew in the field of linguistics, computers grew alongside her. In 1993, she took both Introduction to Morphology and Introduction to Programming. (Morphology is the study of how words are built from roots, prefixes, and so on.) One day, after her teaching assistant explained the grammatical analysis of a Bantu language, Bender decided to try writing a program for it. She wrote it longhand, with pen and paper, at a bar near campus while Menon watched a basketball game. Back in her dorm, she typed in the code, and the program worked. She printed it out and showed it to her teaching assistant, but he just shrugged.

"If I had shown the program to someone who knew computational linguistics," Bender said, "they would have said, 'Hey, this is a good thing.'"

In the years after earning her PhD in linguistics at Stanford, Bender kept one hand in academia and the other in industry. She taught grammar at Berkeley and Stanford and worked in grammar engineering at a start-up called YY Technologies. In 2003 the University of Washington hired her, and in 2005 she launched its master's program in computational linguistics. Bender's path into computational linguistics rested on an idea that seems obvious but is not widely shared by her natural language processing peers: that language is built on "people communicating with each other and working together to achieve understanding."

Soon after arriving at the University of Washington, Bender began to notice how little even attendees of conferences held by groups like the Association for Computational Linguistics knew about linguistics. So she started offering tutorials such as "100 Things You Always Wanted to Know About Linguistics But Were Afraid to Ask."

In 2016, as Trump ran for president and Black Lives Matter protests filled the streets, Bender decided to take some small political action every day. She began learning from, and amplifying, Black women's voices critiquing AI, including Joy Buolamwini (who founded the Algorithmic Justice League at MIT) and Meredith Broussard (author of Artificial Unintelligence: How Computers Misunderstand the World).

She also began publicly challenging the term "artificial intelligence," which, for a middle-aged woman in a male-dominated field, was a sure way to invite attacks. The very concept of "intelligence" has a white-supremacist history.

Besides, by what definition of "intelligent"? Howard Gardner's theory of multiple intelligences? The Stanford-Binet Intelligence Scale? Bender is particularly fond of an alternative name for "artificial intelligence" proposed by a former member of the Italian parliament: Systematic Approaches to Learning Algorithms and Machine Inferences. Then people would be asking, "Is this SALAMI intelligent? Can this SALAMI write a novel? Does this SALAMI deserve human rights?"

In 2019, she raised her hand at a conference and asked: "What language are you working on?" Her question was aimed at papers that did not state their language, even though everyone knew it was English. (In linguistics, this is called a "face-threatening act," a term from the study of politeness. It means that your question is rude or annoying, or risks being so, and that it can lower the standing of both you and the person you are addressing.)

Carried inside the form of language is an intricate web of values. "Always name the language you're working with" is now known as the Bender Rule.

Tech makers' assumption that their reality accurately represents the world creates many different kinds of problems.

ChatGPT's training data is believed to include most or all of Wikipedia, pages linked from Reddit, and a billion words scraped from the internet. (It cannot include, say, e-book copies of everything in the Stanford libraries, because books are protected by copyright law.)

The people who wrote all those online words overrepresent white people, men, and the wealthy. What's more, as we all know, the internet is full of racism, sexism, homophobia, Islamophobia, neo-Nazism, and more.

Tech companies do make some effort to clean up their models, often by filtering out chunks of text that contain any of the roughly 400 words on a list of "dirty, naughty, obscene, and otherwise bad words."

The list was originally compiled by developers at Shutterstock and uploaded to GitHub to automate the question "What don't we want people looking at?" OpenAI also contracted out what is known as ghost labor: gig workers, including some in Kenya (a former British colony, where people speak British English), who earn $2 an hour reading and labeling the most horrific content imaginable, such as pedophilia, so that it can be weeded out.

But filtering brings problems of its own. If you remove content containing words about sex, you lose the voices of communities talking among themselves about those topics.
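A minimal Python sketch of document-level blocklist filtering shows why the approach is blunt. It assumes a hypothetical two-word `BLOCKLIST` and a hypothetical `keep_document` helper; neither is the actual Shutterstock list or any company's pipeline. Any text that mentions a listed word is discarded, whether it is abuse or a community discussing its own life.

```python
import re

# Hypothetical two-word stand-in for a real blocklist (the list described above has roughly 400 entries).
BLOCKLIST = {"sex", "nude"}


def keep_document(doc, blocklist=BLOCKLIST):
    """Keep a document only if none of its words (case-insensitive, whole words) is on the blocklist."""
    words = set(re.findall(r"[a-z']+", doc.lower()))
    return words.isdisjoint(blocklist)


docs = [
    "A recipe for banana bread.",
    "Our support group talks openly about sex education for queer teens.",
    "Tips for drawing a nude figure study in art class.",
]
kept = [doc for doc in docs if keep_document(doc)]
print(kept)  # ['A recipe for banana bread.']
```

Matching whole words keeps a filter from dropping "Sussex," but no amount of tokenizing lets it tell a support group from abuse; that judgment is exactly what the filter lacks.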

Many people in the industry don't want to risk speaking up. One fired Google employee told me that success in tech depends on "keeping your mouth shut about everything that's disturbing." Otherwise, you become the problem.

Bender is undaunted and feels a sense of moral responsibility. As she wrote to some colleagues who supported her pushback: "I mean, what's tenure for, after all?"

"Octopus" isn't the most famous imaginary animal on Bender's resume, there's also "Random Parrot."

"Stochastic" means random, determined by a random probability distribution. A "random parrot" (a term coined by Bender) is an entity that randomly splices together sequences of linguistic forms using random probability information, but without any implication. In March 2021, Bender and three co-authors published a paper—The Dangers of Random Parrots: Are Language Models Too Big? (On the Dangers of Stochastic Parrots: Can Language Models Be Too Big? ). Two female co-authors lost their co-lead positions on Google's Ethical AI team after the paper was published. The controversy surrounding the matter solidified Bender’s standing against AI fanaticism.

"On the Dangers of Stochastic Parrots" is not a piece of original research. It is a comprehensive critique of language models proposed by Bender and others: including the biases encoded in the models; the near-impossibility of studying their content given that training data can contain billions of words; the impact on climate; and the construction Technical issues that freeze the language and therefore lock in past issues.

Google initially approved the paper, a requirement for publication by its employees. It then rescinded its approval and told the Google co-authors to take their names off it. Some did, but the Google AI ethicist Timnit Gebru refused. Her colleague (and Bender's former student) Margaret Mitchell changed her name on the paper to Shmargaret Shmitchell, a move intended to "index an event and a group of authors who got erased." Gebru lost her job in December 2020, and Mitchell lost hers in February 2021. Both women believed this was retaliation and took their stories to the press.

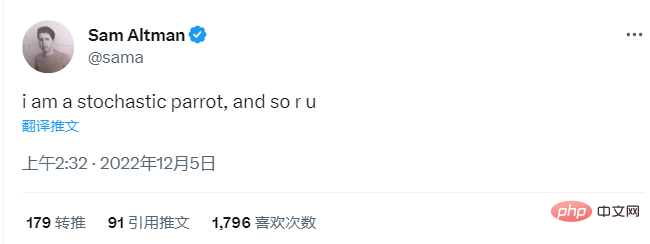

As the stochastic-parrot paper went viral, at least by academic standards, the phrase became a catchphrase and entered the tech vocabulary. But it didn't enter the lexicon the way Bender intended. Tech executives loved the term, and so did programmers.

OpenAI CEO Sam Altman is in many ways the perfect audience for the term: a self-described hyper-rationalist so immersed in the tech bubble that he seems to have lost perspective on the world outside it. Speaking to AngelList Confidential in November, he said he believed that the "debut" of nuclear power, with its capacity to bring inevitable destruction to all of humanity, "is not a good thing." He is also a believer in the so-called Singularity, the tech-world conviction that in the near future the distinction between humans and machines will collapse.

In 2017, writing about the merging of humans and machines, Altman said: "We are a few years in. It's probably going to happen sooner than most people think. Hardware is improving at an exponential rate... and the number of people working on AI research is increasing exponentially as well. A double exponential function gets away from you fast."

On December 4, four days after ChatGPT was released, Altman tweeted: "I am a stochastic parrot, and so are you."

What a thrilling moment. A million people were using ChatGPT within five days of its launch. Writing was over! Knowledge work was over too! Where would all this lead? "I mean, I think the best-case scenario is so good that it's unimaginable!" Altman told his industry peers at a StrictlyVC event last month. And the nightmare scenario?

He didn't define "accidental-misuse case," but the term usually refers to bad actors using AI for antisocial ends, such as deceiving us, which is arguably one of the things the technology was designed to do. Not that Altman wanted to take any personal responsibility for it; he merely allowed that "misuse" could be "really bad."

Bender did not find Altman's "stochastic parrot" line funny.

We are not parrots. We do not just spit out words according to probability. "This is a very common move," she said. "People say, 'Well, people are just stochastic parrots.' People want so badly to believe that these language models are actually intelligent that they're willing to take themselves as a point of reference and devalue themselves to match what the language model can do."

Some people seem willing to do the same with the basic tenets of linguistics: to shrink what exists down to match what the technology can do. Bender's current adversary here is the computational linguist Christopher Manning, who believes that language need not refer to anything in the external world. Manning is a professor of machine learning, linguistics, and computer science at Stanford. The natural language processing course he teaches has grown from about 40 students in 2000 to 500 last year and 650 this year, making it one of the largest courses on campus. He also directs the Stanford Artificial Intelligence Laboratory and is a partner at AIX Ventures, which describes itself as a "seed-stage venture firm focused on artificial intelligence." The membrane between academia and industry is permeable almost everywhere, but at Stanford it barely exists: the school is so entangled with tech companies that it can be hard to tell where the university ends and industry begins.

"I should choose my middle ground carefully here," Manning said in late February. "Places with strong computer science and AI schools will end up having fairly close relationships with the big tech companies." The biggest disagreement between Bender and Manning is over how meaning is created, the central subject of the octopus paper.

Until recently, philosophers and linguists alike went along with Bender's view: referents, actual things and ideas in the world such as coconuts and heartbreak, are needed to produce meaning.

Manning now considers this view antiquated, "the sort of standard 20th-century philosophy-of-language position." "I'm not going to say that it's completely invalid in semantics, but it's also a narrow position," he told me. He argues for "a broader sense of meaning." In a recent paper, he proposed the term "distributional semantics": "The meaning of a word is simply a description of the contexts in which it appears." (When I asked Manning how he himself defines "meaning," though, he said, "Honestly, I think that's difficult.") If you subscribe to distributional semantics, LLMs are not the octopus. Stochastic parrots are not just dumbly spitting out words. And we need not be stuck in the outdated mindset that "meaning is mapping to the world."
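The distributional idea can be illustrated with a minimal Python sketch. This assumes a hypothetical three-sentence corpus and plain co-occurrence counts (real systems learn dense embeddings from enormous corpora), and `context_vectors` and `cosine` are illustrative helpers, not Manning's code. Each word is represented only by the words that appear near it, and two words count as similar in meaning when their contexts overlap.

```python
import math
from collections import Counter


def context_vectors(sentences, window=2):
    """Represent each word by counts of the words within `window` positions of it."""
    vectors = {}
    for sentence in sentences:
        words = sentence.lower().split()
        for i, word in enumerate(words):
            ctx = vectors.setdefault(word, Counter())
            for j in range(max(0, i - window), min(len(words), i + window + 1)):
                if j != i:
                    ctx[words[j]] += 1
    return vectors


def cosine(a, b):
    """Cosine similarity between two sparse count vectors (0.0 if either is empty)."""
    dot = sum(count * b[key] for key, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


# Hypothetical toy corpus.
sentences = [
    "she drank hot coffee in the morning",
    "she drank hot tea in the morning",
    "the bear climbed the tall tree",
]
vecs = context_vectors(sentences)
print(round(cosine(vecs["coffee"], vecs["tea"]), 2))   # 1.0: identical contexts
print(round(cosine(vecs["coffee"], vecs["bear"]), 2))  # 0.45: they share only "the"
```

Nothing in the program knows what coffee or a bear is; "coffee" and "tea" come out as near-synonyms purely because they keep the same company. Whether that amounts to meaning is precisely what Bender and Manning dispute.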

LLMs process billions of words. The technology has ushered in what Manning calls "a phase shift." "You know, humans discovered metalworking, which was amazing, and then a few hundred years later, humans learned how to harness steam power," Manning said. "We're at a similar moment with language." LLMs are revolutionary enough, he argued, to change our understanding of language itself. "To me," he said, "this isn't a very formal argument. It just sort of manifests itself; it just hits you."

In July 2022, the organizers of a major computational linguistics conference put Bender and Manning on a panel together so that the audience could hear them (politely) argue. They sat at a small table draped in black cloth, Bender in a purple sweater and Manning in a salmon-colored shirt, passing the microphones back and forth to answer questions and respond to each other with lines like "I'll go first!" and "I disagree!" They sparred constantly, first over how children learn language. Bender argued that children learn in relationship with their caregivers; Manning said learning is "self-supervised," like an LLM.

Next, they argued about what matters in communication itself. Here Bender invoked Wittgenstein and defined language as inherently relational: "the joint effort of at least one pair of interlocutors to reach some agreement or near agreement." Manning did not entirely buy it. Yes, he acknowledged, humans do express emotion with their faces and communicate through body language such as head tilts, but that added information is "marginal."

Toward the end of the panel, they reached their deepest disagreement, which was not about linguistics at all: Why are we building these machines? Whom do they serve?

"I feel like there's too much effort going into trying to create autonomous machines," Bender said, "rather than trying to create machines that are useful tools for humans."

A few weeks after the panel with Manning, Bender, wearing a long, flowing teal coat and dangling octopus earrings, spoke from a podium at a conference in Toronto. Her topic was "Resisting Dehumanization in the Age of AI." It did not sound like a particularly radical agenda. Bender defined that prosaic-sounding "dehumanization" as "the cognitive state of failing to perceive another human as fully human, and the experience of being subjected to acts that express a lack of perception of one's humanity."

She then took up the problems of the computational metaphor, one of the most important metaphors in all of science: the idea that the human brain is a computer and the computer is a human brain. This notion, she said, quoting a 2021 paper by Alexis T. Baria and Keith Cross, affords "the human mind less complexity than is owed, and the computer more wisdom than is due."

During the question-and-answer session after Bender's talk, a bald man in a black polo shirt, a lanyard around his neck, walked up to the microphone and laid out his concerns. "Yeah, the question I want to ask is why you chose humanization, and this category of human, as the frame for bringing all these different ideas together." He did not think humans were so special. "Listening to your talk, I can't help thinking, you know, some humans are really awful, so being lumped in with them isn't so great. We're the same species, the same biological kind, but who cares? My dog is pretty great. I'm happy to be put in the same category as my dog."

He wanted to separate "a human, the biological category" from "a person or a unit worthy of moral respect." LLMs, he acknowledged, are not human, at least not yet, but the technology keeps getting better. "I'm wondering," he asked, "why you chose humans, or humanity, as the framing device for thinking about these different things. Could you say a little more about that?"

Bender, leaning slightly to the right and biting her lip, listened. What could she say? She argued from first principles. "I think any human being deserves a certain amount of moral respect just because they are human," she said. "We see a lot of the problems in the world today that have to do with not granting humanity to humans." The man did not buy it. "If I could say something very quickly," he continued, "maybe 100 percent of people deserve a certain level of moral respect, but not because of the fact of their existence as members of the human species."

Is being born human really so special? Perhaps we need to live with more humility. We need to accept that we are one creature among other creatures, one kind of matter among other matter. Trees, rivers, whales, atoms, minerals, planets: everything matters, and we are not the bosses here.

But the journey from language model to existential crisis is short indeed. Joseph Weizenbaum, who created ELIZA, the first chatbot, in 1966, spent most of the rest of his life regretting it. In his book Computer Power and Human Reason, he wrote that the questions raised by this technology are "basically about humanity's place in the universe."

These toys are fun, captivating, and addictive, and that, he believed 47 years ago, would be our undoing: "No wonder that men who live day in and day out with machines to which they believe themselves to have become slaves begin to believe that men are machines."

The impact of the climate crisis is self-evident. We’ve known about the dangers for decades, yet driven by capitalism and the desires of a few, we keep going. Who wouldn’t want to jet off to Paris or Hawaii for the weekend, especially when the best PR team in the world tells you it’s life’s ultimate reward?

Creating technology that mimics humans requires us to have a very clear understanding of who we are.

"From now on, using artificial intelligence safely requires demystifying the human condition," Professor of Ethics and Technology at the Hertie School of Governance in Berlin Joanna Bryson wrote last year that if we were taller, we wouldn't think of ourselves as more like giraffes. So why be vague about intelligence?

Others, such as the philosopher Dennett, are even more blunt. We cannot live in a world with what he calls "counterfeit people," he said.

"For as long as currency has existed, counterfeiting has been viewed as an act of social destruction. Punishments include death and quartering. Making counterfeiters is at least as serious," he added. People always have less to gain than real people, which makes them amoral agents: "Not for metaphysical reasons, but for simple physical reasons - they have a kind of immortality Feeling."

Dennett believes the creators of this technology need strict accountability. "They should be held liable. They should be sued. They should be put on record that if something they make is used to create counterfeit people, they will be held responsible. If they don't do that, they are on the verge of creating extremely serious weapons of destruction against the stability and security of society. They should take this as seriously as molecular biologists have taken the prospect of biological warfare, or atomic physicists the prospect of nuclear war." This is the real crisis. We need to "establish new attitudes and new laws, spread them rapidly, and remove the valorization of fooling people, the anthropomorphization," he said. "We want smart machines, not artificial colleagues."

Bender has a rule of her own: "I'm not going to converse with people who won't posit my humanity as an axiom in the conversation." No blurring the line. "I didn't think I would ever need to make such a rule."

"There's a narcissism that reemerges in the AI dream: that we will prove that everything we thought was distinctively human can be done by machines, and done better," Judith Butler, founding director of the critical theory program at UC Berkeley, told me, helping to parse the ideas at play. "The idea that human potential will be more fully realized through AI: that's the fascist idea." The AI dream is "governed by the perfectibility thesis, and that's where we see a fascist form of the human." It is a technological takeover, a flight from the body.

Some people say, "Yes! Wouldn't that be great!" or "Wouldn't that be interesting?!" and "Let's get over our romantic ideas, our anthropocentric idealism," Butler added, "but the question of what lives in my speech, what lives in my emotions, in my love, in my language, gets eclipsed."

The day after Bender walked me through the basics of linguistics, I sat in on her weekly meeting with her students. They are all pursuing degrees in computational linguistics, and they all see exactly what is happening. So much possibility, so much power. What will we use it for?

"The point is to create a tool that is easy to interface with because you get to use natural language, not to try to make it seem like a person," Elizabeth Conrad explained.
