Why is Weibo addictive? Decryption of the behind-the-scenes recommendation algorithm

On July 13, 2021, young people who had worked hard all day were about to lie down, take out their phones, open the familiar "Xiaopozhan" (Bilibili) app, and catch up on the latest videos from their favorite uploaders with one tap.

Instead, they suddenly found the screen going dark:

A year later, Bilibili (Station B) finally revealed the secret behind it: a "scheming 0".

However, have you ever wondered why Weibo has not collapsed even when hit by a crazy influx of users?

What is the relationship between AI and Weibo?

Before uncovering this mystery, we need to start with the development of artificial intelligence.

On July 27, the 2022 New Wise Conference of "Integrating Ecology and Co-creating Value", guided by the Internet Society of China and hosted by Weibo and Sina News, was successfully held.

Under the topic "Intelligence drives everything: AI promotes the accelerated arrival of the Internet of Everything", Wang Wei, Weibo COO, Sina Mobile CEO, and Dean of the Sina AI Media Research Institute, delivered a keynote speech titled "Cloud Empowers Weibo's Complex Business Scenarios for Integrated Applications of Digital and Intelligent Technology".

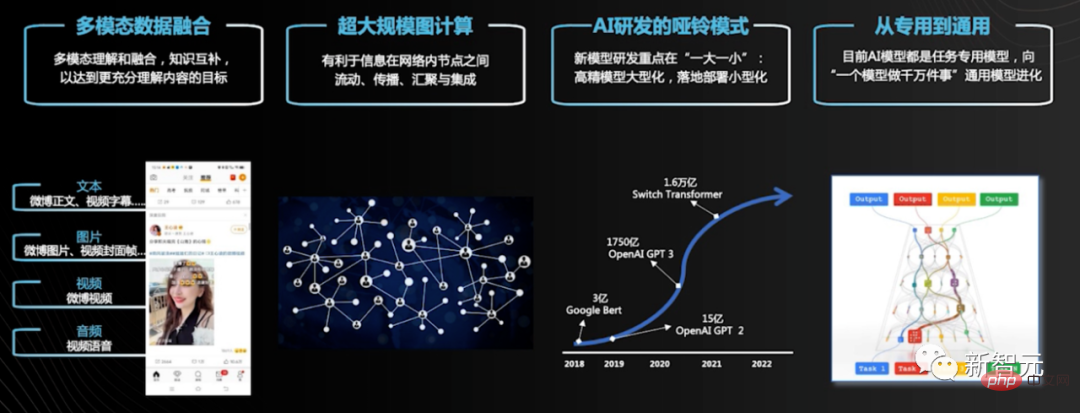

Wang Wei said that if we look back at the development history of machine learning, we can see that the overall trend of AI is toward massive and diverse training data, more complex and more general AI models, and more efficient and larger-scale computing power.

The first is multi-modal data fusion.

With the rapid development of 5G, images and video account for a growing share of online content, so fusing modalities is essential.

For Weibo, if we can fuse text, pictures, and video at the same time, we can better understand the content of a Weibo post.

The second is ultra-large-scale graph computing.

Compared with other machine learning models, ultra-large-scale graph computing has a special advantage: by passing information along the edges of the network, it lets information flow, aggregate, and merge.

For example, for a cold-start user with very little behavior data, we can infer the user's interests by propagating information from the accounts in their follow list and from the content those accounts post.
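The cold-start idea can be sketched in a few lines. This is only an illustration under stated assumptions: the `follows` and `posts_by_author` data, the topic labels, and the `infer_interests` helper are all hypothetical, and a production graph model would propagate learned embeddings rather than count topic labels.

```python
from collections import defaultdict

# Hypothetical toy data: who the new user follows, and the topic of each post
# published by those accounts.
follows = {
    "new_user": ["alice", "bob"],
}
posts_by_author = {
    "alice": ["football", "football", "music"],
    "bob": ["football", "tech"],
}

def infer_interests(user, follows, posts_by_author):
    """Aggregate topics from followed accounts into a normalized interest distribution."""
    counts = defaultdict(float)
    for followee in follows.get(user, []):
        topics = posts_by_author.get(followee, [])
        if not topics:
            continue
        # Each followed account contributes one unit in total,
        # no matter how many posts it has published.
        for topic in topics:
            counts[topic] += 1.0 / len(topics)
    total = sum(counts.values())
    return {topic: c / total for topic, c in counts.items()} if total else {}

interests = infer_interests("new_user", follows, posts_by_author)
top_interest = max(interests, key=interests.get)
```

Even though `new_user` has no behavior of their own, the distribution is dominated by the topic that both followed accounts post about.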

The third is the dumbbell pattern of AI development.

Current AI research and development concentrates on two ends: ever-larger super models on one side, and model miniaturization techniques on the other.

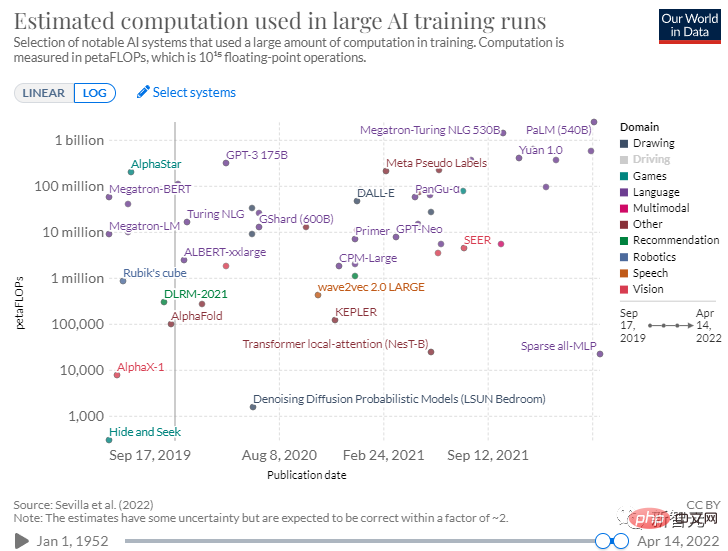

We all know that as the number of model parameters grows, model quality keeps improving, and high-precision models keep getting larger. For example, when Google's BERT came out in 2018, its parameter count was about 300 million, which was not especially large, but the number has grown rapidly ever since.

The GPT-2 model developed by OpenAI has 1.5 billion parameters, GPT-3 has 175 billion, and the Switch Transformer released by Google in 2021 reached 1.6 trillion.

On the other hand, although larger models tend to perform better, a model that is too large can be impractical to deploy in real applications. Therefore, the other focus of research and development is making these large models smaller and lighter through techniques such as model distillation and model pruning.
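To make "model distillation" a little more concrete, here is a minimal sketch of the loss a small student model could minimize in order to imitate a large teacher. It assumes the common formulation (KL divergence between temperature-softened output distributions); the talk does not say which variant Weibo uses, and the function names and the temperature value are illustrative only.

```python
import math

def softmax(logits, temperature=1.0):
    """Numerically stable softmax over temperature-scaled logits."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL(teacher || student) computed on temperature-softened distributions."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q))

teacher = [4.0, 1.0, 0.5]
close_student = [3.8, 1.1, 0.4]   # roughly agrees with the teacher
far_student = [0.5, 1.0, 4.0]     # ranks the classes the other way round

loss_close = distillation_loss(teacher, close_student)
loss_far = distillation_loss(teacher, far_student)
```

The loss is zero when the student reproduces the teacher's distribution exactly and grows as the two diverge, so `loss_close` is much smaller than `loss_far`.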

Fourth, AI models are moving from specialized models to general-purpose models.

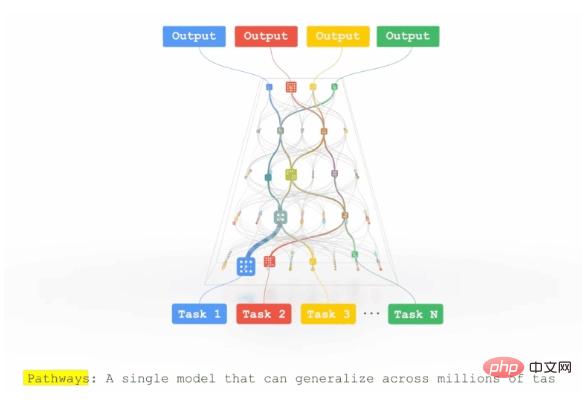

Google disclosed the Pathways model framework in the second half of 2021. Google was the first to propose this idea, hoping that by building one general-purpose large model it could reach the goal of "one model that can do thousands upon thousands of things".

The specific idea is that when data for different tasks is fed in, a routing algorithm selects part of the neural network as the path to the model's output layer. Different tasks therefore share some parameters while also having task-specific model parameters.
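A toy version of this routing idea is sketched below. It uses top-k gating, a technique known from sparse mixture-of-experts models, as a stand-in: the actual Pathways routing algorithm is not described in the talk, and the experts, gate scores, and function names here are hypothetical.

```python
import math

def top_k_route(gate_scores, k=2):
    """Pick the k highest-scoring experts and softmax-normalize their weights."""
    top = sorted(range(len(gate_scores)), key=lambda i: gate_scores[i], reverse=True)[:k]
    m = max(gate_scores[i] for i in top)
    exps = {i: math.exp(gate_scores[i] - m) for i in top}
    total = sum(exps.values())
    return [(i, exps[i] / total) for i in top]

# Hypothetical experts: each sub-network is reduced to a function on one number.
experts = [lambda x: x + 1, lambda x: x * 2, lambda x: x - 3, lambda x: x * x]

def forward(x, gate_scores, k=2):
    """Run only the selected experts; the rest of the network is skipped."""
    return sum(weight * experts[i](x) for i, weight in top_k_route(gate_scores, k))

# Two tasks with hypothetical gate scores: both use expert 1 (shared parameters),
# while experts 0 and 3 are each used by only one of the tasks.
task_a_path = top_k_route([2.0, 2.0, -1.0, 0.0])
task_b_path = top_k_route([-1.0, 2.0, 0.0, 2.0])
output_a = forward(3.0, [2.0, 2.0, -1.0, 0.0])
```

In a real model the gate scores would be produced by a learned layer from the input, rather than written by hand per task.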

Super large-scale graph with 1 billion nodes and 10 billion edges

Why have we spent so long talking about machine learning? Because what comes next is the "Weibo Featured Recommendation System".

As we all know, as the largest social media network in China, Weibo’s current monthly active users have reached 582 million! Such a large user scale will inevitably make the network environment on Weibo very complex.

On top of that, content on Weibo is highly time-sensitive and highly diverse: any major Internet event of the day explodes on Weibo immediately.

In addition, the scenarios Weibo faces are highly diversified: it must distribute personalized content, "a thousand faces for a thousand people", to users across many scenarios such as the relationship feed, the hot-topic feed, and the video feed.

I can live without fingers, but I can’t live without my phone

Faced with complex business scenarios, how does Weibo use AI and big data to create a recommendation system that can adapt to changing circumstances?

Wang Wei explained that the Weibo recommendation system consists of three parts: content understanding, user understanding, and the recommendation system itself.

First is content understanding.

To understand what a Weibo post is saying, it is not enough to understand the text alone. Multi-modal understanding technology is needed to integrate the post's text, pictures, video, and other media information.

To this end, Weibo has trained its own multi-modal pre-training model, using contrastive learning, a self-supervised learning method, for the multi-modal pre-training.

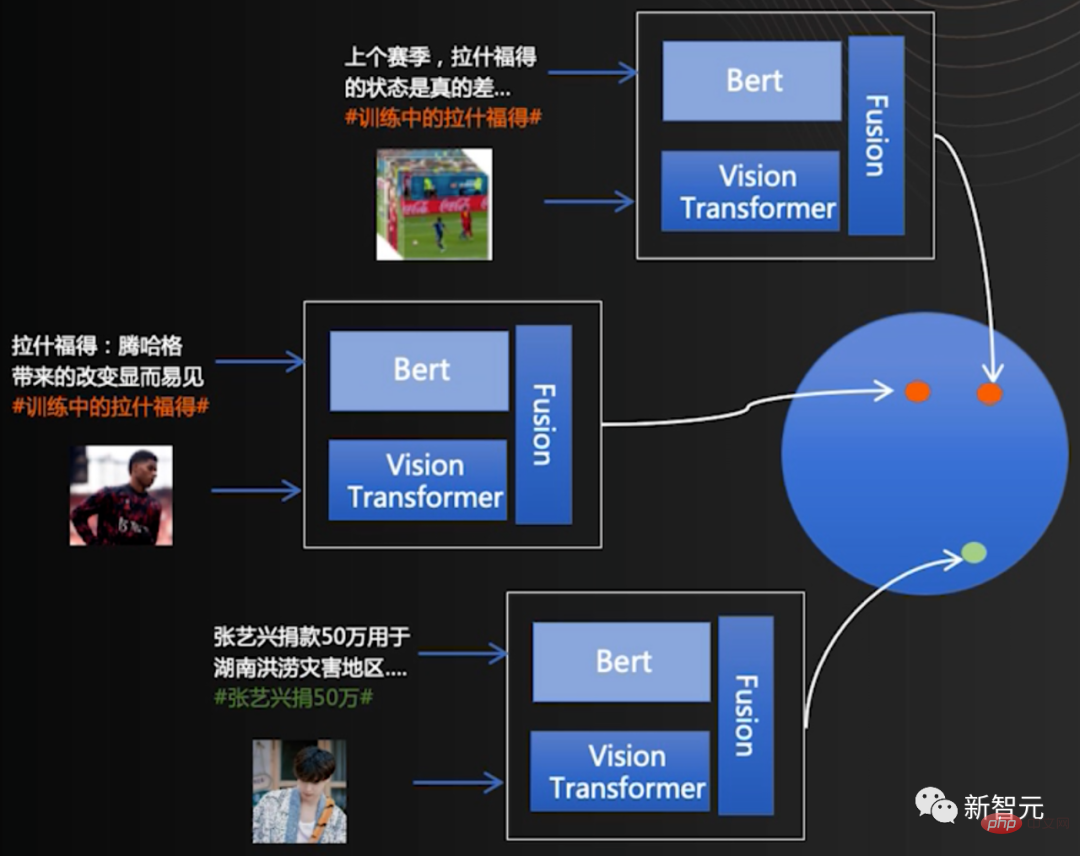

The example in the figure shows how Weibo uses its own "topics" to construct training data automatically.

For example, two Weibo posts that both carry the topic "Rashford in training" are taken as a positive pair, and some randomly selected posts from different topics are used as negative examples, so training data can be constructed automatically.

For a given Weibo post, the text is encoded by BERT, the images and video are encoded by ViT, and the results are then fused through a fusion sub-network to form the post's embedding. This is the pre-training process.

After pre-training, the trained Weibo encoder can encode new Weibo content multi-modally into embeddings, which can then be used in downstream tasks such as recommendation.
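The data construction and the training objective described above can be sketched as follows. Everything here is illustrative: the posts, the `build_examples` and `info_nce` helpers, and the choice of an InfoNCE-style loss are assumptions, since the talk only says that contrastive learning is used. In the real system the vectors would come from the BERT and ViT encoders plus the fusion sub-network.

```python
import math
import random

# Hypothetical posts: (post id, topic hashtag).
posts = [
    ("p1", "Rashford in training"),
    ("p2", "Rashford in training"),
    ("p3", "New phone launch"),
    ("p4", "Weekend recipes"),
    ("p5", "New phone launch"),
]

def build_examples(posts, num_negatives=2, seed=0):
    """For every same-topic pair, emit (anchor, positive, negatives from other topics)."""
    rng = random.Random(seed)
    examples = []
    for i, (a_id, a_topic) in enumerate(posts):
        for b_id, b_topic in posts[i + 1:]:
            if a_topic != b_topic:
                continue
            others = [pid for pid, topic in posts if topic != a_topic]
            negatives = rng.sample(others, min(num_negatives, len(others)))
            examples.append((a_id, b_id, negatives))
    return examples

def cosine(u, v):
    dot = sum(x * y for x, y in zip(u, v))
    return dot / (math.sqrt(sum(x * x for x in u)) * math.sqrt(sum(y * y for y in v)))

def info_nce(anchor, positive, negatives, temperature=0.1):
    """Contrastive loss: small when the anchor is closer to the positive than to the negatives."""
    logits = [cosine(anchor, positive) / temperature]
    logits += [cosine(anchor, n) / temperature for n in negatives]
    m = max(logits)
    log_sum = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum - logits[0]

examples = build_examples(posts)
# Toy 2-d embeddings standing in for encoder outputs.
good = info_nce([1.0, 0.0], [1.0, 0.0], [[0.0, 1.0]])
bad = info_nce([1.0, 0.0], [0.0, 1.0], [[1.0, 0.0]])
```

Minimizing this loss pulls posts from the same topic together in embedding space and pushes posts from different topics apart, which is why `good` is far smaller than `bad`.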

Second, for user understanding, Weibo uses ultra-large-scale graph computing to better understand users' reading interests. After all, Weibo is social media by nature, which makes it a natural fit for large-scale graph computing.

Using users and blog posts as nodes, and building edges from the follow relationships between users and from interactions between users and posts such as reading, reposting, commenting, and liking, Weibo has built a very large graph containing 1 billion nodes and 10 billion edges.

Through the propagation, aggregation, and merging of information in large-scale graph computing, embedding vectors that represent user interests can be formed to better understand those interests.

In this way, the follow relationships between users and the reposts, comments, and likes between users and posts can all be handled at the same time.
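The sketch below shows the shape of such a graph on a tiny scale: users and posts are both nodes, every interaction becomes an edge, and one round of message passing mixes each node's vector with the mean of its neighbors. The event log, the node naming scheme, the 50/50 mixing weight, and the 2-d vectors are all hypothetical; Weibo's actual graph model is not specified in the talk.

```python
from collections import defaultdict

# Hypothetical interaction log: (source node, edge type, target node).
events = [
    ("user:u1", "follow", "user:u2"),
    ("user:u1", "like", "post:p1"),
    ("user:u2", "repost", "post:p1"),
    ("user:u2", "comment", "post:p2"),
]

def build_graph(events):
    """Undirected adjacency list in which users and posts are both nodes."""
    adjacency = defaultdict(list)
    for src, edge_type, dst in events:
        adjacency[src].append((edge_type, dst))
        adjacency[dst].append((edge_type, src))
    return adjacency

def propagate(adjacency, embeddings):
    """One round of message passing: blend each node with the mean of its neighbors."""
    updated = {}
    for node, vec in embeddings.items():
        neighbors = [embeddings[n] for _, n in adjacency.get(node, []) if n in embeddings]
        if not neighbors:
            updated[node] = list(vec)
            continue
        mean = [sum(column) / len(neighbors) for column in zip(*neighbors)]
        updated[node] = [0.5 * v + 0.5 * m for v, m in zip(vec, mean)]
    return updated

graph = build_graph(events)
# Hypothetical starting vectors; u1 has no signal of its own yet.
embeddings = {
    "user:u1": [0.0, 0.0],
    "user:u2": [1.0, 0.0],
    "post:p1": [0.0, 1.0],
    "post:p2": [1.0, 1.0],
}
after_one_round = propagate(graph, embeddings)
```

After a single round, `user:u1` already carries information from both the account it follows and the post it liked; repeating the step spreads information across longer paths.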

Once it understands what posts are saying and what Weibo users are interested in, the Weibo recommendation system distributes high-quality posts to interested users in a personalized manner.

So, how can an efficient recommendation system be built in such a complex scenario?

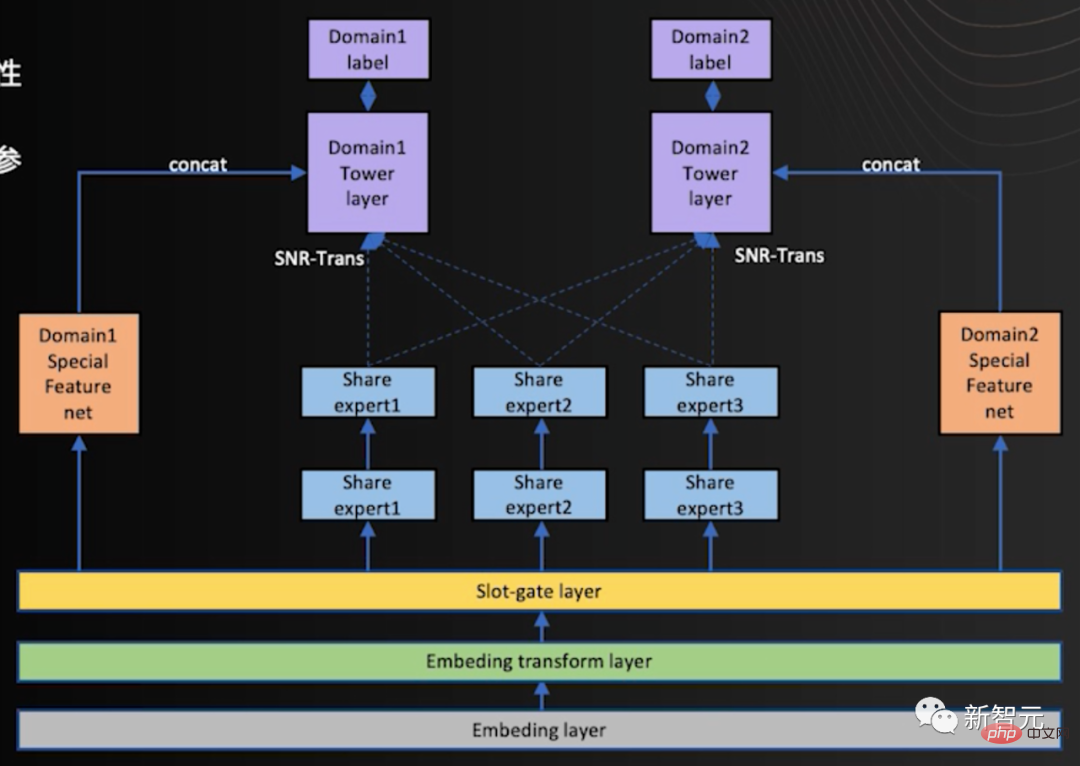

Weibo adopts a multi-scenario modeling approach. The ideal situation is to build only one recommendation model and use it to serve multiple scenarios.

So how to express the commonality and individuality between scenes? Network parameters can be shared between scenes, or the scenes can have exclusive private network parameters to reflect the commonality and individuality of the scenes.

For example, in this model diagram, the parameters of the underlying feature input layer and of some of the "expert sub-networks" in the middle of the network are shared by all scenes, while the parameters of the other sub-networks are exclusive to a particular scene.

In this way, one model can serve multiple scenes and save model resources.
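The shared-versus-private split can be illustrated with a deliberately small sketch. The experts are plain functions, the scene names are made up, and the outputs are simply averaged; a real multi-scenario model would use learned sub-networks and learned gates, none of which are detailed in the talk.

```python
# Hypothetical expert sub-networks, reduced to simple functions of a feature vector.
def shared_expert_a(features):
    return sum(features)

def shared_expert_b(features):
    return max(features)

def hot_feed_expert(features):
    return features[0] * 2.0

def video_feed_expert(features):
    return features[-1] * 3.0

# Shared experts are used by every scene; scene experts belong to one scene only.
SHARED_EXPERTS = [shared_expert_a, shared_expert_b]
SCENE_EXPERTS = {
    "hot_feed": [hot_feed_expert],
    "video_feed": [video_feed_expert],
}

def score(features, scene):
    """Average the shared experts together with the experts private to this scene."""
    active = SHARED_EXPERTS + SCENE_EXPERTS[scene]
    outputs = [expert(features) for expert in active]
    return sum(outputs) / len(outputs)

features = [1.0, 2.0, 3.0]
hot_score = score(features, "hot_feed")
video_score = score(features, "video_feed")
```

The same input gets a different score per scene, yet only one extra expert per scene has to be stored, which is where the resource saving comes from.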

Tangshan incident: what to do when traffic doubles?

Now, let’s go back to the original “suspense”.

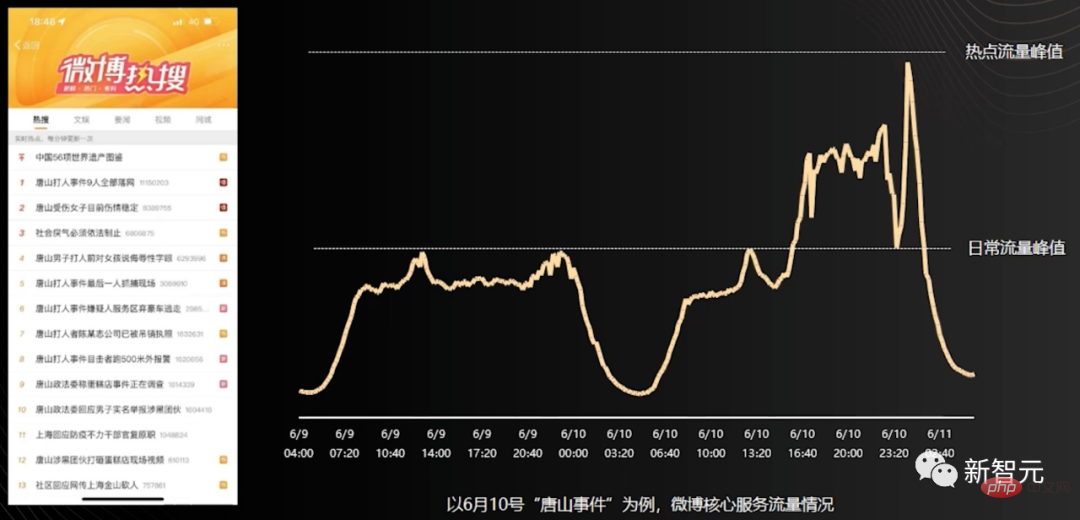

For Weibo, hot events that can make the service "blow up" if it is not properly protected have always been a very big challenge.

Take the "Tangshan Incident", which recently attracted widespread attention: on the day of the incident, hot traffic reached fully double the usual daily traffic peak.

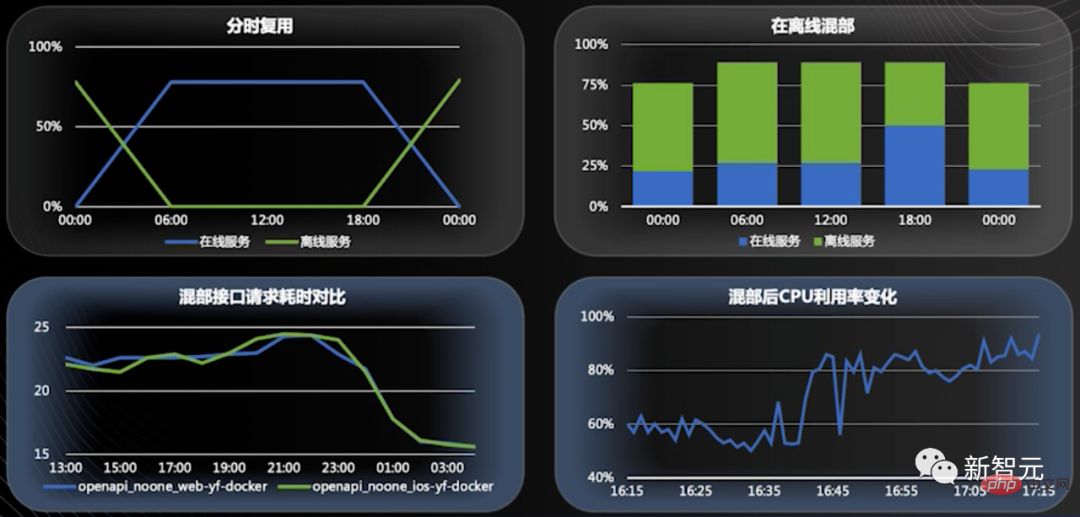

In this regard, Wang Wei said that Weibo adopted Docker containerization for its microservices very early, which not only improves the efficiency of service operation and maintenance but also lets services scale out and in dynamically. Currently, Weibo can schedule more than 10,000 servers within 10 minutes, giving it enough servers to handle hot traffic.
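A back-of-the-envelope calculation shows what that scheduling rate means when traffic doubles. Only the figure of 10,000 servers per 10 minutes comes from the talk; the current fleet size of 5,000 servers, the 25% headroom, and both helper functions are hypothetical.

```python
import math

def extra_servers_needed(current_servers, traffic_multiplier, headroom=0.25):
    """Servers to add so that capacity covers the new peak plus a safety margin."""
    target = math.ceil(current_servers * traffic_multiplier * (1.0 + headroom))
    return max(0, target - current_servers)

def minutes_to_scale(extra_servers, servers_per_window=10_000, window_minutes=10):
    """Time needed at a scheduling rate of servers_per_window every window_minutes."""
    return extra_servers / servers_per_window * window_minutes

# Hypothetical service currently running on 5,000 servers when traffic doubles.
extra = extra_servers_needed(current_servers=5_000, traffic_multiplier=2.0)
eta_minutes = minutes_to_scale(extra)
```

Under these assumed numbers the service needs 7,500 more servers, which the quoted scheduling rate could supply in 7.5 minutes.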

In addition, Weibo has established a hotspot monitoring mechanism and a hotspot linkage system, and its independently developed Weibo Mesh technology enables efficient cross-language calls between different services, improving overall service performance and the efficiency of linked scale-out.

Finally, Weibo adopts hybrid deployment of offline and real-time workloads, combining real-time preemptive CPU scheduling with containerization to achieve this capability for Weibo services.

With all of the above combined, when hot traffic arrives, the core services can absorb it within seconds.

Finally, let us review the development history of the Internet.

If the PC Internet is the beginning of the online world, then the rise of the mobile Internet allows us to put this invisible information space in our pockets. With the overlay and integration of big data, cloud computing, artificial intelligence and other technologies with the mobile Internet, we have entered the era of intelligent information.

Now, the hottest topic is the metaverse. Since last year, it has triggered extensive discussion of digital twins, digital humans, XR, blockchain technology, and more.

Wang Wei believes that the current application scenarios based on cutting-edge technologies such as AI, blockchain, and XR have already reflected some prototypes of the metaverse. Fields such as gaming and social networking are very good application scenarios for the Metaverse, which will ignite everyone's enthusiasm for participating in the Metaverse.
