Microsoft puts another $10 billion into OpenAI! After four years of AI maneuvering among the tech giants, who is the biggest winner?

At the start of 2023, Microsoft already looks like a "big winner" in the field of AI.

Lately, sparks have been flying between the wildly popular ChatGPT and Microsoft.

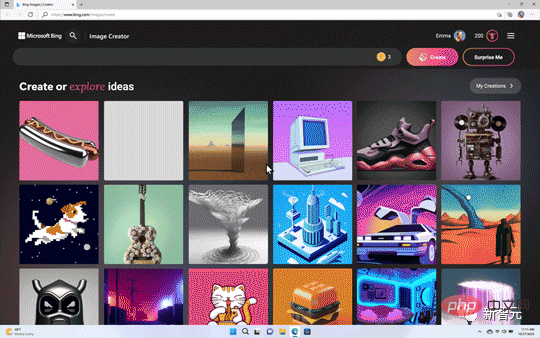

Microsoft first announced that it would integrate ChatGPT into its own search engine, Bing. A few days later, it announced that it would also integrate it into the "big three" of the Office suite: Word, Excel, and PowerPoint. The prospect of using ChatGPT from within Office has delighted many people.

Given Microsoft's scale in office software, this move may change the way more than 1 billion people write documents, presentations and emails.

Back in 2019, Microsoft invested US$1 billion in OpenAI, the maker of ChatGPT. The recent flurry of "interaction" set the industry speculating: it seemed only natural that Microsoft would next raise its stake, or even acquire OpenAI and ChatGPT outright.

There were no surprises, no reversals, and people didn’t have to wait too long. Microsoft gave the answer: invest another $10 billion.

Microsoft invests $10 billion

According to people familiar with the matter, Microsoft began negotiating an additional investment with OpenAI as early as October last year.

If the funding is finalized, including new investments, OpenAI would be valued at $29 billion.

According to reports, Microsoft’s capital injection will be part of a complex transaction. After the investment, Microsoft will receive 75% of OpenAI’s profits until the investment is recovered.

Previously, OpenAI had been purchasing services from Microsoft's cloud computing division. It is not yet known whether that spending will count toward settling the account.

Once the investment is recouped, OpenAI's ownership structure would give Microsoft 49% of the shares, other investors another 49%, and OpenAI's non-profit parent company the remaining 2%.
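The reported terms can be sketched as a simple two-phase calculation. This is only an illustration under stated assumptions: the amount to be recovered is taken to be the $10 billion, profit after recoupment is assumed to be split pro rata to the 49% stake, and the function name and parameters are hypothetical. The actual contract has not been made public.

```python
def microsoft_cumulative_take(cumulative_profit_usd,
                              investment_usd=10e9,   # assumed amount to recoup
                              recoup_rate=0.75,      # 75% of profits, per reports
                              equity_stake=0.49):    # 49% stake after recoupment
    """Estimate Microsoft's total take from OpenAI's cumulative profit (USD)."""
    # Cumulative profit OpenAI must earn before 75% of it covers the investment.
    recoup_threshold = investment_usd / recoup_rate
    if cumulative_profit_usd <= recoup_threshold:
        # Phase 1: Microsoft receives 75% of every dollar of profit.
        return recoup_rate * cumulative_profit_usd
    # Phase 2 (assumption): profit beyond the threshold is shared pro rata.
    return investment_usd + equity_stake * (cumulative_profit_usd - recoup_threshold)


if __name__ == "__main__":
    for profit in (4e9, 13.4e9, 20e9):
        take = microsoft_cumulative_take(profit)
        print(f"cumulative profit ${profit / 1e9:.1f}B -> Microsoft ${take / 1e9:.2f}B")
```

Under these assumptions, OpenAI would need roughly $13.3 billion in cumulative profit before the 75% share gives way to the 49% stake.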

According to reports, it is unclear whether the transaction has been finalized, but relevant documents recently received by potential investors show that the transaction was originally scheduled to be completed before the end of 2022.

Currently, both Microsoft and OpenAI have declined to make substantive comments. A Microsoft spokesperson said in an emailed statement that the company does not "comment on speculation."

The economic potential of artificial intelligence is huge and may be greater than all current software spending.

Designing a better search engine, one that intuitively knows what users are looking for, would pose a major threat to Google parent Alphabet's $1.1 trillion valuation. The next step may be AI programs that design drugs.

Of course, there’s a good chance that OpenAI will disappear, as most tech companies do. Even so, Microsoft's investment may not be in vain.

Morgan Stanley estimates that Alphabet has spent about $100 billion on research and development in the past three years alone, betting heavily on such products, and that this spending will grow at an annual rate of 13% over the next three years.

By locking up a promising company and scarce researchers, Microsoft could prevent Alphabet from winning and potentially force it to increase spending.

Microsoft’s layout: In the AI era, those who gain technology first will win the world

US$29 billion is a huge valuation for OpenAI. Famous as it is, OpenAI's business model and roadmap are still unclear, and US$10 billion is not a small sum for Microsoft shareholders.

However, some Wall Street analysts believe that although US$10 billion is a considerable amount of money, this is not a "high-risk gamble" for Microsoft in terms of investment timing and strategy.

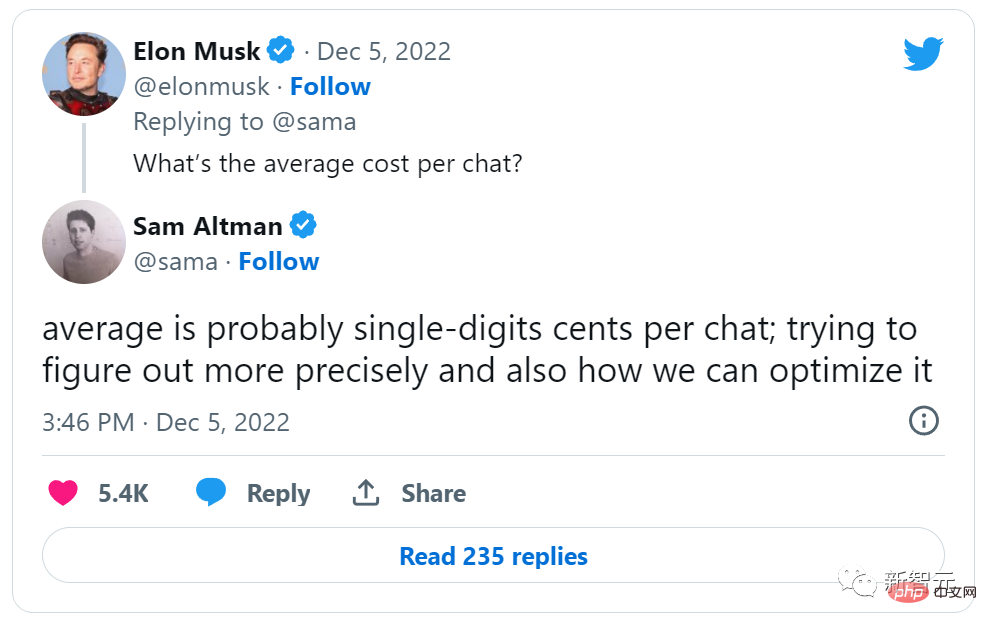

ChatGPT's worldwide popularity is indeed explosive, but the risk of costs outrunning income has grown more serious as the number of users surges.

According to OpenAI CEO Sam Altman on Twitter last month, every time someone asks ChatGPT a question, the company pays a few cents in computing costs, which sparked concerns that OpenAI is burning through too much money. Much of this computing power comes from Microsoft's cloud computing platform.

If OpenAI figures out how to make money on products like ChatGPT and image creation tool Dall-E, 75% of the profits go to Microsoft until it recoups its entire initial investment.

In addition to profits, there is also the most important technology market.

Since Microsoft's first investment in 2019, OpenAI and Microsoft have maintained a substantial partnership. This investment essentially formalizes that partnership: the two companies can join forces and work together to accelerate technology research.

After this investment, Microsoft can work with OpenAI to develop technology on its own cloud platform.

This almost immediately puts Microsoft at the forefront of what may be the most important consumer technology of the next decade. Azure is already one of the three dominant players in the commercial cloud market; with OpenAI and ChatGPT added, Microsoft appears to want it all in the future cloud market, on both the enterprise (2B) and consumer (2C) sides.

For Microsoft, from a strategic point of view, this investment is a huge "coup."

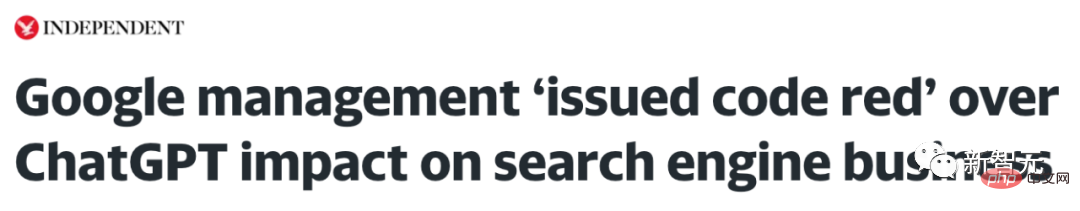

In particular, Google, a key competitor, previously took part in developing some of OpenAI's technologies. The integration of ChatGPT and Bing directly targets Google's core search business, and this investment is expected to further squeeze Google's AI ambitions.

In Microsoft's view, OpenAI, with products such as ChatGPT, will most likely stay far ahead of other AI algorithm companies.

Now that the big companies have entered the game, it will become increasingly difficult for new AI companies to dig new "moats" and generate network effects. Without those, it is hard to make money in consumer technology.

From this perspective, Microsoft’s investment focuses on positioning.

Many technology giants are also paying close attention to the future of artificial intelligence. They know that companies that control core technologies will have a great advantage in the next few years. By investing in OpenAI, Microsoft is positioning itself at the forefront of this AI revolution.

As the world continues to be transformed by artificial intelligence, this combination of Microsoft and OpenAI may be just the beginning. The future is bright, and both Microsoft and OpenAI hope to be at the forefront of this AI revolution.

In the AI free-for-all, who will have the last laugh?

Google: A little disappointed

Microsoft's surge in AI has left Google more than a little exasperated.

The recent popularity of ChatGPT has attracted the attention of the world, and Google is probably feeling a little envious.

Google once had the opportunity to go this route. Google is not at a disadvantage when it comes to chatbots. As early as the I/O conference in May 2021, Google's artificial intelligence system LaMDA amazed everyone when it was unveiled.

But due to considerations such as "reputational risk", Google had not planned to market chatbots before.

Google is considered the big brother in the field of AI.

Pichai instructed some teams to switch directions and develop AI products.

The Transformer, invented by Google, is the key technology behind the latest AI models; according to rumors, Google's LaMDA chatbot performs far better than ChatGPT; in addition, Google claims that the image generation capability of its own model, Imagen, is better than that of Dall-E and other companies' models.

However, what is slightly embarrassing is that Google’s chatbot and image models currently only exist in “claims” and there are no actual products on the market.

It is not surprising that Google would position itself this way. In many cases, Google does not expect to use AI for commercial purposes.

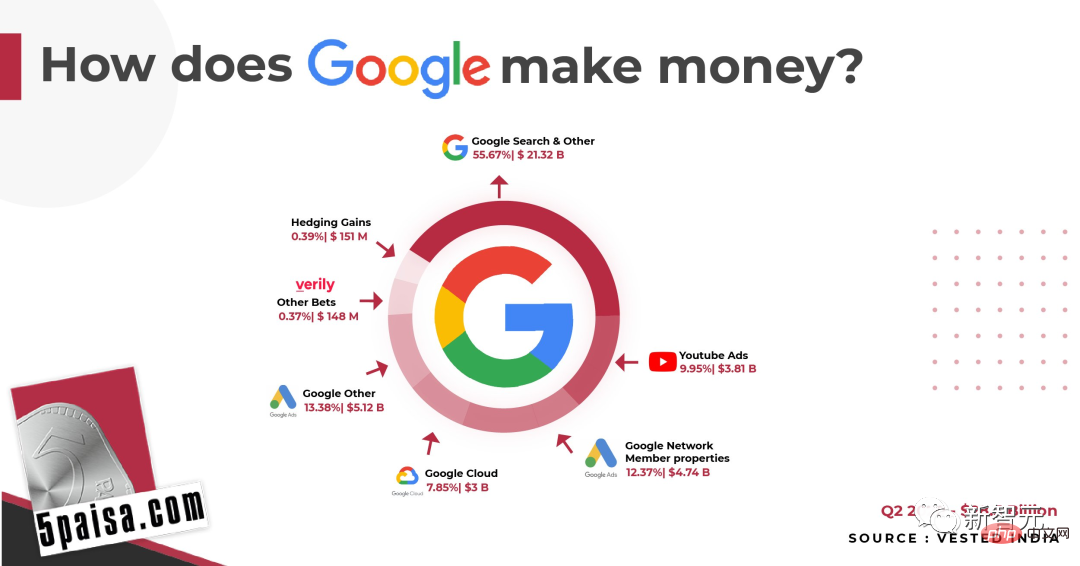

Google has long been committed to using machine learning to improve its search engine and other consumer-facing products and to offering Google Cloud technology as a service. Search has always been Google's core business.

There is an unavoidable problem: in search engines, users are always the final decision-maker. Although the link is provided by Google, the user is the one who decides which link to click.

Google designed its product very cleverly. Instead of charging advertisers per impression (a value that was hard to determine, especially 20 years ago), it charges per click. This was a truly revolutionary product.

Most of Google’s revenue comes from online advertising

Google now earns US$208 billion a year from advertising on its search engine, accounting for 81% of its total revenue. It is therefore cautious about how it deploys AI such as language models.

Seven years ago, American business analyst Ben Thompson wrote an article, "Google and the Limits of Strategy", describing the difficulties Google's business faces in the field of AI:

One year before iOS 6, Apple introduced the voice assistant Siri for the first time. The implications for Google are profound because the voice assistant must be more proactive than a page of search results. It's not enough to just provide possible answers. The assistant needs to give the right answer.

In 2016, Google released Google Assistant. However, for hundreds of millions of iOS users, if they want to use Google Assistant, they have to download it separately. In addition, the Google search engine can make money by letting users click more times. What about Google Assistant?

Now, seven years later, for all of Google's innovation, its main business model still comes down to "packing more ads into the search process." On mobile devices, this works very well.

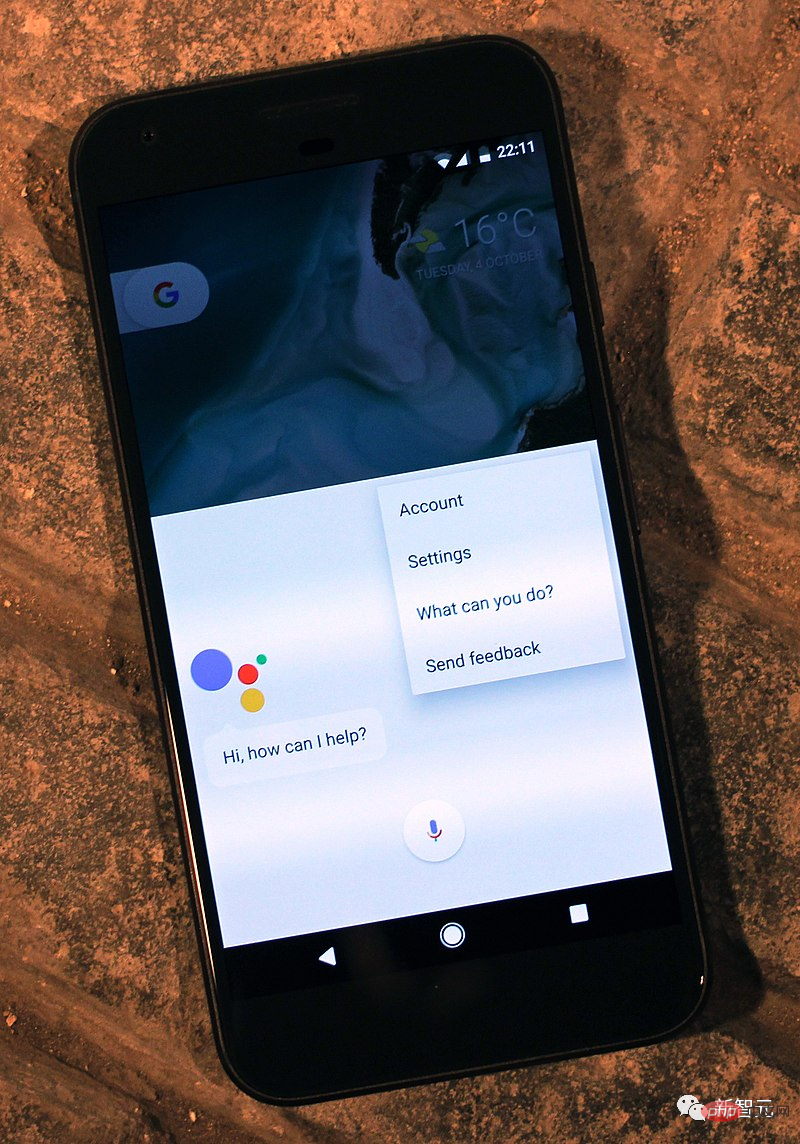

Google Assistant running on Pixel

But now the broader environment has changed. Large language models such as ChatGPT bring disruptive innovation.

While disruptive products are getting better and better, Google’s current products are becoming more and more bloated. No matter how you look at it, this is not a good sign.

Recently, Google announced an upgrade to its search engine, allowing users to enter fewer keywords and obtain more results.

Regarding the crisis Google faces, Emad Mostaque, founder of Stability AI, commented: "Google is still the leader in the field of large language models (LLM). In generative AI innovation, they are a force that cannot be ignored."

Even so, he also admitted that Google "did not communicate well with shareholders and the market" and was "a bit too cautious."

What will Google do next? With the AI laboratory in hand, can its AI products successfully find a commercialization path?

Meta: The money earned from social media is burned on the Metaverse

No account of the AI melee would be complete without Meta.

For Meta, AI is a huge opportunity, and accordingly, Meta has been investing huge amounts of capital in it.

Meta has huge data centers that are primarily used for CPU computing, which is necessary to power Meta’s services. The advertising models that drive Meta, as well as the algorithms that recommend content on the network, all require CPU computing.

As a long-term solution for the advertising business, Meta needs to build a probabilistic model and understand what has been converted and what has not been converted. These probabilistic models require a lot of GPUs, and if you use NVIDIA's A100, the cost will be as high as five figures (USD). However, this is not expensive for Meta.

Obviously, Meta needs to know the "certain" advertising effect, because a clearer measurement standard is needed in investment. Whether it is Facebook or Reels, AI models are the key to recommending content, and building these models will inevitably cost a lot of money.

This investment will pay off in the long run. Better targeting and recommendations for users will increase revenue, and once these AI data centers are built, the cost of maintaining and upgrading them should be significantly lower than the initial cost of building them. Moreover, no company in the world other than Google can afford such a huge investment.

However, this will also help Meta’s products become more and more integrated. Meta is also developing its own AI chip.

Meta's advertising tools are now so powerful that the entire process of generating and A/B testing copy and images can be done by AI, and no company is better than Meta at delivering these capabilities at scale.

Meta's advertising goal is to draw consumers' attention to products and services they were previously unaware of. This means there are a lot of mistakes because the vast majority of ads don’t convert, but it also means there’s a lot of room for experimentation and iteration.

This is very suitable for AI.

Apple: The gift of open source

Many big companies invest in open source software, because smart companies try to commoditize their products' complements. When the prices of a product's complements fall, demand for the product increases, allowing the company to charge more and make more money.

The most famous examples of Apple's investment in open source technology are the Darwin kernel used in its operating systems and the WebKit browser engine.

Meanwhile, Apple's AI efforts have been limited to one small area: traditional machine learning models for recommendations, photo recognition, and speech recognition, which do not seem to have had a significant impact on Apple's business.

However, Apple did receive an incredible gift from the open source world: Stable Diffusion.

Stable Diffusion is notable not only because it is open source, but also because its model is surprisingly small: when it was first released, it could already run on some consumer graphics cards; within a few weeks, it had been optimized to run on the iPhone.

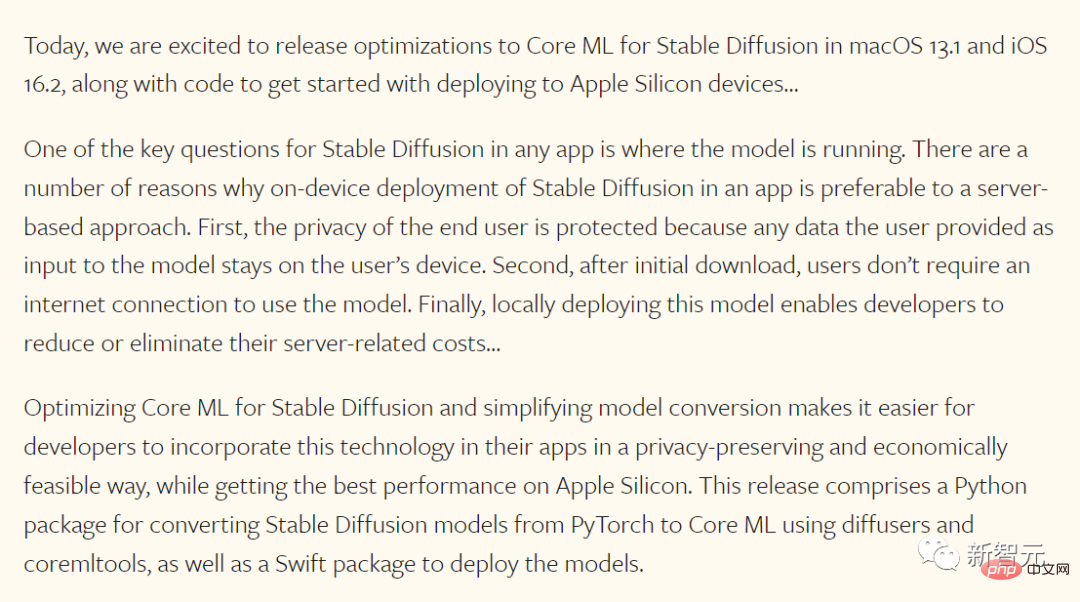

To its credit, Apple has seized on this opportunity, with its machine learning team making the following announcement last month:

The key point is that this announcement has two parts: first, Apple has optimized the Stable Diffusion model itself (which it can do because the model is open source); second, Apple has updated its operating system, which, thanks to Apple's integrated model, is already tuned for its own chips.

It is safe to say that this is just the beginning. Apple has shipped a so-called "Neural Engine" on its own chips for years, but that AI-specific hardware has been tuned to Apple's own needs; it seems likely that future Apple chips will also be tuned for Stable Diffusion.

Meanwhile, Stable Diffusion itself could be built into Apple's operating systems, with an easily accessible API for any developer, without requiring the kind of backend infrastructure that an app like Lensa needs.

In the App Store era, Apple looks like a winner: its advantages in integration and silicon can be used to deliver differentiated applications, while small independent app makers get the APIs and distribution channels to build new businesses.

The losers, it seems, are the centralized image generation services (Dall-E or MidJourney) and the cloud providers that support them.

To be sure, Stable Diffusion on Apple devices will not take over the entire market, since both Dall-E and MidJourney are better than Stable Diffusion, but built-in native functionality will affect the ultimate addressable market for centralized services and centralized computing.

Amazon: I have the cloud

Amazon, like Apple, uses machine learning in its apps; for Amazon, however, the consumer use cases for image and text generative AI may seem less obvious.

More important to Amazon is AWS, which sells access to GPUs in the cloud. Some of those GPUs are used for training, including for Stable Diffusion, which, according to Emad Mostaque, founder and CEO of Stability AI, took 150,000 hours on 256 Nvidia A100s at a market price of $600,000. That price is already amazingly low.
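The training figures quoted above imply a per-GPU price and a rough training duration. The short sketch below works them out, assuming that the 150,000 hours are total GPU-hours summed across all 256 cards and that every card ran for the whole job; the article does not state either point, and the function names are illustrative.

```python
TOTAL_GPU_HOURS = 150_000   # hours quoted in the article (assumed to be GPU-hours)
NUM_GPUS = 256              # Nvidia A100s quoted in the article
TOTAL_COST_USD = 600_000    # market price quoted in the article


def cost_per_gpu_hour(total_cost_usd, gpu_hours):
    """Implied price of one A100 for one hour."""
    return total_cost_usd / gpu_hours


def wall_clock_days(gpu_hours, num_gpus):
    """Elapsed days if all GPUs run in parallel for the whole job."""
    return gpu_hours / num_gpus / 24


if __name__ == "__main__":
    print(cost_per_gpu_hour(TOTAL_COST_USD, TOTAL_GPU_HOURS))   # 4.0 USD per GPU-hour
    print(round(wall_clock_days(TOTAL_GPU_HOURS, NUM_GPUS), 1)) # about 24.4 days
```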

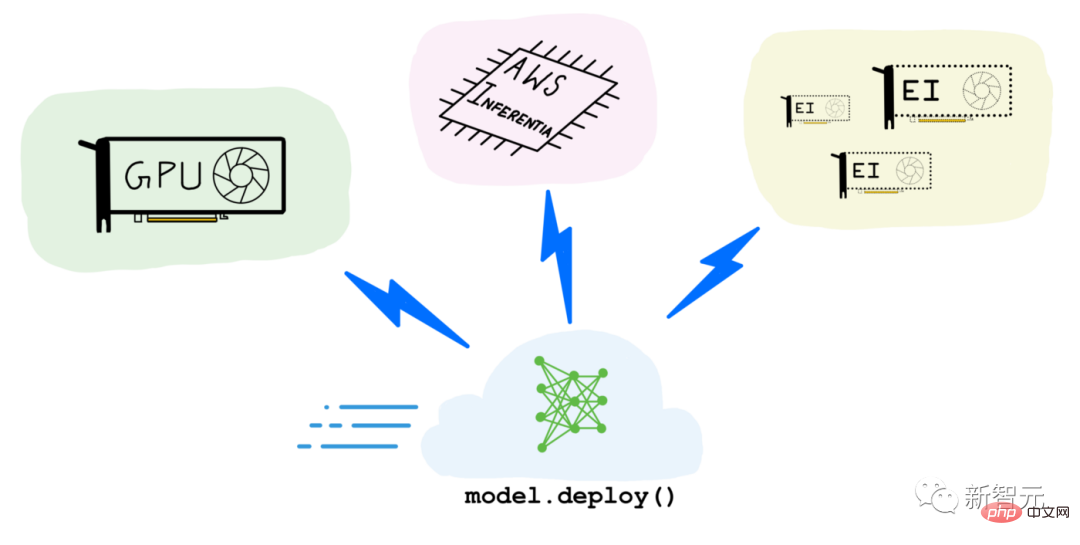

The bigger use case, though, is inference, which is applying a model to generate images or text. Every time a user generates an image in MidJourney, or an avatar in Lensa, inference runs on GPUs in the cloud.

Amazon’s prospects in this area will depend on a variety of factors.

The first, and most obvious, is how useful these products end up being in the real world. Amazon is a chipmaker in its own right, though: While most of its efforts so far have been focused on Graviton CPUs, it could build its own dedicated hardware for models like Stable Diffusion and compete on price.

Even so, AWS is betting on both sides: its cloud service is also a major partner for Nvidia's products.

Amazon's short-term problem is gauging demand: too few GPUs means money left on the table, but buying too many that then sit idle would be a significant cost for the company. In addition, one of the challenges facing AI is that inference costs money: there is a marginal cost every time AI is used to make something.

At present, the large companies competing to develop eye-catching AI products do not yet seem to have reckoned with the challenge of marginal cost. Cloud services always come with a cost, but the discrete cost of each AI generation makes it harder to fund the iteration required to reach product-market fit.

It is probably no accident that ChatGPT, the biggest breakout product so far, is both free to users and provided by OpenAI, a company that built its own model and struck a favorable deal with Microsoft for computing power.
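A minimal sketch of why marginal cost matters: unlike conventional software, every generated answer or image adds to the bill, so cost grows in step with usage. All numbers here are hypothetical (2 cents per query stands in for the "few cents" figure mentioned earlier, and the usage levels are invented for illustration).

```python
def monthly_cost_usd(users, queries_per_user, cents_per_query, fixed_cost_usd=0.0):
    """Total monthly cost when every query carries a marginal inference cost."""
    # Marginal part: grows linearly with the number of queries served.
    marginal = users * queries_per_user * cents_per_query / 100
    return fixed_cost_usd + marginal


if __name__ == "__main__":
    # Hypothetical usage levels; none of these figures come from the article.
    for users in (100_000, 1_000_000, 10_000_000):
        cost = monthly_cost_usd(users, queries_per_user=30, cents_per_query=2)
        print(f"{users:>10,} users -> ${cost:,.0f} per month")
```

Because the cost scales linearly, a free product that grows tenfold also sees its inference bill grow tenfold, which is the funding problem described above.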

In short, if AWS sells GPUs at low prices, it may stimulate more usage in the long run.

Microsoft: will it have the last laugh in 2023?

Looking at it this way, Microsoft seems to be in the best position.

Like AWS, it has Azure, a cloud service that sells GPUs; moreover, it is also the exclusive cloud provider for OpenAI.

Meanwhile, Bing is like the Mac on the eve of the iPhone: it contributes a fair amount of revenue, but only a fraction of the dominant player's. If integrating ChatGPT into Bing puts Bing's business model at risk in exchange for a chance at huge market share,

that is clearly a bet worth making for Microsoft.

In addition, The Information reported that GPT will be brought into Microsoft's office software, which may add new paid features, a perfect fit for Microsoft's subscription business model. Microsoft already has a successful example to follow: with GitHub Copilot as a model, it knows how to build a helpful assistant rather than an annoying one like Clippy.

GPT enters office software, which is likely to be a revolutionary step. From now on, the way 1 billion people write documents, presentations and emails may be forever changed.

Nvidia and TSMC may be the biggest winners?

Now, AI is becoming a commodity, and various models are proliferating every day.

In the end, perhaps the biggest winners are NVIDIA and TSMC.

Nvidia has invested in the CUDA ecosystem, which means that Nvidia has not only the best AI chips but also the best AI ecosystem, and its investment continues to expand.

This has spurred competitors, such as Google with its TPU chips.

Also, at least for the foreseeable future, every company will have to produce chips at TSMC.
