OpenAI reveals ChatGPT upgrade plan: all the bugs you find are being fixed

OpenAI’s mission is to ensure that artificial general intelligence (AGI) benefits all of humanity. We therefore think a lot about the behavior of the AI systems we build in the run-up to AGI, and about the way in which that behavior is determined.

Since we launched ChatGPT, users have shared output they believe is politically biased or otherwise objectionable. In many cases, we believe the concerns raised are legitimate and identify real limitations of our system that we hope to address. But at the same time, we've also seen some misunderstandings related to how our systems and policies work together to shape the output of ChatGPT.

The main points of this post are:

- How ChatGPT's behavior is shaped;

- How we plan to improve ChatGPT's default behavior;

- Our intent to allow more system customization;

- Our efforts to get more public input into our decisions.

Our first priority

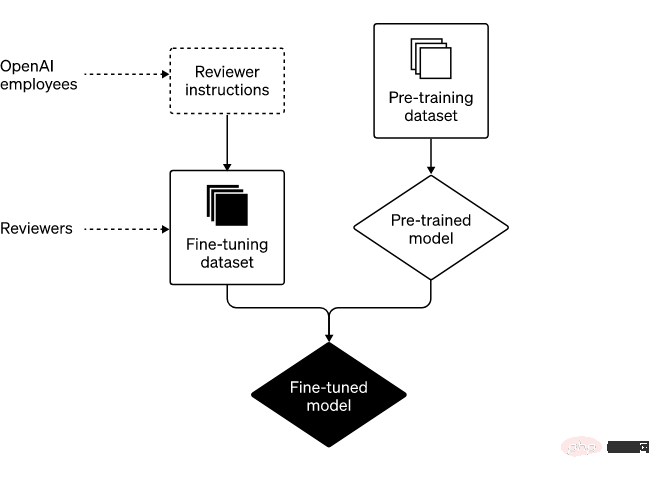

Unlike ordinary software, our models are massive neural networks. Their behavior is learned from a broad range of data rather than explicitly programmed. Though not a perfect analogy, the process is more similar to training a dog than to ordinary programming. First, the model goes through a "pre-training" phase, in which it learns to predict the next word in a sentence by being exposed to a large amount of Internet text (and to a vast array of perspectives). Then comes a second phase, in which we "fine-tune" the model to narrow down the system's behavior.

As of now, this process is imperfect. Sometimes the fine-tuning process falls short of our intent (producing a safe and useful tool) and of the user's intent (getting a helpful output in response to a given input). Improving the way we align AI systems with human values is a top priority for our company, particularly as AI systems become more capable.

Two major steps: pre-training and fine-tuning

The two main steps to build ChatGPT are as follows:

First, we "pre-train" the models by having them predict what comes next in a large dataset that contains parts of the Internet. For example, they might learn to complete the sentence "She didn't turn left; she turned ___." By learning from billions of sentences, our models learn grammar, many facts about the world, and some reasoning abilities. They also learn some of the biases present in those billions of sentences.
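To make "predict what comes next" a little more concrete, here is a drastically simplified sketch in Python. It is an illustrative toy under loud assumptions, not how ChatGPT works: it counts which word follows which in a tiny made-up corpus (a bigram model) and returns the most frequent follower, whereas ChatGPT uses a large neural network trained on vastly more text. The function names `train_bigrams` and `predict_next` and the three-sentence corpus are hypothetical.

```python
from collections import Counter, defaultdict

def train_bigrams(sentences):
    """Toy 'pre-training': count, for each word, which words follow it."""
    follows = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            follows[prev][nxt] += 1
    return follows

def predict_next(follows, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    counts = follows.get(word.lower())  # .get avoids creating an empty entry
    if not counts:
        return None
    return counts.most_common(1)[0][0]

# Hypothetical miniature corpus standing in for "a large dataset".
corpus = [
    "she turned right at the corner",
    "he turned right at the light",
    "they turned left at the gate",
]
model = train_bigrams(corpus)
print(predict_next(model, "turned"))  # right
```

Even this toy reflects the skew of its data: "turned" is followed by "right" twice and "left" once, so it always predicts "right". In a much larger model, the same mechanism is one way biases present in the training text can carry over into outputs.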

We then “fine-tune” these models on a narrower dataset that we carefully generate with human reviewers who follow guidelines we provide. Because we cannot predict all the possible inputs that future users may enter into our system, we have not written detailed instructions for every input that ChatGPT will encounter. Instead, the guidelines outline a few categories that our reviewers use to review and rate possible model outputs for a range of example inputs. Then, while in use, the models generalize from this reviewer feedback in order to respond to a wide range of specific inputs provided by a given user.

The role of reviewers & OpenAI’s strategy in system development

In some cases, we may give our reviewers guidance on a certain kind of output (e.g., "Do not complete requests for illegal content"). In other cases, the guidance we share with reviewers is higher-level (e.g., “Avoid taking sides on controversial topics”). Importantly, our collaboration with reviewers is not a one-and-done affair but an ongoing relationship, and we learn a lot from their expertise.

A big part of the fine-tuning process is maintaining a strong feedback loop with our reviewers, which involves weekly meetings to address questions they may have and to clarify our guidance. This iterative feedback process is how we train the model to get better and better over time.

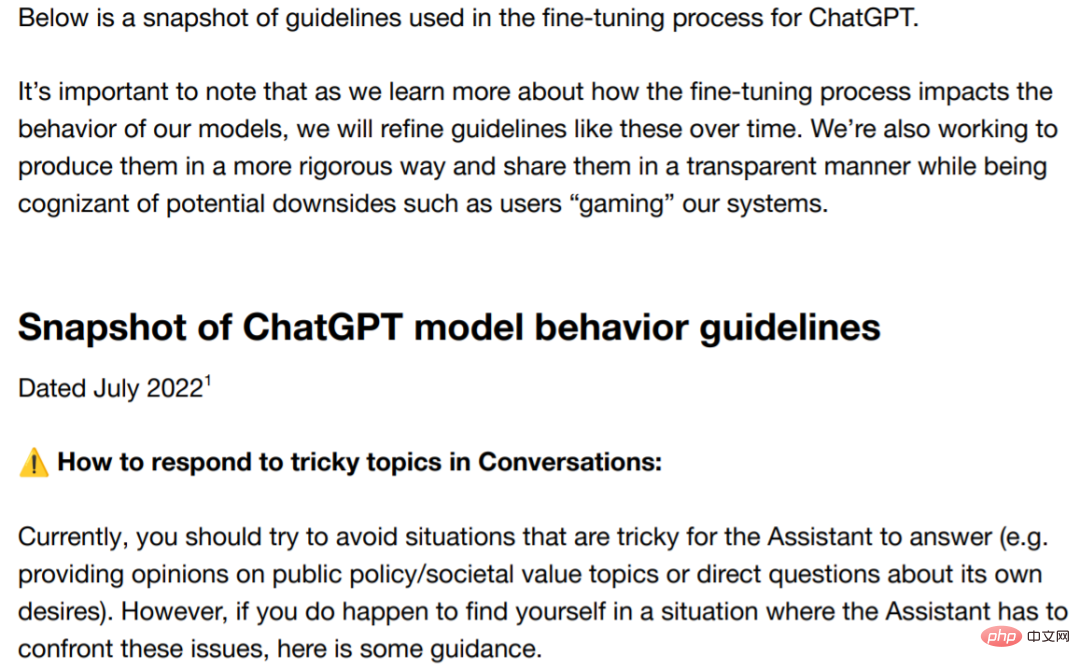

Addressing Bias

Bias in AI systems is a long-standing problem, and many researchers have raised concerns about it. We are firmly committed to addressing this issue and to being transparent about both our intentions and our progress. To communicate our progress, here we share the portion of our guidelines that relates to political and controversial topics. The guidelines state explicitly that reviewers should not favor any political group. Nonetheless, biases may still emerge.

Guidelines: https://cdn.openai.com/snapshot-of-chatgpt-model-behavior-guidelines.pdf

While disagreements will always exist, we hope that this blog post and these guidelines give you a deeper understanding of how we think about bias. We firmly believe that technology companies must be accountable for producing policies that stand up to scrutiny.

We have been working to improve the clarity of these guidelines. Based on what we have learned from the ChatGPT launch so far, we will give reviewers clearer instructions about potential pitfalls and challenges tied to bias, as well as about controversial figures and topics. Additionally, as part of an ongoing transparency initiative, we are working to share aggregate statistics about our reviewers in a way that does not violate privacy rules and norms, since reviewers are another potential source of bias in system outputs.

Building on external advances such as rule-based rewards and Constitutional AI, we are also researching how to make the fine-tuning process more understandable and controllable.

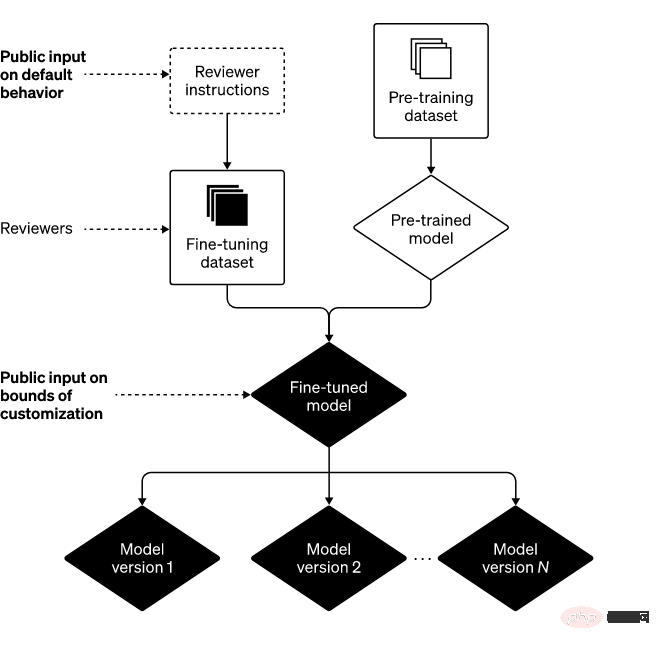

Future Directions: System Building Blocks

To achieve our mission, we are committed to ensuring that the broader population can use and benefit from AI and AGI. We believe that at least three building blocks are needed to achieve these goals:

1. Improve default behavior: We want as many users as possible to find our AI systems useful "out of the box" and to feel that our technology understands and respects their values.

To this end, we’ve invested in research and engineering to reduce the subtle biases in how ChatGPT responds to different inputs. In some cases, ChatGPT refuses requests it should fulfill; in others, it does the opposite and produces content it should refuse. We believe ChatGPT can improve in both respects.

There is also room for improvement in other aspects of our AI systems. For example, the system often "makes things up." User feedback is extremely valuable for making these improvements to ChatGPT.

2. Define your AI's values, within broad bounds: We believe AI should be a useful tool for individual people, and therefore customizable by each user within certain limits. With this in mind, we are developing an upgrade to ChatGPT that will let users easily customize its behavior.

This also means allowing system outputs that other people may strongly disagree with. Striking this balance is a huge challenge, because taking customization to the extreme risks enabling malicious uses of our technology and sycophantic AI that blindly amplifies people's existing beliefs.

There will therefore always be some limits on system behavior. The challenge is defining what those limits are. If we try to make all of these decisions ourselves, or if we try to develop a single, monolithic AI system, we will fail to keep our commitment to avoid undue concentration of power.

3. Public input on defaults and hard bounds: One way to avoid undue concentration of power is to give the people who use or are affected by systems like ChatGPT the ability to influence the rules of those systems.

We believe that many decisions about our defaults and hard bounds should be made collectively. While this will be difficult to implement, our goal is to include as many perspectives as possible. As a starting point, we have sought external input on our technology in the form of "red teaming." We also recently began soliciting public input on AI in education (a particularly important context in which we are deploying).

Conclusion

Combining the three building blocks above gives the following framework:

We will sometimes make mistakes. When we do, we will learn from them and iterate on our models and systems. We would also like to thank ChatGPT users and others for keeping us mindful and vigilant, and we look forward to sharing more about our work in these three areas in the coming months.
