How to improve the efficiency of deep learning algorithms, Google has these tricks

Ten years ago, the rise of deep learning was driven in part by new algorithms and architectures, a significant increase in data, and improvements in computing power. Over the past decade, AI and ML models have become deeper and more complex, with more parameters and more training data, and thus larger and more cumbersome. This scaling has produced some of the most transformative results in machine learning history.

As these models are increasingly deployed in production and business applications, their efficiency and cost have shifted from secondary considerations to major constraints. Google continues to invest heavily in ML efficiency to address the biggest challenges at four levels: efficient architectures, training efficiency, data efficiency, and inference efficiency. Beyond efficiency, these models also face many challenges around factuality, security, privacy, and freshness. This article highlights Google Research's work on developing new algorithms to address these challenges.

Efficient Architecture

The fundamental question here is: "Are there better ways to parameterize a model to improve efficiency?" In 2022, the researchers focused on new techniques for injecting external knowledge by augmenting models with retrieved context, on mixture-of-experts models, and on making Transformers (the heart of most large ML models) more efficient.

Context-Augmented Models

In pursuit of higher quality and efficiency, neural models can be augmented with external context from large databases or trainable memory. By leveraging retrieved context, a neural network can achieve better parameter efficiency, interpretability, and factuality without having to store extensive knowledge in its internal parameters.

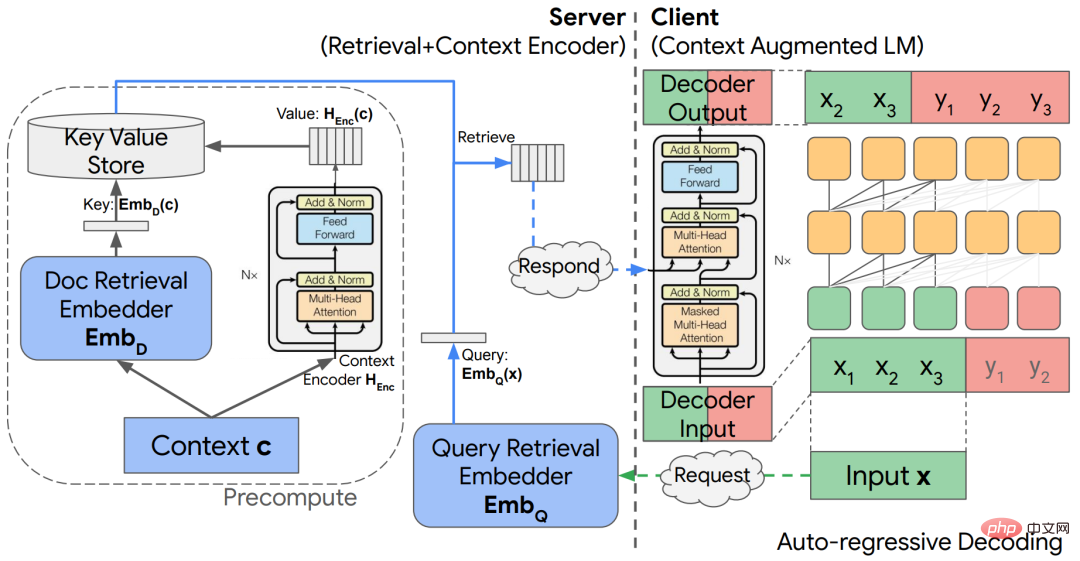

The paper "Decoupled Context Processing for Context Augmented Language Modeling" explores a simple architecture based on a decoupled encoder-decoder design for incorporating external context into language models. This yields significant computational savings in autoregressive language modeling and open-domain question answering. Pre-trained large language models (LLMs) absorb a great deal of information through self-supervision on large training sets. However, it is unclear how these models' world knowledge interacts with the context presented to them. Through knowledge-aware fine-tuning (KAFT), the researchers add counterfactual and irrelevant contexts to standard supervised datasets, which improves the controllability and robustness of LLMs.

Paper address: https://arxiv.org/abs/2210.05758

An encoder-decoder cross-attention mechanism incorporates the context. This decouples context encoding from language model inference and improves the efficiency of context-augmented models.

In the pursuit of modular deep networks, one open question is how to design a database of concepts together with the corresponding computational modules. The researchers proposed a theoretical architecture that stores "remembered events" as sketches in an external LSH table, with pointers to modules that process these sketches.
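The following is a minimal Python sketch of the LSH-table idea. It implements a random-hyperplane (SimHash) LSH table that stores vectors as "sketches", each with a pointer to a processing module. Everything in it is an illustrative assumption. The class name `LSHMemory`, the SimHash scheme, and the use of a plain string as the module pointer are hypothetical choices, not the architecture proposed in the research.

```python
import numpy as np
from collections import defaultdict

class LSHMemory:
    """Hypothetical external memory: sketches bucketed by a random-hyperplane (SimHash) hash."""

    def __init__(self, dim, n_bits=8, seed=0):
        rng = np.random.default_rng(seed)
        self.planes = rng.standard_normal((n_bits, dim))  # one random hyperplane per hash bit
        self.buckets = defaultdict(list)

    def _hash(self, x):
        # Each bit records which side of a hyperplane x falls on; similar vectors share most bits.
        return tuple(((self.planes @ x) > 0).tolist())

    def store(self, sketch, module_id):
        # Keep a pointer (here just an id string) to the module meant to process this sketch.
        self.buckets[self._hash(sketch)].append((sketch, module_id))

    def lookup(self, query):
        # Only the query's bucket is scanned, not the whole memory.
        candidates = self.buckets.get(self._hash(query), [])
        if not candidates:
            return None
        sims = [float(query @ s) / (np.linalg.norm(query) * np.linalg.norm(s)) for s, _ in candidates]
        return candidates[int(np.argmax(sims))][1]
```

Similar vectors tend to fall on the same side of most hyperplanes, so a lookup scans a single bucket rather than the whole memory. More hash bits give smaller buckets, at the cost of a higher chance of missing a near neighbor.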

Quickly retrieving information from large databases on accelerators is another major challenge for context-augmented models. The researchers developed a TPU-based similarity search algorithm that is aligned with the TPU performance model. It provides analytical guarantees on expected recall and achieves peak performance. Search algorithms typically involve many hyperparameters and design choices, which makes them hard to tune for new tasks. The researchers therefore proposed a new constrained optimization algorithm for automated hyperparameter tuning. Given a desired cost or recall as input, it produces tunings that empirically come very close to the speed-recall Pareto frontier and deliver leading performance on standard benchmarks.
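The tuning goal above can be stated as "minimize cost subject to recall ≥ target". The sketch below simply brute-forces that constraint over a table of measured (configuration, cost, recall) tuples. It is not the researchers' constrained optimization algorithm. The `Config` fields and any numbers used with it are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class Config:
    name: str      # hypothetical search configuration (e.g. number of partitions probed)
    cost: float    # measured query cost, e.g. milliseconds per query
    recall: float  # measured recall@k on a held-out query set

def pareto_frontier(configs):
    """Configs not dominated by another config (cost <= and recall >=, strictly better in one)."""
    frontier = []
    for c in configs:
        dominated = any(
            o.cost <= c.cost and o.recall >= c.recall and (o.cost < c.cost or o.recall > c.recall)
            for o in configs
        )
        if not dominated:
            frontier.append(c)
    return sorted(frontier, key=lambda c: c.cost)

def tune_for_recall(configs, target_recall):
    """Cheapest frontier configuration meeting the recall target, or None if none qualifies."""
    feasible = [c for c in pareto_frontier(configs) if c.recall >= target_recall]
    return min(feasible, key=lambda c: c.cost) if feasible else None
```

Brute force only works when every candidate has already been measured. The point of an automated tuner is to land near this frontier without exhaustively benchmarking the whole hyperparameter space.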

Mixture of Experts

Mixture-of-experts (MoE) models have proven to be an effective way to increase the capacity of neural networks without excessively increasing computational cost. The basic idea of MoE is to build a unified network from many expert sub-networks, where each input is processed by a suitable subset of experts. As a result, MoE invokes only a small fraction of the entire model compared with a standard dense network. This yields high efficiency, as demonstrated in language model applications such as GLaM.

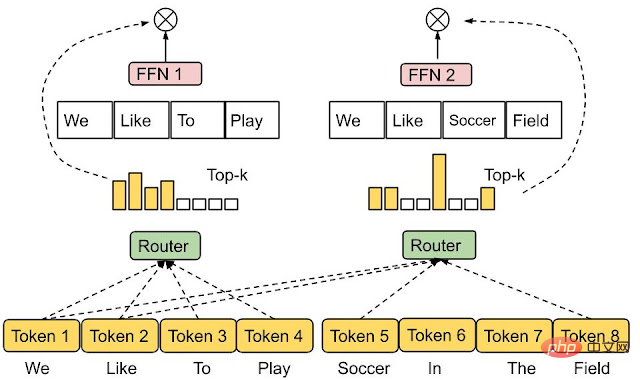

In the GLaM architecture, each input token is dynamically routed to two of the 64 expert networks for prediction.
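The routing step in the caption can be sketched as top-2 "token choice" gating in NumPy. The router weights here are random, and renormalizing the two gate values is one common convention. Treat this as an illustration, not GLaM's exact implementation.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def top2_route(tokens, router_w):
    """tokens: (n_tokens, d); router_w: (d, n_experts). Returns chosen expert ids and gate weights."""
    probs = softmax(tokens @ router_w)                 # (n_tokens, n_experts) routing probabilities
    top2 = np.argsort(-probs, axis=-1)[:, :2]          # each token picks its two best experts
    gates = np.take_along_axis(probs, top2, axis=-1)
    gates = gates / gates.sum(axis=-1, keepdims=True)  # renormalize so the two gates sum to 1
    return top2, gates

rng = np.random.default_rng(0)
tokens = rng.standard_normal((8, 16))
router_w = rng.standard_normal((16, 64))               # 64 experts, matching the caption
experts, gates = top2_route(tokens, router_w)
```

Nothing in this scheme caps how many tokens pick the same expert. That is the load-balancing problem the routing-function discussion addresses.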

For a given input, the routing function decides which experts should be activated. Designing this function is challenging because it should avoid both underutilizing and overloading individual experts. A recent work proposes expert choice routing, a new routing mechanism that does not assign each input token to its top-k experts. Instead, it assigns each expert its top-k tokens. This automatically ensures load balancing across experts while naturally allowing an input token to be handled by multiple experts.

Expert choice routing: experts with a predetermined buffer capacity are assigned their top-k tokens, which guarantees load balancing. Each token can be processed by a variable number of experts.
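Expert choice routing can be sketched by transposing the routing decision so that each expert ranks the tokens. In this sketch, the buffer size `k` is derived from a hypothetical `capacity_factor` (tokens per expert = tokens × capacity factor / experts). The router weights are random for illustration.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def expert_choice_route(tokens, router_w, capacity_factor=2.0):
    """Each expert picks its top-k tokens; k is a fixed per-expert buffer capacity."""
    n_tokens, n_experts = tokens.shape[0], router_w.shape[1]
    k = int(n_tokens * capacity_factor / n_experts)    # tokens per expert (fixed buffer)
    scores = softmax(tokens @ router_w, axis=-1)       # token-to-expert affinities
    chosen = np.argsort(-scores.T, axis=-1)[:, :k]     # (n_experts, k): top-k token ids per expert
    gates = np.take_along_axis(scores.T, chosen, axis=-1)
    return chosen, gates

rng = np.random.default_rng(0)
tokens = rng.standard_normal((32, 16))
router_w = rng.standard_normal((16, 8))
chosen, gates = expert_choice_route(tokens, router_w)
experts_per_token = np.bincount(chosen.ravel(), minlength=32)  # how many experts picked each token
```

Every expert processes exactly `k` tokens, so the load is balanced by construction. `experts_per_token` varies: some tokens are picked by several experts and some by none.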

Efficient Transformers

The Transformer is a popular sequence-to-sequence model that has achieved remarkable success on a range of challenging problems, from vision to natural language understanding. Its core component is the attention layer, which computes similarities between queries and keys and uses them to build an appropriately weighted combination of values. Despite its strong performance, attention is computationally inefficient: its complexity is typically quadratic in the length of the input sequence.
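The quadratic cost comes from the score matrix, which holds one entry per query-key pair. A minimal NumPy sketch of standard scaled dot-product attention makes this visible. The sizes are arbitrary.

```python
import numpy as np

def attention(q, k, v):
    """q, k: (n, d), v: (n, d_v). Standard scaled dot-product attention."""
    scores = q @ k.T / np.sqrt(q.shape[-1])          # (n, n): one similarity per query-key pair
    scores -= scores.max(axis=-1, keepdims=True)     # subtract row max for numerical stability
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)   # softmax over keys
    return weights @ v, weights

n, d = 128, 32
rng = np.random.default_rng(0)
q, k, v = (rng.standard_normal((n, d)) for _ in range(3))
out, weights = attention(q, k, v)
```

Doubling `n` from 128 to 256 quadruples the score matrix, from 16,384 to 65,536 entries. This growth is what the methods below try to avoid.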

As Transformers continue to scale, one valuable research question is whether trained models exhibit naturally occurring structures or patterns that could be exploited for efficient attention. To this end, the researchers studied the learned embeddings in intermediate MLP layers and found them to be very sparse. For example, only 1% of the entries are non-zero in the T5-Large model. This sparsity suggests that FLOPs could potentially be reduced without affecting model performance.
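The following sketch shows why sparse activations can save FLOPs. After the ReLU, only rows of the second MLP weight matrix that correspond to non-zero activations contribute to the output, so the rest can be skipped without changing the result. The negative bias `b_in` is a hypothetical device that makes this untrained toy sparse. In the study, sparsity emerged in trained models on its own.

```python
import numpy as np

rng = np.random.default_rng(0)
d_model, d_ff, n = 64, 256, 10
x = rng.standard_normal((n, d_model))
w_in = rng.standard_normal((d_model, d_ff)) / np.sqrt(d_model)
w_out = rng.standard_normal((d_ff, d_model)) / np.sqrt(d_ff)
b_in = -1.5 * np.ones(d_ff)  # hypothetical bias, only to make this random toy sparse

h = np.maximum(x @ w_in + b_in, 0.0)          # ReLU activations of the intermediate MLP layer
nonzero_fraction = np.count_nonzero(h) / h.size

def sparse_mlp_out(h, w_out):
    """Second MLP matmul that only touches rows of w_out whose activation is non-zero."""
    out = np.zeros((h.shape[0], w_out.shape[1]))
    for i, row in enumerate(h):
        active = np.nonzero(row)[0]           # indices of non-zero activations
        out[i] = row[active] @ w_out[active]  # skipped rows would contribute exactly zero
    return out

dense = h @ w_out
sparse = sparse_mlp_out(h, w_out)
```

The work in the sparse path scales with `nonzero_fraction`. That is why very sparse activations, such as the 1% reported for T5-Large, point to large potential savings.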

Paper address: https://arxiv.org/pdf/2210.06313.pdf

Recently, the researchers introduced Treeformer, an alternative to standard attention computation that relies on decision trees. Intuitively, it quickly identifies a small subset of keys relevant to a query and performs the attention operation only on that subset. Empirically, Treeformer can reduce the FLOPs of the attention layer by 30x. There is also Sequential Attention, a differentiable feature selection method that combines attention with a greedy algorithm. The technique has strong provable guarantees for linear models and scales seamlessly to large embedding models.
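The core Treeformer idea is that each query attends only to the keys that a decision tree places in the same leaf. The sketch below illustrates it with one important assumption: it uses fixed random-hyperplane splits rather than Treeformer's learned decision trees. It therefore shows only the key-subset mechanism.

```python
import numpy as np

def leaf_ids(x, planes):
    """Route each row of x down a tree with one hyperplane split per level; return its leaf index."""
    ids = np.zeros(x.shape[0], dtype=int)
    for p in planes:
        ids = 2 * ids + (x @ p > 0).astype(int)    # go left (0) or right (1)
    return ids

def tree_attention(q, k, v, depth=3, seed=0):
    planes = np.random.default_rng(seed).standard_normal((depth, q.shape[1]))
    q_leaf, k_leaf = leaf_ids(q, planes), leaf_ids(k, planes)
    out = np.zeros((q.shape[0], v.shape[1]))
    for i in range(q.shape[0]):
        idx = np.nonzero(k_leaf == q_leaf[i])[0]   # only keys in the query's leaf are attended to
        if idx.size == 0:
            continue                               # empty leaf: output stays zero in this toy
        s = k[idx] @ q[i] / np.sqrt(q.shape[1])
        w = np.exp(s - s.max())
        out[i] = (w / w.sum()) @ v[idx]
    return out
```

With `depth=0`, every key shares a single leaf and the function reduces to full attention. Each extra level roughly halves the keys a query has to score.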

Another way to improve Transformer efficiency is to speed up the softmax computation in the attention layer. Building on research into low-rank approximations of the softmax kernel, the researchers proposed a new class of random features. It provides the first "positive and bounded" random feature approximation of the softmax kernel, with computation that is linear in the sequence length.
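The following sketch uses positive random features to show how attention can be computed in time linear in the sequence length. One caveat: the feature map here is the earlier Performer-style construction, exp(w·x − ‖x‖²/2) with Gaussian `w`. It is positive but not bounded, so it is not the new bounded features from this research. Note also that the kernel approximated is exp(q·k), without the 1/√d scaling.

```python
import numpy as np

def positive_features(x, w):
    """phi(x) such that E[phi(q) . phi(k)] = exp(q . k) when rows of w are i.i.d. N(0, I)."""
    m = w.shape[0]
    return np.exp(x @ w.T - 0.5 * np.sum(x**2, axis=-1, keepdims=True)) / np.sqrt(m)

def linear_attention(q, k, v, w):
    """Softmax-kernel attention approximated in O(n * m * d) instead of O(n^2 * d)."""
    q_f, k_f = positive_features(q, w), positive_features(k, w)  # (n, m), all entries > 0
    kv = k_f.T @ v                        # (m, d_v): summarize all keys/values once
    normalizer = q_f @ k_f.sum(axis=0)    # (n,): approximate softmax denominators
    return (q_f @ kv) / normalizer[:, None]
```

Because every feature is positive, the estimated denominators stay positive. Computing `k_f.T @ v` before multiplying by `q_f` is what removes the n × n matrix.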

Training Efficiency

Efficient optimization methods are the cornerstone of modern ML applications, and they are especially crucial at large scale. In that setting, even first-order adaptive methods such as Adam are often expensive and suffer from training-stability issues. Moreover, these methods are usually agnostic to the neural network architecture and ignore its rich structure, which leads to inefficient training. This motivates new techniques for optimizing modern neural networks more effectively, and the researchers are developing new architecture-aware training methods. For example, work on training Transformer networks includes new scale-invariant Transformer networks and novel clipping methods that, combined with plain stochastic gradient descent (SGD), speed up training. With this approach, the researchers were able for the first time to train BERT effectively using simple SGD, without the need for adaptivity.
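The following sketch shows generic global-norm gradient clipping applied to plain SGD, one way to stabilize non-adaptive training. It is the standard technique, not the specific clipping method or scale-invariant architecture from the research. The quadratic toy objective is an assumption.

```python
import numpy as np

def clip_by_global_norm(grads, max_norm):
    """Rescale a list of gradient arrays so their joint L2 norm is at most max_norm."""
    total = np.sqrt(sum(float(np.sum(g**2)) for g in grads))
    scale = min(1.0, max_norm / (total + 1e-12))
    return [g * scale for g in grads], total

def sgd_step(params, grads, lr=0.1, max_norm=1.0):
    clipped, _ = clip_by_global_norm(grads, max_norm)
    return [p - lr * g for p, g in zip(params, clipped)]

# Toy objective f(w, b) = 0.5 * ||w||^2 + 0.5 * b^2, whose gradients are simply (w, b).
params = [np.array([30.0, -40.0]), np.array([0.0])]
for _ in range(100):
    params = sgd_step(params, grads=[params[0], params[1]])
```

While the gradient norm exceeds `max_norm`, each step moves the parameters a fixed distance (`lr * max_norm`) in the gradient direction. A single huge gradient cannot throw training off.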

Paper address: https://arxiv.org/pdf/2210.05758.pdf

The researchers also proposed LocoProp, which achieves performance similar to second-order optimizers while using the same compute and memory resources as a first-order optimizer. LocoProp takes a modular view of neural networks and decomposes them into compositions of layers. Each layer can then have its own loss function, output target, and weight regularizer. With this setup, after a suitable forward and backward pass, LocoProp updates each layer's local loss in parallel. These updates can be shown, both theoretically and empirically, to resemble those of higher-order optimizers. On deep autoencoder benchmarks, LocoProp achieves performance comparable to higher-order optimizers while being faster.

Paper link: https://proceedings.mlr.press/v151/amid22a.html

Similar to backpropagation, LocoProp applies a forward pass to compute activations. In the backward pass, LocoProp sets per-neuron targets for each layer. Finally, LocoProp splits model training into independent per-layer problems, where several local updates can be applied to each layer's weights in parallel.
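The per-layer step in the caption can be sketched for a single linear layer with a squared local loss. Several choices here are illustrative assumptions: the target step size `gamma`, the proximal weight `lam`, plain gradient descent for the local steps, and the squared-loss form itself. LocoProp's other local-loss variants, for example those for nonlinear layers, are not reproduced.

```python
import numpy as np

def locoprop_style_update(x, w, grad_out, gamma=0.5, lam=1.0, lr=0.05, n_local_steps=10):
    """
    x: (n, d_in) layer inputs from the forward pass; w: (d_in, d_out) current weights;
    grad_out: (n, d_out) gradient of the global loss w.r.t. this layer's outputs (backward pass).
    """
    n = x.shape[0]
    target = x @ w - gamma * grad_out       # per-neuron targets: nudge outputs against the gradient
    w_new = w.copy()
    for _ in range(n_local_steps):          # several local steps, independent of other layers
        resid = x @ w_new - target
        grad = x.T @ resid / n + lam * (w_new - w)  # squared error + proximal regularizer
        w_new -= lr * grad
    return w_new, target

def local_loss(x, w_new, w, target, lam=1.0):
    n = x.shape[0]
    return 0.5 * np.sum((x @ w_new - target) ** 2) / n + 0.5 * lam * np.sum((w_new - w) ** 2)
```

Each layer's local problem depends only on its own inputs, weights, and targets. Given a single forward and backward pass, all layers can therefore run their local steps in parallel.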

Optimizers such as SGD rest on the core assumption that each data point is sampled independently and identically from a distribution. Unfortunately, this is hard to satisfy in real-world settings such as reinforcement learning, where the model (or agent) must learn from data generated by its own predictions. The researchers proposed a new SGD algorithm based on reverse experience replay, which can find optimal solutions in linear dynamical systems, nonlinear dynamical systems, and Q-learning. Furthermore, an enhanced version of this method, IER, has been shown to be the current state-of-the-art and most stable experience replay technique on a variety of popular RL benchmarks.
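The intuition behind replaying experience in reverse can be shown on a toy chain. Reward arrives only at the end, so processing transitions backward propagates it to earlier states in a single pass. This is a hypothetical tabular example with one action per state, not the paper's SGD algorithm for dynamical systems.

```python
import numpy as np

def make_chain_trajectory(n_states):
    """Hypothetical chain s -> s + 1; reward 1.0 only on the final transition into the terminal state."""
    return [(s, 1.0 if s == n_states - 1 else 0.0, s + 1, s == n_states - 1) for s in range(n_states)]

def replay(traj, n_states, gamma=0.9, alpha=1.0, reverse=True):
    """One pass of TD updates over a stored trajectory, in reverse or forward order."""
    q = np.zeros(n_states + 1)  # value of the single action in each state (+1 slot for terminal)
    order = reversed(traj) if reverse else traj
    for s, r, s_next, terminal in order:
        target = r + (0.0 if terminal else gamma * q[s_next])
        q[s] += alpha * (target - q[s])
    return q[:n_states]

traj = make_chain_trajectory(5)
q_reverse = replay(traj, 5, reverse=True)   # reward reaches state 0 in one pass
q_forward = replay(traj, 5, reverse=False)  # only the last state learns anything
```

In forward order, each update reads a successor value that has not been updated yet. In reverse order, every successor is already up to date, which is why reverse replay helps with temporally correlated data.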

Paper address: https://arxiv.org/pdf/2103.05896.pdf

Data Efficiency

In many tasks, deep neural networks rely heavily on large datasets. Beyond the storage costs and potential security and privacy issues that come with large datasets, training modern deep neural networks on them also incurs high computational costs. One promising way to address this is to select a subset of the data.

The researchers analyzed a subset selection framework designed to work with arbitrary model families in a practical batch setting. In this setting, the learner samples one example at a time and can access both its context and its true label. To limit overhead, however, its state (i.e., the model weights, via further training) can only be updated after a sufficiently large batch of examples has been selected. The researchers developed an algorithm called IWeS that selects examples by importance sampling. The sampling probability assigned to each example is based on the entropy of models trained on previously selected batches. The accompanying theoretical analysis provides bounds on the generalization error and the sampling rate.
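The following sketch shows entropy-based importance sampling in the spirit of IWeS. The exact probability formula is an assumption: predictive entropy normalized by log(number of classes), with a hypothetical floor `min_prob`. Selected examples receive weight 1/p, so a weighted loss over the selected subset stays an unbiased estimate of the full-data loss.

```python
import numpy as np

def entropy(probs):
    p = np.clip(probs, 1e-12, 1.0)
    return -(p * np.log(p)).sum(axis=-1)

def iwes_style_select(probs, rng, min_prob=0.05):
    """probs: (n, n_classes) predictions of a model trained on previously selected batches."""
    n_classes = probs.shape[-1]
    # Assumed rule: normalized entropy in [0, 1], floored so every example can still be picked.
    sample_prob = np.clip(entropy(probs) / np.log(n_classes), min_prob, 1.0)
    selected = rng.random(len(probs)) < sample_prob
    weights = np.where(selected, 1.0 / sample_prob, 0.0)  # importance weights for selected examples
    return selected, weights, sample_prob
```

Uncertain (high-entropy) examples are almost always labeled, while confident ones are rarely chosen but count for more when they are.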

Paper address: https://arxiv.org/pdf/2301.12052.pdf

Another problem with training large networks is that they can be highly sensitive to distribution shifts between the training data and the data seen at deployment. This is especially true when training data are limited and may not cover all deployment-time scenarios. A recent study hypothesized that "extreme simplicity bias" is the key issue behind this brittleness of neural networks. Building on that hypothesis, it combined two new complementary methods, DAFT and FRR, which together can yield significantly more robust neural networks. In particular, these two methods use adversarial fine-tuning together with inverse feature prediction to make the learned network more robust.
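The following sketch illustrates adversarial fine-tuning in its most generic form. It applies fast-gradient-sign (FGSM) perturbations to a logistic-regression model and takes one gradient step on the mixed clean and adversarial loss. DAFT and FRR themselves are not reproduced here. The model, `eps`, and learning rate are assumptions.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def logistic_loss(x, y, w, b):
    z = x @ w + b
    return np.logaddexp(0.0, z) - y * z       # per-example -log p(y | x), numerically stable

def fgsm(x, y, w, b, eps):
    """Fast-gradient-sign perturbation: move each input in the direction that increases its loss."""
    grad_x = (sigmoid(x @ w + b) - y)[:, None] * w[None, :]
    return x + eps * np.sign(grad_x)

def adversarial_finetune_step(x, y, w, b, eps=0.1, lr=0.1):
    """One gradient step on the average of clean and adversarial losses."""
    x_all = np.vstack([x, fgsm(x, y, w, b, eps)])
    y_all = np.concatenate([y, y])
    err = sigmoid(x_all @ w + b) - y_all       # d loss / d logit
    w = w - lr * x_all.T @ err / len(y_all)
    b = b - lr * err.mean()
    return w, b
```

For a linear model, the FGSM step shifts every logit against its label by `eps * ||w||_1`, so the perturbed loss is always higher. Training on those inputs discourages reliance on a few weakly supported features.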

Paper address: https://arxiv.org/pdf/2006.07710.pdf

Inference Efficiency

Increasing the size of a neural network has been shown to improve its predictive accuracy. Realizing these gains in the real world is challenging, however, because large models are very expensive to serve at inference time. This motivates strategies that improve serving efficiency without sacrificing accuracy. In 2022, the researchers studied different strategies toward this goal, especially those based on knowledge distillation and adaptive computation.

Distillation

Distillation is a simple and effective model compression method that greatly expands the potential applicability of large neural models. It has proven effective in a range of practical applications such as ads recommendation. Most use cases apply a basic distillation recipe directly to a given domain, with limited understanding of when and why it should work. Google's research this year examined tailoring distillation to specific settings and formally studied the factors that govern its success.
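The "basic recipe" mentioned above is commonly the soft-target loss: the student matches the teacher's temperature-softened distribution, mixed with ordinary cross-entropy on the true labels. The sketch below shows that standard formulation. `temperature`, `alpha`, and the T² scaling are the usual conventions, not settings from this research.

```python
import numpy as np

def log_softmax(z, t):
    z = z / t
    z = z - z.max(axis=-1, keepdims=True)
    return z - np.log(np.exp(z).sum(axis=-1, keepdims=True))

def distillation_loss(student_logits, teacher_logits, labels, temperature=2.0, alpha=0.5):
    """alpha * T^2 * KL(teacher_T || student_T) + (1 - alpha) * cross-entropy(labels, student)."""
    log_p_t = log_softmax(teacher_logits, temperature)
    log_p_s = log_softmax(student_logits, temperature)
    kl = np.sum(np.exp(log_p_t) * (log_p_t - log_p_s), axis=-1).mean()
    ce = -log_softmax(student_logits, 1.0)[np.arange(len(labels)), labels].mean()
    return alpha * temperature**2 * kl + (1 - alpha) * ce
```

The T² factor keeps the soft-target gradients on roughly the same scale as the hard-label term when the temperature changes.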

On the algorithmic side, the research developed an important way to reweight training examples by carefully modeling the noise in teacher labels. It also developed an efficient measure for subsampling the dataset to obtain teacher labels. In "Teacher Guided Training: An Efficient Framework for Knowledge Transfer", Google showed that the teacher need not passively annotate a fixed dataset. Instead, it can actively guide the selection of informative samples to annotate. This makes distillation stand out in limited-data or long-tail settings.

Paper address: https://arxiv.org/pdf/2208.06825.pdf

Google also studied new methods for distilling from cross-encoders (such as BERT) into factorized dual-encoders, an important setting for scoring the relevance of (query, document) pairs. The researchers explored the reasons for the performance gap between cross-encoders and dual-encoders. They noted that the gap may stem from generalization rather than from the capacity limitations of dual-encoders. Careful construction of the distillation loss function can mitigate this and narrow the gap between cross-encoder and dual-encoder performance. Subsequently, EmbedDistill further improved dual-encoder distillation by matching embeddings from the teacher model. This strategy can also be used to distill large dual-encoder models into small ones, where inheriting and freezing the teacher's document embeddings can prove very effective.
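The following sketch shows the factorized dual-encoder setup and an embedding-matching distillation loss. The loss form (score MSE plus query-embedding L2, weighted by a hypothetical `beta`) is an assumption for illustration, not EmbedDistill's exact objective. The student inherits the teacher's frozen document embeddings, so it only needs to learn query embeddings of the same dimension. In practice, `teacher_scores` could come from a cross-encoder.

```python
import numpy as np

def dual_encoder_scores(q_emb, d_emb):
    """Factorized scoring: documents are embedded offline and relevance is a dot product."""
    return q_emb @ d_emb.T                     # (n_queries, n_docs)

def embedding_distill_loss(student_q, teacher_q, teacher_d, teacher_scores, beta=1.0):
    """
    Hypothetical loss: match the teacher's relevance scores and its query embeddings.
    The student reuses (inherits) the frozen teacher document embeddings teacher_d.
    """
    student_scores = dual_encoder_scores(student_q, teacher_d)
    score_loss = np.mean((student_scores - teacher_scores) ** 2)
    embed_loss = np.mean(np.sum((student_q - teacher_q) ** 2, axis=-1))
    return score_loss + beta * embed_loss
```

Because document embeddings are reused, the large teacher's document index does not need to be rebuilt for the smaller student.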

Paper address: https://arxiv.org/pdf/2301.12005.pdf

On the theoretical side, the research offers a new perspective on distillation through the lens of supervision complexity, a measure of how well the student can predict the teacher's labels. Neural tangent kernel (NTK) theory provides conceptual insights here. The research further shows that distillation can lead the student to underfit points that the teacher model finds hard to model. Intuitively, this may help the student focus its limited capacity on samples that can be reasonably modeled.
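In a kernel view, a standard measure of how hard a labeling is for a kernel model is the quadratic form yᵀK⁻¹y. This is the squared RKHS norm of the minimum-norm interpolant. The sketch below assumes this form as a stand-in for supervision complexity; the paper's exact definition and normalization may differ. It also uses an RBF kernel instead of an NTK.

```python
import numpy as np

def rbf_kernel(x, gamma=0.5):
    sq = np.sum(x**2, axis=1)
    return np.exp(-gamma * (sq[:, None] + sq[None, :] - 2 * x @ x.T))

def supervision_complexity(K, y, ridge=1e-6):
    """y^T K^{-1} y: large when y varies sharply relative to the kernel's notion of similarity."""
    return float(y @ np.linalg.solve(K + ridge * np.eye(len(y)), y))
```

Labels aligned with large-eigenvalue (smooth) directions of K give small values, and labels concentrated on small-eigenvalue directions give large ones. This matches the intuition that smoother teacher labels can be easier for a student to fit.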

Paper address: https://arxiv.org/pdf/2301.12245.pdf

Adaptive Computation

While distillation is an effective way to reduce inference cost, it does so uniformly across all samples. Intuitively, however, some easy samples may inherently require less computation than hard ones. The goal of adaptive computation is to design mechanisms that enable such sample-dependent computation.

CALM (Confident Adaptive Language Modeling) introduces controlled early-exit functionality for Transformer-based text generators such as T5.

Paper address: https://arxiv.org/pdf/2207.07061.pdf

In this form of adaptive computation, the model dynamically modifies the number of Transformer layers used at each decoding step. The early exit gate uses a confidence measure with a decision threshold that is calibrated to meet statistical performance guarantees. This way, the model only needs to compute the full stack of decoder layers for the most challenging predictions. Simpler predictions only require computing a few decoder layers. In practice, the model uses about one-third as many layers on average to make predictions, resulting in 2-3x speedups while maintaining the same level of generation quality.

Text generation with a regular language model (top) and with CALM (bottom). CALM attempts to make early predictions. Once it is confident enough in the generated content (dark blue tint), it skips the remaining layers to save time.
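The early-exit loop described above can be sketched for a single decoding step: run decoder layers one by one, predict with a shared output head after each, and stop once the top-token probability reaches a threshold. In this sketch, `threshold` is a fixed hypothetical value, whereas CALM calibrates it to meet statistical guarantees. The softmax-response confidence is only one possible measure. The sketch also does not handle the states of skipped layers, which a real decoder must provide for later tokens. `layers` is assumed to be non-empty.

```python
import numpy as np

def softmax(z):
    z = z - z.max()
    e = np.exp(z)
    return e / e.sum()

def early_exit_decode_step(hidden, layers, head, threshold=0.9):
    """
    Run decoder layers one at a time; after each, predict with the shared output head and
    stop once the top-token probability (a softmax-response confidence) reaches `threshold`.
    """
    for depth, layer in enumerate(layers, start=1):
        hidden = layer(hidden)
        probs = softmax(head @ hidden)
        if probs.max() >= threshold:
            return int(probs.argmax()), depth   # confident: skip the remaining layers
    return int(probs.argmax()), len(layers)     # never confident: used the full stack
```

For example, with toy layers that double the hidden state and an identity head, the prediction becomes confident after two layers at threshold 0.9 and after four layers at 0.999. Raising the threshold trades speed for fidelity to the full model.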

A popular adaptive computation mechanism is a cascade of two or more base models. A key question when using cascades is whether to simply use the current model's prediction or to defer the prediction to a downstream model. Learning when to defer requires designing a suitable loss function that can use appropriate signals to supervise the deferral decision. To this end, the researchers formally studied existing loss functions and showed that they may underfit the training sample because they implicitly apply label smoothing. The research showed that this can be mitigated by post-hoc training of a deferral rule, which does not require modifying the model internals in any way.
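The following sketch shows a two-model cascade with a post-hoc deferral rule. The rule is a plain confidence threshold fit to a hypothetical deferral `budget`, a simple baseline rather than the researchers' method. Like any post-hoc rule, it leaves both models untouched. `large_predict` is a hypothetical callable standing in for the expensive model.

```python
import numpy as np

def fit_deferral_threshold(small_probs, budget=0.3):
    """Post-hoc rule: defer the `budget` fraction of examples where the small model is least confident."""
    return np.quantile(small_probs.max(axis=1), budget)

def cascade_predict(small_probs, large_predict, threshold):
    """Use the small model when confident enough; otherwise defer to the (expensive) large model."""
    preds = small_probs.argmax(axis=1)
    defer = small_probs.max(axis=1) < threshold
    if defer.any():
        preds[defer] = large_predict(np.nonzero(defer)[0])  # only deferred examples hit the large model
    return preds, defer
```

The budget directly controls cost, since only deferred examples reach the large model. A learned deferral rule would replace the raw confidence with a better signal of whether the small model is likely to be wrong.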

Paper address: https://openreview.net/pdf?id=_jg6Sf6tuF7

For retrieval applications, standard semantic search techniques use a fixed representation for the embeddings generated by large models. That is, the size and capability of the representation are essentially fixed, regardless of the downstream task and its associated compute environment or constraints. MRL (Matryoshka representation learning) adds the flexibility to adapt the representation to the deployment environment. Combined with standard approximate nearest neighbor search techniques such as ScaNN, MRL can deliver up to 16x lower compute while maintaining the same recall and accuracy metrics.
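The following sketch shows the adaptive retrieval pattern that nested embeddings enable. It shortlists candidates with a cheap low-dimensional prefix of each embedding, then re-ranks the shortlist with the full embedding. Several parts are assumptions. The random vectors are not MRL-trained, since MRL trains embeddings so that prefixes are meaningful on their own. Search is brute force rather than ScaNN. `low_dim` and `shortlist` are hypothetical settings.

```python
import numpy as np

def normalize(x):
    return x / np.linalg.norm(x, axis=-1, keepdims=True)

def matryoshka_search(query, db, low_dim=16, shortlist=20, k=5):
    """
    Two-stage retrieval with nested (Matryoshka-style) embeddings: shortlist with the first
    `low_dim` coordinates, then re-rank the shortlist with the full embedding.
    """
    coarse = normalize(db[:, :low_dim]) @ normalize(query[:low_dim])  # low_dim multiply-adds per item
    cand = np.argsort(-coarse)[:shortlist]
    fine = normalize(db[cand]) @ normalize(query)                       # full-dimensional re-ranking
    return cand[np.argsort(-fine)[:k]]

rng = np.random.default_rng(0)
db = rng.standard_normal((1000, 64))
result = matryoshka_search(db[42], db)
```

Most of the work happens in the coarse pass, which costs `low_dim` rather than the full dimension per database item. The full embedding is only used on the short candidate list.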

Paper address: https://openreview.net/pdf?id=9njZa1fm35
