Learning and thinking about graph computing

Good software is not discovered by program analysis and error detection, but is built by the right people.

Graphs have become an increasingly important computing object. The graph structure is an abstraction of group relationships and can describe rich objects and relationships. The core of graph computing is how to model data into a graph structure and how to transform the solution of the problem into a computing problem on the graph structure. When the problem involves correlation analysis, graph computing can often make the solution to the problem naturally expressed as a A series of operations and calculations on graph structures. For example, the PageRank algorithm based on the graph structure of web page links is used to obtain the weight of the web page, which is used as a reference for search engine ranking. The user behavior data of the graph structure is used to obtain accurate group preference analysis and personalized product recommendation results.

1. What is graph computing?

Graph computing is a technology that studies things in the human world and the relationships between things, and describes, depicts, analyzes and calculates them. The graph here is "graph", not the graph "image", which comes from graph theory in mathematics.

Graphs are the most flexible connection method, allowing entities to be connected without restrictions. Graph computing is not just a technology, but a way of understanding the world. Graph data can well describe the connections between things, including describing the direction and attributes of the connections. From the perspective of data structure, graph is a native expression of the relationship between things. To a certain extent, relational databases should be called table databases, while graph databases should be called relational databases. Broadly defined graph computing refers to various processing based on graph data, including graph databases.

Graph computing technology solves the problems of low efficiency and high cost of associated queries in traditional computing modes. It completely depicts relationships in the problem domain and has rich, efficient and agile data analysis capabilities. , its characteristics are as follows:

- Data model based on graph abstraction

- Parallel abstraction of graph data model

- Graph model system optimization

For graph computing, performance cost, fault tolerance mechanism and scalability are all very important.

2. Looking at graph computing from the perspective of historical development

Graph computing can be traced back to tree-structured databases in the 1960s, and attribute graph-oriented models and technologies appeared in the 1970s and 1980s. Such as LDM (logical data model), etc. Until 2007, the first commercial graph database Neo4j was established, marking that graph computing entered a stage of development.

The real beginning of graph computing research was in 2004 when Google developed MapReduce, a computing model for parallel processing of big data. The launch of this model brought a huge revolutionary impact to parallel processing of big data. Subsequently, in 2006, the Apache Hadoop team introduced the Hadoop Distributed File System (HDFS) and the new Hadoop MapReduce framework. In 2009, the AMP Lab at the University of California, Berkeley developed the Spark system.

Since 2010, graph computing research directions such as large-scale distributed architecture, multi-modal support, and graph query language design have gradually attracted attention. Google proposed Pregel, a distributed graph computing system designed for the characteristics of graph algorithms, following the BSP computing model; then the CMU Select Laboratory GraphLab project team proposed the GAS computing model. . Although pregel and GraphLab are both processing frameworks for complex machine learning calculations and are used for iteration calculations, their implementation methods take different paths: Pregel is based on a large-block message passing mechanism, and GraphLab is based on The memory sharing mechanism has had a profound impact on the subsequent design of other graph computing systems.

Google proposed the concept of knowledge graph in May 2012, which is a new way of connecting information. Its basic unit is the "entity-relationship-entity" triplet, and entities are connected through Relationships are interconnected to form a networked knowledge structure. The core of the establishment of knowledge graph is the knowledge reasoning mechanism of computers, and graph computing provides important underlying technical support for it.

In 2015, with the rapid growth of data volume, the application market gradually opened up, and the demand for the scalability and efficiency of graph computing systems continued to increase. Academic and industrial research in the field of graph computing in China has begun to gradually develop its own graph computing systems and platforms, such as Tsinghua University's Gemini and so on.

In recent years, with the development of artificial intelligence technology, graph neural networks have also shown their talents in the industry.

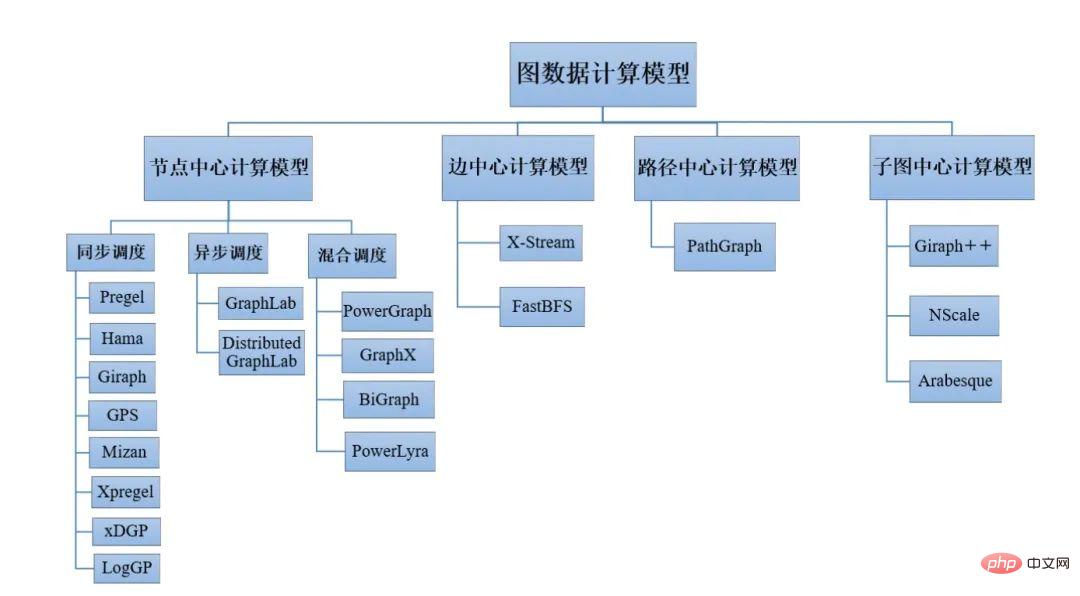

3. Looking at graph computing from the framework model

The graph computing framework basically follows the BSP (Bulk Synchronous Parallell) computing model. The unique feature of the BSP mode bulk synchrony mechanism lies in the introduction of the superstep concept. A calculation process consists of a series of global supersteps. Each superstep includes three stages: parallel calculation (local computation), global communication (non-local data communication), and fence synchronization (waiting for the end of communication behavior).

The BSP mode has the following characteristics:

Divides calculations into supersteps one by one, effectively avoiding deadlocks;

Separates the processor and router, emphasizing The separation of computing tasks and communication tasks, while routers only complete point-to-point message delivery and do not provide functions such as assembly, replication, and broadcast, not only conceal the specific interconnection network topology, but also simplify the communication protocol;

Adopt synchronous mode to implement global synchronization and controllable coarse-grained level in hardware to execute tightly coupled synchronous parallel algorithms.

Some representative graph computing frameworks are as follows:

- Neo4j-APOC: Based on the graph database, it supports some basic graph algorithms , the distributed version is not open source.

- Pregel: Google proposed it in 2009 and is the founder of the graph computing model. Many subsequent works have been influenced by its ideas. Not open source.

- Giraph: Facebook’s open source implementation based on Pregel’s ideas.

- Gemini: Tsinghua University has implemented a number of improvements based on Pregel ideas, with excellent performance. Only free demo is provided, the commercial version is not open source.

- KnightKing: A graph computing framework specially designed for Walker walking algorithms, which is not universal.

- GraphX: A graph computing framework implemented by the Apache Foundation based on Spark, with high community activity.

- GraphLab (PowerGraph): commercial software, not open source. Has been acquired by Apple.

- Plato: Tencent’s C open source implementation based on the ideas of Gemini and KnightKing. It is a high-performance, scalable, and easy-to-plug graph computing framework.

4. Looking at graph calculations from the perspective of algorithms

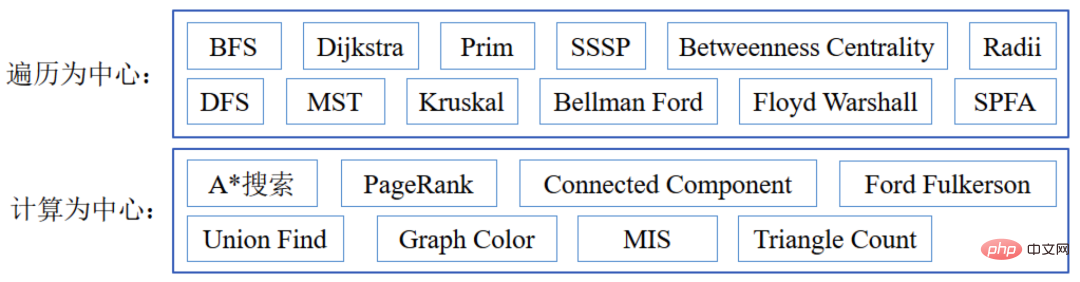

Graph algorithm refers to a simple method that uses specific vertices and edges to find answers, such as undirected graphs, directed graphs and networks Can use many commonly used graph algorithms. For graph data, traversal algorithms (depth/breadth first) are the basis for other algorithms. Typical graph algorithms include PageRank, shortest path, connected branch, maximum independent set, minimum spanning tree, and Bayesian Belief Propagation. The minimum spanning tree of a graph often represents the lowest cost or minimum cost in life, and Prim's algorithm and Kruskal's algorithm are commonly used. Community discovery, shortest path, topological sorting, and critical path also have corresponding algorithms.

Graph algorithms include diverse data analysis technologies such as search, matching, classification, and evaluation. They can be roughly divided into two categories from the algorithm structure dimension: traversal-centered algorithms and calculation-centered algorithms. Traversal-centric algorithms need to traverse the graph from specific vertices in a specific way, and there is a large amount of random access. Calculation-centered algorithms require a large number of operations in an iteration cycle, and have relatively good data locality.

5. Looking at graph computing from the perspective of computer architecture

Graph computing is generally a data-driven calculation, and the computing structure cannot be accurately predicted before running. , there is no obvious pattern in the form, and it is difficult to divide it efficiently and with high quality. Existing caching mechanisms can often only speed up data access with good locality, and access to large amounts of data will frequently put the processor in a state of waiting for I/O.

The load of graph computing is complex, and there is no single most representative graph computing load. The edges connecting vertices are only a small subset of countless possible connections and are highly irregular. In the process of graph computing, the spatiotemporal locality of reading and writing is difficult to grasp, and the bandwidth occupancy is difficult to predict.

Most algorithms may occupy less than 50% of the memory bandwidth. What limits the utilization of memory bandwidth? The processor needs to obtain instructions, there is space between instruction windows, and the register operands need to wait until the operands are available, and the related dependencies will not be released. Due to the high instruction hit rate, the parallelism at the memory level may be reduced, making it difficult to fully utilize the memory bandwidth of the platform. A low cache data usage ratio means that it is difficult for applications to benefit from spatial locality, and the data prefetching strategy will be ineffective. Data prefetching generally helps improve performance, but it also generates a large number of useless prefetch operations. For applications with limited memory bandwidth or cache capacity, data prefetching may cause a certain waste of resources. In the case of multi-threaded computing, triggering remote memory access with higher latency will also offset the benefits of multi-threading.

What kind of processor core is needed for graph computing? Generally, an architecture with many small computing cores and a high number of threads is used, which is suitable for processing large-graph calculations that traditional multi-core processors are not good at. In the concurrent calculation of multiple graphs, there are two strategies: shared allocation and exclusive allocation. The shared allocation strategy means that each of the m requests is processed in parallel using n logical cores, and the OS manages the switching of different requests on the logical cores. The exclusive allocation strategy refers to allocating n/m logical cores to each request so that the logical cores do not need to switch between tasks. The exclusive allocation strategy is more suitable for concurrent graph calculations. Exclusive can usually reduce the overall running time for the same concurrent requests. The low contention of the reorder cache may be the reason why the exclusive strategy is better than the shared strategy in concurrent graph computing scenarios.

As far as the power consumption generated by graph calculation is concerned, load changes lead to system power fluctuations, and peak and valley staggered situations will occur. Increasing concurrent tasks will change the peak-to-trough ratio and increase power consumption. Generally speaking, in terms of CPU power consumption, calculation-centered algorithms consume a large amount of energy per instruction on average, while traversal-centered algorithms have the opposite effect; in terms of memory power consumption, calculation-centered algorithms consume less memory. The average energy consumption is small, and the opposite is true for traversal-centric algorithms.

Most graph computing-based applications are memory-limited, but there are also insufficient memory utilization caused by limitations of core components. Enough active threads create concurrent access, which may improve utilization. More threads are needed, but due to the imbalance among threads, they may not be used efficiently. A more scalable parallel strategy needs to be provided to optimize the high-bandwidth memory usage of multi-core processors. Power consumption and energy consumption behavior are different from the instruction perspective and the vertex calculation perspective, requiring precise power management methods, and extensive adjustments may not be effective.

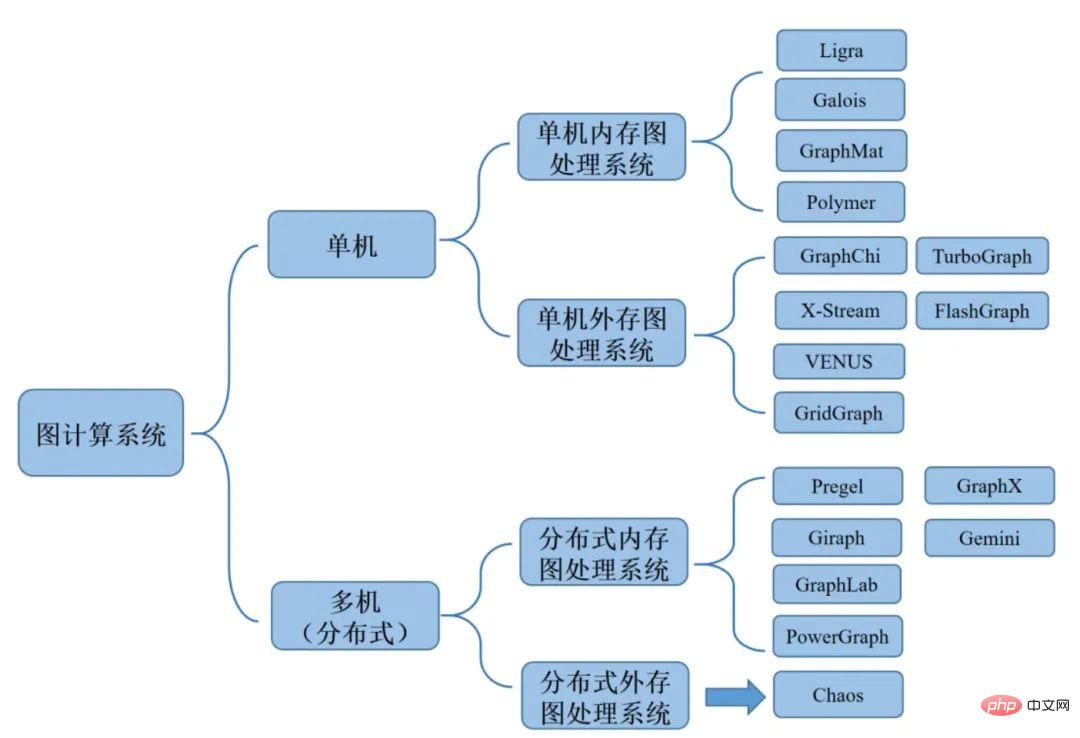

6. View graph calculation from the system

Based on the usage scenarios of large-scale graph computing systems and the differences in computing platform architecture, they can be divided into single-machine memory graph computing systems and single-machine external memory graph systems. Computing system, distributed memory graph computing system and distributed external memory graph computing system.

The single-machine memory graph processing system is a graph processing system that runs in a single-machine environment and buffers all graph data into the memory. The stand-alone external memory graph processing system is an efficient graph algorithm in which the graph processing system runs in a stand-alone environment and continuously interacts with the memory and disk through calculation of graph data. The distributed memory system is a graph processing system running in a distributed cluster environment, and all graph data is loaded into the memory. The distributed external memory graph computing system expands single machine out-of-core systems into clusters and can handle graphs with edges on the order of trillions.

7. Looking at graph computing from AI

The graph neural network (GNN) generated by the fusion of AI and graph computing is currently a rapidly developing and important field. How to combine the relationship data between various entities with neural networks? Graph neural network uses representation learning. Through the structure of the graph, each node or edge is first represented by a vector, and then further processed using a neural network. This expands the scope of neural network use and introduces the relationship between entities into AI processing.

Graph neural network can be seen as a learning process of graph features, such as representative features of nodes or graph-level representative features. Generally, it takes the properties of the graph and the structure of the graph as input and outputs a set of updated nodes. express. Generally this process is also called graph filtering operation. Graph filtering updates node features but does not change the structure of the graph. The development of graph neural networks is developed from different theoretical motivations. For example, if GNN is regarded as a convolution generalization of non-Euclidean distance, it is developed based on graph signals; now most GNNs are based on neural message passing methods. Message passing algorithm proposed through analogy to probabilistic reasoning in graphical models.

Whether it is a spectral method or a space-based idea, the graph neural network can finally be unified into a framework based on message passing. The basic idea of the GNN message passing framework is that at each iteration, each node aggregates the information of its neighbor nodes. As the number of iterations increases, each node contains a larger range of information on the graph. For example, after k iterations, the central node can obtain information about its k-hop neighbors. The key idea is to generate node representations based on graph structure and known feature information. GNN uses the structure and node feature information on the graph to generate deep embedding representations, while the traditional graph embedding method only uses the structural information of the graph to generate layer embeddings through table lookup.

7.1 GNN VS MLP/CNN/RNN

Node neighbors in graph data have two characteristics, one is the indefinite number, and the other is the uncertain order, so MLP/ CNN/RNN cannot directly handle such non-Euclidean data and can only be modeled with GNN. In fact, GNN can be regarded as a more general model. For example, RNN is equivalent to GNN on a linear graph, and Transformer is equivalent to GNN on a complete graph.

7.2 GNN VS Graph Embedding

Many Graph Embedding methods have emerged before GNN and are widely used in the vector recall phase of search services. This type of method Designed inspired by Word2vec, from the original Item2Vec to Node2Vec based on the improvement of balancing homogeneity and structure, to MetaPath2Vec based on the improvement of the heterogeneity of the graph, and the introduction of attribute data to alleviate the sparsity of behavioral data, these methods have all Follow the Skip-Gram paradigm.

Compared with these methods, GNN can be trained end-to-end in combination with the target task, while Graph Embedding is more like pre-training, and the Embedding it learns is not necessarily related to the target task, especially in the sample size In huge business scenarios, the Embedding obtained by end-to-end training is more effective than the Embedding obtained by pre-training.

The hierarchical network structure of GNN is easy to combine with other deep learning technologies, such as GCN Attentinotallow=GAT. GNN can be applied to Inductive tasks, that is, when the structure of the graph changes and some new nodes are added, the model needs to be retrained if it is a Graph Embedding method, and GNN can use a method similar to GraphSage Node-Wise Sampling, using the already The trained model directly infers new nodes, and a variety of features can be used in the message passing process.

7.3 GNN VS Feature Concat & Collaborative Filtering & Proximity Loss

Feature Concat means splicing features together and then learning first-order attribute association information through feature intersection . Collaborative Filtering can also learn first-order behavioral correlation information through user historical behaviors. Proximity Loss means adding regular terms to the loss function to make adjacent nodes more similar, but on the one hand it is an implicit way, on the other hand if you want to ensure that high-order similarity relationships are learned, you need to add more complex The K-order regular term is also one of the starting points when GCN was proposed. Compared with these three methods, GNN can learn high-order correlation information explicitly by stacking multiple layers.

There is a key condition that must be met in the design of graph neural networks, which is permutation invariance or permutation equivariance. That is, the designed function is not affected by the order of nodes or the order of input when processing graph data. Transform domain outputs are in the same order.

8. Summary

Graph is the most flexible connection method, allowing entities to be connected without restrictions. Graph computing is a field that studies how to efficiently calculate, store and manage graph data in large amounts of data. It can be applied to a wide range of business scenarios, such as capital market risk management, life science research, healthcare delivery, monitoring and responding to road accidents. , intelligent infrastructure management, etc. Graph computing that efficiently processes large-scale data can promote the development of emerging application fields such as social network analysis, semantic web analysis, biological information network analysis, and natural language processing.

[Reference Materials]

"Graph Computing of Artificial Intelligence", Tsinghua University Artificial Intelligence Research Institute, Beijing Zhiyuan Artificial Intelligence Research Institute, Tsinghua-Engineering Academy of Engineering Knowledge Intelligence Joint Research Center, 2019- 2

https://www.php.cn/link/c9e5c2b59d98488fe1070e744041ea0e

https://www.php.cn /link/d40d35b3063c11244fbf38e9b55074be

##https://www.php.cn/link/315f006f691ef2e689125614ea22cc61

https ://www.php.cn/link/51d1cd3a02276948f566e6ea0a7d78cb

The above is the detailed content of Learning and thinking about graph computing. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1386

1386

52

52

Use ddrescue to recover data on Linux

Mar 20, 2024 pm 01:37 PM

Use ddrescue to recover data on Linux

Mar 20, 2024 pm 01:37 PM

DDREASE is a tool for recovering data from file or block devices such as hard drives, SSDs, RAM disks, CDs, DVDs and USB storage devices. It copies data from one block device to another, leaving corrupted data blocks behind and moving only good data blocks. ddreasue is a powerful recovery tool that is fully automated as it does not require any interference during recovery operations. Additionally, thanks to the ddasue map file, it can be stopped and resumed at any time. Other key features of DDREASE are as follows: It does not overwrite recovered data but fills the gaps in case of iterative recovery. However, it can be truncated if the tool is instructed to do so explicitly. Recover data from multiple files or blocks to a single

Open source! Beyond ZoeDepth! DepthFM: Fast and accurate monocular depth estimation!

Apr 03, 2024 pm 12:04 PM

Open source! Beyond ZoeDepth! DepthFM: Fast and accurate monocular depth estimation!

Apr 03, 2024 pm 12:04 PM

0.What does this article do? We propose DepthFM: a versatile and fast state-of-the-art generative monocular depth estimation model. In addition to traditional depth estimation tasks, DepthFM also demonstrates state-of-the-art capabilities in downstream tasks such as depth inpainting. DepthFM is efficient and can synthesize depth maps within a few inference steps. Let’s read about this work together ~ 1. Paper information title: DepthFM: FastMonocularDepthEstimationwithFlowMatching Author: MingGui, JohannesS.Fischer, UlrichPrestel, PingchuanMa, Dmytr

How to use Excel filter function with multiple conditions

Feb 26, 2024 am 10:19 AM

How to use Excel filter function with multiple conditions

Feb 26, 2024 am 10:19 AM

If you need to know how to use filtering with multiple criteria in Excel, the following tutorial will guide you through the steps to ensure you can filter and sort your data effectively. Excel's filtering function is very powerful and can help you extract the information you need from large amounts of data. This function can filter data according to the conditions you set and display only the parts that meet the conditions, making data management more efficient. By using the filter function, you can quickly find target data, saving time in finding and organizing data. This function can not only be applied to simple data lists, but can also be filtered based on multiple conditions to help you locate the information you need more accurately. Overall, Excel’s filtering function is a very practical

Google is ecstatic: JAX performance surpasses Pytorch and TensorFlow! It may become the fastest choice for GPU inference training

Apr 01, 2024 pm 07:46 PM

Google is ecstatic: JAX performance surpasses Pytorch and TensorFlow! It may become the fastest choice for GPU inference training

Apr 01, 2024 pm 07:46 PM

The performance of JAX, promoted by Google, has surpassed that of Pytorch and TensorFlow in recent benchmark tests, ranking first in 7 indicators. And the test was not done on the TPU with the best JAX performance. Although among developers, Pytorch is still more popular than Tensorflow. But in the future, perhaps more large models will be trained and run based on the JAX platform. Models Recently, the Keras team benchmarked three backends (TensorFlow, JAX, PyTorch) with the native PyTorch implementation and Keras2 with TensorFlow. First, they select a set of mainstream

Slow Cellular Data Internet Speeds on iPhone: Fixes

May 03, 2024 pm 09:01 PM

Slow Cellular Data Internet Speeds on iPhone: Fixes

May 03, 2024 pm 09:01 PM

Facing lag, slow mobile data connection on iPhone? Typically, the strength of cellular internet on your phone depends on several factors such as region, cellular network type, roaming type, etc. There are some things you can do to get a faster, more reliable cellular Internet connection. Fix 1 – Force Restart iPhone Sometimes, force restarting your device just resets a lot of things, including the cellular connection. Step 1 – Just press the volume up key once and release. Next, press the Volume Down key and release it again. Step 2 – The next part of the process is to hold the button on the right side. Let the iPhone finish restarting. Enable cellular data and check network speed. Check again Fix 2 – Change data mode While 5G offers better network speeds, it works better when the signal is weaker

The vitality of super intelligence awakens! But with the arrival of self-updating AI, mothers no longer have to worry about data bottlenecks

Apr 29, 2024 pm 06:55 PM

The vitality of super intelligence awakens! But with the arrival of self-updating AI, mothers no longer have to worry about data bottlenecks

Apr 29, 2024 pm 06:55 PM

I cry to death. The world is madly building big models. The data on the Internet is not enough. It is not enough at all. The training model looks like "The Hunger Games", and AI researchers around the world are worrying about how to feed these data voracious eaters. This problem is particularly prominent in multi-modal tasks. At a time when nothing could be done, a start-up team from the Department of Renmin University of China used its own new model to become the first in China to make "model-generated data feed itself" a reality. Moreover, it is a two-pronged approach on the understanding side and the generation side. Both sides can generate high-quality, multi-modal new data and provide data feedback to the model itself. What is a model? Awaker 1.0, a large multi-modal model that just appeared on the Zhongguancun Forum. Who is the team? Sophon engine. Founded by Gao Yizhao, a doctoral student at Renmin University’s Hillhouse School of Artificial Intelligence.

Tesla robots work in factories, Musk: The degree of freedom of hands will reach 22 this year!

May 06, 2024 pm 04:13 PM

Tesla robots work in factories, Musk: The degree of freedom of hands will reach 22 this year!

May 06, 2024 pm 04:13 PM

The latest video of Tesla's robot Optimus is released, and it can already work in the factory. At normal speed, it sorts batteries (Tesla's 4680 batteries) like this: The official also released what it looks like at 20x speed - on a small "workstation", picking and picking and picking: This time it is released One of the highlights of the video is that Optimus completes this work in the factory, completely autonomously, without human intervention throughout the process. And from the perspective of Optimus, it can also pick up and place the crooked battery, focusing on automatic error correction: Regarding Optimus's hand, NVIDIA scientist Jim Fan gave a high evaluation: Optimus's hand is the world's five-fingered robot. One of the most dexterous. Its hands are not only tactile

The first robot to autonomously complete human tasks appears, with five fingers that are flexible and fast, and large models support virtual space training

Mar 11, 2024 pm 12:10 PM

The first robot to autonomously complete human tasks appears, with five fingers that are flexible and fast, and large models support virtual space training

Mar 11, 2024 pm 12:10 PM

This week, FigureAI, a robotics company invested by OpenAI, Microsoft, Bezos, and Nvidia, announced that it has received nearly $700 million in financing and plans to develop a humanoid robot that can walk independently within the next year. And Tesla’s Optimus Prime has repeatedly received good news. No one doubts that this year will be the year when humanoid robots explode. SanctuaryAI, a Canadian-based robotics company, recently released a new humanoid robot, Phoenix. Officials claim that it can complete many tasks autonomously at the same speed as humans. Pheonix, the world's first robot that can autonomously complete tasks at human speeds, can gently grab, move and elegantly place each object to its left and right sides. It can autonomously identify objects