Nature issued an article: The speed of basic scientific innovation has slowed down and has entered the 'incremental era'

In the past few decades, the number of scientific and technological research papers published worldwide has increased dramatically. But based on an analysis of the papers and previous literature, scientists found that the "disruptiveness" of these papers was declining sharply.

Data from millions of manuscripts show that, compared with the mid-20th century, research completed in the 21st century is more likely to advance science "step by step" than to chart a new direction and make previous work obsolete. An analysis of patents from 1976 to 2010 shows the same trend.

The report was published in the journal Nature on January 4. "These data suggest that something is changing," said Russell Funk, a sociologist at the University of Minnesota and co-author of the analysis. "The intensity of the disruptive discoveries that were there is no longer there."

What does the number of citations indicate?

Although the last century witnessed an unprecedented expansion of scientific and technological knowledge, there are concerns that innovative activity is slowing down. Papers, patents, and even grant applications have become less novel relative to previous work and less likely to connect different areas of knowledge. In addition, the gap between the year a discovery is made and the year it is awarded a Nobel Prize is growing, suggesting that today's contributions are less significant than those of the past.

This slowdown in innovation requires rigorous analysis and explanation. The report's authors reasoned that if a study is highly disruptive, subsequent studies are more likely to cite the study itself and less likely to cite the works it references.

So the researchers analyzed 25 million papers (1945-2010) in the Web of Science (WoS) and 3.9 million patents (1976-2010) in the United States Patent and Trademark Office (USPTO) PatentsView database to investigate the slowdown. The WoS data include 390 million citations, 25 million paper titles, and 13 million abstracts; the PatentsView data include 35 million citations, 3.9 million patent titles, and 3.9 million abstracts. They then applied the same analysis to four additional datasets (JSTOR, the American Physical Society corpus, Microsoft Academic Graph, and PubMed) containing 20 million papers.

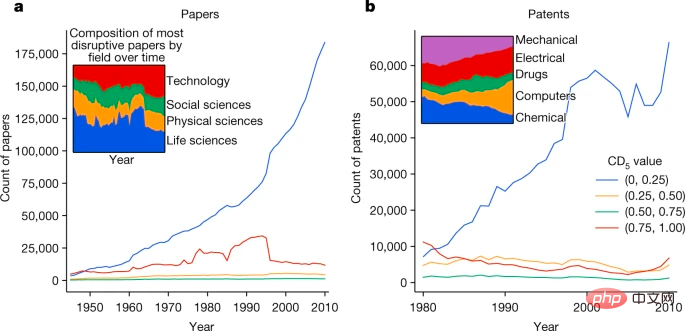

Using citation data from these 45 million manuscripts and 3.9 million patents, the researchers calculated a measure of disruptiveness called the "CD index", whose values range from -1 for the least disruptive work to 1 for the most disruptive.
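The article does not give the formula, so the following is only a minimal sketch of how such an index can be computed, assuming the commonly cited formulation: later works that cite the focal paper alone count as +1, those that cite both the focal paper and its references count as -1, and those that cite only the references count as 0, all averaged together. The function name `cd_index`, the data layout, and the toy paper IDs are hypothetical, and the time window that the study applies to later citations is omitted here.

```python
from typing import Dict, Set


def cd_index(focal: str, references: Set[str], later_works: Dict[str, Set[str]]) -> float:
    """Toy CD index for one focal work.

    focal       -- ID of the focal paper or patent (hypothetical IDs)
    references  -- IDs of the works the focal work cites
    later_works -- maps each later work's ID to the set of IDs it cites
    """
    focal_only = 0  # cites the focal work but none of its references
    both = 0        # cites the focal work and at least one of its references
    refs_only = 0   # cites the references but not the focal work

    for work_id, cited in later_works.items():
        if work_id == focal:
            continue
        cites_focal = focal in cited
        cites_refs = bool(cited & references)
        if cites_focal and cites_refs:
            both += 1
        elif cites_focal:
            focal_only += 1
        elif cites_refs:
            refs_only += 1
        # Works citing neither the focal work nor its references are ignored.

    total = focal_only + both + refs_only
    if total == 0:
        raise ValueError("no later work cites the focal work or its references")
    return (focal_only - both) / total


# Toy example: two later works cite only F, one cites F and R1, one cites only R2.
example = {
    "A": {"F"},
    "B": {"F"},
    "C": {"F", "R1"},
    "D": {"R2"},
}
print(cd_index("F", {"R1", "R2"}, example))  # (2 - 1) / 4 = 0.25
```

A result near 1 means later work cites the focal paper while ignoring what came before it (disruptive); a result near -1 means later work keeps citing the focal paper together with its predecessors (consolidating).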

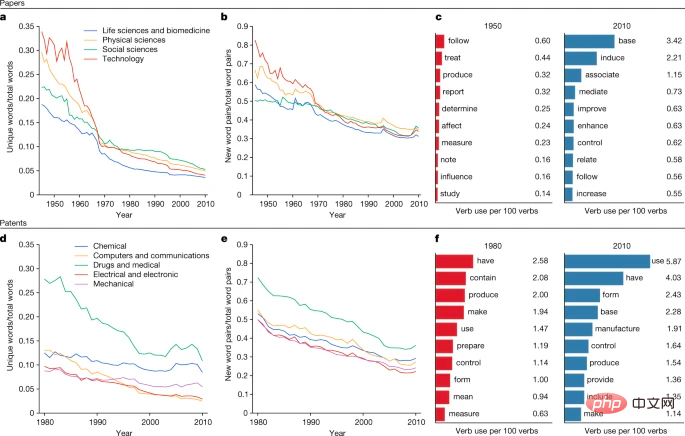

The average CD index of research manuscripts fell by more than 90% from 1945 to 2010, and the average CD index of patents fell by more than 78% from 1980 to 2010. Disruptiveness declined across all research fields and patent types analyzed, even after accounting for potential differences in factors such as citation conventions.

Changes in Language Habits

The authors also analyzed the most commonly used verbs in the manuscripts and found that studies from the 1950s were more likely to use words evoking creation or discovery, such as "generate" or "determine," whereas studies in the 2010s were more likely to refer to incremental progress, using words such as "improve" or "enhance."

"It's great that this phenomenon can be documented in such a detailed way," says Dashun Wang, a computational social scientist at Northwestern University in Evanston, Illinois. "They looked at this problem in 100 different ways, and I think it is generally very convincing."

The decline of disruptive science and technology in papers and patents can also be seen in changes in language.

Other research shows that scientific innovation has also slowed in recent decades, said Yian Yin, a computational social scientist also at Northwestern University. But the study provides "a new starting point for studying how science changes in a data-driven way," he added.

Dashun Wang said that disruption is not necessarily good in itself, nor is incremental science necessarily bad. As an example, he noted that the first direct observation of gravitational waves was both a revolutionary achievement and a product of incremental science.

The ideal situation is a healthy mix of incremental and disruptive research, says John Walsh, a technology policy expert at the Georgia Institute of Technology in Atlanta: "In a world where we care about the validity of research results, there's more replication and reproduction, and that can be a good thing."

What is slowing down innovation?

What exactly caused the decline of disruption?

John Walsh says it's important to understand the reasons for this dramatic change, and part of it may stem from changes in the scientific enterprise. For example, there are many more researchers today than there were in the 1940s, creating a more competitive environment that raises the stakes of publishing research and pursuing patents. This in turn changes the incentives for researchers to do their work. For example, large research teams have become more common, and Dashun Wang and colleagues found that large teams are more likely to produce incremental rather than disruptive science.

John Walsh said it's not easy to find an explanation for the decline. While the overall proportion of disruptive research fell significantly between 1945 and 2010, the amount of highly disruptive research remained essentially the same.

Data show that the emergence of highly disruptive research is not inconsistent with the slowdown in innovation.

At the same time, the rate of decline is puzzling. The CD index declined sharply from 1945 to 1970 and then more gradually from the late 1990s to 2010.

"Whatever explanation you have for the decline in disruption, you need to explain its plateauing in the 2000s," he said.
