Meta and CMU team up to launch an epic VR upgrade! The latest HyperReel model realizes high-fidelity 6 degrees of freedom video rendering

HyperReel, a 6-DoF video representation model recently proposed by Meta and Carnegie Mellon University, may signal that a new VR "killer app" is on the way.

So-called six-degrees-of-freedom (6-DoF) video is, simply put, ultra-high-definition 4D playback that the viewer experiences from the inside.

Users can fully immerse themselves in a dynamic scene and move around freely. When they arbitrarily change their head position (3 DoF) and orientation (3 DoF), the corresponding views are generated accordingly.

Paper address: https://arxiv.org/abs/2301.02238

Compared with previous work, HyperReel’s biggest advantage lies in memory and computing efficiency, both of which are crucial for portable VR headsets.

Using only vanilla PyTorch, HyperReel renders at megapixel resolution at 18 frames per second on a single NVIDIA RTX 3090.

TL;DR:

1. The researchers propose a ray-conditioned sample prediction network that enables high-fidelity, high-frame-rate rendering at high resolutions, as well as a compact and memory-efficient dynamic volume representation;

2. The 6-DoF video representation method HyperReel combines these two core parts to strike a balance between speed, quality and memory while rendering at megapixel resolution in real time;

3. HyperReel outperforms other methods in several respects, including memory requirements and rendering speed.

Paper introduction

Volumetric scene representations can provide realistic view synthesis for static scenes and form the foundation of existing 6-DoF video techniques.

However, the volume rendering programs that drive these representations require careful trade-offs in terms of quality, rendering speed, and memory efficiency.

Existing methods share a drawback: they cannot simultaneously achieve real-time performance, a small memory footprint and high-quality rendering, all of which are extremely important in challenging real-world scenarios.

To solve these problems, the researchers proposed HyperReel, a 6-DoF video representation method based on NeRF (Neural Radiance Fields).

The two core parts of HyperReel are:

1. A ray-conditioned sample prediction network, capable of high-fidelity, high-frame-rate rendering at high resolutions;

2. A compact and memory-efficient dynamic volume representation.

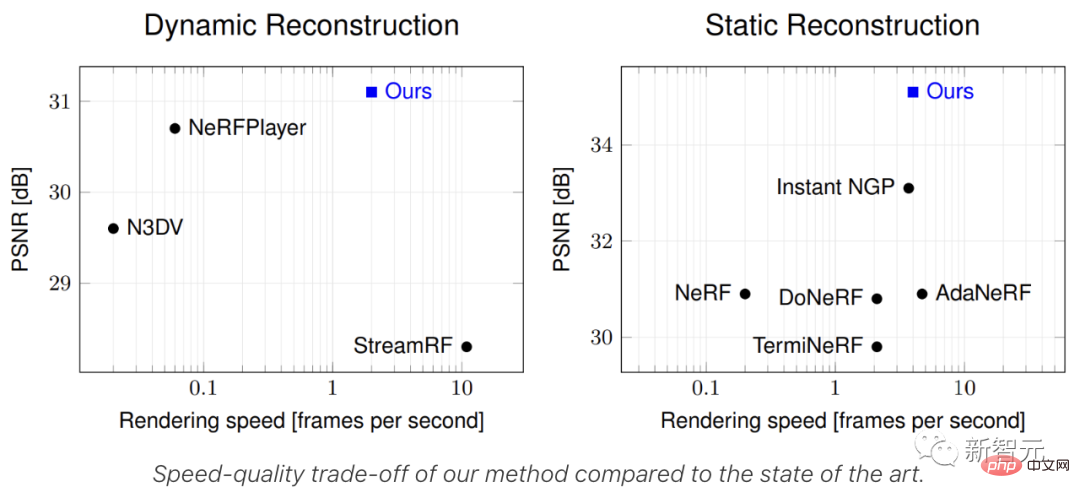

Compared to other methods, HyperReel’s 6-DoF video pipeline not only performs extremely well in terms of visual quality, but also requires very little memory.

At the same time, HyperReel can achieve a rendering speed of 18 frames/second at megapixel resolution without any custom CUDA code.

Specifically, HyperReel strikes a balance between rendering quality, speed and memory efficiency by combining a sample prediction network with a keyframe-based volumetric representation.

The sample prediction network can both accelerate volume rendering and improve rendering quality, especially for scenes with challenging view dependencies.

In terms of volume representation based on key frames, researchers use an extension of TensoRF.

This method can accurately represent a complete video sequence while consuming roughly the same memory as a single static frame TensoRF.

Real-time Demonstration

The following real-time demos show HyperReel rendering dynamic and static scenes at a resolution of 512×512 pixels.

It is worth noting that the researchers used smaller models for the Technicolor and Shiny scenes, so the rendering frame rate there exceeds 40 FPS. For the remaining datasets the full model is used, but HyperReel is still able to provide real-time inference.

Demo scenes: Technicolor, Shiny, Stanford, Immersive, DoNeRF.

Implementation method

To implement HyperReel, the first issue to consider is how to optimize the volume representation for static view synthesis.

Volume representations such as NeRF model the density and appearance of a static scene at each point in 3D space.

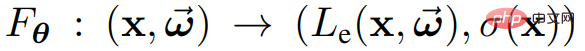

More specifically, a function $F_\theta : (x, \omega) \to (L_e(x, \omega), \sigma(x))$ maps the position $x$ and direction $\omega$ along a ray to a color $L_e(x, \omega)$ and a density $\sigma(x)$.

The trainable parameter θ here can be a neural network weight, an N-dimensional array entry, or a combination of both.

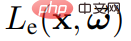

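Then you can render a new view of the static scene:

$$C(o, \omega) = \int_{t_n}^{t_f} T(o, x_t)\, \sigma(x_t)\, L_e(x_t, \omega)\, dt \tag{1}$$

where $L_e(x_t, \omega)$ is the color and $\sigma(x_t)$ the density at the point $x_t$ along the ray, and $T(o, x_t)$ represents the transmittance from $o$ to $x_t$.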

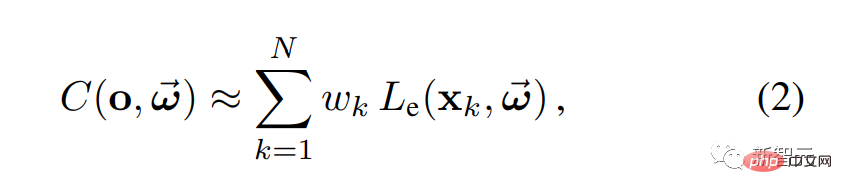

In practice, Equation 1 can be calculated by taking multiple sample points along a given ray and then using numerical quadrature:

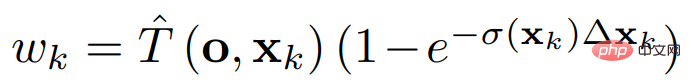

The weight $w_k$ specifies the contribution of the color of each sample point to the output.
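The quadrature step can be made concrete with a small sketch. It assumes the standard NeRF-style discretization, in which each weight is the accumulated transmittance times the opacity of the current segment; the function name `render_ray` and the toy densities and colors are hypothetical and are not taken from the HyperReel code.

```python
import math

def render_ray(densities, colors, deltas):
    """Approximate the volume rendering integral with a weighted sum.

    densities: density sigma at each sample along the ray
    colors:    (r, g, b) color at each sample
    deltas:    spacing between consecutive samples
    Returns the pixel color and the per-sample weights w_k.
    """
    transmittance = 1.0  # fraction of light that still reaches the current sample
    pixel = [0.0, 0.0, 0.0]
    weights = []
    for sigma, color, delta in zip(densities, colors, deltas):
        alpha = 1.0 - math.exp(-sigma * delta)  # opacity of this segment
        w = transmittance * alpha               # contribution of this sample
        weights.append(w)
        for c in range(3):
            pixel[c] += w * color[c]
        transmittance *= 1.0 - alpha            # light left after this segment
    return pixel, weights

# Toy ray: empty space, then a nearly opaque red surface hiding a blue one.
densities = [0.0, 0.0, 50.0, 50.0]
colors = [(0, 0, 0), (0, 0, 0), (1, 0, 0), (0, 0, 1)]
deltas = [0.25, 0.25, 0.25, 0.25]
pixel, weights = render_ray(densities, colors, deltas)
```

In this toy ray almost all of the weight falls on the first surface sample, so the samples in empty space and behind the surface contribute next to nothing to the pixel.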

Sample networks for volume rendering

For a static scene, HyperReel is given a set of images and camera poses, and the training goal is to reconstruct the measured color associated with each ray.

Most scenes are composed of solid objects whose surfaces lie on a 2D manifold within the 3D scene volume. In this case, only a small number of sample points affect the rendered color of each ray.

So, to speed up volume rendering, the researchers wanted to query color and opacity only at points where $w_k$ is non-zero.

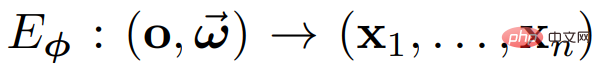

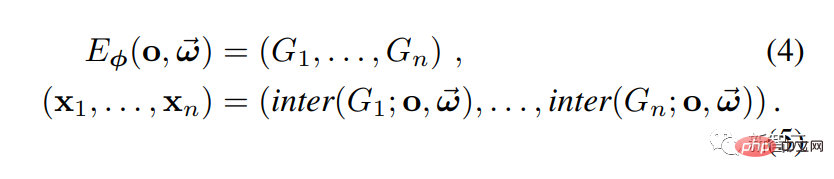

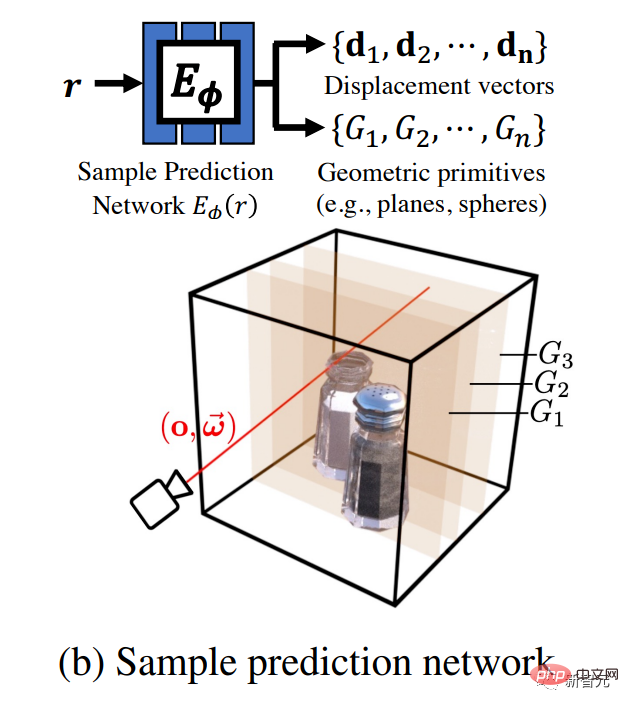

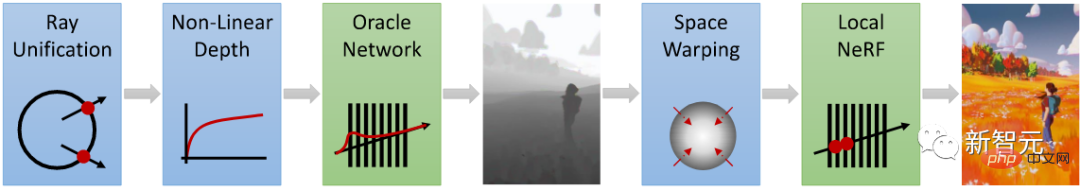

As shown in the figure below, the researchers use a feed-forward network to predict a set of sample locations $(x_1, \ldots, x_n)$. Specifically, the sample prediction network $E_\phi : (o, \omega) \to (x_1, \ldots, x_n)$ maps the ray $(o, \omega)$ to the sample points $x_k$ used for the volume rendering in Equation 2.

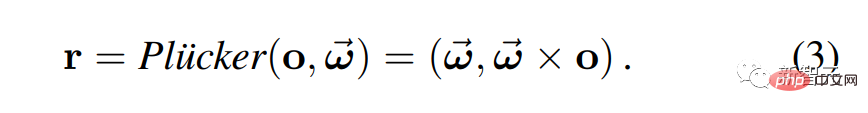

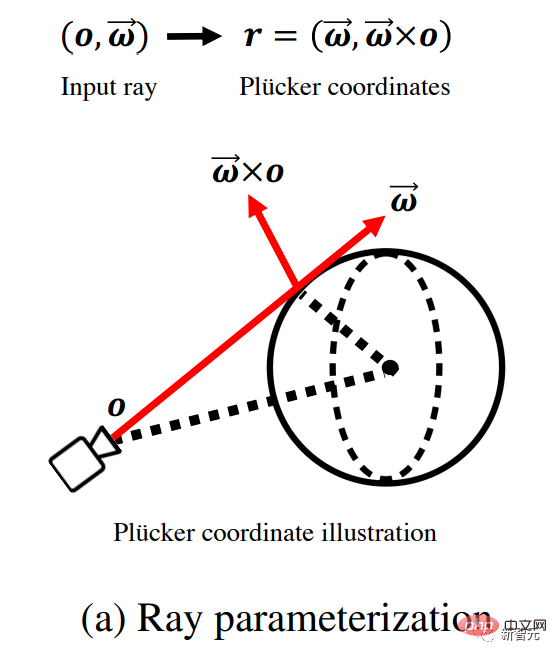

Here, the researchers used the Plücker parameterization to represent the ray.
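As a rough illustration of why this parameterization is convenient, the sketch below builds six-dimensional Plücker coordinates from a ray origin and direction. The helper names are hypothetical, and the moment is computed as `origin × direction`, which is one common convention; the sign and ordering used in the paper may differ.

```python
import math

def cross(a, b):
    """Cross product of two 3-vectors."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def normalize(v):
    """Scale a 3-vector to unit length."""
    n = math.sqrt(sum(c * c for c in v))
    return tuple(c / n for c in v)

def plucker(origin, direction):
    """Return (direction, moment) as a 6-tuple.

    The moment convention (origin x direction) is an assumption.
    """
    d = normalize(direction)
    m = cross(origin, d)
    return d + m

# Two different origins on the same line give the same coordinates.
ray_a = plucker((0.0, 0.0, 1.0), (1.0, 0.0, 0.0))
ray_b = plucker((5.0, 0.0, 1.0), (2.0, 0.0, 0.0))
```

Because the coordinates do not change when the origin slides along the ray, a network that takes them as input sees one consistent description of each ray regardless of where the camera sits on it.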

But there is a problem: giving the network too much flexibility may have a negative impact on the quality of view synthesis. For example, if (x1, . . . , xn) are completely arbitrary points, then the rendering may not look consistent across multiple views.

To solve this problem, the researchers use the sample prediction network to predict the parameters of a set of geometric primitives G1, ..., Gn, where the primitive parameters can vary depending on the input ray. To obtain sample points, the ray is intersected with each primitive.

As shown in Figure a, given an input ray originating from the camera origin o and propagating along the direction ω, the researchers first re-parameterize the ray using Plücker coordinates.

As shown in Figure b, a network takes this ray as input and outputs the parameters of a set of geometric primitives $\{G_k\}$ (such as axis-aligned planes and spheres) and displacement vectors $\{d_k\}$.

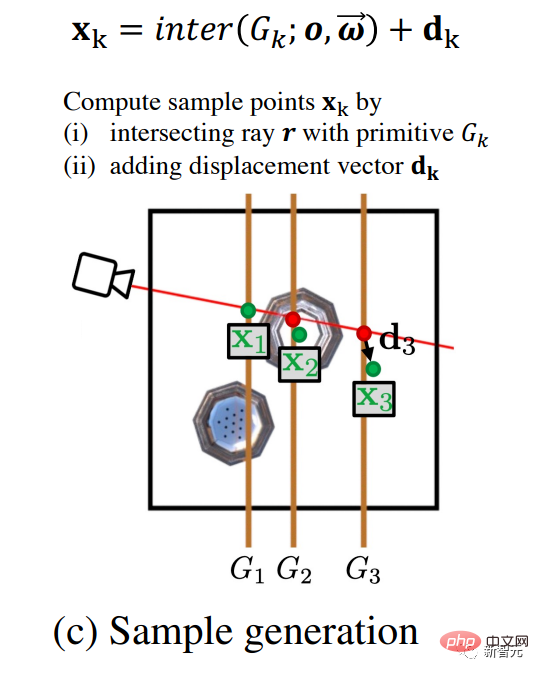

As shown in Figure c, to generate the sample points $\{x_k\}$ for volume rendering, the researchers compute the intersections between the ray and the geometric primitives and add the displacement vectors to the results. The advantage of predicting geometric primitives is that the sampled signal is smooth and easy to interpolate.

The displacement vectors give the sample points additional flexibility, enabling better capture of complex view-dependent appearance.

Finally, as shown in Figure d, the researchers perform volume rendering via Equation 2 to generate a pixel color, which is supervised during training against the corresponding observed color.
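The intersect-then-displace step described above can be sketched as follows. In HyperReel the primitive parameters and displacements come from the sample prediction network; here they are hard-coded hypothetical values, and all function names are illustrative only.

```python
import math

def intersect_axis_plane(origin, direction, axis, offset):
    """Return the point where the ray hits the plane p[axis] == offset, or None."""
    if abs(direction[axis]) < 1e-9:
        return None  # ray is parallel to the plane
    t = (offset - origin[axis]) / direction[axis]
    if t <= 0.0:
        return None  # plane lies behind the ray origin
    return tuple(o + t * d for o, d in zip(origin, direction))

def intersect_sphere(origin, direction, radius):
    """Return the nearest forward hit with an origin-centered sphere, or None."""
    a = sum(d * d for d in direction)
    b = 2.0 * sum(o * d for o, d in zip(origin, direction))
    c = sum(o * o for o in origin) - radius * radius
    disc = b * b - 4.0 * a * c
    if disc < 0.0:
        return None  # ray misses the sphere
    root = math.sqrt(disc)
    hits = [t for t in ((-b - root) / (2.0 * a), (-b + root) / (2.0 * a)) if t > 0.0]
    if not hits:
        return None
    t = min(hits)
    return tuple(o + t * d for o, d in zip(origin, direction))

def displace(point, displacement):
    """Add a per-sample displacement vector to an intersection point."""
    return tuple(p + d for p, d in zip(point, displacement))

origin, direction = (0.0, 0.0, 0.0), (0.0, 0.0, 1.0)
plane_offsets = [1.0, 2.0, 3.0]  # stand-ins for predicted z-plane parameters
displacements = [(0.1, 0.0, 0.0), (0.0, 0.0, 0.0), (0.0, -0.1, 0.0)]
samples = [displace(intersect_axis_plane(origin, direction, 2, z), d)
           for z, d in zip(plane_offsets, displacements)]
sphere_hit = intersect_sphere(origin, direction, 2.0)
```

Each primitive yields exactly one sample per ray, so the number of color and density queries is fixed by the number of predicted primitives rather than by a dense sweep along the ray.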

Dynamic volume based on key frames

Through the above method, the 3D scene volume can be effectively sampled.

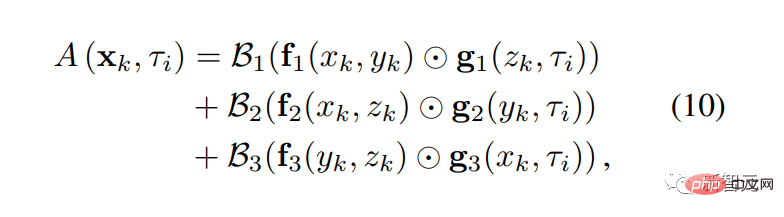

How is the volume itself represented? In the static case, the researchers use the memory-efficient Tensorial Radiance Fields (TensoRF) method; in the dynamic case, they extend TensoRF to a keyframe-based dynamic volume representation.
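To give a feel for why a factorized representation is memory-efficient, the sketch below compares parameter counts for a dense single-channel grid and a vector-matrix factorization in the style of TensoRF. The grid size and component count are hypothetical, and the count ignores details such as feature channels and the keyframe extension, so it is an order-of-magnitude illustration rather than HyperReel's actual footprint.

```python
def dense_grid_params(resolution):
    """Parameters in a dense single-channel voxel grid of size N^3."""
    return resolution ** 3

def vector_matrix_params(resolution, components):
    """Parameters in a vector-matrix factorization.

    For each of the 3 axes, every component stores one N x N plane
    and one length-N line.
    """
    return 3 * components * (resolution * resolution + resolution)

resolution = 256   # hypothetical grid size per axis
components = 16    # hypothetical number of components per axis
dense = dense_grid_params(resolution)
factored = vector_matrix_params(resolution, components)
ratio = dense / factored
```

The dense count grows cubically with resolution while the factorized count grows only quadratically, so the gap widens as the grid gets finer.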

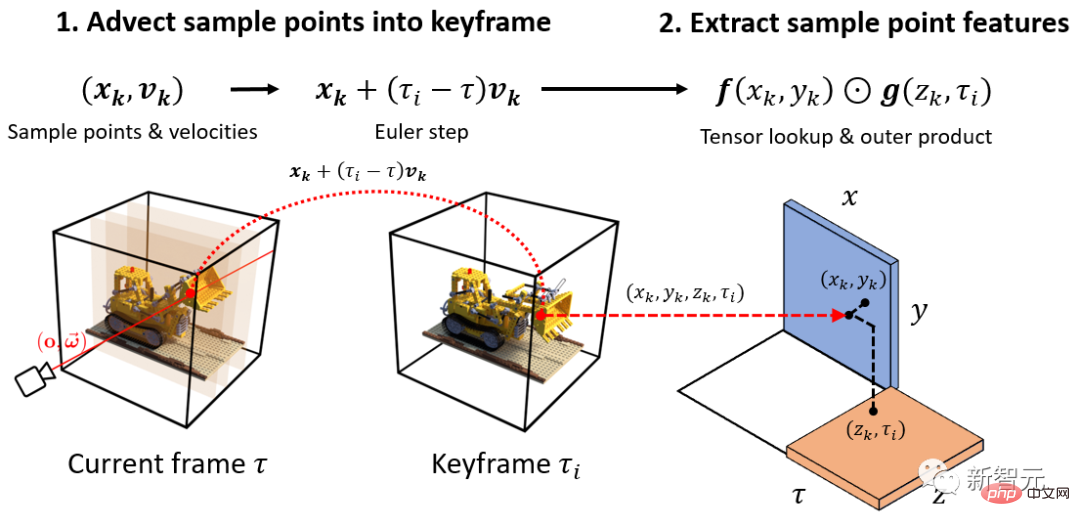

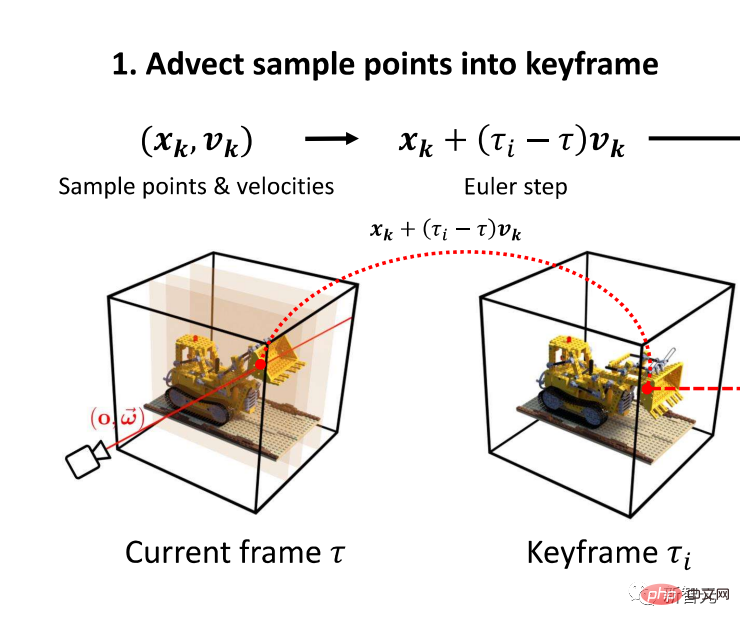

The following figure explains the process of extracting dynamic sample point representation from keyframe-based representation.

First, as shown in part 1 of the figure, the researchers use the velocities $\{v_k\}$ output by the sample prediction network to translate the sample points $\{x_k\}$ at time $\tau$ into the nearest keyframe $\tau_i$.

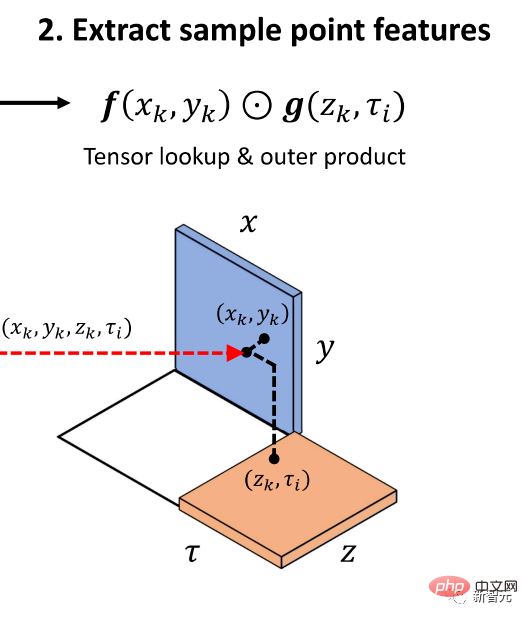

Then, as shown in part 2 of the figure, the researchers query the outer products of the spatiotemporal textures to produce the appearance features of each sample point, which are then converted to color via Equation 10.

The opacity of each sample is extracted through the same kind of process.
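The first of these steps, moving a sample into its nearest keyframe, can be sketched in a few lines. The sketch assumes linear motion, so a point is shifted by its velocity times the time offset to the keyframe; the function names and the example values are hypothetical.

```python
def nearest_keyframe(time, keyframe_times):
    """Pick the keyframe time closest to the query time."""
    return min(keyframe_times, key=lambda k: abs(k - time))

def warp_to_keyframe(point, velocity, time, keyframe_times):
    """Translate a sample point at `time` into its nearest keyframe.

    Assumes linear motion: the point moves by velocity * (key_time - time).
    """
    key_time = nearest_keyframe(time, keyframe_times)
    dt = key_time - time
    warped = tuple(p + v * dt for p, v in zip(point, velocity))
    return warped, key_time

keyframes = [0.0, 1.0, 2.0]
warped, key_time = warp_to_keyframe(
    point=(1.0, 2.0, 3.0),
    velocity=(4.0, 0.0, -4.0),  # stand-in for a predicted per-sample velocity
    time=0.75,
    keyframe_times=keyframes,
)
```

After the warp, every sample can be looked up in a volume stored only at keyframes, which is what keeps the memory cost of a whole sequence close to that of a few static frames.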

Comparison of results

Comparison of static scenes

Here, the researchers compared HyperReel with existing static view synthesis methods, including NeRF, InstantNGP, and three sampling-network-based methods.

- DoNeRF dataset

The DoNeRF dataset contains six synthetic sequences with an image resolution of 800×800 pixels.

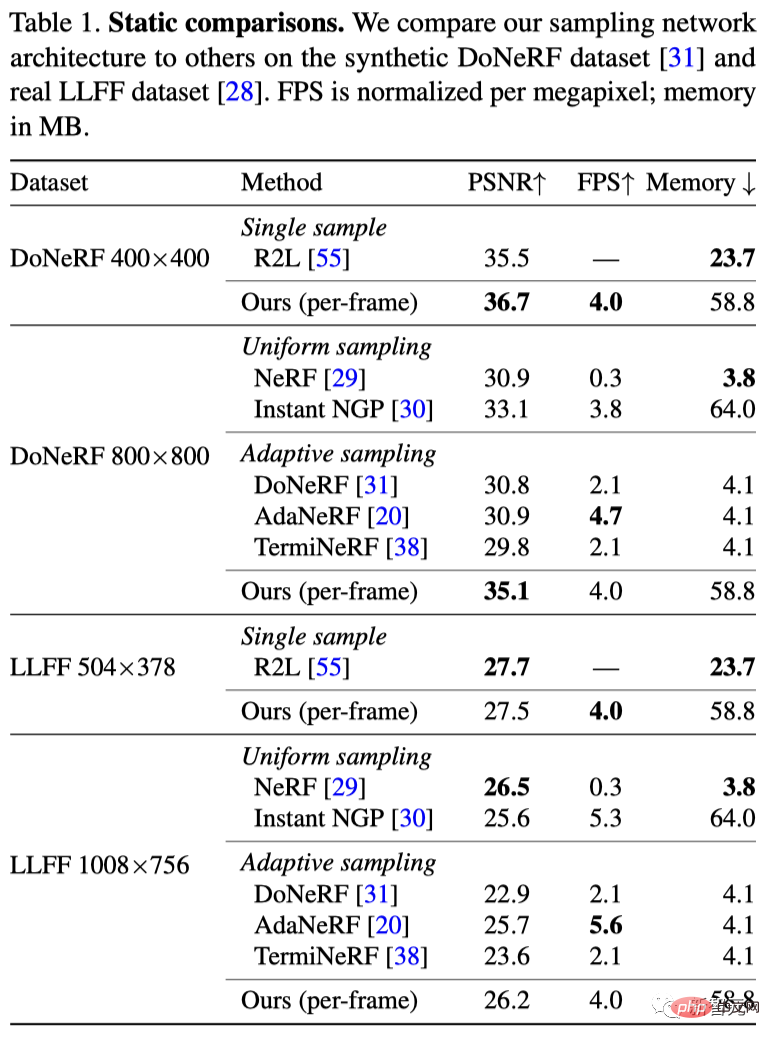

As shown in Table 1, HyperReel outperforms all baselines in quality and improves substantially on the other sampling-network schemes.

Meanwhile, HyperReel is implemented in vanilla PyTorch and can render an 800×800 pixel image at 6.5 FPS on a single RTX 3090 GPU (or 29 FPS with the Tiny model).

In addition, compared with R2L's deep MLP of 88 layers and 256 hidden units, the researchers' proposed network of 6 layers and 256 hidden units, combined with the TensoRF volumetric backbone, is faster at inference.

- LLFF dataset

The LLFF dataset contains 8 real-world sequences of 1008×756 pixel images.

As shown in Table 1, HyperReel’s method is better than DoNeRF, AdaNeRF, TermiNeRF and InstantNGP, but the quality achieved is slightly worse than NeRF.

This dataset is very challenging for explicit volumetric representations because of imperfect camera calibration and the sparsity of the input views.

Comparison of dynamic scenes

- Technicolor Dataset

The Technicolor light field dataset contains videos of various indoor environments captured by a time-synchronized 4×4 camera rig, with every image in every video stream being 2048×1088 pixels.

The researchers compared HyperReel and Neural 3D Video at full image resolution on five sequences of this dataset (Birthday, Fabien, Painter, Theater, Trains), each 50 frames long.

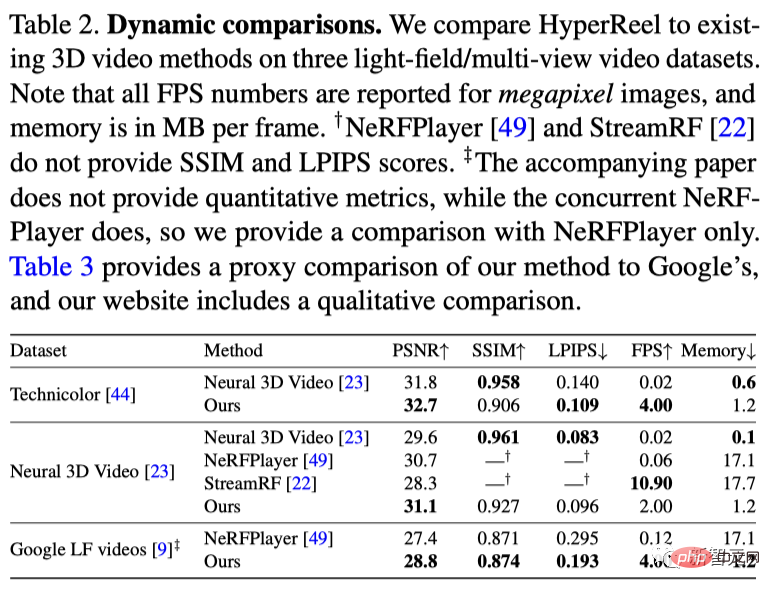

As shown in Table 2, HyperReel exceeds Neural 3D Video in quality while needing only 1.5 hours of training per sequence (instead of more than 1000 hours for Neural 3D Video), and it also renders faster.

- Neural 3D Video dataset

The Neural 3D Video dataset contains 6 indoor multi-view video sequences, captured by 20 cameras at a resolution of 2704×2028 pixels.

As shown in Table 2, HyperReel outperforms all baseline methods on this dataset, including recent works such as NeRFPlayer and StreamRF.

In particular, HyperReel outperforms NeRFPlayer quantitatively and renders about 40 times faster. It also exceeds StreamRF in quality, although StreamRF, which uses Plenoxels as its backbone (with customized CUDA kernels to speed up inference), renders faster.

In addition, HyperReel consumes much less memory per frame on average than both StreamRF and NeRFPlayer.

- Google Immersive Dataset

The Google Immersive Dataset contains light field videos of various indoor and outdoor environments.

As shown in Table 2, HyperReel is 1 dB better than NeRFPlayer in quality, and the rendering speed is also faster.

Unfortunately, HyperReel has not yet reached the rendering speed required for VR (ideally 72 FPS, in stereo).

However, since this method is implemented in vanilla PyTorch, performance can be further optimized through work such as a custom CUDA kernel.
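A quick back-of-the-envelope calculation shows how large that gap is. The sketch below compares the time budget per rendered view at the reported 18 FPS with the budget at 72 FPS in stereo; it assumes the same resolution in both cases and two views per stereo frame, which is a simplification.

```python
def view_budget_ms(fps, views_per_frame=1):
    """Milliseconds available to render one view at a given frame rate."""
    return 1000.0 / (fps * views_per_frame)

current_ms = view_budget_ms(18)                     # reported megapixel rendering speed
target_ms = view_budget_ms(72, views_per_frame=2)   # 72 FPS with one view per eye
required_speedup = current_ms / target_ms
```

Under these assumptions each view would have to render roughly eight times faster than it does today, which gives a sense of how much a custom CUDA implementation would need to deliver.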

Author introduction

The first author of the paper, Benjamin Attal, is currently pursuing a PhD at the Carnegie Mellon Robotics Institute. His research interests include virtual reality and computational imaging and displays.
