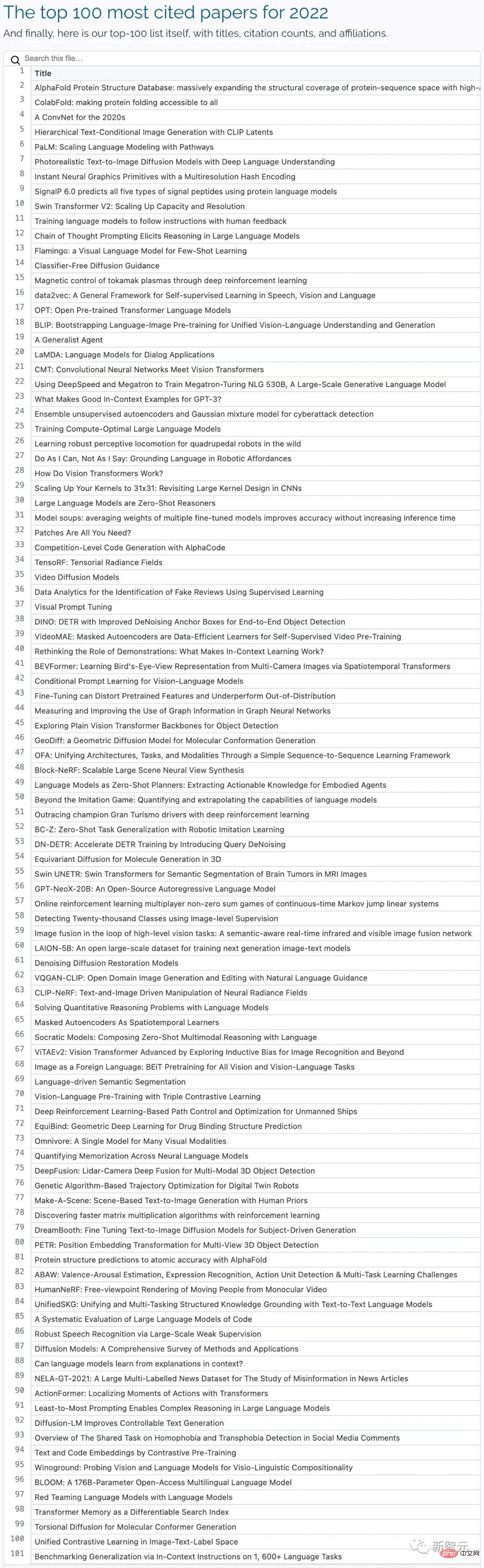

Innovation in artificial intelligence keeps accelerating, and the number of papers has exploded to the point where no human can read them all.

Among the huge number of papers published in 2022, which institutions had the most influence? Which papers are most worth reading?

Recently, Zeta Alpha used the classic citation count as its evaluation metric to compile the 100 most cited papers of 2022, and analyzed the number of highly cited papers published by different countries and institutions over the past three years.

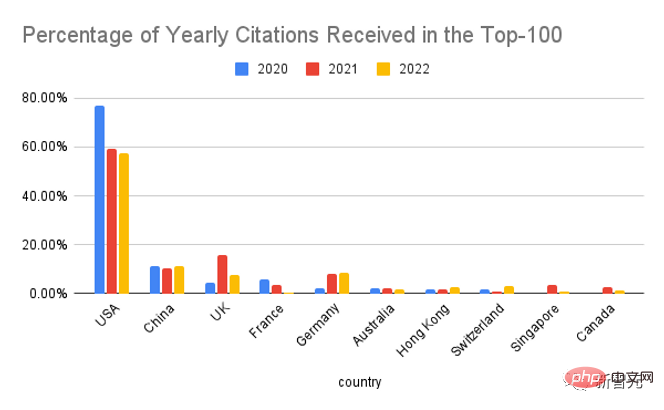

Broken down by country, the United States still holds the leading position, but its share of the Top-100 papers has dropped significantly compared with 2020.

China ranks second, with figures slightly higher than last year. The United Kingdom is third, with DeepMind's output accounting for 69% of the UK's total last year, up from 60% in previous years. The influence of Singapore and Australia in the AI field also exceeded the analysts' expectations.

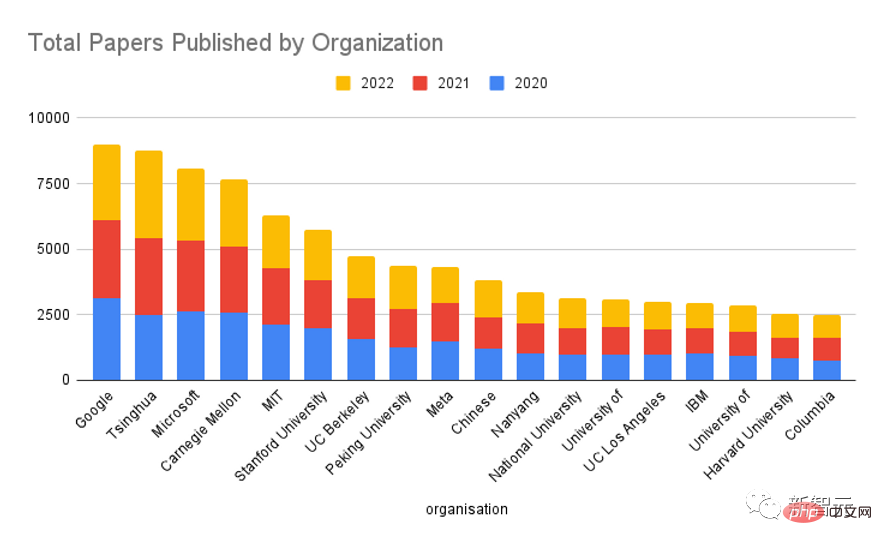

Broken down by organization, Google has consistently been the strongest player in AI, followed by Meta, Microsoft, the University of California, Berkeley, DeepMind, and Stanford University. The top-ranked institution in China is Tsinghua University.

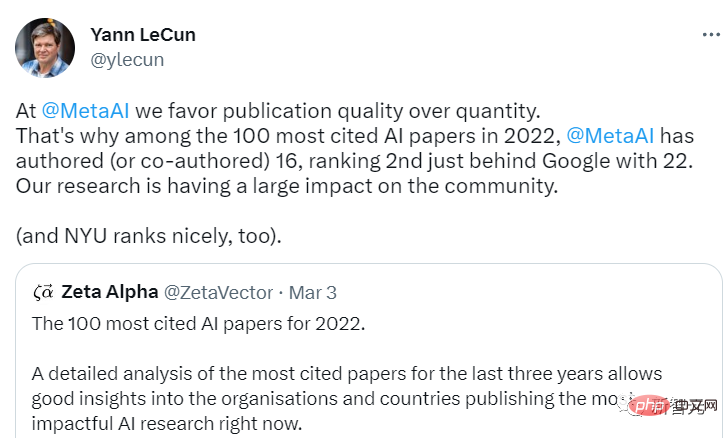

As the leader of Meta AI, Yann LeCun proudly pointed to Meta's influence in the field and said that Meta AI pays more attention to the quality of its publications than to their quantity.

As for why Google and DeepMind, which both belong to Alphabet, are counted separately in the list, LeCun said that DeepMind has always insisted that it operates independently of Google, which is strange, and that Google employees do not have access to DeepMind's code base.

Although most of today's artificial intelligence research is led by industry and any single academic institution has little impact, the long-tail effect means that academia as a whole is still on par with industry. When the data is aggregated by organization type, the impact of the two is roughly equal.

Looking back over the past three years and counting each institution's total research output, Google is still in the lead, but its margin over other institutions is much smaller. It is worth mentioning that Tsinghua University ranks second, right after Google.

OpenAI and DeepMind are not even in the top 20. These institutions publish relatively few articles, but the impact of each one is huge.

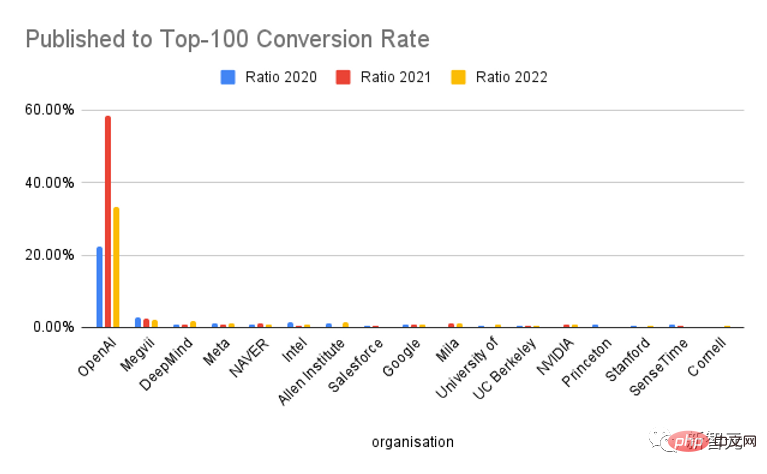

Measured by the share of an institution's publications that enter the Top-100, OpenAI stands alone and far surpasses other institutions in conversion rate: roughly one in every two of its papers became a Top-100 paper of the year.
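The conversion rate discussed here is simply the number of an institution's Top-100 papers divided by its total number of publications. The following is a minimal sketch of that arithmetic; the function name and the counts are hypothetical and are not Zeta Alpha's data.

```python
def conversion_rate(top100_papers: int, total_papers: int) -> float:
    """Share of an institution's publications that reached the Top-100."""
    if total_papers <= 0:
        raise ValueError("total_papers must be positive")
    return top100_papers / total_papers


# Hypothetical counts, for illustration only:
# institution -> (papers in the Top-100, total papers published)
publications = {
    "Lab A": (9, 18),
    "Lab B": (20, 2000),
}

# Rank institutions by conversion rate, highest first.
ranked = sorted(
    publications,
    key=lambda name: conversion_rate(*publications[name]),
    reverse=True,
)
```

With these made-up numbers, a small lab with few but highly cited papers ranks ahead of a much larger one, which is the effect the ranking by conversion rate is meant to surface.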

Of course, judging from the popularity of ChatGPT, OpenAI is also very good at marketing, which has boosted its citations to some extent. Even so, the quality of its research is undeniably very high.

First, the Zeta Alpha platform collects the most cited papers of each year, then the first publication date (usually that of the arXiv preprint) is checked manually and each paper is assigned to the corresponding year.

This list is supplemented by mining Semantic Scholar for highly cited artificial intelligence papers. Semantic Scholar has broader coverage and can be sorted by citation count, and the additional papers come mainly from influential closed-source publishers (such as Nature, Elsevier, and Springer journals).

The number of citations of each paper on Google Scholar is then used as the representative metric, and the papers are sorted by this number to obtain the top 100 papers of the year.

For these papers, GPT-3 is used to extract the authors, their affiliations, and their countries, and the results are checked manually (if the country is not stated in the publication, the country of the organization's headquarters is used).

Papers by authors with multiple institutions are counted once for each affiliation.
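The ranking and counting steps described above can be sketched in a few lines. This is an illustrative sketch only: the record layout, the function names, and the sample data are assumptions, and treating repeated affiliations within one paper as a single count is one possible reading of the rule, not a confirmed detail of Zeta Alpha's pipeline.

```python
from collections import Counter


def top_n_by_citations(papers, n=100):
    """Return the n most-cited papers, highest citation count first."""
    return sorted(papers, key=lambda p: p["citations"], reverse=True)[:n]


def count_by_affiliation(papers):
    """Count each paper once for every distinct affiliation it lists."""
    counts = Counter()
    for paper in papers:
        # set() is an assumption: several authors from the same
        # organization contribute only one count for that paper.
        counts.update(set(paper["affiliations"]))
    return counts


# Hypothetical records, for illustration only.
papers = [
    {"title": "Paper A", "citations": 900, "affiliations": ["Org X", "Org Y"]},
    {"title": "Paper B", "citations": 300, "affiliations": ["Org X"]},
    {"title": "Paper C", "citations": 50, "affiliations": ["Org Z", "Org Z"]},
]

top = top_n_by_citations(papers, n=2)
counts = count_by_affiliation(top)
```

Here "Paper A" contributes one count to each of its two organizations, so a multi-institution paper raises the totals of every affiliation it lists.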

1. AlphaFold Protein Structure Database: massively expanding the structural coverage of protein-sequence space with high-accuracy models

Paper link: https://academic.oup.com/nar/article/50/D1/D439/6430488

Publishing institution: European Molecular Biology Laboratory, DeepMind

AlphaFold DB: https://alphafold.ebi.ac.uk

Citations: 1331

The AlphaFold Protein Structure Database (AlphaFold DB) is a publicly accessible, extensive database of highly accurate protein structure predictions.

Powered by DeepMind's AlphaFold v2.0, the database enables an unprecedented expansion of the structural coverage of known protein-sequence space.

AlphaFold DB provides programmatic access to, and interactive visualization of, predicted atomic coordinates, per-residue and pairwise model-confidence estimates, and predicted aligned errors.

The initial release of AlphaFold DB contains more than 360,000 predicted structures covering the proteomes of 21 model organisms, and it will be expanded to cover most of the representative sequences in the UniRef90 data set (more than 100 million).

2. ColabFold: making protein folding accessible to all

Paper link: https://www.nature.com/articles/s41592-022-01488-1

Code link: https://github.com/sokrypton/colabfold

Environment link: https://colabfold.mmseqs.com

Citations: 1138

ColabFold accelerates the prediction of protein structures and complexes by combining the fast homology search of MMseqs2 with AlphaFold2 or RoseTTAFold. With a 40-60 times faster search and optimized model utilization, ColabFold can predict nearly 1,000 structures per day on a server with a single graphics processing unit. ColabFold is a free and accessible platform for protein folding based on Google Colaboratory, and it is also available as open source software.

3. A ConvNet for the 2020s

Paper link: https://arxiv.org/pdf/2201.03545.pdf

Citations: 835

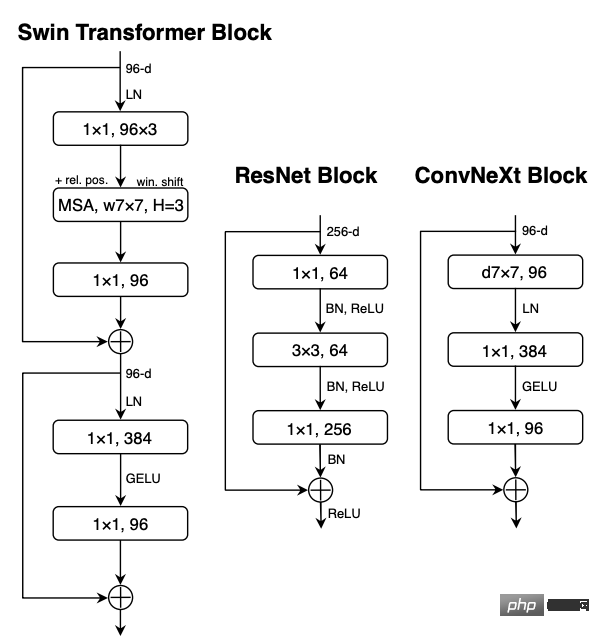

The "Roaring 20s" of visual recognition began with the introduction of Vision Transformers (ViTs), which quickly superseded ConvNets as the state-of-the-art image classification model. A vanilla ViT, on the other hand, faces difficulties when applied to general computer vision tasks such as object detection and semantic segmentation. Hierarchical Transformers (such as Swin Transformers) reintroduced several ConvNet priors, making Transformers practically viable as a generic vision backbone and demonstrating remarkable performance on a wide variety of vision tasks. However, the effectiveness of such hybrid approaches is still largely credited to the intrinsic superiority of Transformers rather than to the inherent inductive biases of convolutions.

In this work, the researchers revisit the design space and test the limits of what a pure ConvNet can achieve.

They gradually "modernized" a standard ResNet toward the design of a Vision Transformer and, in the process, discovered several key components that contribute to the performance difference. The outcome of this exploration is a family of pure ConvNet models called ConvNeXt.

ConvNeXt is constructed entirely from standard ConvNet modules and competes with Transformers in accuracy and scalability, achieving 87.8% ImageNet top-1 accuracy and outperforming Swin Transformers on COCO detection and ADE20K segmentation, while maintaining the simplicity and efficiency of standard ConvNets.

4. Hierarchical Text-Conditional Image Generation with CLIP Latents

Paper link: https://arxiv.org/abs/2204.06125

Citations: 718

Contrastive models like CLIP have been shown to learn robust image representations that capture semantics and style.

To leverage these representations to generate images, the researchers propose a two-stage model: a prior that generates CLIP image embeddings given a text caption, and a decoder that generates images conditioned on image embeddings.

Experiments show that explicitly generating image representations improves image diversity with minimal loss in photorealism and caption similarity. Decoders conditioned on image representations can also produce variations of an image that preserve its semantics and style while varying the non-essential details absent from the image representation.

In addition, the joint embedding space of CLIP enables language-guided image manipulations in a zero-shot fashion.

The researchers use diffusion models for the decoder and experiment with both autoregressive and diffusion models for the prior, finding that the latter are more computationally efficient and produce higher-quality samples.

5. PaLM: Scaling Language Modeling with Pathways

Paper link: https://arxiv.org/pdf/2204.02311.pdf

Citations: 426

Large language models have been shown to achieve higher performance on a variety of natural language tasks using few-shot learning, greatly reducing the number of task-specific training instances required to adapt the model to a specific application.

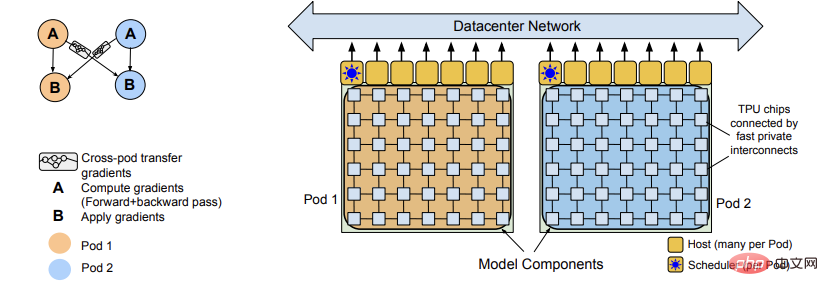

To further understand the impact of scale on few-shot learning, the researchers trained a 540-billion-parameter, densely activated Transformer language model called the Pathways Language Model (PaLM).

PaLM was trained on 6144 TPU v4 chips using Pathways (a new ML system that enables efficient training across multiple TPU Pods). It achieved state-of-the-art few-shot learning results on hundreds of language understanding and generation benchmarks, demonstrating the continued benefits of scaling.

On some of these tasks, PaLM 540B achieves breakthrough performance, outperforming the fine-tuned state of the art on a suite of multi-step reasoning tasks and outperforming average human performance on the recently released BIG-bench benchmark.

A significant number of BIG-bench tasks show discontinuous improvements from model scale, meaning that performance increased steeply when scaling up to the largest model.

PaLM also has strong capabilities in multilingual tasks and source code generation, as demonstrated on a wide range of benchmarks.

In addition, the researchers provide a comprehensive analysis of bias and toxicity and study the extent of training data memorization with respect to model scale. Finally, they discuss the ethical considerations related to large language models along with potential mitigation strategies.

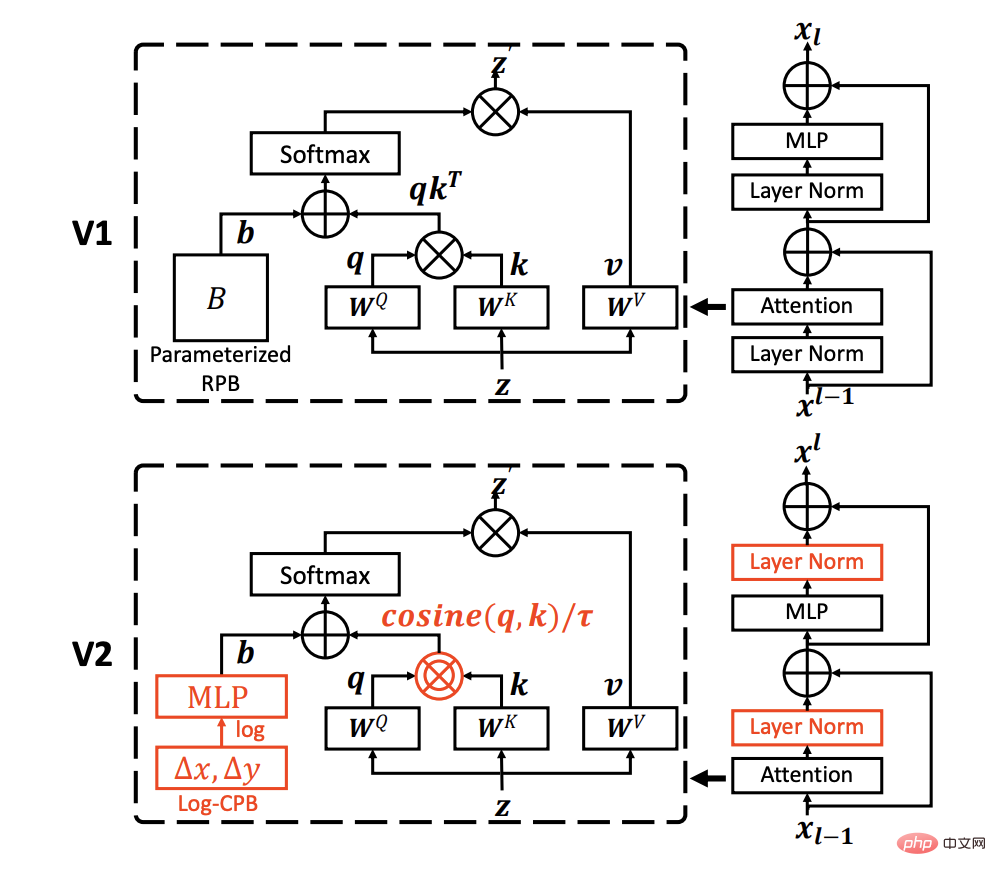

1. Swin Transformer V2: Scaling Up Capacity and Resolution

Paper link: https://arxiv.org/pdf/2111.09883.pdf

Code link: https://github.com/microsoft/Swin-Transformer

Citations: 266

Large-scale NLP models have been shown to significantly improve performance on language tasks with no signs of saturation, while also demonstrating amazing, human-like few-shot capabilities. This paper aims to explore large-scale models in computer vision and tackles three major issues in training and applying large vision models: training instability, resolution gaps between pre-training and fine-tuning, and the hunger for labeled data.

2. Ensemble unsupervised autoencoders and Gaussian mixture model for cyberattack detection

Paper link: https://www.sciencedirect.com/science/article/pii/S0306457321003162

Citations: 145

Previous research has applied unsupervised machine learning with dimensionality reduction to network detection, but it is limited in robust anomaly detection on high-dimensional and sparse data. Most methods assume that the parameters in each domain are homogeneous and follow a specific Gaussian distribution, overlooking robustness to data skewness.

This paper proposes an ensemble of unsupervised autoencoders connected to a Gaussian mixture model (GMM) that adapts to multiple domains regardless of the skewness of each domain. In the latent space of the ensemble autoencoder, an attention-based latent representation and the reconstructed feature with minimum error are used, and the expectation-maximization (EM) algorithm estimates the sample density in the GMM. When the estimated sample density exceeds the learning threshold obtained during the training phase, the sample is identified as an outlier related to an anomaly. Finally, the ensemble autoencoder and the GMM are jointly optimized, which transforms the optimization of the objective function into a Lagrangian dual problem. Experiments on three public data sets show that the proposed model is significantly competitive with the selected anomaly detection baselines.

The co-authors of the paper are Professor An Peng of Ningbo Institute of Technology and Zhiyuan Wang of Tongji University. Professor An Peng is currently the deputy dean of the School of Electronics and Information Engineering at Ningbo Institute of Technology. He studied in the Department of Engineering Physics at Tsinghua University from 2000 to 2009, receiving a bachelor's degree and a doctorate in engineering. He has been a visiting scholar at CERN, the National University of Padua in Italy, and Heidelberg University in Germany. He is a member of the Cognitive Computing and Systems Professional Committee of the Chinese Automation Society, a member of the Cognitive Systems and Information Processing Professional Committee of the Chinese Artificial Intelligence Society, and a member of the Youth Working Committee of the Chinese Command and Control Society. He has chaired and participated in many scientific research projects, such as the National Key Basic Research and Development Program (973 Program), the National Natural Science Foundation of China, and the National Spark Program.

3. Scaling Up Your Kernels to 31x31: Revisiting Large Kernel Design in CNNs

Paper link: https://arxiv.org/abs/2203.06717

Code link: https://github.com/megvii-research/RepLKNet

Citations: 127

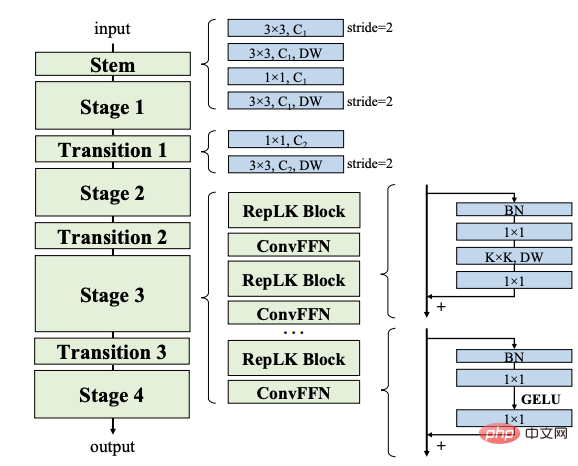

This paper revisits the design of large kernels in modern convolutional neural networks (CNNs).

Inspired by recent advances in Vision Transformers (ViTs), it demonstrates that using a few large convolutional kernels instead of a stack of small kernels may be a more powerful paradigm.

The researchers propose five guidelines, such as applying re-parameterized large depth-wise convolutions, for designing efficient, high-performance large-kernel CNNs.
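The re-parameterization idea mentioned in these guidelines can be illustrated with a small sketch. It assumes, for illustration, that a small-kernel branch runs in parallel with the large-kernel branch during training; because convolution is linear, the two branches can afterwards be merged into one kernel by zero-padding the small kernel and adding it to the large one. The helper names are hypothetical, batch-norm fusion is omitted, and plain Python loops on a single channel stand in for a real framework.

```python
def pad_kernel(kernel, size):
    """Zero-pad a square, odd-sized kernel to size x size, keeping it centered."""
    k = len(kernel)
    off = (size - k) // 2
    out = [[0] * size for _ in range(size)]
    for i in range(k):
        for j in range(k):
            out[i + off][j + off] = kernel[i][j]
    return out


def merge_kernels(large, small):
    """Fold the small kernel into the large one by element-wise addition."""
    padded = pad_kernel(small, len(large))
    return [[a + b for a, b in zip(rl, rp)] for rl, rp in zip(large, padded)]


def conv2d_same(image, kernel):
    """Single-channel cross-correlation with zero padding (same output size)."""
    h, w, k = len(image), len(image[0]), len(kernel)
    r = k // 2
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            acc = 0
            for i in range(k):
                for j in range(k):
                    yy, xx = y + i - r, x + j - r
                    if 0 <= yy < h and 0 <= xx < w:
                        acc += image[yy][xx] * kernel[i][j]
            out[y][x] = acc
    return out


# Toy integer data so that the comparison below is exact.
image = [[(3 * y + x) % 5 for x in range(6)] for y in range(6)]
large = [[(i + j) % 3 for j in range(5)] for i in range(5)]   # 5x5 stand-in for 31x31
small = [[1, 0, -1], [2, 0, -2], [1, 0, -1]]                  # parallel 3x3 branch

# Training-time view: two branches whose outputs are summed.
two_branch = [
    [a + b for a, b in zip(ra, rb)]
    for ra, rb in zip(conv2d_same(image, large), conv2d_same(image, small))
]
# Inference-time view: one merged large kernel.
merged_out = conv2d_same(image, merge_kernels(large, small))
```

Both views produce the same output, so the extra branch adds no cost once the kernels are merged.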

Based on these guidelines, the researchers propose RepLKNet, a pure CNN architecture with kernels as large as 31x31, in contrast to the commonly used 3x3. RepLKNet greatly narrows the performance gap between CNNs and ViTs, for example achieving results comparable or superior to Swin Transformer on ImageNet and several typical downstream tasks, with lower latency.

RepLKNet also scales well to big data and large models, achieving 87.8% top-1 accuracy on ImageNet and 56.0% mIoU on ADE20K, which is very competitive among state-of-the-art models of similar size.

The study further shows that, compared with small-kernel CNNs, large-kernel CNNs have much larger effective receptive fields and a higher shape bias rather than texture bias.

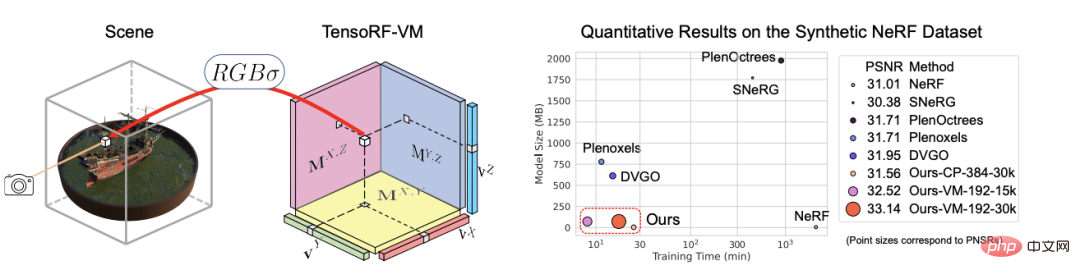

4. TensoRF: Tensorial Radiance Fields

Paper link: https://arxiv.org/abs/2203.09517

Citations: 110

The paper proposes TensoRF, a new approach to modeling and reconstructing radiance fields.

Unlike NeRF, which relies purely on MLPs, the researchers model the radiance field of a scene as a 4D tensor that represents a 3D voxel grid with per-voxel multi-channel features. The central idea is to factorize the 4D scene tensor into multiple compact low-rank tensor components.

They show that applying the traditional CP decomposition in this framework, which factorizes the tensor into rank-one components with compact vectors, already achieves better performance than vanilla NeRF.
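To make the CP decomposition concrete, the sketch below stores a 3D grid as a sum of rank-one components, each being the outer product of three vectors, and compares the number of stored values with a dense grid. This is a simplified illustration rather than the paper's implementation: it drops the feature-channel mode of the 4D tensor, and the function names and sizes are assumptions.

```python
def cp_value(factors, i, j, k):
    """Reconstruct entry (i, j, k) from rank-one components (vx, vy, vz)."""
    return sum(vx[i] * vy[j] * vz[k] for vx, vy, vz in factors)


def cp_param_count(rank, shape):
    """Values stored by a CP factorization: one vector per axis per component."""
    return rank * sum(shape)


def dense_param_count(shape):
    """Values stored by a dense grid of the same shape."""
    total = 1
    for size in shape:
        total *= size
    return total


# A rank-2 factorization of a 2x2x2 grid (toy numbers).
factors = [
    ([1, 2], [1, 0], [3, 1]),
    ([0, 1], [2, 2], [1, 1]),
]
value = cp_value(factors, 1, 0, 0)  # 2*1*3 + 1*2*1

# Storage for a hypothetical 128^3 grid with 16 components.
compact = cp_param_count(16, (128, 128, 128))
dense = dense_param_count((128, 128, 128))
```

The factorized form grows with the sum of the grid dimensions instead of their product, which is where the compactness of the representation comes from.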

To further improve performance, the paper also introduces a new vector-matrix (VM) decomposition that relaxes the low-rank constraints on two modes of the tensor and factorizes the tensor into compact vector and matrix factors.

In addition to superior rendering quality, the models with CP and VM decompositions have a significantly lower memory footprint than previous and concurrent works that directly optimize per-voxel features.

Experiments have proven that compared with NeRF, TensoRF with CP decomposition achieves fast reconstruction (

Additionally, TensoRF with VM decomposition further improves rendering quality and exceeds previous state-of-the-art methods, while reducing reconstruction time (

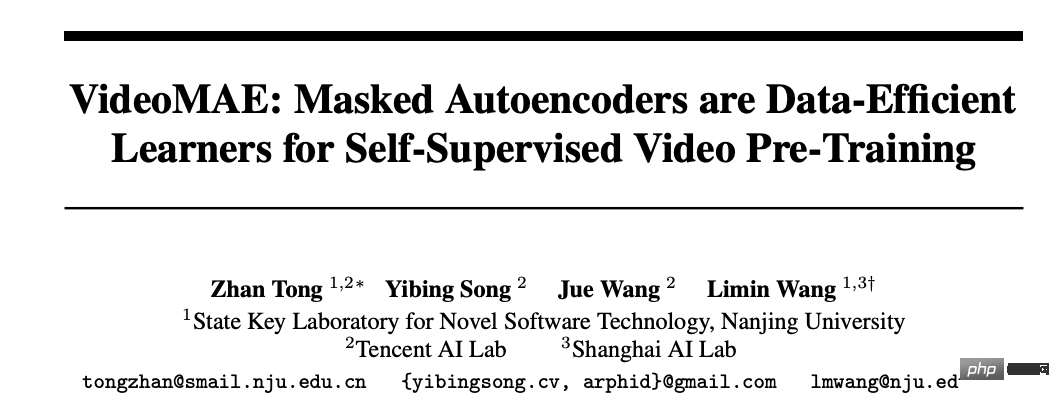

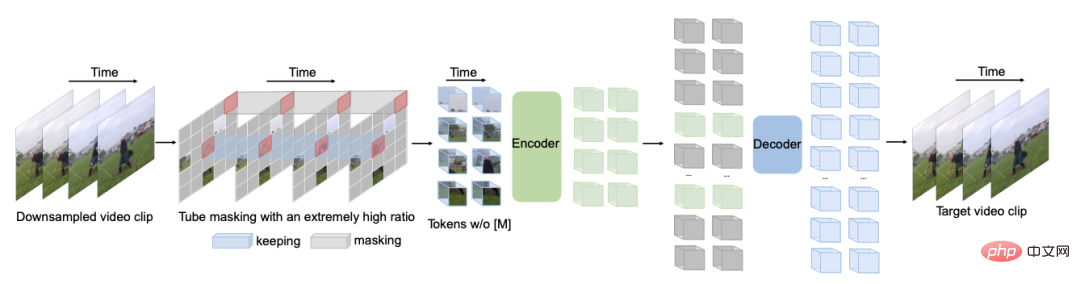

5. VideoMAE: Masked Autoencoders are Data-Efficient Learners for Self-Supervised Video Pre-Training

Paper link: https://arxiv.org/abs/2203.12602

Code link: https://github.com/MCG-NJU/VideoMAE

Citations: 100

Achieving strong performance on relatively small datasets usually requires pre-training video Transformers on extra large-scale datasets. This paper shows that the Video Masked Autoencoder (VideoMAE) is a data-efficient learner for self-supervised video pre-training (SSVP). Inspired by the recent ImageMAE, the researchers propose customized video tube masking with an extremely high masking ratio. This simple design makes video reconstruction a more challenging self-supervised task, thus encouraging the extraction of more effective video representations during pre-training.
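Tube masking can be sketched as sampling one spatial mask and reusing it for every frame, so that a masked patch stays hidden along the whole temporal axis. The snippet below is a minimal sketch under that reading; the function name, the patch count, and the frame count are illustrative assumptions rather than values taken from the VideoMAE code base.

```python
import random


def tube_mask(num_frames, num_patches, mask_ratio=0.9, seed=None):
    """Sample one spatial mask and repeat it for every frame (a "tube").

    Returns a list of per-frame lists; True marks a masked patch.
    """
    rng = random.Random(seed)
    num_masked = int(round(mask_ratio * num_patches))
    masked = set(rng.sample(range(num_patches), num_masked))
    frame_mask = [p in masked for p in range(num_patches)]
    # Every frame gets its own copy of the same spatial mask.
    return [list(frame_mask) for _ in range(num_frames)]


# Hypothetical sizes: 8 frames, 14 x 14 = 196 patches per frame.
mask = tube_mask(num_frames=8, num_patches=196, mask_ratio=0.9, seed=0)
visible_per_frame = mask[0].count(False)
```

Because neighboring frames are highly redundant, sharing the mask across time prevents the model from simply copying a hidden patch from an adjacent frame.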

The paper reports three important findings on SSVP:

(1) An extremely high masking ratio (i.e., 90% to 95%) still yields favorable performance for VideoMAE. Temporally redundant video content allows a higher masking ratio than images.

(2) VideoMAE achieves very high performance on very small datasets (i.e., around 3k-4k videos) without using any additional data.

(3) VideoMAE shows that for SSVP, data quality is more important than data quantity. Domain shift between the pre-training and target datasets is an important factor.

Notably, VideoMAE with a vanilla ViT backbone achieves 87.4% on Kinetics-400, 75.4% on Something-Something V2, 91.3% on UCF101, and 62.6% on HMDB51 without using any additional data.

Complete list of top 100 papers
