Tesla faces serious doubts: Autopilot automatically disengaged 1 second before the crash

This article is reprinted with the authorization of AI New Media Qubit (public account ID: QbitAI). Please contact the source for reprinting.

An investigation into Tesla has caused an uproar on social media.

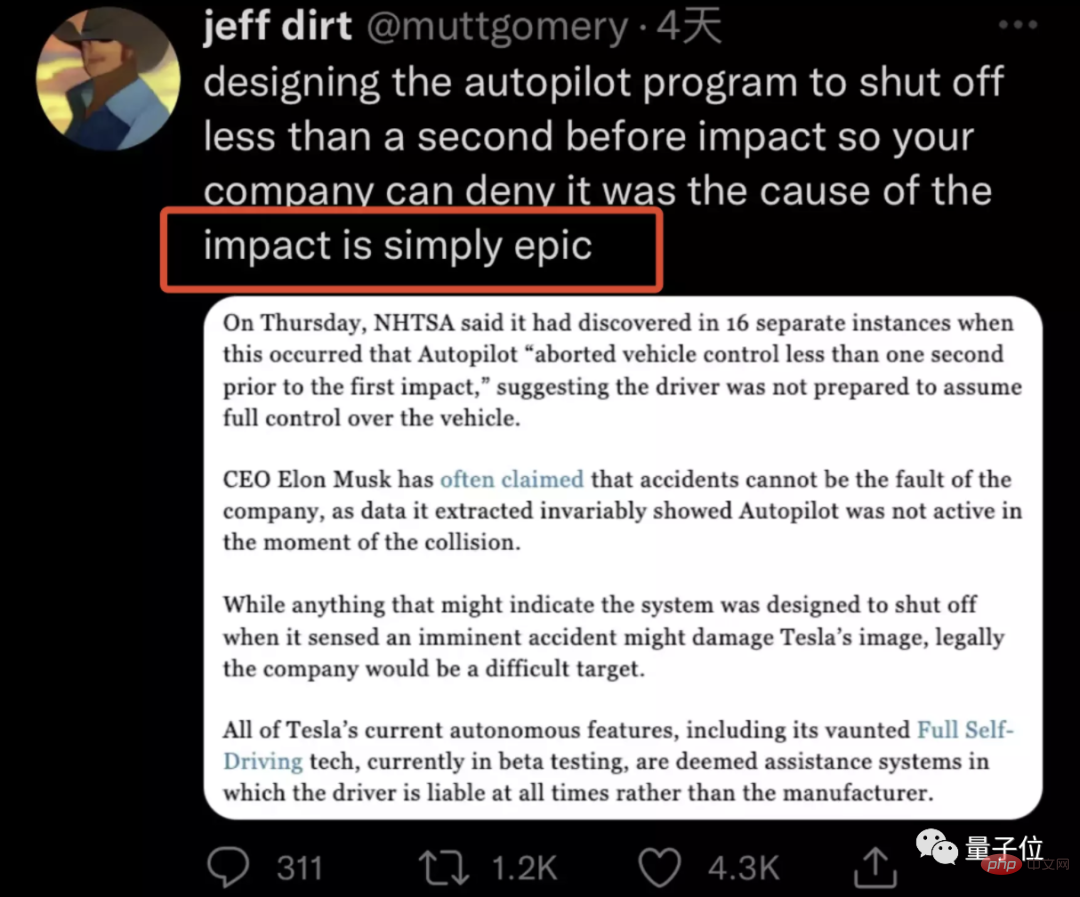

Some netizens even described the findings of this investigation as "epic":

The investigation was conducted by the US National Highway Traffic Safety Administration (NHTSA), and one sentence in the released documents stands out:

Autopilot aborted vehicle control less than one second prior to the first impact.

In other words, Autopilot gave up control of the vehicle less than 1 second before the first impact.

NHTSA's finding landed on the public like a bombshell.

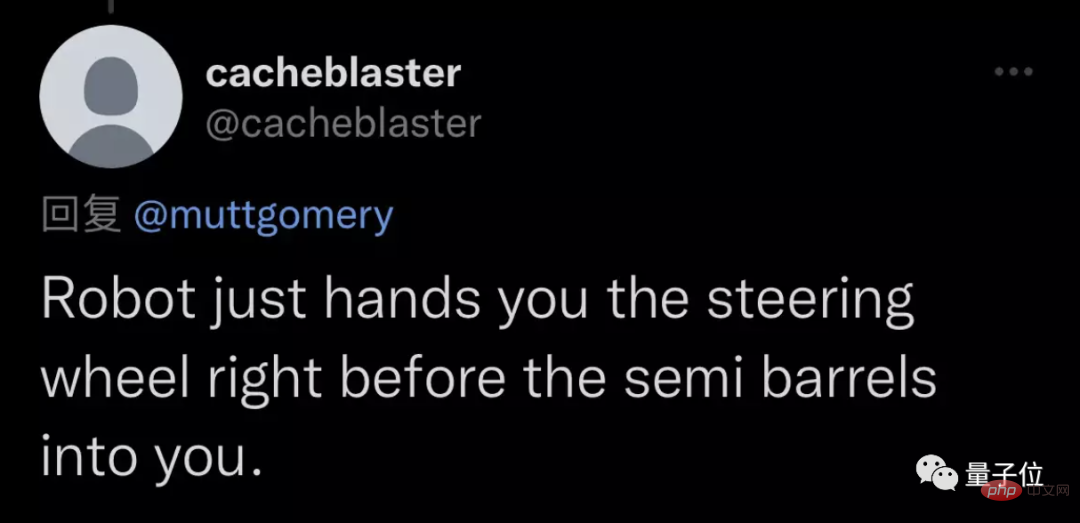

After all, it amounts to "the robot handing you the steering wheel just before the car crashes"...

Many netizens then asked:

With this design, can Tesla deny that the accident was caused by Autopilot?

Ah, this...

Less than a second before the accident, the steering wheel was handed over to you

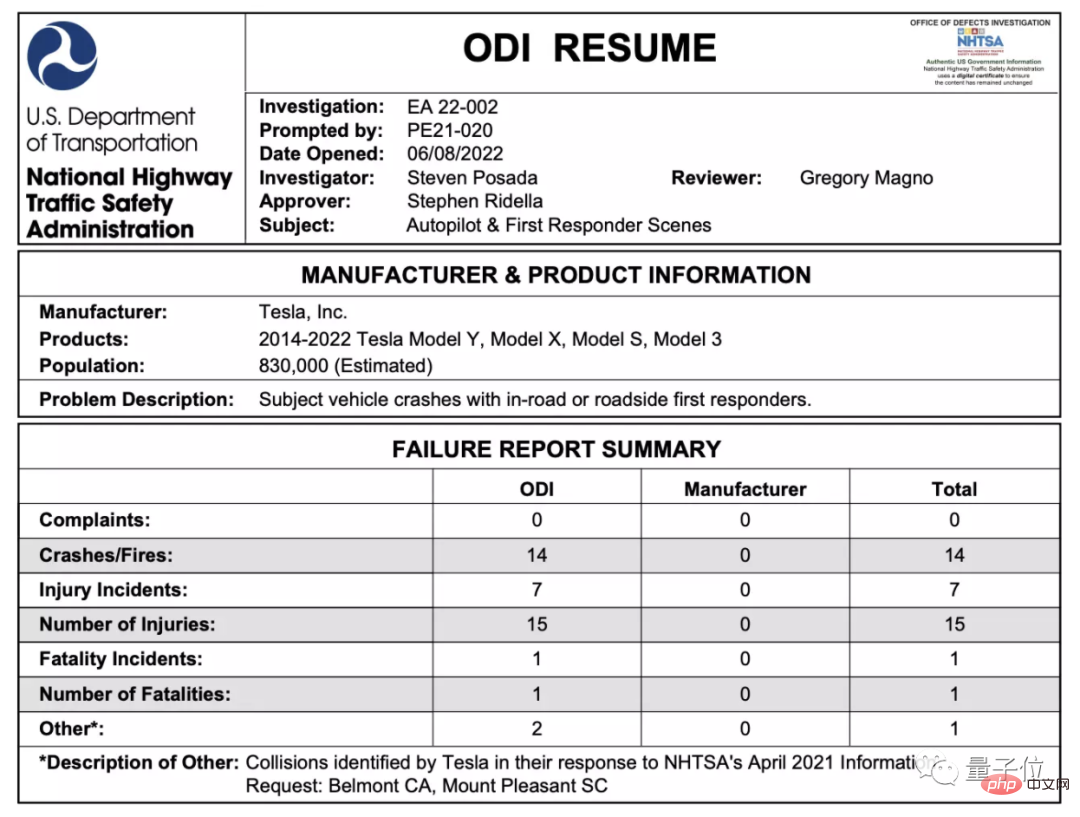

NHTSA’s investigation into Tesla began in August last year.

The investigation was prompted by several crashes in which Teslas with Autopilot engaged drove into an existing accident scene and struck emergency vehicles, police cars, or crashed vehicles that were clearly stopped on the road.

What the investigators found was shocking.

They found that in 16 such crashes, Autopilot in most of the vehicles had issued a forward collision warning (FCW) before the collision, and automatic emergency braking (AEB) had actively intervened in about half of them.

Yet none of these interventions prevented the crashes (one person died).

The scary part is that, on average across these 16 crashes, Autopilot gave up control of the car less than one second before the actual impact.

That did not give the human drivers enough time to take over. Video of the crashes shows that the accident scene ahead would have been visible to the drivers about 8 seconds before the collision. But forensic data from 11 of the crashes showed that no driver took evasive action in the 2 to 5 seconds before impact, even though all of them kept their hands on the steering wheel as Autopilot requires.

Perhaps most drivers still "trusted" Autopilot at that moment: the drivers of 9 of the vehicles did not react to the visual or audible warnings the system issued in the last minute before the collision.

Four of the vehicles, however, issued no warning at all.

Now, to better understand the safety of Autopilot and related systems (in particular, to what extent they may undermine human driver supervision and increase risk), NHTSA has decided to upgrade this preliminary evaluation to an engineering analysis (EA).

The scope has been expanded to cover all four current Tesla models (Model S, Model X, Model 3 and Model Y), about 830,000 vehicles in total.

Once the findings were made public, people who had been in such crashes began coming forward to share their own experiences.

One user said that back in 2016 her Model S also hit a car parked at the side of the road while changing lanes. Tesla told her it was her fault: she had hit the brakes before the crash, which disengaged Autopilot.

She said the system had sounded an alert at that point: "That means whatever I did was wrong. If I hadn't braked, it would have been because I didn't pay attention to the alert."

Musk did not respond to the matter directly. However, about 6 hours after netizens broke the news, he happened to tweet an NHTSA report on Tesla dating back to 2018.

The report states that in earlier NHTSA tests, Tesla's Model S (2014) and Model X (2015) had the lowest probability of injury in a crash of all cars tested.

Now, the report found, the newer Model 3 (2018) had overtaken the Model S and Model X for first place.

△ Tesla also seized the opportunity for a round of publicity

This was also a response to the Tesla crash in Shanghai a few days earlier, in which the car was wrecked but no one was injured.

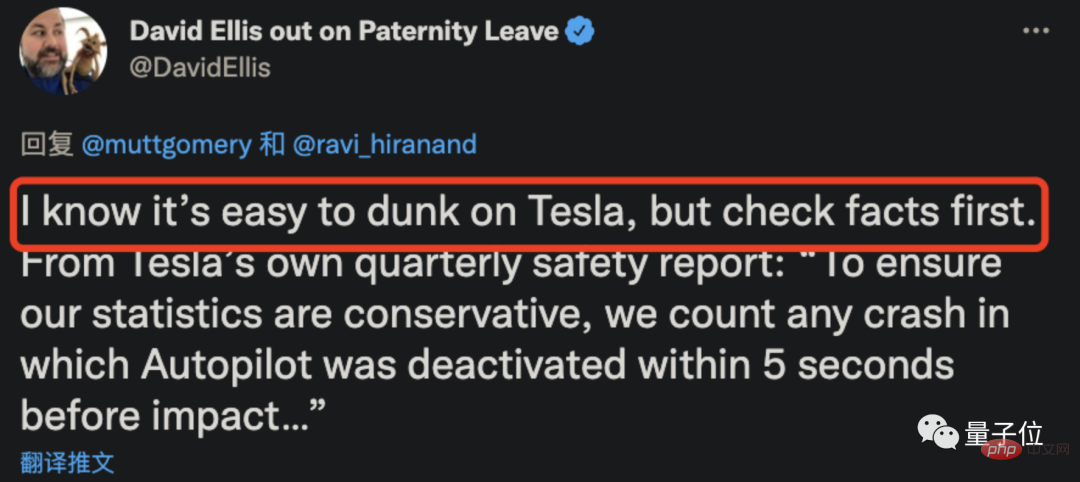

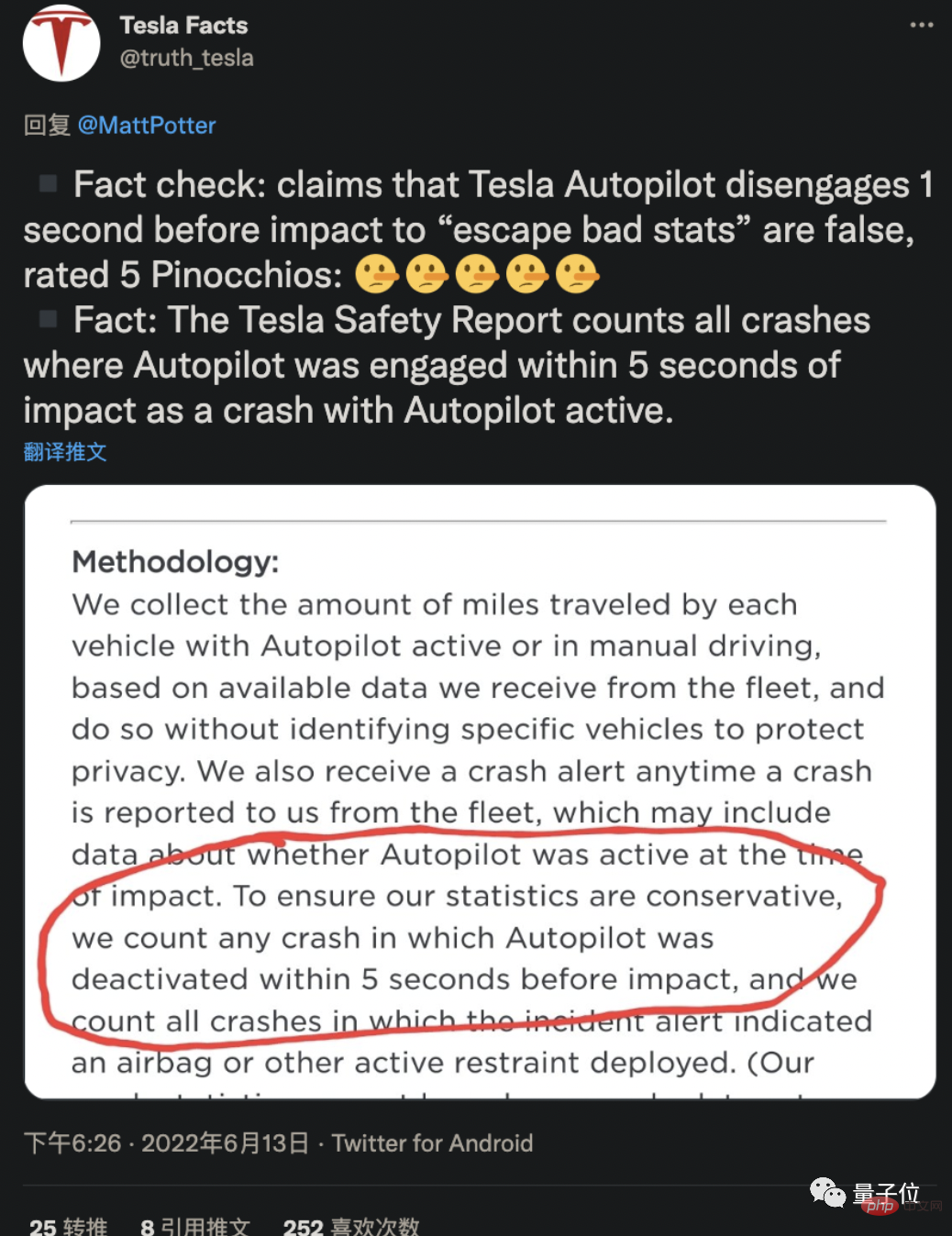

Interestingly, just as everyone was denouncing Autopilot as unreliable, someone stepped forward to defend Tesla against accusations of a cover-up.

In fact, Autopilot disengaging 1 second before a crash does not shift the blame onto the human. When Tesla compiles its crash statistics, any accident in which Autopilot was active within 5 seconds of the collision is attributed to Autopilot.

Tesla later officially confirmed this rule.

Still, even if humans no longer take the blame, the dubious move of cutting off Autopilot one second before a crash does not change the fact that the driver has no time to take over the steering wheel.
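To make the 5-second counting rule concrete, here is a minimal Python sketch. It assumes each crash record stores how many seconds before impact Autopilot was last engaged (0 if it was still engaged at impact, None if it was never used), and it treats the 5-second boundary as inclusive. The function and field names are hypothetical illustrations, not Tesla's actual code or data format.

```python
from typing import Optional

# Attribution window from the rule described above.
# Treating the boundary as inclusive is an assumption of this sketch.
ATTRIBUTION_WINDOW_S = 5.0


def counts_as_autopilot_crash(
    last_active_s: Optional[float],
    window_s: float = ATTRIBUTION_WINDOW_S,
) -> bool:
    """Return True if a crash would be attributed to Autopilot.

    last_active_s (hypothetical field): seconds before impact at which
    Autopilot was last engaged; 0.0 means still engaged at impact,
    None means Autopilot was not used at all.
    """
    if last_active_s is None:
        return False
    if last_active_s < 0:
        raise ValueError("last_active_s must be non-negative")
    return last_active_s <= window_s


if __name__ == "__main__":
    # Disengaged 0.9 s before impact, as in the NHTSA findings: still counted.
    print(counts_as_autopilot_crash(0.9))
    # Disengaged 8 s before impact: outside the window, not counted.
    print(counts_as_autopilot_crash(8.0))
    # Autopilot never engaged: not counted.
    print(counts_as_autopilot_crash(None))
```

Under such a rule, a disengagement less than a second before impact cannot move a crash out of the Autopilot column; it only shortens the time the driver has to react.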

Is Autopilot reliable?

Having looked at NHTSA's findings, let's take a closer look at Autopilot itself, the focus of this latest public debate.

Autopilot is Tesla's advanced driver assistance system (ADAS). Under the driving automation levels defined by SAE International (formerly the Society of Automotive Engineers), it is rated Level 2 (L2).

(SAE divides autonomous driving into six levels, from L0 to L5)

According to Tesla's official description, Autopilot's current features include assisted steering, acceleration and braking within a lane, autonomous parking, and the ability to "summon" the car from a garage or parking space.

So does this mean the driver can go fully "hands-off"?

Not at all.

Tesla’s current Autopilot can only play an “auxiliary” role, not fully autonomous driving.

Moreover, the driver is required to supervise Autopilot actively and attentively.

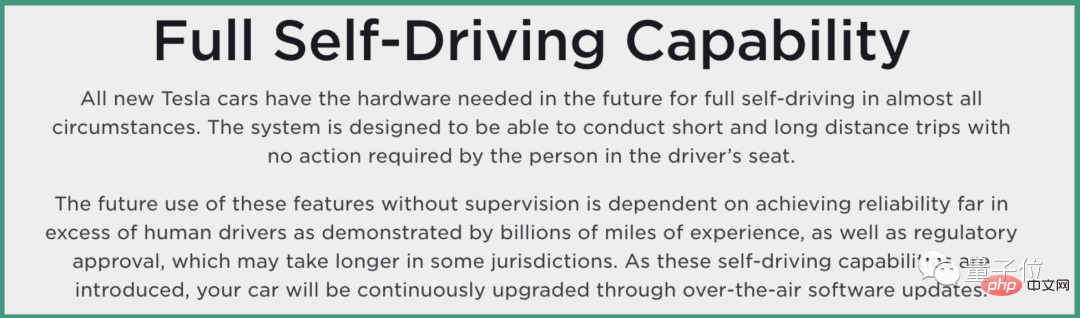

At the same time, Tesla's official introduction also briefly describes its "full self-driving" capability:

All new Teslas have the hardware needed for full self-driving in almost all situations in the future.

Able to make both short and long trips without the need for a driver.

However, for Autopilot to achieve these goals, it must be far safer than human drivers.

Tesla says it has demonstrated this over billions of miles of driving.

Musk has also spoken out about Tesla's safety more than once:

Tesla's full self-driving is far safer than the average driver.

But is this true?

Whatever Tesla and Musk say, in practice Autopilot's safety has been controversial.

For example, frequent "ghost braking" incidents have pushed it to the forefront of public opinion again and again, and are also one of the main reasons NHTSA launched this investigation.

"Ghost braking" refers to a Tesla with Autopilot engaged performing unnecessary emergency braking even though there is no obstacle ahead and no risk of colliding with the vehicle in front.

This brings huge safety risks to drivers and other vehicles on the road.

Not only that: a closer look at Tesla-related news stories shows that many of them involve Autopilot safety.

So what does NHTSA think of this?

In this document, NHTSA reminds readers that no fully self-driving cars are currently on the market: "Every vehicle requires the driver to be in control at all times, and all state laws hold drivers responsible for the operation of their vehicles."

As for what impact this investigation will have on Tesla, according to NHTSA:

If a safety-related defect exists, NHTSA has the authority to send the manufacturer a "recall request" letter.

......

Finally, a quick poll: do you trust Autopilot?

Full report:

https://static.nhtsa.gov/odi/inv/2022/INOA-EA22002-3184.PDF
