Use 2D images to create a 3D human body. You can wear any clothes and change your movements.

Thanks to the differentiable rendering provided by NeRF, recent 3D generative models have achieved stunning results on static objects. For a more complex, deformable category such as the human body, however, 3D generation remains very challenging. This paper proposes an efficient compositional NeRF representation of the human body that enables high-resolution (512x256) 3D human generation without a super-resolution model. EVA3D significantly outperforms existing methods on four large-scale human datasets, and the code has been open-sourced.

- Paper name: EVA3D: Compositional 3D Human Generation from 2D Image Collections

- Paper address: https://arxiv.org/abs/2210.04888

- Project homepage: https://hongfz16.github.io/projects/EVA3D.html

- Open source code: https://github.com/hongfz16/EVA3D

- Colab Demo: https://colab.research.google.com/github/hongfz16/EVA3D/blob/main/notebook/EVA3D_Demo.ipynb

- Hugging Face Demo: https://huggingface.co/spaces/hongfz16/EVA3D

Background

To address this challenge, this paper proposes an efficient compositional 3D human NeRF representation that enables high-resolution (512x256) 3D human GAN training and generation. The sections below introduce the proposed human NeRF representation and the 3D human GAN training framework.

Efficient Human NeRF Representation

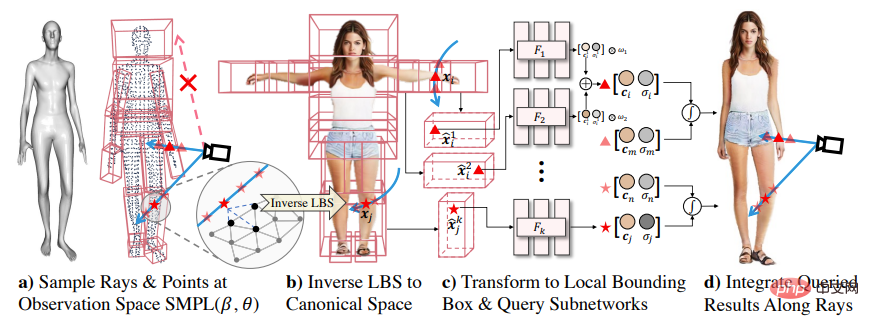

The proposed human NeRF is built on the parametric human model SMPL, which provides convenient control over body pose and shape. For NeRF modeling, as shown in the figure, the human body is divided into 16 parts, and each part is modeled locally by its own small NeRF network. At render time, only the local NeRFs whose regions contain a given sample point need to be evaluated. This sparse rendering scheme makes native high-resolution rendering possible at a lower computational cost.

For example, to render a human body with given shape and pose parameters, the sampling points in posed space are first transformed into canonical space via inverse linear blend skinning (inverse LBS). The method then determines which local NeRF bounding boxes each canonical-space point falls into and evaluates those NeRFs to obtain the point's color and density. When a point lies in the overlapping region of several local NeRFs, every one of them is evaluated and the results are blended with a window function. Finally, these values are integrated along each camera ray (volume rendering) to produce the final image.
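The rendering steps above can be sketched in plain Python. This is a minimal, dependency-free illustration under stated assumptions, not EVA3D's implementation: the per-point skinning weights are taken as given, each bone transform is a 3x3 rotation plus a translation, `box_window` is a hypothetical window function (the article does not give its form), each local NeRF is replaced by an arbitrary `nerf_fn(x) -> (rgb, sigma)` callable, and compositing uses the standard NeRF quadrature.

```python
import math

# Hypothetical, dependency-free sketch of compositional human NeRF rendering.


def mat3_inverse(m):
    """Invert a 3x3 matrix (list of rows) via adjugate over determinant."""
    (a, b, c), (d, e, f), (g, h, i) = m
    det = a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)
    if abs(det) < 1e-12:
        raise ValueError("blended bone transform is singular")
    adj = [[e * i - f * h, c * h - b * i, b * f - c * e],
           [f * g - d * i, a * i - c * g, c * d - a * f],
           [d * h - e * g, b * g - a * h, a * e - b * d]]
    return [[v / det for v in row] for row in adj]


def inverse_lbs(x_posed, rotations, translations, weights):
    """Map a posed-space point to canonical space by inverting the blended
    transform x_posed = R_b @ x_c + t_b, where R_b = sum_k w_k R_k and
    t_b = sum_k w_k t_k. The skinning weights are assumed to be given."""
    R_b = [[sum(w * R[r][c] for w, R in zip(weights, rotations)) for c in range(3)]
           for r in range(3)]
    t_b = [sum(w * t[k] for w, t in zip(weights, translations)) for k in range(3)]
    R_inv = mat3_inverse(R_b)
    d = [x_posed[k] - t_b[k] for k in range(3)]
    return tuple(sum(R_inv[r][c] * d[c] for c in range(3)) for r in range(3))


def box_window(x_c, box_min, box_max, m=10.0, n=4):
    """Hypothetical window: 0 outside the part's box, 1 at its center,
    decaying smoothly in between (the article does not specify the form)."""
    total = 0.0
    for k in range(3):
        center = (box_min[k] + box_max[k]) / 2.0
        half = (box_max[k] - box_min[k]) / 2.0
        u = (x_c[k] - center) / half  # normalized coordinate in [-1, 1]
        if abs(u) > 1.0:
            return 0.0  # outside this local NeRF's bounding box
        total += abs(u) ** n
    return math.exp(-m * total)


def query_parts(x_c, parts):
    """Evaluate only the local NeRFs whose boxes contain x_c and blend them.
    `parts` holds (box_min, box_max, nerf_fn) with nerf_fn(x) -> (rgb, sigma)."""
    total_w, sigma, rgb = 0.0, 0.0, [0.0, 0.0, 0.0]
    for box_min, box_max, nerf_fn in parts:
        w = box_window(x_c, box_min, box_max)
        if w == 0.0:
            continue  # sparse: this local network is never run for x_c
        c, s = nerf_fn(x_c)
        total_w += w
        sigma += w * s
        rgb = [acc + w * ck for acc, ck in zip(rgb, c)]
    if total_w == 0.0:
        return (0.0, 0.0, 0.0), 0.0  # empty space: no part covers x_c
    return tuple(v / total_w for v in rgb), sigma / total_w


def composite(samples, deltas):
    """Standard NeRF quadrature along one ray; samples = [(rgb, sigma), ...]
    ordered near to far, deltas = distances between consecutive samples."""
    transmittance, out = 1.0, [0.0, 0.0, 0.0]
    for (rgb, sigma), delta in zip(samples, deltas):
        alpha = 1.0 - math.exp(-sigma * delta)
        out = [o + transmittance * alpha * c for o, c in zip(out, rgb)]
        transmittance *= 1.0 - alpha
    return tuple(out)
```

Skipping every part whose window weight is zero is what makes the scheme sparse: a sample far from, say, the left forearm never runs that part's network, so the per-sample cost depends on how many boxes overlap there rather than on the total number of parts.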

Three-dimensional human body GAN framework

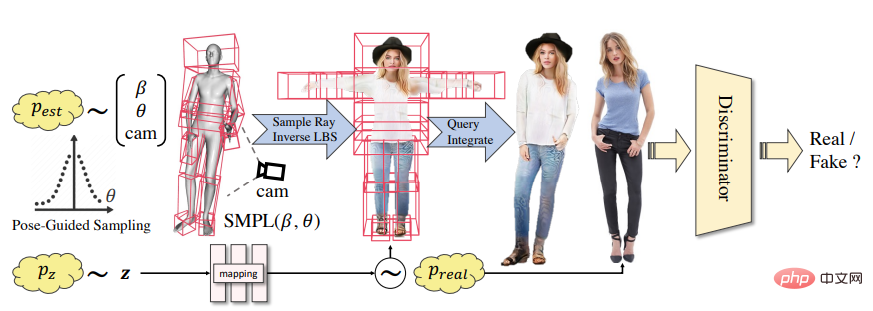

Building on this efficient human NeRF representation, the paper implements a 3D human GAN training framework. In each training iteration, SMPL parameters and camera parameters are first sampled from the dataset, and a Gaussian noise vector z is drawn at random. The proposed human NeRF then renders these sampled parameters into a 2D human image, which serves as a fake sample. Together with real samples from the dataset, these fake samples are used for adversarial GAN training.
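One iteration of the loop described above can be sketched as follows. This is a hedged, framework-free illustration: the dataset entry layout (`"image"`, `"smpl"`, `"camera"` keys), `render_fn` (standing in for the human NeRF) and `disc_fn` (standing in for the 2D discriminator) are hypothetical, and the non-saturating logistic GAN loss is an assumption, since the article does not state which adversarial loss EVA3D uses.

```python
import math
import random


def softplus(x):
    # Numerically stable log(1 + exp(x)).
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def gan_step_losses(dataset, render_fn, disc_fn, z_dim, rng):
    """Sketch of one training iteration; returns (d_loss, g_loss).

    dataset: list of dicts with hypothetical keys "image", "smpl", "camera".
    render_fn(z, smpl, camera) -> image, a stand-in for the human NeRF.
    disc_fn(image) -> float logit, a stand-in for the 2D discriminator.
    """
    # 1. Sample SMPL (pose/shape) and camera parameters from the dataset.
    cond = rng.choice(dataset)
    # 2. Draw the Gaussian noise z that controls appearance.
    z = [rng.gauss(0.0, 1.0) for _ in range(z_dim)]
    # 3. Render a fake 2D image with the sampled body and camera.
    fake = render_fn(z, cond["smpl"], cond["camera"])
    # 4. Take a real image from the dataset for the adversarial comparison.
    real = rng.choice(dataset)["image"]
    # Non-saturating logistic losses (assumed, not confirmed by the article).
    d_loss = softplus(-disc_fn(real)) + softplus(disc_fn(fake))
    g_loss = softplus(-disc_fn(fake))
    return d_loss, g_loss
```

In a real implementation both losses would be computed on batches and backpropagated through the renderer and discriminator; here they are just numbers, which keeps the control flow of a single iteration visible.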

Extremely imbalanced data sets

Two-dimensional human datasets such as DeepFashion are usually collected for 2D vision tasks, so the diversity of human poses they contain is very limited. To quantify the imbalance, the paper measures the distribution of face orientations of the people pictured in DeepFashion. In the figure below, the orange line shows this distribution: it is extremely imbalanced, which makes learning a 3D human representation difficult. To alleviate the problem, the paper proposes a pose-guided sampling method that flattens the distribution curve, as shown by the other colored lines in the figure. During training, the model therefore sees more diverse images, including more views of the body from large angles, which helps it learn 3D human geometry. The paper also analyzes the sampling parameters experimentally. As the table below shows, adding pose-guided sampling slightly worsens image quality (FID) but significantly improves the learned 3D geometry (Depth).
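The idea of flattening an imbalanced orientation distribution can be illustrated with inverse-frequency sampling. This is a generic sketch, not EVA3D's exact pose-guided scheme: it assumes each image has a face yaw angle in degrees (0 meaning frontal), bins the angles into a histogram, and weights each image by `count(bin) ** -alpha`. The exponent `alpha` is a hypothetical knob: `alpha=0` reproduces the original dataset distribution and `alpha=1` gives every occupied bin equal total probability.

```python
import random
from collections import Counter


def orientation_bin(angle_deg, num_bins):
    # Map a yaw angle (any real value, wrapped to [-180, 180)) to a bin index.
    wrapped = (angle_deg + 180.0) % 360.0
    return min(int(wrapped / (360.0 / num_bins)), num_bins - 1)


def flattened_sampling_weights(angles_deg, num_bins=36, alpha=1.0):
    """Per-image sampling probabilities that flatten the orientation histogram."""
    bins = [orientation_bin(a, num_bins) for a in angles_deg]
    counts = Counter(bins)
    raw = [counts[b] ** (-alpha) for b in bins]  # rare orientations get larger weights
    total = sum(raw)
    return [w / total for w in raw]


def sample_batch(angles_deg, batch_size, alpha=1.0, num_bins=36, rng=random):
    """Draw image indices (with replacement) from the flattened distribution."""
    weights = flattened_sampling_weights(angles_deg, num_bins, alpha)
    return rng.choices(range(len(angles_deg)), weights=weights, k=batch_size)
```

With eight frontal images and two side views, `alpha=1.0` gives each image in the frontal bin probability 1/16 and each side view 1/4, so both orientations are drawn half the time. Intermediate values of `alpha` trade off between the two extremes, mirroring the image-quality versus geometry trade-off described above.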

High-quality generation results

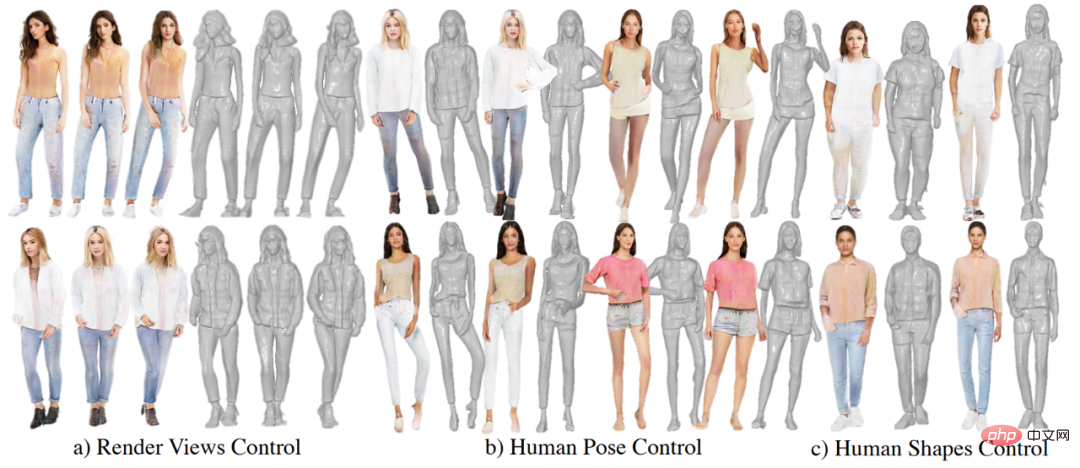

The following figure shows some EVA3D generation results. EVA3D can randomly sample human appearances while controlling the rendering camera parameters, human pose, and body shape.

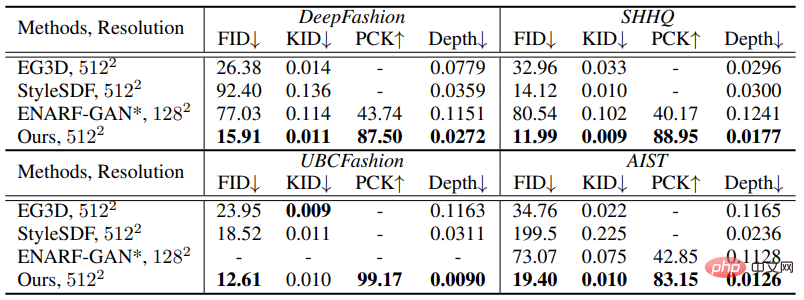

The paper conducts experiments on four large-scale human datasets: DeepFashion, SHHQ, UBCFashion, and AIST++. It compares EVA3D with the state-of-the-art static 3D object generation methods EG3D and StyleSDF, as well as with ENARF-GAN, a method designed specifically for 3D human generation. The evaluation metrics cover rendering quality (FID/KID), accuracy of body control (PCK), and quality of the generated geometry (Depth). As shown in the figure below, EVA3D significantly outperforms the previous methods on all datasets and all metrics.
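Of these metrics, PCK (Percentage of Correct Keypoints) is simple enough to show directly. The sketch below computes the generic definition: the fraction of predicted 2D keypoints that lie within a distance threshold of the ground truth. How EVA3D obtains the two keypoint sets (for example, projected SMPL joints versus a pose estimator run on the rendered image) and which threshold it uses are assumptions not covered by the article.

```python
import math


def pck(pred_keypoints, gt_keypoints, threshold):
    """Fraction of predicted 2D keypoints within `threshold` of ground truth.

    Both arguments are equally long lists of (x, y) pairs; the threshold is in
    the same units as the coordinates (pixels here, by assumption).
    """
    if len(pred_keypoints) != len(gt_keypoints) or not gt_keypoints:
        raise ValueError("need equally sized, non-empty keypoint lists")
    correct = sum(
        1
        for (px, py), (gx, gy) in zip(pred_keypoints, gt_keypoints)
        if math.hypot(px - gx, py - gy) <= threshold
    )
    return correct / len(gt_keypoints)
```

A higher PCK means the generated body follows the requested SMPL pose more faithfully, which is why it complements FID/KID (appearance) and Depth (geometry).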

Application potential

Finally, the paper demonstrates some potential applications of EVA3D. First, it tests interpolation in the latent space. As shown in the figure below, EVA3D produces smooth transitions between two 3D humans, and the intermediate results remain high quality. The paper also experiments with GAN inversion, using Pivotal Tuning Inversion, an algorithm commonly used for 2D GAN inversion. As shown on the right of the figure below, this method recovers the appearance of the target reasonably well, but loses many details in the geometry. 3D GAN inversion therefore remains a very challenging task.
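Latent-space interpolation amounts to generating a sequence of codes between two endpoints and rendering each one with the same body and camera. The sketch below shows two common choices; the article does not say which one EVA3D uses, so both are offered as assumptions. Linear interpolation (`lerp`) is the simplest, while spherical interpolation (`slerp`) keeps intermediate codes at a norm typical of Gaussian samples.

```python
import math


def lerp(z0, z1, t):
    # Straight-line interpolation between two latent codes.
    return [(1.0 - t) * a + t * b for a, b in zip(z0, z1)]


def slerp(z0, z1, t):
    # Spherical interpolation along the great circle between z0 and z1
    # (both codes are assumed to be non-zero vectors).
    n0 = math.sqrt(sum(a * a for a in z0))
    n1 = math.sqrt(sum(b * b for b in z1))
    cos_omega = sum(a * b for a, b in zip(z0, z1)) / (n0 * n1)
    omega = math.acos(max(-1.0, min(1.0, cos_omega)))
    if omega < 1e-6:
        return lerp(z0, z1, t)  # nearly parallel codes: fall back to lerp
    s = math.sin(omega)
    return [(math.sin((1.0 - t) * omega) * a + math.sin(t * omega) * b) / s
            for a, b in zip(z0, z1)]


def interpolation_path(z0, z1, steps, interp=lerp):
    """Return `steps` codes from z0 to z1 inclusive."""
    if steps < 2:
        raise ValueError("need at least the two endpoints")
    return [interp(z0, z1, i / (steps - 1)) for i in range(steps)]
```

Each code in the path would then be passed to the generator together with fixed SMPL and camera parameters, so that only the appearance changes from frame to frame.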

Conclusion

This paper proposes EVA3D, the first high-resolution 3D human NeRF generation method, which can be trained using only 2D human image data. EVA3D achieves state-of-the-art performance on multiple large-scale human datasets and shows potential for downstream applications. The training and testing code of EVA3D has been open-sourced, and everyone is welcome to try it!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

How to clear desktop background recent image history in Windows 11

Apr 14, 2023 pm 01:37 PM

How to clear desktop background recent image history in Windows 11

Apr 14, 2023 pm 01:37 PM

<p>Windows 11 improves personalization in the system, allowing users to view a recent history of previously made desktop background changes. When you enter the personalization section in the Windows System Settings application, you can see various options, changing the background wallpaper is one of them. But now you can see the latest history of background wallpapers set on your system. If you don't like seeing this and want to clear or delete this recent history, continue reading this article, which will help you learn more about how to do it using Registry Editor. </p><h2>How to use registry editing

How to Download Windows Spotlight Wallpaper Image on PC

Aug 23, 2023 pm 02:06 PM

How to Download Windows Spotlight Wallpaper Image on PC

Aug 23, 2023 pm 02:06 PM

Windows are never one to neglect aesthetics. From the bucolic green fields of XP to the blue swirling design of Windows 11, default desktop wallpapers have been a source of user delight for years. With Windows Spotlight, you now have direct access to beautiful, awe-inspiring images for your lock screen and desktop wallpaper every day. Unfortunately, these images don't hang out. If you have fallen in love with one of the Windows spotlight images, then you will want to know how to download them so that you can keep them as your background for a while. Here's everything you need to know. What is WindowsSpotlight? Window Spotlight is an automatic wallpaper updater available from Personalization > in the Settings app

How to use image semantic segmentation technology in Python?

Jun 06, 2023 am 08:03 AM

How to use image semantic segmentation technology in Python?

Jun 06, 2023 am 08:03 AM

With the continuous development of artificial intelligence technology, image semantic segmentation technology has become a popular research direction in the field of image analysis. In image semantic segmentation, we segment different areas in an image and classify each area to achieve a comprehensive understanding of the image. Python is a well-known programming language. Its powerful data analysis and data visualization capabilities make it the first choice in the field of artificial intelligence technology research. This article will introduce how to use image semantic segmentation technology in Python. 1. Prerequisite knowledge is deepening

iOS 17: How to use one-click cropping in photos

Sep 20, 2023 pm 08:45 PM

iOS 17: How to use one-click cropping in photos

Sep 20, 2023 pm 08:45 PM

With the iOS 17 Photos app, Apple makes it easier to crop photos to your specifications. Read on to learn how. Previously in iOS 16, cropping an image in the Photos app involved several steps: Tap the editing interface, select the crop tool, and then adjust the crop using a pinch-to-zoom gesture or dragging the corners of the crop tool. In iOS 17, Apple has thankfully simplified this process so that when you zoom in on any selected photo in your Photos library, a new Crop button automatically appears in the upper right corner of the screen. Clicking on it will bring up the full cropping interface with the zoom level of your choice, so you can crop to the part of the image you like, rotate the image, invert the image, or apply screen ratio, or use markers

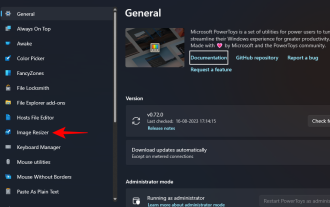

How to batch resize images using PowerToys on Windows

Aug 23, 2023 pm 07:49 PM

How to batch resize images using PowerToys on Windows

Aug 23, 2023 pm 07:49 PM

Those who have to work with image files on a daily basis often have to resize them to fit the needs of their projects and jobs. However, if you have too many images to process, resizing them individually can consume a lot of time and effort. In this case, a tool like PowerToys can come in handy to, among other things, batch resize image files using its image resizer utility. Here's how to set up your Image Resizer settings and start batch resizing images with PowerToys. How to Batch Resize Images with PowerToys PowerToys is an all-in-one program with a variety of utilities and features to help you speed up your daily tasks. One of its utilities is images

Use 2D images to create a 3D human body. You can wear any clothes and change your movements.

Apr 11, 2023 pm 02:31 PM

Use 2D images to create a 3D human body. You can wear any clothes and change your movements.

Apr 11, 2023 pm 02:31 PM

Thanks to the differentiable rendering provided by NeRF, recent 3D generative models have achieved stunning results on stationary objects. However, in a more complex and deformable category such as the human body, 3D generation still poses great challenges. This paper proposes an efficient combined NeRF representation of the human body, enabling high-resolution (512x256) 3D human body generation without the use of super-resolution models. EVA3D has significantly surpassed existing solutions on four large-scale human body data sets, and the code has been open source. Paper name: EVA3D: Compositional 3D Human Generation from 2D image Collections Paper address: http

New perspective on image generation: discussing NeRF-based generalization methods

Apr 09, 2023 pm 05:31 PM

New perspective on image generation: discussing NeRF-based generalization methods

Apr 09, 2023 pm 05:31 PM

New perspective image generation (NVS) is an application field of computer vision. In the 1998 SuperBowl game, CMU's RI demonstrated NVS given multi-camera stereo vision (MVS). At that time, this technology was transferred to a sports TV station in the United States. , but it was not commercialized in the end; the British BBC Broadcasting Company invested in research and development for this, but it was not truly commercialized. In the field of image-based rendering (IBR), there is a branch of NVS applications, namely depth image-based rendering (DBIR). In addition, 3D TV, which was very popular in 2010, also needed to obtain binocular stereoscopic effects from monocular video, but due to the immaturity of the technology, it did not become popular in the end. At that time, methods based on machine learning had begun to be studied, such as

How to edit photos on iPhone using iOS 17

Nov 30, 2023 pm 11:39 PM

How to edit photos on iPhone using iOS 17

Nov 30, 2023 pm 11:39 PM

Mobile photography has fundamentally changed the way we capture and share life’s moments. The advent of smartphones, especially the iPhone, played a key role in this shift. Known for its advanced camera technology and user-friendly editing features, iPhone has become the first choice for amateur and experienced photographers alike. The launch of iOS 17 marks an important milestone in this journey. Apple's latest update brings an enhanced set of photo editing features, giving users a more powerful toolkit to turn their everyday snapshots into visually engaging and artistically rich images. This technological development not only simplifies the photography process but also opens up new avenues for creative expression, allowing users to effortlessly inject a professional touch into their photos