OpenAI officially launched an AI-generated content identifier, but the success rate is only 26%. Netizens: It's not as good as a paper plagiarism checking tool.


It is easy to forget that ChatGPT was officially released at the end of November last year, only two months ago. Yet the craze it set off has pushed technology companies to follow suit, spawned unicorn startups, and led the academic community to revise its requirements for paper acceptance.

After ChatGPT triggered a broad debate in the AI field over whether it should be banned, OpenAI's tool for telling AI-generated text from human writing is finally here.

On January 31, OpenAI officially announced a classifier that distinguishes human-written text from AI-generated text. It is designed to identify content generated by OpenAI's own ChatGPT, GPT-3, and other models. Its accuracy, however, looks worrying: OpenAI notes in its blog post that the classifier correctly flags only about 26% of AI-written text with high confidence. Even so, the company believes that, used alongside other methods, it can help prevent AI text generators from being misused.

"The purpose of our proposed classifier is to help reduce confusion caused by AI-generated text. However, it still has some limitations, so it should be used as a supplement to other methods of determining the source of text rather than as the primary decision-making tool," an OpenAI spokesperson told the media via email. "We are getting feedback on whether tools like this are useful with this initial classifier, and hope to share improved methods in the future."

Enthusiasm for text-generating AI in particular is growing, but it has been met with concerns about misuse, and critics are calling on the creators of these tools to take steps to mitigate their potentially harmful effects.

Faced with a flood of AI-generated content, some sectors moved quickly to impose restrictions. Some of the largest school districts in the United States have banned ChatGPT on their networks and devices, citing concerns about its effect on students' learning and about the accuracy of the content the tool generates. Websites including Stack Overflow have also banned users from sharing content generated by ChatGPT, saying that AI makes it too easy to flood normal discussions with useless content.

These situations highlight the need for AI detection tools. Although its results are unsatisfying, the OpenAI AI Text Classifier is architecturally in line with the GPT series. Like ChatGPT, it is a language model trained on many public text examples from the web. Unlike ChatGPT, it is fine-tuned to predict the likelihood that a piece of text was generated by AI, and not just by ChatGPT but by any text-generating AI model.

Specifically, OpenAI trained its AI text classifier on text from 34 text generation systems across five different organizations, including OpenAI itself. These samples were paired with similar (but not identical) human-written text from Wikipedia, from websites pulled from links shared on Reddit, and from a set of "human demonstrations" collected for OpenAI's text generation systems.

Note that the OpenAI text classifier is not suitable for all types of text. The input must be at least 1,000 characters, or approximately 150 to 250 words. It also lacks the plagiarism-checking capabilities of paper detection platforms, an unfortunate limitation given that text-generating AI has been shown to copy "correct answers" from its training set. OpenAI said that because the dataset is predominantly English, the classifier is more likely to make errors on text written by children or in languages other than English.

The detector does not give a definite yes or no answer when evaluating whether a given piece of text was generated by AI. Depending on its confidence level, it marks the text as "very unlikely" to be AI-generated (less than 10% probability), "unlikely" to be AI-generated (between 10% and 45%), "unclear whether it was" AI-generated (45% to 90%), "possibly" AI-generated (90% to 98%), or "very likely" AI-generated (more than 98%).
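The confidence bands above can be sketched as a small lookup function. This is only an illustration of the thresholds quoted in this article, not OpenAI's implementation: the function name `label_for_probability` is hypothetical, and the handling of exact boundary values (for example, whether exactly 45% counts as "unlikely" or "unclear") is an assumption, since the article does not specify it.

```python
def label_for_probability(p: float) -> str:
    """Map a predicted probability of AI authorship to the article's five labels.

    Hypothetical helper: thresholds come from the article, but the treatment
    of exact boundary values is an assumption.
    """
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be between 0 and 1")
    if p < 0.10:
        return "very unlikely"           # less than 10%
    if p < 0.45:
        return "unlikely"                # 10% to 45%
    if p < 0.90:
        return "unclear whether it was"  # 45% to 90%
    if p <= 0.98:
        return "possibly"                # 90% to 98%
    return "very likely"                 # more than 98%


if __name__ == "__main__":
    for p in (0.05, 0.30, 0.50, 0.95, 0.99):
        print(f"{p:.2f} -> {label_for_probability(p)}")
```

Note how wide the middle band is: under these thresholds, any score between 45% and 90% yields no usable verdict at all.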

This graded output looks very similar to that of image recognition AI, except for the accuracy. According to OpenAI, the classifier incorrectly labels human-written text as AI-written 9% of the time.

In practice, the results are indeed poor

OpenAI claims that the success rate of its AI text classifier is about 26%. Netizens who tried it found that its detection performance was indeed poor.

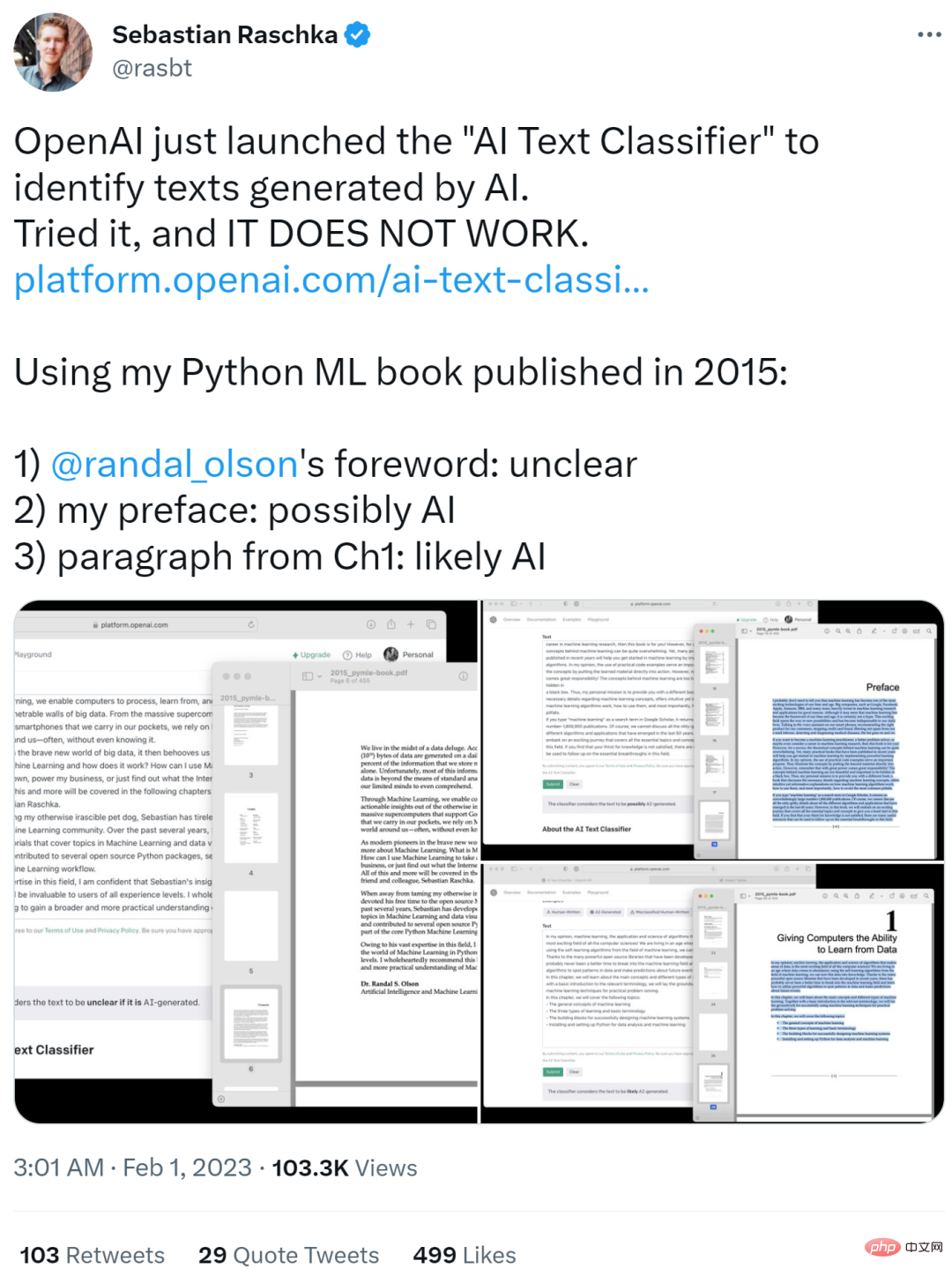

After trying it, the well-known ML and AI researcher Sebastian Raschka gave his verdict: "It does not work." He used text from the original 2015 edition of his Python ML book as input, with the following results.

- Randy Olson's foreword was labeled "unclear whether it was" AI-generated

- His own preface was labeled "possibly" AI-generated

- A paragraph from the first chapter was labeled "likely" AI-generated

Raschka called this an interesting example, but said he already feels bad for students who may be punished in the future because of absurd detection results on their papers.

He therefore asked that anyone deploying such a model share a confusion matrix. Otherwise, if educators adopt the model for grading, it could cause real-world harm. There should also be transparency about false positives and false negatives.

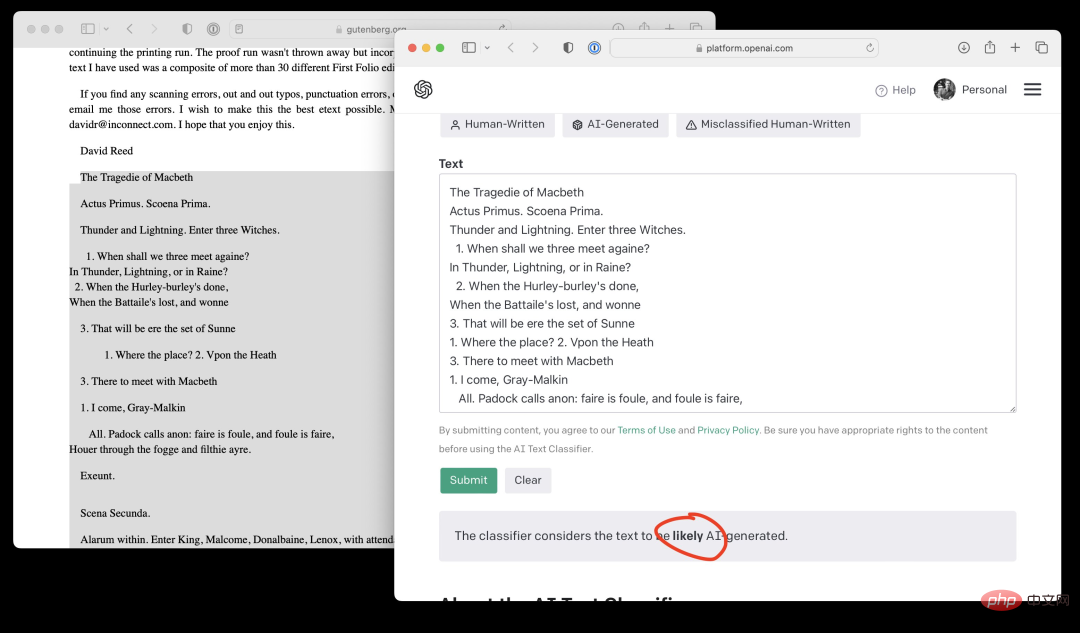
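To see why a confusion matrix matters here, the following minimal sketch builds one from the two rates reported in this article: about 26% of AI-written text correctly flagged, and 9% of human-written text wrongly flagged. Treating the 26% figure as a true positive rate is an interpretation, and the class sizes (100 AI-written and 900 human-written essays) are purely hypothetical. The function names are illustrative and not part of any OpenAI tool.

```python
def confusion_matrix(n_ai: int, n_human: int,
                     tpr: float = 0.26, fpr: float = 0.09) -> dict:
    """Expected confusion-matrix counts for a detector with the given rates.

    tpr: share of AI-written texts flagged as AI (26% per the article).
    fpr: share of human-written texts flagged as AI (9% per the article).
    """
    tp = round(tpr * n_ai)     # AI-written, flagged as AI
    fn = n_ai - tp             # AI-written, missed
    fp = round(fpr * n_human)  # human-written, wrongly flagged
    tn = n_human - fp          # human-written, correctly passed
    return {"tp": tp, "fn": fn, "fp": fp, "tn": tn}


def precision(cm: dict) -> float:
    """Fraction of flagged texts that really were AI-written."""
    flagged = cm["tp"] + cm["fp"]
    return cm["tp"] / flagged if flagged else 0.0


if __name__ == "__main__":
    # Hypothetical class of 1,000 essays, 10% of them AI-written.
    cm = confusion_matrix(n_ai=100, n_human=900)
    print(cm)
    print(f"precision: {precision(cm):.3f}")
```

Under these assumed numbers, 81 human-written essays would be flagged alongside only 26 AI-written ones, so roughly three out of four accusations would be wrong. The result depends heavily on the assumed share of AI-written essays, which is exactly the kind of detail a published confusion matrix would make visible.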

Raschka also fed in the first page of Shakespeare's "Macbeth", and the OpenAI AI text classifier judged it very likely to be AI-generated. Simply outrageous!

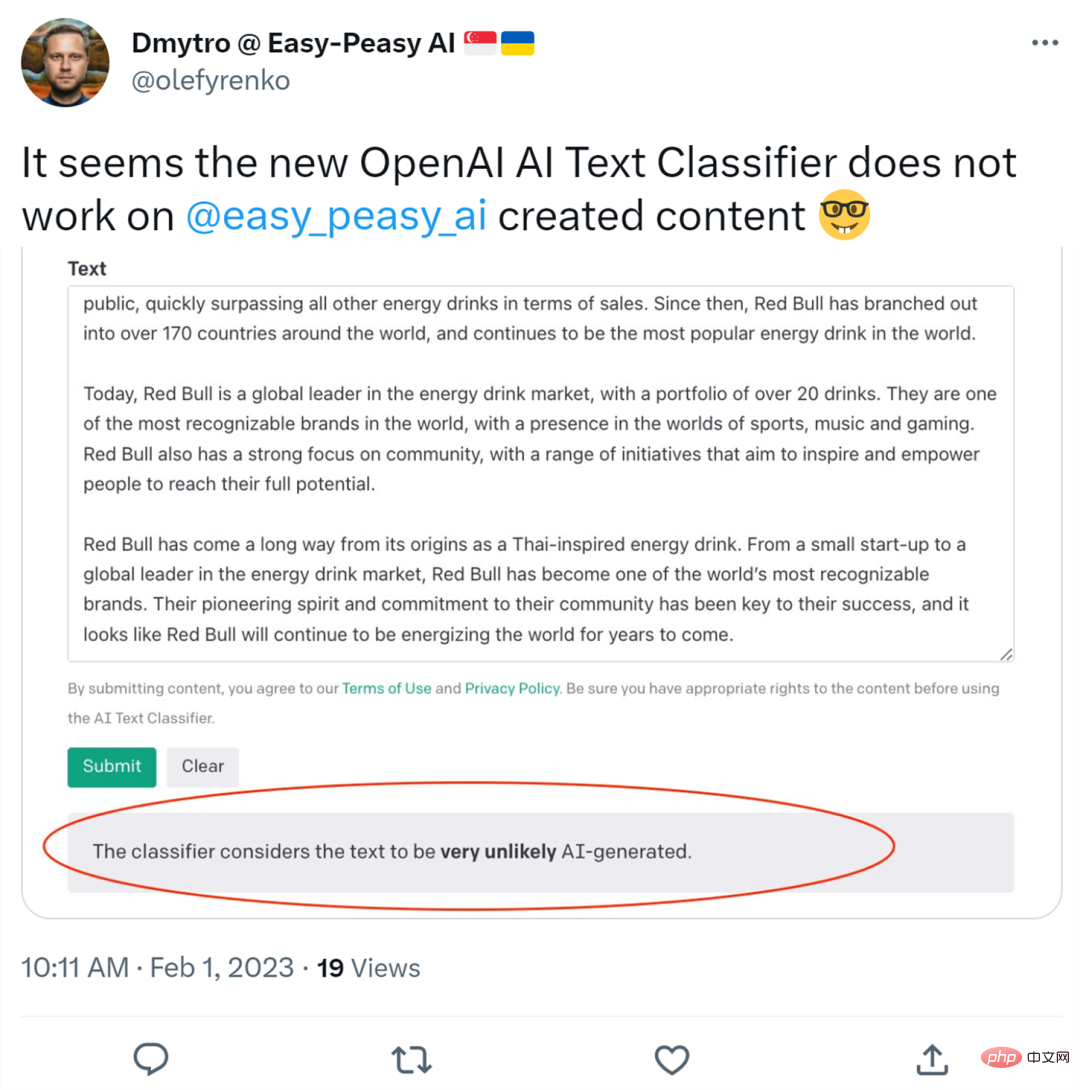

Others submitted content created by the AI writing tool Easy-Peasy.AI, and the OpenAI AI text classifier judged it very unlikely to be AI-generated.

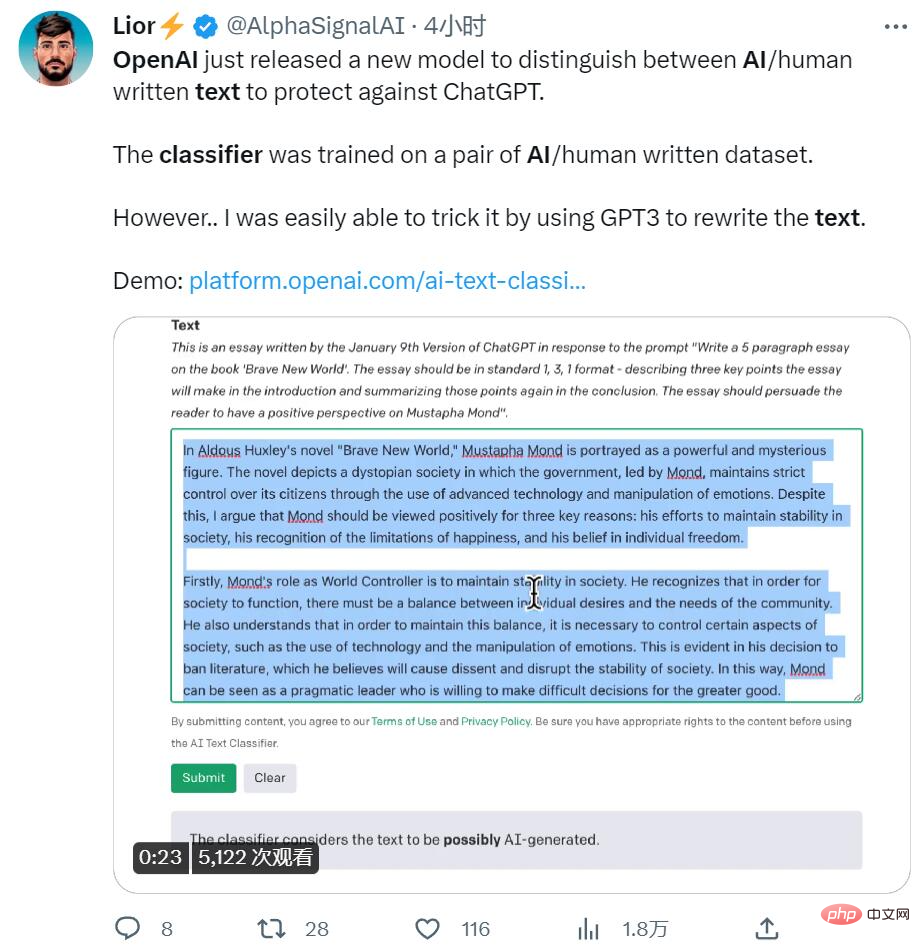

Finally, someone ran the text through repeated translation and had GPT-3 rewrite it, which also fooled the classifier.

To sum up: the classifier misses AI-generated text, misflags human-written text, and cannot see through simple rewriting tricks. At least in the field of AI text detection, OpenAI still has work to do.

The above is the detailed content of OpenAI officially launched an AI-generated content identifier, but the success rate is only 26%. Netizens: It's not as good as a paper plagiarism checking tool.. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

How to output a countdown in C language

Apr 04, 2025 am 08:54 AM

How to output a countdown in C language

Apr 04, 2025 am 08:54 AM

How to output a countdown in C? Answer: Use loop statements. Steps: 1. Define the variable n and store the countdown number to output; 2. Use the while loop to continuously print n until n is less than 1; 3. In the loop body, print out the value of n; 4. At the end of the loop, subtract n by 1 to output the next smaller reciprocal.

What are the pointer parameters in the parentheses of the C language function?

Apr 03, 2025 pm 11:48 PM

What are the pointer parameters in the parentheses of the C language function?

Apr 03, 2025 pm 11:48 PM

The pointer parameters of C language function directly operate the memory area passed by the caller, including pointers to integers, strings, or structures. When using pointer parameters, you need to be careful to modify the memory pointed to by the pointer to avoid errors or memory problems. For double pointers to strings, modifying the pointer itself will lead to pointing to new strings, and memory management needs to be paid attention to. When handling pointer parameters to structures or arrays, you need to carefully check the pointer type and boundaries to avoid out-of-bounds access.

What are the rules for function definition and call in C language?

Apr 03, 2025 pm 11:57 PM

What are the rules for function definition and call in C language?

Apr 03, 2025 pm 11:57 PM

A C language function consists of a parameter list, function body, return value type and function name. When a function is called, the parameters are copied to the function through the value transfer mechanism, and will not affect external variables. Pointer passes directly to the memory address, modifying the pointer will affect external variables. Function prototype declaration is used to inform the compiler of function signatures to avoid compilation errors. Stack space is used to store function local variables and parameters. Too much recursion or too much space can cause stack overflow.

How to define the call declaration format of c language function

Apr 04, 2025 am 06:03 AM

How to define the call declaration format of c language function

Apr 04, 2025 am 06:03 AM

C language functions include definitions, calls and declarations. Function definition specifies function name, parameters and return type, function body implements functions; function calls execute functions and provide parameters; function declarations inform the compiler of function type. Value pass is used for parameter pass, pay attention to the return type, maintain a consistent code style, and handle errors in functions. Mastering this knowledge can help write elegant, robust C code.

CS-Week 3

Apr 04, 2025 am 06:06 AM

CS-Week 3

Apr 04, 2025 am 06:06 AM

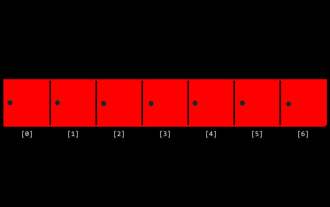

Algorithms are the set of instructions to solve problems, and their execution speed and memory usage vary. In programming, many algorithms are based on data search and sorting. This article will introduce several data retrieval and sorting algorithms. Linear search assumes that there is an array [20,500,10,5,100,1,50] and needs to find the number 50. The linear search algorithm checks each element in the array one by one until the target value is found or the complete array is traversed. The algorithm flowchart is as follows: The pseudo-code for linear search is as follows: Check each element: If the target value is found: Return true Return false C language implementation: #include#includeintmain(void){i

Integers in C: a little history

Apr 04, 2025 am 06:09 AM

Integers in C: a little history

Apr 04, 2025 am 06:09 AM

Integers are the most basic data type in programming and can be regarded as the cornerstone of programming. The job of a programmer is to give these numbers meanings. No matter how complex the software is, it ultimately comes down to integer operations, because the processor only understands integers. To represent negative numbers, we introduced two's complement; to represent decimal numbers, we created scientific notation, so there are floating-point numbers. But in the final analysis, everything is still inseparable from 0 and 1. A brief history of integers In C, int is almost the default type. Although the compiler may issue a warning, in many cases you can still write code like this: main(void){return0;} From a technical point of view, this is equivalent to the following code: intmain(void){return0;}

Zustand asynchronous operation: How to ensure the latest state obtained by useStore?

Apr 04, 2025 pm 02:09 PM

Zustand asynchronous operation: How to ensure the latest state obtained by useStore?

Apr 04, 2025 pm 02:09 PM

Data update problems in zustand asynchronous operations. When using the zustand state management library, you often encounter the problem of data updates that cause asynchronous operations to be untimely. �...

How to implement nesting effect of text annotations in Quill editor?

Apr 04, 2025 pm 05:21 PM

How to implement nesting effect of text annotations in Quill editor?

Apr 04, 2025 pm 05:21 PM

A solution to implement text annotation nesting in Quill Editor. When using Quill Editor for text annotation, we often need to use the Quill Editor to...