Alert! The energy consumption crisis caused by the explosion of ChatGPT poses huge challenges to data center operators

Recently, ChatGPT, the AI chatbot owned by the American company OpenAI, has taken social media by storm. It has attracted more than US$10 billion in investment, set off a boom in artificial intelligence applications in the capital markets, and has been in the limelight for some time.

Microsoft was the first to announce a $10 billion investment in OpenAI. Amazon and BuzzFeed (often described as the American counterpart of China's "Toutiao", or "Today's Headlines") then announced that they would use ChatGPT in their daily work, and Baidu announced that it would launch a "Chinese version" of the ChatGPT chatbot in March. With so many technology companies adding fuel to the fire, ChatGPT quickly drew global attention.

Data show that Amazon's robot deployments are growing rapidly, by about 1,000 robots per day. In addition, Facebook's parent company Meta plans to invest an additional US$4 billion to US$5 billion in data centers in 2023, all of which is expected to go to artificial intelligence. IBM CEO Arvind Krishna has said that artificial intelligence is expected to contribute $16 trillion to the global economy by 2030.

With ChatGPT's popularity, the tech giants may launch a new round of fierce competition in artificial intelligence in 2023.

However, every time the GPT model behind ChatGPT predicts the next word, it must run another round of inference computation, so generating a response takes up substantial resources and consumes a lot of power. As data center infrastructure expands to support the explosive growth of cloud computing, video streaming, and 5G networks, existing GPU and CPU architectures cannot operate efficiently enough to meet the imminent computing demand, which poses a huge challenge for hyperscale data center operators.
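
To make the per-word cost concrete, here is a minimal sketch of greedy autoregressive decoding. The `toy_forward` function is a hypothetical stand-in for a real language model; only the control flow matters: the model runs once for every generated token, so compute grows with the length of the response. The "about 2 FLOPs per parameter per token" estimate is a common rule of thumb, not a figure from this article, and the 500-token answer length is an assumption.

```python
from typing import Callable, List, Tuple


def generate(
    forward: Callable[[List[int]], int],
    prompt: List[int],
    max_new_tokens: int,
) -> Tuple[List[int], int]:
    """Greedy autoregressive decoding: one model forward pass per generated token."""
    tokens = list(prompt)
    forward_passes = 0
    for _ in range(max_new_tokens):
        next_token = forward(tokens)  # the model sees the whole sequence so far
        forward_passes += 1
        tokens.append(next_token)
    return tokens, forward_passes


def toy_forward(tokens: List[int]) -> int:
    """Hypothetical stand-in for a language model: predicts (last token + 1) mod 100."""
    return (tokens[-1] + 1) % 100


def approx_inference_flops(num_params: float, num_new_tokens: int) -> float:
    """Rule-of-thumb estimate: about 2 FLOPs per parameter per generated token."""
    return 2 * num_params * num_new_tokens


if __name__ == "__main__":
    tokens, passes = generate(toy_forward, [1, 2, 3], max_new_tokens=5)
    print(tokens, passes)  # [1, 2, 3, 4, 5, 6, 7, 8] 5
    # A 175-billion-parameter model producing an assumed 500-token answer:
    print(f"{approx_inference_flops(175e9, 500):.2e} FLOPs")  # 1.75e+14 FLOPs
```

Real serving systems cache intermediate results between steps, but they still execute the model once per new token, which is why long answers to many users add up quickly.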

GPT-3.5 was trained on an AI computing system built specially by Microsoft: a high-performance network cluster of 10,000 V100 GPUs, with total compute consumption of approximately 3,640 PF-days (that is, at one quadrillion operations per second, the computation would take 3,640 days). A GPU cluster training task of this scale and duration places extreme demands on the performance, reliability, and cost of the underlying network interconnect.
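
The PF-day figure can be unpacked with a little arithmetic. The sketch below converts 3,640 PF-days into total floating-point operations and cross-checks it against the widely used "6 × parameters × training tokens" rule of thumb, using the 175 billion parameters and 300 billion data points (treated here as training tokens) mentioned elsewhere in this article. The per-GPU peak of 125 TFLOPS (roughly NVIDIA's quoted V100 SXM2 tensor-core peak) and the 30% sustained utilization are assumptions for illustration, not reported values.

```python
SECONDS_PER_DAY = 86_400
PETAFLOP_PER_S = 1e15  # 1 PF = 10**15 floating-point operations per second


def pf_days_to_flops(pf_days: float) -> float:
    """Total operations performed by 1 PFLOP/s running for `pf_days` days."""
    return pf_days * PETAFLOP_PER_S * SECONDS_PER_DAY


def training_flops_6nd(num_params: float, num_tokens: float) -> float:
    """Rule of thumb: ~6 FLOPs per parameter per training token (forward + backward)."""
    return 6 * num_params * num_tokens


def cluster_wall_clock_days(total_flops: float, num_gpus: int,
                            peak_flops_per_gpu: float, utilization: float) -> float:
    """Days needed if every GPU sustains `utilization` times its peak throughput."""
    sustained = num_gpus * peak_flops_per_gpu * utilization
    return total_flops / sustained / SECONDS_PER_DAY


if __name__ == "__main__":
    reported = pf_days_to_flops(3640)             # about 3.145e+23 FLOPs
    estimated = training_flops_6nd(175e9, 300e9)  # about 3.150e+23 FLOPs
    print(f"reported {reported:.3e}, 6ND estimate {estimated:.3e}")
    # Assumed: 10,000 V100s at 125 TFLOPS peak, 30% sustained utilization.
    days = cluster_wall_clock_days(reported, 10_000, 125e12, 0.30)
    print(f"about {days:.1f} days of wall-clock training")  # about 9.7 days
```

The two totals agree to within about 0.2%, which suggests the 3,640 PF-day figure is consistent with a 175-billion-parameter model trained on roughly 300 billion tokens. The wall-clock result depends heavily on the assumed utilization: halving it doubles the number of days.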

Meta, for example, announced that it would pause data center expansion around the world and reconfigure these server farms to meet the data processing needs of artificial intelligence.

The data processing demands of artificial intelligence platforms are enormous. OpenAI, the creator of ChatGPT, launched the platform in November 2022, and it could not keep running without riding on Microsoft's upcoming upgrade of the Azure cloud platform.

The data center infrastructure supporting this digital transformation will be organized like the human brain, into two hemispheres, or lobes, one of which must be much stronger than the other. One hemisphere will serve what is called "training": the computing power needed to process up to 300 billion data points to create the word salad that ChatGPT generates.

The training lobe requires massive computing power and state-of-the-art GPU semiconductors, but needs little of the connectivity currently required by the data center clusters that support cloud computing services and 5G networks.

At the same time, infrastructure focused on "training" each AI platform will create enormous demand for electricity, requiring data centers to be located near gigawatts of renewable energy and to install new liquid cooling systems and redesigned backup power and generator systems, among other new design features.

In the other hemisphere of the AI platform's brain, higher-functioning digital infrastructure known as "inference" mode supports interactive "generative" platforms that, within seconds of receiving a question or instruction, process the query, run it against the modeled database, and respond in convincing human syntax.

Today's hyper-connected data center networks, such as Northern Virginia's "Data Center Alley", the largest data center cluster in North America, also have the most extensive fiber optic networks, which can accommodate the top-tier connectivity required by the downstream "inference" lobe of the AI brain. Even so, these facilities will need to be upgraded to meet the enormous processing capacity required, and they will need to be closer to substations.

In addition, data from research institutions show that data centers have become one of the world's largest energy consumers, and that their share of total electricity consumption will rise from 3% in 2017 to 4.5% in 2025. In China, for example, electricity consumption by data centers nationwide is expected to exceed 400 billion kWh in 2030, accounting for 4% of the country's total electricity consumption.
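
The percentages above imply absolute totals that are easy to back out. A minimal sketch, assuming the 400 billion kWh figure and the 4% share refer to the same year (2030):

```python
def implied_total(part: float, share: float) -> float:
    """If `part` is `share` of some whole, return the whole."""
    return part / share


if __name__ == "__main__":
    # China, 2030 projection: data centers use 400 billion kWh, 4% of the national total.
    china_total_kwh = implied_total(400e9, 0.04)
    print(f"implied national consumption: {china_total_kwh / 1e12:.0f} trillion kWh")
    # Global data center share rising from 3% (2017) to 4.5% (2025).
    print(f"relative growth in share: {0.045 / 0.03:.2f}x")
```

Note that the 1.5x figure describes growth in the data centers' share; if total electricity use also grows over the period, their absolute consumption grows by more than 1.5x.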

Even digital products, then, require energy to develop and to use, and ChatGPT is no exception. Inference is estimated to account for 80% to 90% of the computing power consumed by machine learning workloads. By a rough calculation, ChatGPT's carbon emissions have exceeded 814.61 tons since it went online on November 30, 2022.

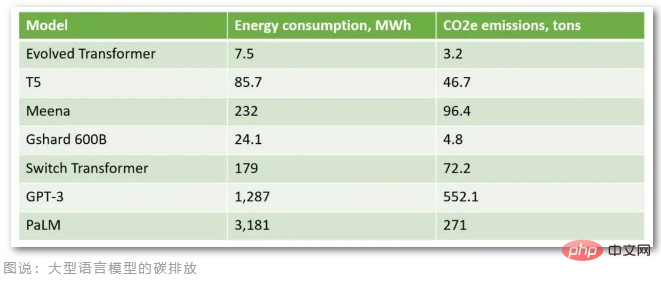

According to calculations by professional organizations, if ChatGPT, hosted on Microsoft's Azure cloud, handles 1 million user queries per day (about 29,167 GPU-hours per day, given a specific response time and vocabulary size) and each A100 GPU draws its maximum power of 407 W, daily carbon emissions reach 3.82 tons and monthly emissions exceed 100 tons. ChatGPT now has more than 10 million daily users, so its actual emissions are far above 100 tons per month. In addition, training a large language model of this kind, with 175 billion parameters, requires tens of thousands of CPUs/GPUs processing data 24 hours a day, consumes approximately 1,287 MWh of electricity, and emits more than 552 tons of carbon dioxide.
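
The inference estimate can be reproduced from the numbers in the paragraph above, which also exposes its hidden assumptions. The sketch treats the 29,167 hours as GPU-hours (an interpretation, since the figure exceeds 24 hours), multiplies by the 407 W maximum power, and backs out the carbon intensity of electricity that the 3.82-ton figure implies. The function names are hypothetical helpers written for this illustration.

```python
A100_MAX_POWER_KW = 0.407   # 407 W maximum A100 power, as quoted in the text
GPU_HOURS_PER_DAY = 29_167  # for 1 million queries/day (interpreted as GPU-hours)
DAILY_EMISSIONS_T = 3.82    # reported tonnes of CO2 per day
TRAINING_MWH = 1_287        # reported training electricity, MWh
TRAINING_EMISSIONS_T = 552  # reported training emissions, tonnes of CO2


def daily_energy_kwh(gpu_hours: float, power_kw: float) -> float:
    """Energy used by the GPUs alone, ignoring CPUs, networking, and cooling."""
    return gpu_hours * power_kw


def carbon_intensity_kg_per_kwh(tonnes: float, kwh: float) -> float:
    """Kilograms of CO2 emitted per kWh consumed."""
    return tonnes * 1000 / kwh


if __name__ == "__main__":
    kwh = daily_energy_kwh(GPU_HOURS_PER_DAY, A100_MAX_POWER_KW)
    print(f"daily energy: {kwh:,.0f} kWh")  # 11,871 kWh
    print(f"equivalent GPUs running 24 h: {GPU_HOURS_PER_DAY / 24:,.0f}")  # 1,215
    inference = carbon_intensity_kg_per_kwh(DAILY_EMISSIONS_T, kwh)
    training = carbon_intensity_kg_per_kwh(TRAINING_EMISSIONS_T, TRAINING_MWH * 1000)
    print(f"implied inference intensity: {inference:.3f} kg CO2/kWh")  # 0.322
    print(f"implied training intensity: {training:.3f} kg CO2/kWh")    # 0.429
    print(f"30-day emissions: {DAILY_EMISSIONS_T * 30:.1f} t")          # 114.6 t
```

The two implied intensities differ (roughly 0.32 versus 0.43 kg CO2 per kWh), which suggests the inference and training estimates assume different electricity mixes. Using the 407 W maximum likely overstates the GPUs' draw, while leaving out CPUs, networking, and cooling understates the facility total.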

Among these large language models, GPT-3, ChatGPT's predecessor, has the largest carbon footprint. Americans are reported to produce an average of 16.4 tons of carbon emissions per year, and Danes an average of 11 tons. Training ChatGPT's model therefore emits more carbon than 50 Danes do in a year.
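
The "50 Danes" comparison is a single division of the 552-ton training estimate by per-capita annual emissions. A quick check using only the figures quoted in this article (`person_years` is a hypothetical helper name):

```python
TRAINING_EMISSIONS_T = 552  # tonnes of CO2 for training, as quoted in the article
PER_CAPITA_T = {"Denmark": 11.0, "United States": 16.4}  # tonnes per person per year


def person_years(total_t: float, per_capita_t: float) -> float:
    """How many people's annual emissions add up to `total_t` tonnes."""
    return total_t / per_capita_t


if __name__ == "__main__":
    for country, tonnes in PER_CAPITA_T.items():
        print(f"{country}: {person_years(TRAINING_EMISSIONS_T, tonnes):.1f} person-years")
    # Denmark: 50.2 person-years
    # United States: 33.7 person-years
```

By the same arithmetic, training emits roughly as much as 34 average Americans do in a year.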

Cloud computing providers also recognize that data centers use large amounts of electricity and have taken steps to improve efficiency, such as building and operating data centers in the Arctic to take advantage of renewable energy and natural cooling. However, this is not enough to keep up with the explosive growth of AI applications.

Research by Lawrence Berkeley National Laboratory in the United States found that gains in data center efficiency have kept the growth of energy consumption in check over the past 20 years, but that current energy efficiency measures may not be enough to meet the needs of future data centers.

The artificial intelligence industry is now at a critical turning point. Technological advances in generative AI, image recognition, and data analytics are revealing unique connections and uses for machine learning, but a technology solution that can meet this demand must be built first, because, according to Gartner, unless more sustainable choices are made, AI will consume more energy than human activities by 2025.
