Over 580 billion US dollars! The Microsoft-Google battle has sent Nvidia's market value soaring to about 5 times Intel's

Over 580 billion US dollars! The battle between Microsoft and Google has caused Nvidia's market value to soar, about 5 Intel

Over 580 billion US dollars! The battle between Microsoft and Google has caused Nvidia's market value to soar, about 5 Intel

With ChatGPT at hand, you can get an answer to almost any question.

But did you know that the computing cost of every conversation with it is eye-watering?

Analysts have previously estimated that each ChatGPT reply costs about 2 cents.

The computing power that AI chatbots need is supplied by GPUs.

That is exactly what is making chip companies like Nvidia a fortune.

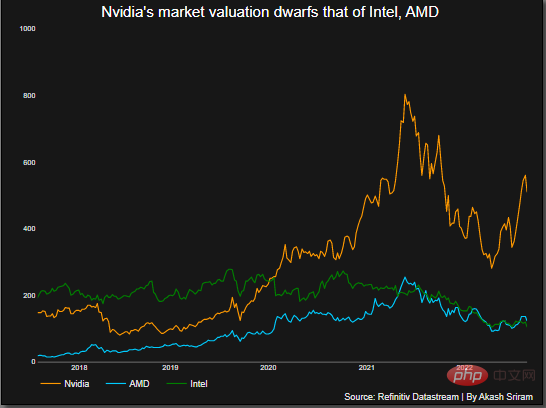

On February 23, Nvidia's stock price soared, adding more than 70 billion US dollars to its market value and lifting its total market value above 580 billion US dollars, about 5 times that of Intel.

After Nvidia, AMD is the second-largest maker of graphics processors, with a market share of about 20%.

Intel, by contrast, holds less than 1% of the market.

ChatGPT has revealed a wealth of potential use cases, which may mark another inflection point for AI applications.

Why is that?

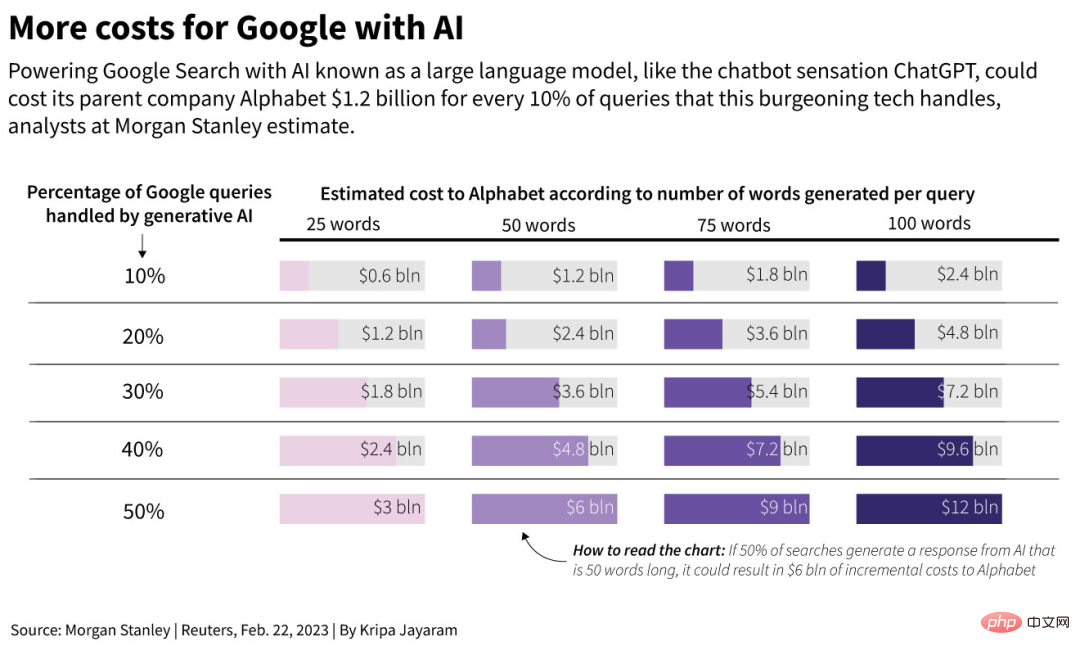

John Hennessy, chairman of Google's parent company Alphabet, told Reuters that a conversation with an AI such as a large language model likely costs more than 10 times as much as a traditional search.

According to Morgan Stanley, Google handled a total of 3.3 trillion searches last year, at a cost of only about 0.2 cents each.

The firm estimates that if Google's chatbot Bard were built into the search engine and handled half of Google's searches and questions, with answers of about 50 words each, it could cost the company an extra $6 billion in 2024.
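As a back-of-the-envelope check on the Morgan Stanley figures, the sketch below multiplies 3.3 trillion searches by 0.2 cents to get Google's implied yearly search-serving cost, then spreads the estimated extra $6 billion over half of those queries to get an implied extra cost per AI-answered query. It assumes, purely for illustration, that 2024 query volume equals last year's; the constant and function names are hypothetical, and this is not Morgan Stanley's actual model.

```python
# Back-of-the-envelope arithmetic using the Morgan Stanley figures quoted above.
# Assumption (illustrative only): query volume in 2024 equals last year's volume.

SEARCHES_PER_YEAR = 3.3e12     # 3.3 trillion Google searches last year
COST_PER_SEARCH_USD = 0.002    # 0.2 cents per traditional search
EXTRA_COST_2024_USD = 6e9      # estimated extra cost if Bard handled half of searches
SHARE_HANDLED_BY_BARD = 0.5    # "half of Google's searches and questions"


def annual_search_cost(searches: float, cost_per_search: float) -> float:
    """Total yearly cost of serving traditional searches, in US dollars."""
    return searches * cost_per_search


def implied_extra_cost_per_ai_query(extra_cost: float, searches: float, share: float) -> float:
    """Extra cost per AI-answered query implied by the estimate, in US dollars."""
    return extra_cost / (searches * share)


baseline = annual_search_cost(SEARCHES_PER_YEAR, COST_PER_SEARCH_USD)
extra_per_query = implied_extra_cost_per_ai_query(
    EXTRA_COST_2024_USD, SEARCHES_PER_YEAR, SHARE_HANDLED_BY_BARD
)

print(f"Baseline search cost: ${baseline / 1e9:.1f} billion per year")
print(f"Implied extra cost per AI-answered query: {extra_per_query * 100:.2f} cents")
```

Under these assumptions the baseline works out to about $6.6 billion a year, and the $6 billion estimate implies roughly 0.36 cents of additional cost for each AI-answered query, on top of the 0.2 cents a traditional search already costs.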

Similarly, SemiAnalysis, a consulting firm specializing in chip technology, said that adding a chatbot to search could cost Google an additional $3 billion, a figure held down by Google's in-house chips such as its Tensor Processing Units.

Hennessy believes Google must cut the operating costs of this kind of AI, but that will not be easy and, in the worst case, could take several years.

The extra cost arises because searching with AI language models requires more computing power than traditional search.

Analysts say the additional costs could run into the billions of dollars over the next few years.

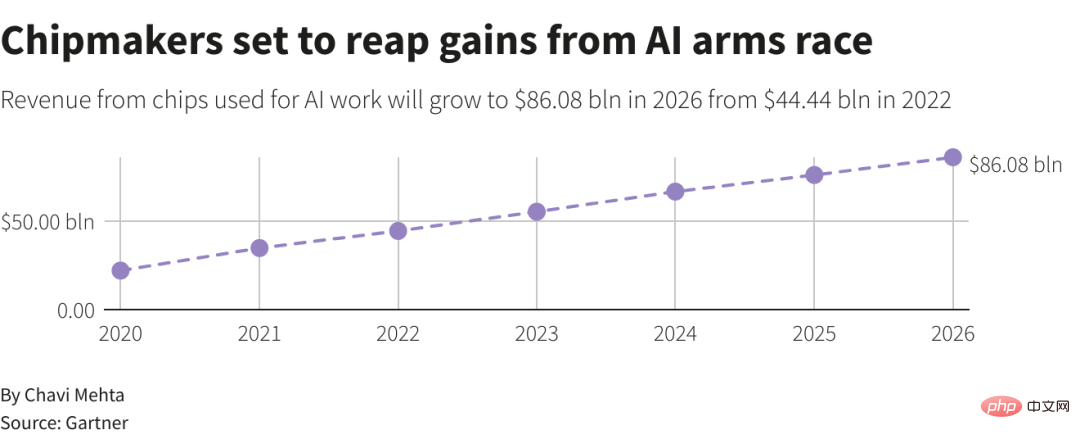

Gartner predicts that the share of specialized chips such as GPUs in data centers will rise from less than 3% in 2020 to more than 15% by 2026.

Although it is hard to say exactly how much of Nvidia's revenue comes from AI today, that share has the potential to grow exponentially as big tech companies race to develop similar AI applications.

On Wednesday, Nvidia also announced an AI cloud service and said it would work with Oracle, Microsoft and Google to give customers access to Nvidia DGX servers for AI processing through a simple browser.

The new platform will be offered by other cloud service providers and will help technology companies that do not have the infrastructure to build their own.

Nvidia CEO Jensen Huang said, "The enthusiasm for ChatGPT has made AI visible to business leaders. But right now it is mostly general-purpose software. To realize its real value, it needs to be tailored to each company's own needs so that it can improve the company's own services and products."

NVIDIA seizes GPU market dominance

According to New Street Research, Nvidia holds 95% of the graphics processor market.

Nvidia shares are up 42% this year, the best performance of any stock in the Philadelphia Stock Exchange Semiconductor Index.

Investors are piling into Nvidia, betting that demand for AI systems like ChatGPT will drive up orders for the company's products and make it once again the world's most valuable chipmaker.

Whether it is the red-hot ChatGPT or models such as Bard and Stable Diffusion, much of their computing power comes from a Nvidia chip that costs about US$10,000: the A100.

The NVIDIA A100 is able to perform many simple calculations simultaneously, which is very important for training and using neural network models.

The technology behind the A100 was originally used to render complex 3D graphics in games. Now it is aimed at machine learning tasks running in data centers.

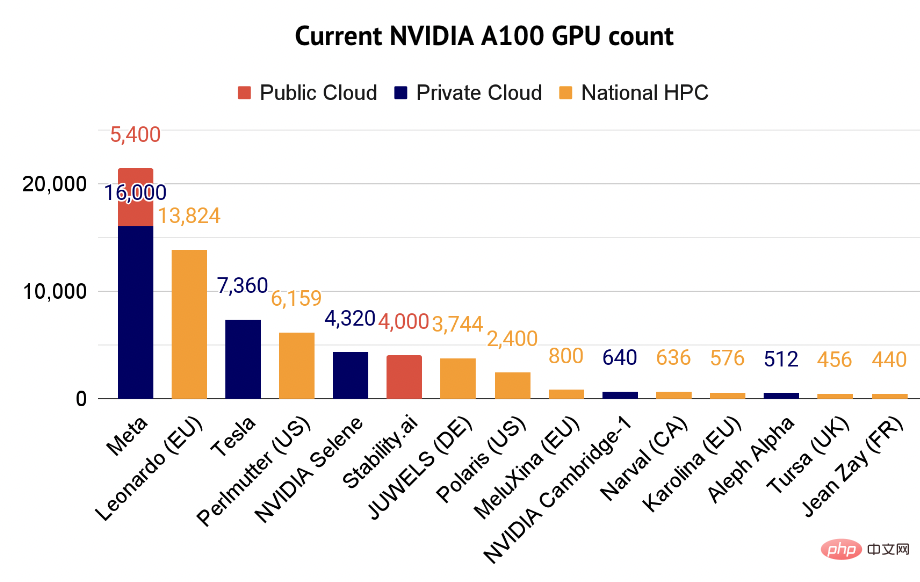

Investor Nathan Benaich said the A100 has become the "workhorse" of AI professionals. His report also lists some of the supercomputers that use the A100.

Machine learning tasks can consume the entire computer’s processing power, sometimes for several hours or days.

This means that companies with a best-selling AI product often need to buy more GPUs to cope with peak access periods, or to improve their models.

In addition to a single A100 on a card that plugs into an existing server, many data centers use a system of eight A100 graphics processors.

This system is the Nvidia DGX A100, and a single unit sells for up to $200,000.

Nvidia said on Wednesday it will sell cloud access to DGX systems directly, which could lower the cost of entry for researchers.

So what does it cost to run the new version of Bing?

New Street Research estimates that the OpenAI-based ChatGPT model in Bing search may need 8 GPUs to answer a question in under a second.

At that rate, Microsoft would need more than 20,000 8-GPU servers to roll the model out to everyone.

That could mean $4 billion in infrastructure spending for Microsoft.

And that is just Microsoft. Operating at Google's scale of 8 billion to 9 billion queries per day would require spending $80 billion.
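The New Street Research numbers can be cross-checked with simple arithmetic. The sketch below uses 20,000 servers as a lower bound (the source says "more than 20,000"), and assumes, for illustration only, that cost scales linearly with query volume; under that assumption the ratio of the Google-scale and Bing estimates implies the daily query volume the Bing figure was built on. Variable names are hypothetical, and this is not the firm's actual model.

```python
# Rough consistency check on the New Street Research estimate for Bing.
# Assumption (illustrative only): infrastructure cost scales linearly with query volume.

GPUS_PER_SERVER = 8
SERVERS_FOR_BING = 20_000            # lower bound: "more than 20,000 8-GPU servers"
BING_INFRA_COST_USD = 4e9            # "$4 billion in infrastructure spending"
GOOGLE_SCALE_COST_USD = 80e9         # "$80 billion" at Google's query volume
GOOGLE_QUERIES_PER_DAY = (8e9, 9e9)  # "8 billion to 9 billion queries per day"

total_gpus = SERVERS_FOR_BING * GPUS_PER_SERVER
cost_per_server = BING_INFRA_COST_USD / SERVERS_FOR_BING
scale_factor = GOOGLE_SCALE_COST_USD / BING_INFRA_COST_USD

# Dividing Google's volume by the cost ratio gives the daily query volume
# that the $4 billion Bing estimate implicitly assumes.
implied_bing_queries = tuple(q / scale_factor for q in GOOGLE_QUERIES_PER_DAY)

print(f"GPUs needed: {total_gpus:,}")
print(f"Implied cost per 8-GPU server: ${cost_per_server:,.0f}")
print(f"Google-scale multiplier: {scale_factor:.0f}x")
low, high = implied_bing_queries
print(f"Implied Bing volume: {low / 1e6:.0f}-{high / 1e6:.0f} million queries per day")
```

The implied cost of about $200,000 per 8-GPU server lines up with the DGX A100 price quoted earlier, and the 20x multiplier suggests the Bing estimate assumes roughly 400 to 450 million queries per day.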

As another example, the latest version of Stable Diffusion was trained on 256 A100 GPUs, or 32 DGX A100 systems, for a total of 200,000 compute hours.

Stability AI CEO Emad Mostaque said that at market prices, training the model alone would cost $600,000, which is very affordable compared with the competition. That figure does not include the cost of running inference on the model or deploying it.
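The Stable Diffusion figures also fit together arithmetically. The sketch below assumes, as an interpretation not stated in the source, that "200,000 hours" means A100 GPU-hours spread evenly across all 256 GPUs running in parallel; on that reading it derives the DGX count, the implied price per GPU-hour, and the approximate wall-clock training time.

```python
# Arithmetic on the Stable Diffusion training figures quoted above.
# Assumption: "200,000 hours" means A100 GPU-hours, spread evenly over all 256 GPUs.

TOTAL_GPUS = 256
GPUS_PER_DGX = 8             # one DGX A100 system holds eight A100 GPUs
GPU_HOURS = 200_000
TRAINING_COST_USD = 600_000  # Mostaque's market-price estimate

dgx_systems = TOTAL_GPUS // GPUS_PER_DGX             # number of DGX A100 systems
cost_per_gpu_hour = TRAINING_COST_USD / GPU_HOURS    # implied price per GPU-hour
wall_clock_hours = GPU_HOURS / TOTAL_GPUS            # if all GPUs ran in parallel
wall_clock_days = wall_clock_hours / 24

print(f"DGX A100 systems: {dgx_systems}")
print(f"Implied price: ${cost_per_gpu_hour:.2f} per GPU-hour")
print(f"Approximate wall-clock time: {wall_clock_days:.1f} days")
```

On this reading, the $600,000 estimate corresponds to about $3 per A100 GPU-hour, and training would have taken roughly a month of continuous running on 32 DGX systems.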

Jensen Huang said in an interview:

Given the amount of computing power this type of model needs, Stability AI's products are actually not expensive.

We took what would otherwise be a $1 billion data center running CPUs and shrank it down to a $100 million data center. Now, if that $100 million data center is put in the cloud and shared by 100 companies, it costs next to nothing.

Nvidia GPUs let start-ups train models at much lower cost. Now you can build a large language model, such as GPT, for about $10 million to $20 million. That is really, really affordable.

The State of AI Report 2022 states that, as of December 2022, more than 21,000 open-source AI papers used Nvidia chips.

Most researchers in the State of AI Compute Index use Nvidia's V100, launched in 2017, but the A100 grew rapidly in 2022 to become the third most frequently used chip.

The A100's fiercest competitor may be its successor, the H100, which launched in 2022 and is now in mass production. In fact, Nvidia said on Wednesday that H100 revenue surpassed A100 revenue in the quarter that ended in January.

For now, Nvidia is riding the AI express and racing toward the money.
