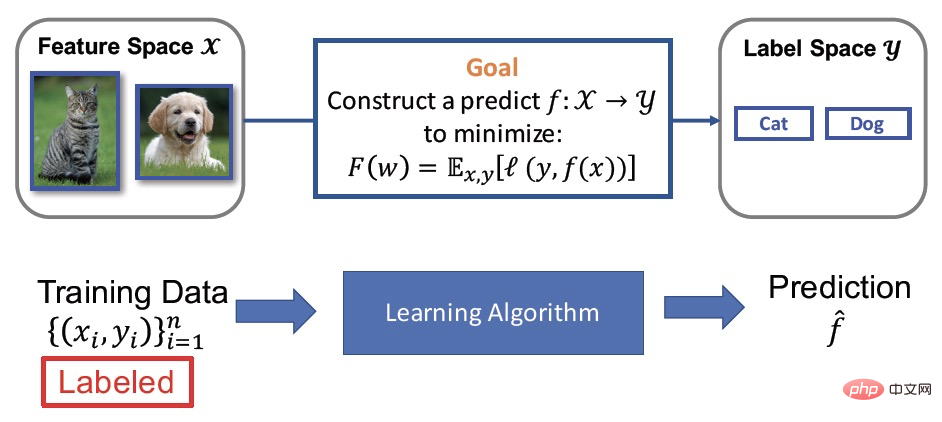

Supervised Learning

Model training aims to learn a prediction function: mathematically, a mapping from data (X) to labels (y). Supervised learning is one of the most commonly used training methods, and its performance depends on a large amount of well-labeled training data (X, y). Labeling, however, demands considerable manpower and material resources, so the gains come at a high cost. In practice, one often has only a small amount of labeled data alongside a large amount of unlabeled data, which is why semi-supervised learning has attracted growing attention from researchers.

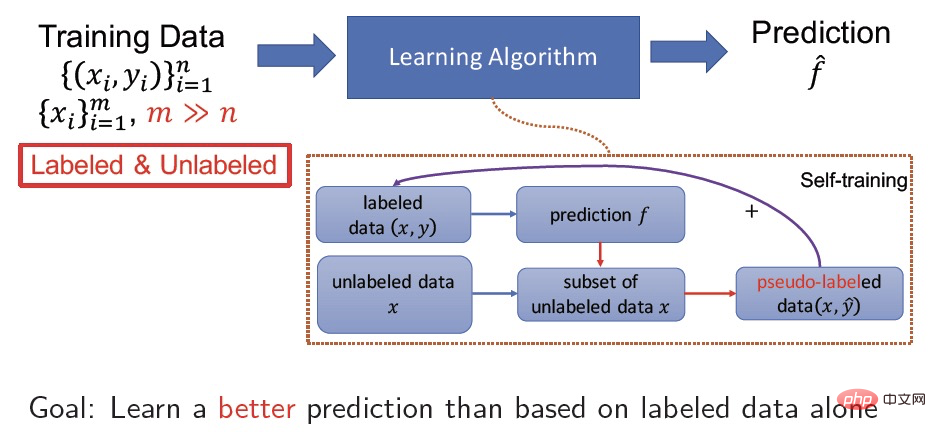

Semi-Supervised Learning

Semi-supervised learning learns from a small amount of labeled data and a large amount of unlabeled data at the same time, with the goal of using the unlabeled data to improve model accuracy. Self-training is a very common example. The model learns the mapping from X to y on the labeled data (X, y); at the same time, the learned model predicts a pseudo-label ŷ for each unlabeled sample X. Further supervised learning on the pseudo-labeled data (X, ŷ) then helps the model converge better and improves its accuracy.
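The self-training loop described above can be sketched in a few lines. This is only an illustration under simplifying assumptions: the "model" is a toy 1-D nearest-centroid classifier, and the names `fit_centroids`, `predict`, and `self_train` are hypothetical helpers, not part of any framework mentioned in this article.

```python
from statistics import mean

def fit_centroids(xs, ys):
    """Fit a toy 1-D nearest-centroid classifier: one mean per class."""
    return {c: mean(x for x, y in zip(xs, ys) if y == c) for c in set(ys)}

def predict(centroids, x):
    """Return the class whose centroid is closest to x."""
    return min(centroids, key=lambda c: abs(x - centroids[c]))

def self_train(x_lab, y_lab, x_unlab, rounds=3):
    """Alternate between pseudo-labeling unlabeled data and refitting."""
    centroids = fit_centroids(x_lab, y_lab)  # supervised step on (X, y)
    pseudo = []
    for _ in range(rounds):
        # Predict a pseudo-label for every unlabeled sample.
        pseudo = [predict(centroids, x) for x in x_unlab]
        # Refit on labeled data plus pseudo-labeled data.
        centroids = fit_centroids(x_lab + x_unlab, y_lab + pseudo)
    return centroids, pseudo

x_lab, y_lab = [0.0, 10.0], [0, 1]   # small labeled set
x_unlab = [1.0, 2.0, 8.0, 9.0]       # larger unlabeled set
centroids, pseudo = self_train(x_lab, y_lab, x_unlab)
```

Note that this toy version trusts every pseudo-label, which is exactly the behavior that threshold-based selection is meant to improve on.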

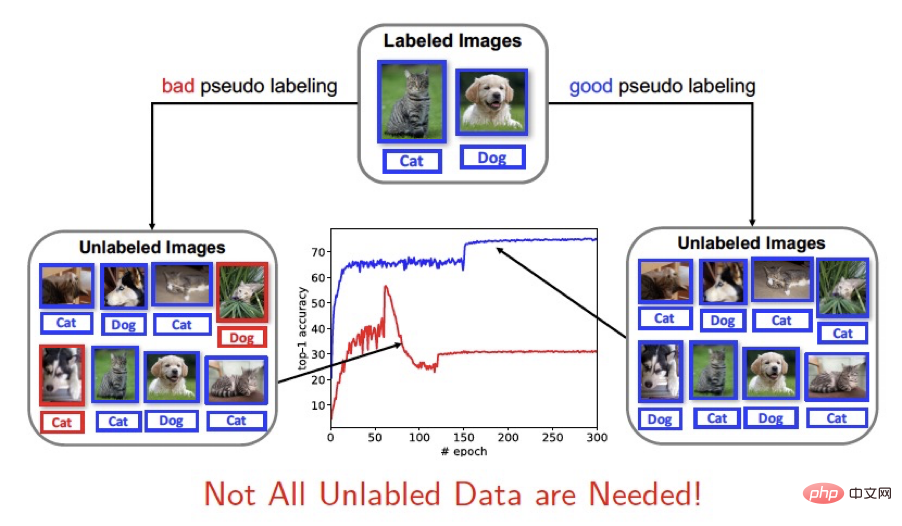

Core Problem

Existing semi-supervised learning frameworks use unlabeled data in roughly two ways. One lets all unlabeled samples participate in training; the other uses a fixed threshold to select only high-confidence samples for training (as in FixMatch). Because semi-supervised learning relies on pseudo-labels predicted by the current model, the accuracy of those pseudo-labels strongly affects training. Good predictions help the model converge and improve its accuracy. Poor predictions, by contrast, interfere with the training of a new model. So we argue: not all unlabeled samples are necessary!

This paper proposes using a dynamic threshold to screen unlabeled samples for semi-supervised learning (SSL). We rework the semi-supervised training framework and improve the selection strategy for unlabeled samples by changing the threshold dynamically during training, so that more effective unlabeled samples are selected. Dash is a general strategy that can be easily integrated with existing semi-supervised learning methods. Experimentally, we verified its effectiveness on the standard datasets CIFAR-10, CIFAR-100, STL-10, and SVHN. Theoretically, the paper proves the convergence of the Dash algorithm from the perspective of non-convex optimization.

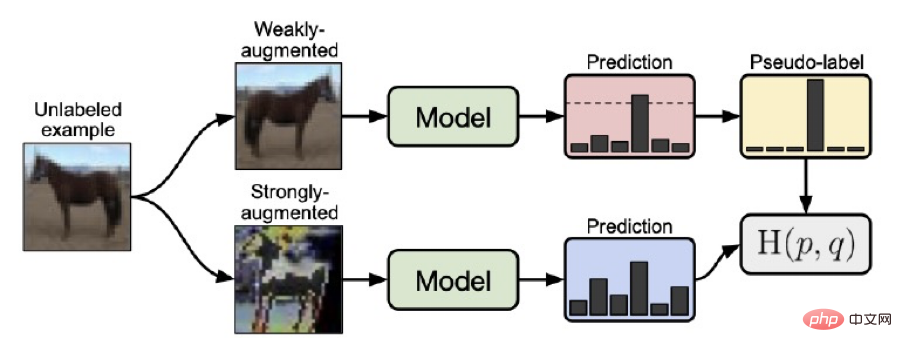

3. Method

FixMatch training framework

Before introducing our method, Dash, we first describe FixMatch, a semi-supervised learning algorithm proposed by Google that uses a fixed threshold to select unlabeled samples. The FixMatch training framework was the previous SOTA solution. Its key points are as follows:

1. For each unlabeled sample, a weakly augmented version (horizontal flip, shift, etc.) is passed through the current model to obtain a predicted value.

2. A strongly augmented version of the same unlabeled sample (RA or CTA) is also passed through the current model to obtain a predicted value.

3. When the weak-augmentation prediction has high confidence, it is converted into a one-hot pseudo-label. This pseudo-label and the predicted value for the strongly augmented version of X are then used to train the model.
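The three steps above can be sketched as an unlabeled-data loss. This is a minimal plain-Python illustration, not the reference implementation: `softmax` and `fixmatch_unlabeled_loss` are hypothetical names, the inputs are assumed to be per-sample logits from the weak and strong views, and averaging over the whole unlabeled batch is an assumption of this sketch.

```python
import math

def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    s = sum(exps)
    return [e / s for e in exps]

def fixmatch_unlabeled_loss(weak_logits, strong_logits, threshold=0.95):
    """Cross-entropy of strong-view predictions against weak-view
    pseudo-labels, counting only confident weak-view predictions."""
    total = 0.0
    for w, s in zip(weak_logits, strong_logits):
        p_weak = softmax(w)
        confidence = max(p_weak)
        if confidence < threshold:
            continue  # low-confidence sample contributes nothing
        pseudo_label = p_weak.index(confidence)  # argmax = one-hot target
        total += -math.log(softmax(s)[pseudo_label])
    # Assumption: average over the whole batch, masked samples included.
    return total / len(weak_logits)

# Two unlabeled samples: the first is confident, the second is not.
weak = [[10.0, 0.0], [0.1, 0.0]]
strong = [[0.0, 0.0], [5.0, 0.0]]
loss = fixmatch_unlabeled_loss(weak, strong)
```

Only the first sample passes the 0.95 threshold here, so the second sample's strong-view prediction has no effect on the loss.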

The advantage of FixMatch is that it predicts pseudo-labels from weakly augmented data, which makes the pseudo-labels more accurate. During training it also uses a fixed threshold of 0.95 (corresponding to a loss of 0.0513) and generates pseudo-labels only from predictions with high confidence (confidence greater than or equal to 0.95, that is, loss less than or equal to 0.0513), which further stabilizes training.
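The correspondence between a confidence of 0.95 and a loss of 0.0513 follows if the loss is taken to be the cross-entropy of the predicted class, that is, the negative natural log of its probability. A small check under that assumption (the function names are illustrative):

```python
import math

def confidence_to_loss(confidence):
    """Cross-entropy of a one-hot target whose class has this probability."""
    return -math.log(confidence)

def loss_to_confidence(loss):
    """Inverse mapping: probability that yields the given loss."""
    return math.exp(-loss)

print(round(confidence_to_loss(0.95), 4))  # 0.0513
```

Because the mapping is monotonically decreasing, selecting samples with confidence at or above a threshold is equivalent to selecting samples with loss at or below the corresponding value.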

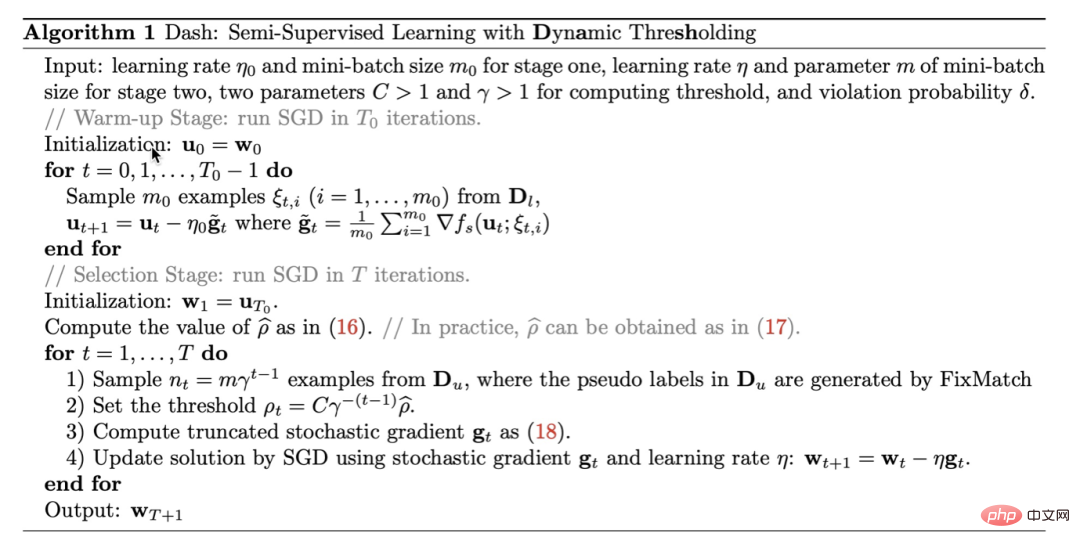

Dash training framework

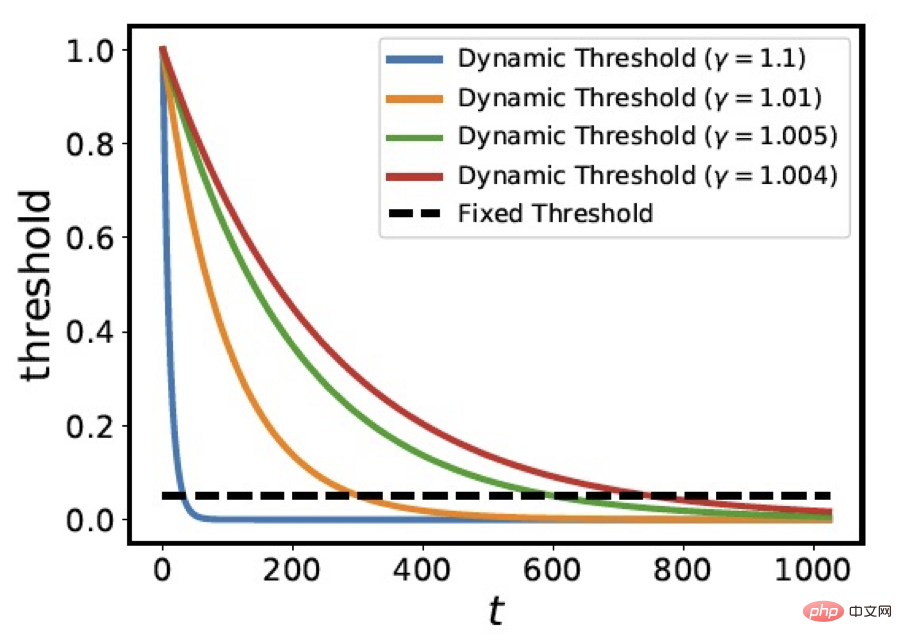

To address the problems of using all pseudo-labels or selecting pseudo-labels with a fixed threshold, we propose screening samples with a dynamic threshold. The dynamic threshold $\rho_t$ decays with $t$:

$$\rho_t = C\,\gamma^{-(t-1)}\,\hat{\rho}$$

In the formula, $C = 1.0001$, $\hat{\rho}$ is the average loss of the labeled data after the first epoch, and only those unlabeled samples whose loss does not exceed $\rho_t$ participate in gradient backpropagation. The figure below shows how the threshold $\rho_t$ changes under different values of $\gamma$. The parameter $\gamma$ controls how fast the threshold curve declines, and the curve of $\rho_t$ mimics the downward trend of the loss function during model training.
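A decaying threshold of this kind can be sketched as follows. The sketch assumes the threshold has the form C · γ^(−(t−1)) · ρ̂, where ρ̂ stands for the average labeled loss after the first epoch and γ > 1 is the decay parameter. The default `gamma=1.27`, the function names, and the use of "less than or equal" for selection are illustrative assumptions rather than details confirmed by this article.

```python
def dash_threshold(t, rho_hat, C=1.0001, gamma=1.27):
    """Dynamic loss threshold at step t (t starts at 1).

    rho_hat: average loss on labeled data after the first epoch.
    gamma:   assumed decay rate (> 1); larger values decay faster.
    """
    return C * gamma ** (-(t - 1)) * rho_hat

def select_unlabeled(losses, threshold):
    """Indices of unlabeled samples whose loss is within the threshold."""
    return [i for i, loss in enumerate(losses) if loss <= threshold]

# The threshold shrinks as t grows, so selection becomes stricter.
rho_hat = 2.0
thresholds = [dash_threshold(t, rho_hat) for t in (1, 5, 10)]

unlabeled_losses = [0.01, 0.5, 3.0]
early = select_unlabeled(unlabeled_losses, thresholds[0])
late = select_unlabeled(unlabeled_losses, thresholds[2])
```

Early in training the threshold is loose, so most unlabeled samples pass; later only samples with very small loss, meaning high-confidence pseudo-labels, still contribute to the gradient.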

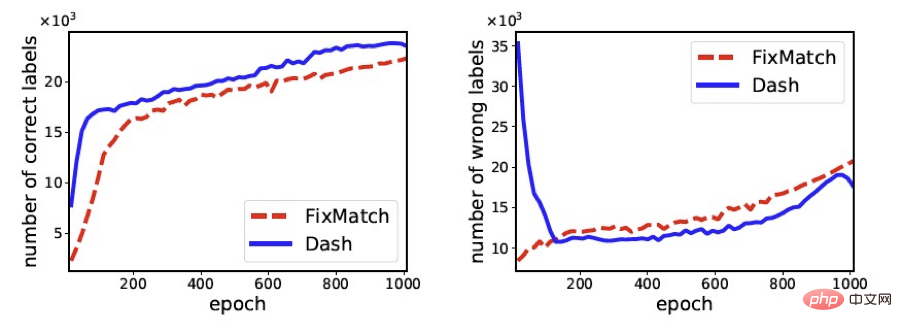

The following figure compares how the numbers of correctly and incorrectly labeled samples selected by FixMatch and Dash change as training progresses (on CIFAR-100). Compared with FixMatch, Dash clearly selects more samples with correct labels and fewer samples with wrong labels, which ultimately improves the accuracy of the trained model.

Our algorithm is summarized in Algorithm 1. Dash is a general strategy that can be easily integrated with existing semi-supervised learning methods; for convenience, the experiments in this article mainly integrate Dash with FixMatch. For the theoretical proofs, see the paper.

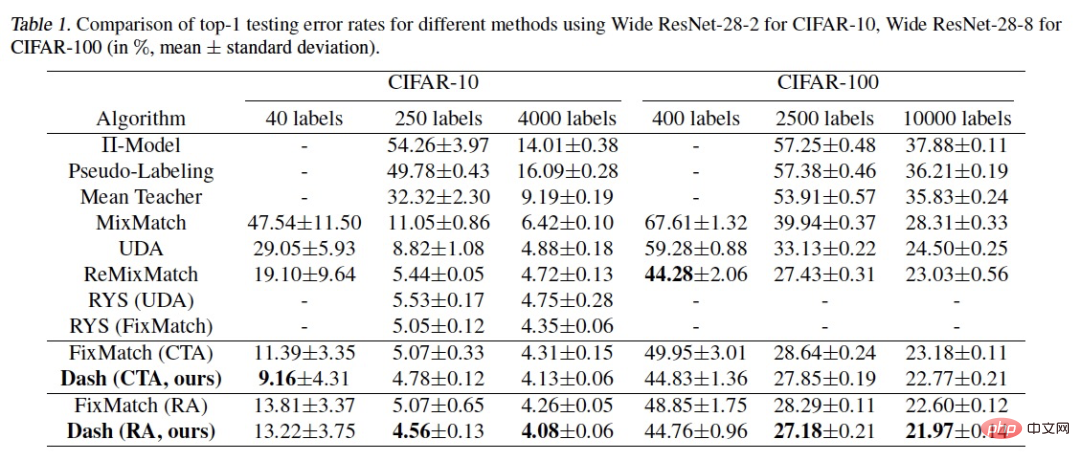

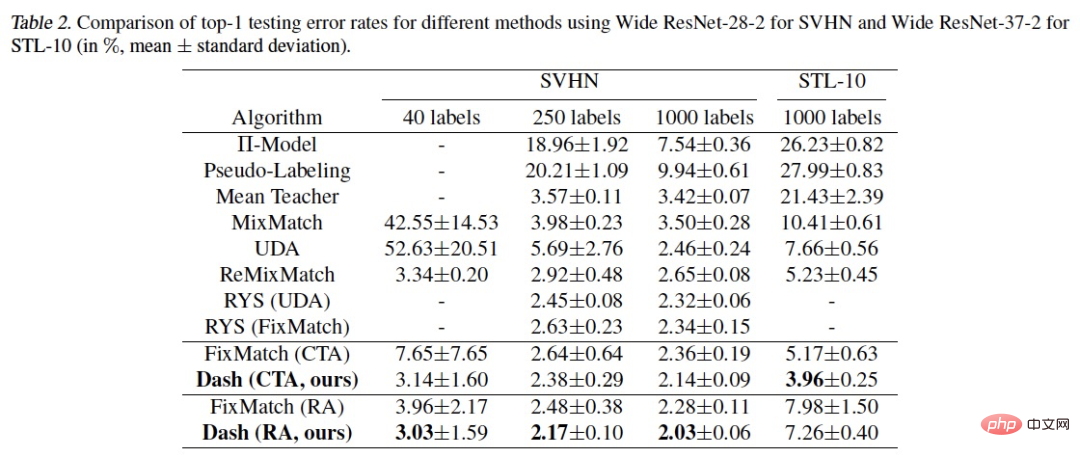

We verified the algorithm on datasets commonly used in semi-supervised learning: CIFAR-10, CIFAR-100, STL-10, and SVHN. The results are as follows:

Our method achieves better results than the previous SOTA in multiple experimental settings. One result needs explanation: in the CIFAR-100 experiment with 400 labels, ReMixMatch achieves a better result by using the additional data align trick. With the same trick added, Dash reaches an error rate of 43.31%, lower than ReMixMatch's 44.28%.

The semi-supervised Dash framework is often applied in the actual research and development of task- and domain-oriented models. Next, I will introduce the free, open source models we have developed in various domains. You are welcome to try and download them (most can be tried on a mobile phone):
