Get data from the internet using Excel and Python

Today’s article is mainly divided into two parts. One is to build a data website through Python, and the other is to use Excel and Python to obtain data from the written Web website.

1. Construct test website data

Build a Web website and a Web API service through the Python Flask Web framework.

1. Build a Web website

Create a new Python script named "5-5-WebTable.py" to create a simple web page containing a table. If the reader is not interested in the construction method, you can skip the following code and directly execute the script "5-5-WebTable.py" to open the website.

(1) Install the flask package.

pip install flask

(2) Build a web page containing a table.

from flask import Flask

app = Flask(__name__)# 创建Falsk Web应用实例

# 将路由“/”映射到table_info函数,函数返回HTML代码

@app.route('/')

def table_info():

return """<h2 id="HTML表格实例-用于提供给Excel和Python读取">HTML表格实例,用于提供给Excel和Python读取</h2>

<table border="1">

<caption>用户信息表</caption>

<tbody><tr>

<th>姓名</th>

<th>性别</th>

<th>年龄</th>

</tr>

<tr>

<td>小米</td>

<td>女</td>

<td>22</td>

</tr>

……….

</tbody></table>"""

if __name__ == '__main__':

app.debug = True# 启用调试模式

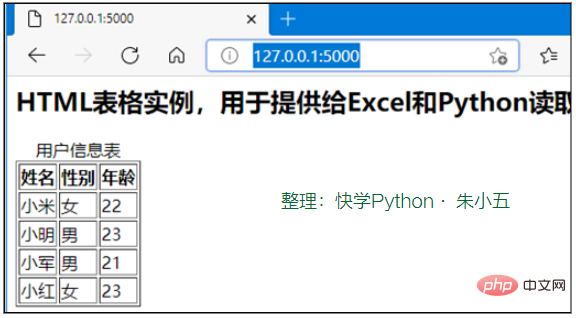

app.run()# 运行,网站端口默认为5000Start the website through the command "python ./5-5-WebTable.py", then enter http://127.0.0.1:5000/ in the browser, and the web page content shown in Figure 1 will appear. .

Figure 1 Test website built using Flask

2. Build a Web API service

Create a new website named "5-5- WebAPI.py" Python script that uses the flask_restplus package to build a Web API service. If the reader is not interested in the construction method, you can skip the following code and directly execute the script "5-5-WebAPI.py" to open the Web API service.

(1) Install the flask_restplus package.

pip install flask-restplus

(2) Import necessary libraries and initialize application objects.

from flask import Flask

# Api类是Web API应用的入口,需要用Flask应用程序初始化

from flask_restplus import Api

# Resource类是HTTP请求的资源的基类

from flask_restplus import Resource

# fields类用于定义数据的类型和格式

from flask_restplus import fields

app = Flask(__name__)# 创建Falsk Web应用实例

# 在flask应用的基础上构建flask_restplusApi对象

api = Api(app, version='1.0',

title='Excel集成Python数据分析-测试用WebAPI',

description='测试用WebAPI', )

# 使用namespace函数生成命名空间,用于为资源分组

ns = api.namespace('ExcelPythonTest', description='Excel与Python Web API测试')

# 使用api.model函数生成模型对象

todo = api.model('task_model', {

'id': fields.Integer(readonly=True,

description='ETL任务唯一标识'),

'task': fields.String(required=True,

description='ETL任务详情')

})(3) Web API data operation class, including methods such as add, delete, modify, and query.

class TodoDAO(object):

def __init__(self):

self.counter = 0

self.todos = []

def get(self, id):

for todo in self.todos:

if todo['id'] == id:

return todo

api.abort(404, "ETL任务 {} 不存在".format(id))

def create(self, data):

todo = data

todo['id'] = self.counter = self.counter + 1

self.todos.append(todo)

return todo

# 实例化数据操作,创建3条测试数据

DAO = TodoDAO()

DAO.create({'task': 'ETL-抽取数据操作'})

DAO.create({'task': 'ETL-数据清洗转换'})

DAO.create({'task': 'ETL-数据加载操作'})(4) Build the routing mapping of Web API.

The HTTP resource request class inherits from the Resource class, and then maps to different routes, while specifying the HTTP method that can be used.

@ns.route('/')# 路由“/”对应的资源类为TodoList,可使用get方法和post方法进行请求

class TodoList(Resource):

@ns.doc('list_todos')# @doc装饰器对应API文档的信息

@ns.marshal_list_with(todo)# @marshal_xxx装饰器对模型数据进行格式转换与输出

def get(self):# 定义get方法获取所有的任务信息

return DAO.todos

@ns.doc('create_todo')

@ns.expect(todo)

@ns.marshal_with(todo, code=201)

def post(self):# 定义post方法获取所有的任务信息

return DAO.create(api.payload), 201

# 路由/<int:id>对应的资源类为Todo,可使用get、delete、put方法进行请求

@ns.route('/<int:id>')

@ns.response(404, '未发现相关ETL任务')

@ns.param('id', 'ETL任务ID号')

class Todo(Resource):

@ns.doc('get_todo')

@ns.marshal_with(todo)

def get(self, id):

return DAO.get(id)

@ns.doc('delete_todo')

@ns.response(204, 'ETL任务已经删除')

def delete(self, id):

DAO.delete(id)

return '', 204

@ns.expect(todo)

@ns.marshal_with(todo)

def put(self, id):

return DAO.update(id, api.payload)

if __name__ == '__main__':

app.run(debug=True, port=8000)# 启动Web API服务,端口为8000(4) Start the Web API service.

Start the Web API service through the command "python ./5-5-WebAPI.py" and enter "http://127.0.0.1:8000/" in the browser, as shown in Figure 5-23 List of Web API service request methods shown.

Figure 2 List of WebAPI service request methods

2. Capturing web page data

Excel can be accessed through the "Data" tab The "from website" function captures web page data. Python can use the requests library, Beautiful Soup package, and Scrapy framework to crawl web page data.

1. Capture through Excel

Click "Data" → "From other sources" → "From website" function. There are limitations to the web page data that Excel can read: dynamic web page data cannot be automatically recognized, and non-table data cannot be automatically recognized.

(1) Click the "Data" → "From Other Sources" → "From Website" function.

(2) Make sure that the Web website written in Section 5.5.1 has been opened.

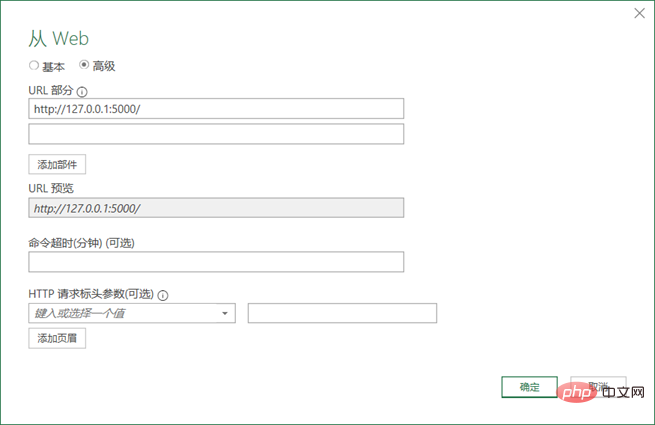

(3) Enter the website URL address "http://127.0.0.1:5000/"

Click the "Advanced" button to configure more detailed HTTP request information, and then click " OK" button, as shown in Figure 3.

Figure 3 Configure the URL of the website to be read

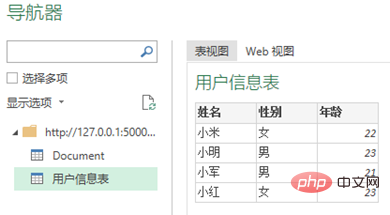

(4) Select import data in the "Navigator" window.

As shown in Figure 4, Excel automatically recognizes the table data in the web page, select the table name and click the "Load" button.

Figure 4 Excel automatically recognizes table data in web pages

2. Use Python to capture

The following demonstrates using the requests library to capture The data in the entire web page is then used to parse the web page using Beautiful Soup. Readers can refer to the code material file "5-5-web.ipynb" in this book to learn.

(1) Read web page data through requests.

import requests #导入requests包 url ='http://127.0.0.1:5000/' strhtml= requests.get(url) #使用get方法请求网页数据

(2) Parse web pages through Beautiful Soup.

from bs4 import BeautifulSoup

soup = BeautifulSoup(strhtml.text)# 将网页内容作为参数,创建soup对象

table = soup.find('table')# 查找网页中的table元素

table_body = table.find('tbody')# 查找table元素中的tbody元素

data = []

rows = table_body.find_all('tr')# 查找表中的所有tr元素

for row in rows:# 遍历数据

cols = row.find_all('td')

cols = [ele.text.strip() for ele in cols]

data.append([ele for ele in cols if ele])

# 结果输出:[[],

['小米', '女', '22'],['小明','男','23'],……3. Call the Web API service

Excel can call the Web API service through the "Self Website" function under the "Data" tab. Python can use the requests library, Beautiful Soup package, and Scrapy framework to call Web API to obtain data.

1. Use Excel to call

(1) Make sure that the Web API service written in Section 5.5.1 has been started.

(2) Enter the URL corresponding to the Web API method: http://127.0.0.1:8000/ExcelPythonTest/.

(3) Process the returned data.

After calling the Web API service, the data is returned in JSON format, and the JSON data is processed according to the method introduced in Section 5.4.3.

2. Use Python to call

Use the requests library to call the Web API method, and then process the returned JSON data. Readers can refer to the code material file "5-5-api.ipynb" in this book "To learn.

import requests#导入requests包 url ='http://127.0.0.1:8000/ExcelPythonTest/' strhtml= requests.get(url)#使用get方法获取网页数据 import pandas as pd frame= pd.read_json(strhtml.text)#使用Pandas包中的read_json函数 print(frame) #结果输出: id task 0 1 ETL-抽取数据操作 1 2 ETL-数据清洗转换 2 3 ETL-数据加载操作

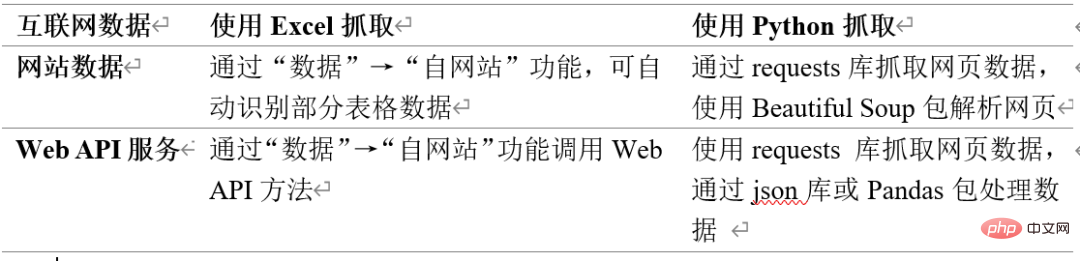

4、两种方法对比

表1所示为Excel和Python抓取互联网数据方法的对比。需要注意Excel从互联网抓取数据的功能并不完善。

表1 Excel和Python抓取互联网数据方法对比

The above is the detailed content of Get data from the internet using Excel and Python. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1387

1387

52

52

Is the vscode extension malicious?

Apr 15, 2025 pm 07:57 PM

Is the vscode extension malicious?

Apr 15, 2025 pm 07:57 PM

VS Code extensions pose malicious risks, such as hiding malicious code, exploiting vulnerabilities, and masturbating as legitimate extensions. Methods to identify malicious extensions include: checking publishers, reading comments, checking code, and installing with caution. Security measures also include: security awareness, good habits, regular updates and antivirus software.

How to run programs in terminal vscode

Apr 15, 2025 pm 06:42 PM

How to run programs in terminal vscode

Apr 15, 2025 pm 06:42 PM

In VS Code, you can run the program in the terminal through the following steps: Prepare the code and open the integrated terminal to ensure that the code directory is consistent with the terminal working directory. Select the run command according to the programming language (such as Python's python your_file_name.py) to check whether it runs successfully and resolve errors. Use the debugger to improve debugging efficiency.

Can vs code run in Windows 8

Apr 15, 2025 pm 07:24 PM

Can vs code run in Windows 8

Apr 15, 2025 pm 07:24 PM

VS Code can run on Windows 8, but the experience may not be great. First make sure the system has been updated to the latest patch, then download the VS Code installation package that matches the system architecture and install it as prompted. After installation, be aware that some extensions may be incompatible with Windows 8 and need to look for alternative extensions or use newer Windows systems in a virtual machine. Install the necessary extensions to check whether they work properly. Although VS Code is feasible on Windows 8, it is recommended to upgrade to a newer Windows system for a better development experience and security.

Can visual studio code be used in python

Apr 15, 2025 pm 08:18 PM

Can visual studio code be used in python

Apr 15, 2025 pm 08:18 PM

VS Code can be used to write Python and provides many features that make it an ideal tool for developing Python applications. It allows users to: install Python extensions to get functions such as code completion, syntax highlighting, and debugging. Use the debugger to track code step by step, find and fix errors. Integrate Git for version control. Use code formatting tools to maintain code consistency. Use the Linting tool to spot potential problems ahead of time.

Choosing Between PHP and Python: A Guide

Apr 18, 2025 am 12:24 AM

Choosing Between PHP and Python: A Guide

Apr 18, 2025 am 12:24 AM

PHP is suitable for web development and rapid prototyping, and Python is suitable for data science and machine learning. 1.PHP is used for dynamic web development, with simple syntax and suitable for rapid development. 2. Python has concise syntax, is suitable for multiple fields, and has a strong library ecosystem.

Can vscode be used for mac

Apr 15, 2025 pm 07:36 PM

Can vscode be used for mac

Apr 15, 2025 pm 07:36 PM

VS Code is available on Mac. It has powerful extensions, Git integration, terminal and debugger, and also offers a wealth of setup options. However, for particularly large projects or highly professional development, VS Code may have performance or functional limitations.

Golang vs. Python: Concurrency and Multithreading

Apr 17, 2025 am 12:20 AM

Golang vs. Python: Concurrency and Multithreading

Apr 17, 2025 am 12:20 AM

Golang is more suitable for high concurrency tasks, while Python has more advantages in flexibility. 1.Golang efficiently handles concurrency through goroutine and channel. 2. Python relies on threading and asyncio, which is affected by GIL, but provides multiple concurrency methods. The choice should be based on specific needs.

PHP and Python: Different Paradigms Explained

Apr 18, 2025 am 12:26 AM

PHP and Python: Different Paradigms Explained

Apr 18, 2025 am 12:26 AM

PHP is mainly procedural programming, but also supports object-oriented programming (OOP); Python supports a variety of paradigms, including OOP, functional and procedural programming. PHP is suitable for web development, and Python is suitable for a variety of applications such as data analysis and machine learning.