It's finally here, OpenAI officially opens ChatGPT API

Developers can now integrate ChatGPT and Whisper models into their applications and products through our API.

In the previous API version, the text-davinci-003 version of the model was used. This model did not have the contextual dialogue function, and the generated content was much worse than ChatGPT, so the community There have also been many projects that package the web version of ChatGPT to provide services, but the stability is not very good because they rely on web pages. Now that the ChatGPT version of the API has been officially released, this is great news for developers. Of course, it is of great significance to OpenAI and even the entire industry. In the next period of time, there is bound to be a new API. A large number of excellent AI applications.

The latest externally released API is driven by gpt-3.5-turbor. This is OpenAI’s most advanced language model. Many things can be done through this API.

- Write an email or other article

- Write Python code

- Answer a question about a set of files

- Give your software a Natural language interface

- Language translation

- Simulating video game characters and more

The new chat model needs to take a series of messages as input so that it can have The contextual dialogue function is now available, and of course you can also perform single-round tasks, the same as before.

To implement the new API, you need the v0.27.0 version of the Python package:

pip3 install openai==v0.27.0

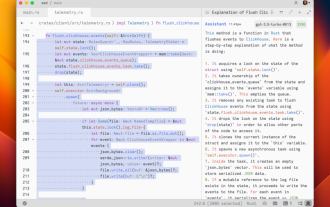

Then you can directly use the openai package to interact with openai:

import openai

openai.api_key = "sk-xxxx"

response = openai.ChatCompletion.create(

model="gpt-3.5-turbo",

messages=[

{"role": "system", "content": "你是一个AI机器人助手。"},

{"role": "user", "content": "哪个队将赢得2023年NBA总冠军?"},

]

)The most important thing One of the input parameters is messages, which is an array of message objects, each of which contains a role (system, user, assisttant) and message content. The entire conversation can be one message or multiple messages.

Normally, the format of the conversation is to first have a system message. The system message helps to set the behavior of the assistant. User messages are generated by the end users of our application, which are the questions we want to consult. The assistant message is the data fed back to us by openai. Of course, it can also be written by the developer.

When we reply to the last assistant message together, we will have the ability to contextualize it.

import openai

openai.api_key = "sk-xxxx"

response = openai.ChatCompletion.create(

model="gpt-3.5-turbo",

messages=[

{"role": "system", "content": "你是一个AI机器人助手。"},

{"role": "user", "content": "哪个队将赢得2023年NBA总冠军?"},

{"role": "assistant", "content": "湖人队将获得总冠军!"},

{"role": "user", "content": "谁会当选FMVP?"}

]

)

result = ''

for choice in response.choices:

result += choice.message.content

print(result)For example, if we add the previous message here, we can finally get the contextual message:

Because prediction is actually It is difficult to make the most accurate prediction because many factors may affect this decision. However, the Lakers have many players who have the opportunity to win the FMVP award, such as LeBron James, Anthony Davis, Kyle Kuzma, etc., who may become FMVP.

The above is the detailed content of It's finally here, OpenAI officially opens ChatGPT API. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1385

1385

52

52

ChatGPT now allows free users to generate images by using DALL-E 3 with a daily limit

Aug 09, 2024 pm 09:37 PM

ChatGPT now allows free users to generate images by using DALL-E 3 with a daily limit

Aug 09, 2024 pm 09:37 PM

DALL-E 3 was officially introduced in September of 2023 as a vastly improved model than its predecessor. It is considered one of the best AI image generators to date, capable of creating images with intricate detail. However, at launch, it was exclus

A new programming paradigm, when Spring Boot meets OpenAI

Feb 01, 2024 pm 09:18 PM

A new programming paradigm, when Spring Boot meets OpenAI

Feb 01, 2024 pm 09:18 PM

In 2023, AI technology has become a hot topic and has a huge impact on various industries, especially in the programming field. People are increasingly aware of the importance of AI technology, and the Spring community is no exception. With the continuous advancement of GenAI (General Artificial Intelligence) technology, it has become crucial and urgent to simplify the creation of applications with AI functions. Against this background, "SpringAI" emerged, aiming to simplify the process of developing AI functional applications, making it simple and intuitive and avoiding unnecessary complexity. Through "SpringAI", developers can more easily build applications with AI functions, making them easier to use and operate.

Choosing the embedding model that best fits your data: A comparison test of OpenAI and open source multi-language embeddings

Feb 26, 2024 pm 06:10 PM

Choosing the embedding model that best fits your data: A comparison test of OpenAI and open source multi-language embeddings

Feb 26, 2024 pm 06:10 PM

OpenAI recently announced the launch of their latest generation embedding model embeddingv3, which they claim is the most performant embedding model with higher multi-language performance. This batch of models is divided into two types: the smaller text-embeddings-3-small and the more powerful and larger text-embeddings-3-large. Little information is disclosed about how these models are designed and trained, and the models are only accessible through paid APIs. So there have been many open source embedding models. But how do these open source models compare with the OpenAI closed source model? This article will empirically compare the performance of these new models with open source models. We plan to create a data

How to install chatgpt on mobile phone

Mar 05, 2024 pm 02:31 PM

How to install chatgpt on mobile phone

Mar 05, 2024 pm 02:31 PM

Installation steps: 1. Download the ChatGTP software from the ChatGTP official website or mobile store; 2. After opening it, in the settings interface, select the language as Chinese; 3. In the game interface, select human-machine game and set the Chinese spectrum; 4 . After starting, enter commands in the chat window to interact with the software.

Posthumous work of the OpenAI Super Alignment Team: Two large models play a game, and the output becomes more understandable

Jul 19, 2024 am 01:29 AM

Posthumous work of the OpenAI Super Alignment Team: Two large models play a game, and the output becomes more understandable

Jul 19, 2024 am 01:29 AM

If the answer given by the AI model is incomprehensible at all, would you dare to use it? As machine learning systems are used in more important areas, it becomes increasingly important to demonstrate why we can trust their output, and when not to trust them. One possible way to gain trust in the output of a complex system is to require the system to produce an interpretation of its output that is readable to a human or another trusted system, that is, fully understandable to the point that any possible errors can be found. For example, to build trust in the judicial system, we require courts to provide clear and readable written opinions that explain and support their decisions. For large language models, we can also adopt a similar approach. However, when taking this approach, ensure that the language model generates

Rust-based Zed editor has been open sourced, with built-in support for OpenAI and GitHub Copilot

Feb 01, 2024 pm 02:51 PM

Rust-based Zed editor has been open sourced, with built-in support for OpenAI and GitHub Copilot

Feb 01, 2024 pm 02:51 PM

Author丨Compiled by TimAnderson丨Produced by Noah|51CTO Technology Stack (WeChat ID: blog51cto) The Zed editor project is still in the pre-release stage and has been open sourced under AGPL, GPL and Apache licenses. The editor features high performance and multiple AI-assisted options, but is currently only available on the Mac platform. Nathan Sobo explained in a post that in the Zed project's code base on GitHub, the editor part is licensed under the GPL, the server-side components are licensed under the AGPL, and the GPUI (GPU Accelerated User) The interface) part adopts the Apache2.0 license. GPUI is a product developed by the Zed team

Don't wait for OpenAI, wait for Open-Sora to be fully open source

Mar 18, 2024 pm 08:40 PM

Don't wait for OpenAI, wait for Open-Sora to be fully open source

Mar 18, 2024 pm 08:40 PM

Not long ago, OpenAISora quickly became popular with its amazing video generation effects. It stood out among the crowd of literary video models and became the focus of global attention. Following the launch of the Sora training inference reproduction process with a 46% cost reduction 2 weeks ago, the Colossal-AI team has fully open sourced the world's first Sora-like architecture video generation model "Open-Sora1.0", covering the entire training process, including data processing, all training details and model weights, and join hands with global AI enthusiasts to promote a new era of video creation. For a sneak peek, let’s take a look at a video of a bustling city generated by the “Open-Sora1.0” model released by the Colossal-AI team. Open-Sora1.0

Microsoft, OpenAI plan to invest $100 million in humanoid robots! Netizens are calling Musk

Feb 01, 2024 am 11:18 AM

Microsoft, OpenAI plan to invest $100 million in humanoid robots! Netizens are calling Musk

Feb 01, 2024 am 11:18 AM

Microsoft and OpenAI were revealed to be investing large sums of money into a humanoid robot startup at the beginning of the year. Among them, Microsoft plans to invest US$95 million, and OpenAI will invest US$5 million. According to Bloomberg, the company is expected to raise a total of US$500 million in this round, and its pre-money valuation may reach US$1.9 billion. What attracts them? Let’s take a look at this company’s robotics achievements first. This robot is all silver and black, and its appearance resembles the image of a robot in a Hollywood science fiction blockbuster: Now, he is putting a coffee capsule into the coffee machine: If it is not placed correctly, it will adjust itself without any human remote control: However, After a while, a cup of coffee can be taken away and enjoyed: Do you have any family members who have recognized it? Yes, this robot was created some time ago.