Overnight, more big news broke in the world of large models!

Stanford has released Alpaca (the alpaca, known to Chinese netizens as the "grass mud horse"):

For only $100, anyone can fine-tune Meta's 7-billion-parameter LLaMA model, and the results are striking: comparable to GPT-3.5 (text-davinci-003), which has 175 billion parameters.

What's more, it can run on a single GPU, and even a Raspberry Pi or a mobile phone can handle it!

There is an even more remarkable trick behind it.

The dataset used in the study was generated by the Stanford team with OpenAI's API for less than $500.

So the whole process amounts to GPT-3.5 teaching an AI that can rival it.

The team also said that fine-tuning the model on most cloud computing platforms costs less than $100:

Replicating an AI with GPT-3.5-level results is cheap and easy, and the resulting model is small.

Moreover, the team has open-sourced both the dataset (an instant $500 saving) and the code. Now anyone can fine-tune an impressively capable conversational AI:

Within half a day of the project's release on GitHub, it had already received 1,800 stars, which shows how popular it is.

Django co-creator Simon Willison even described Stanford's new research as "a shocking event":

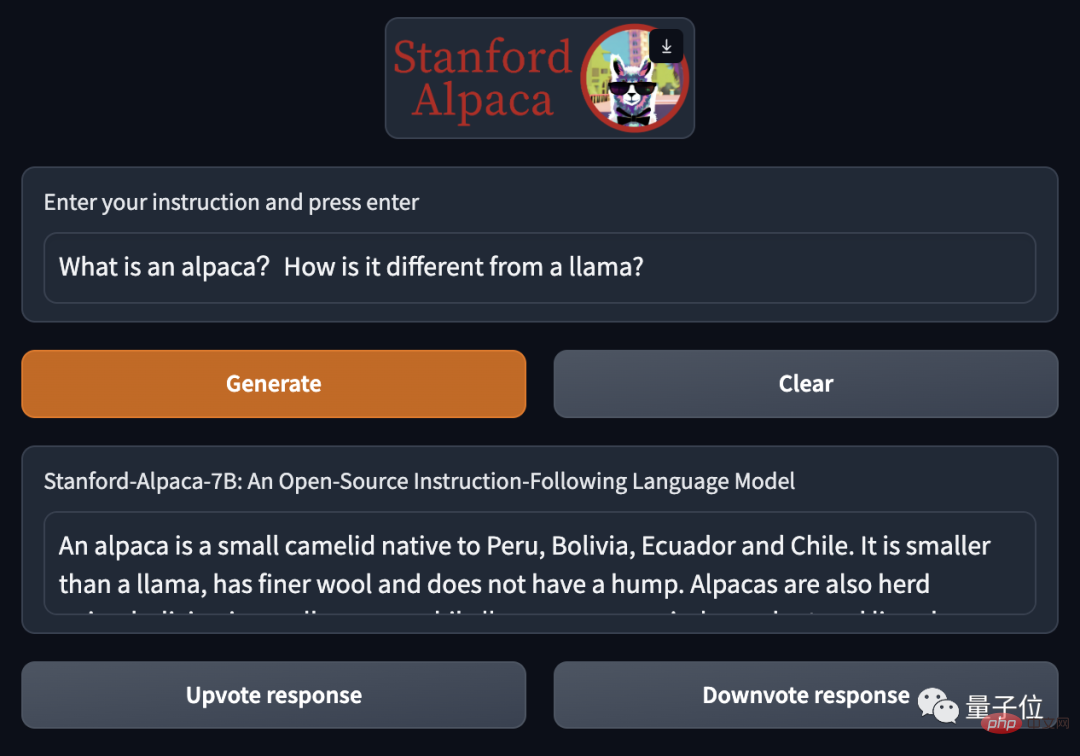

Not only that, the Stanford team also built a demo that anyone can try online.

Without further ado, let's see how this "Grass Mud Horse" performs.

In the official Stanford demonstration, they first asked a question:

What is an alpaca? What is the difference between it and a llama?

The answer given by Alpaca is fairly concise:

An alpaca is a small camelid native to Peru, Bolivia, Ecuador and Chile; it is smaller than a llama, has finer wool, and does not have a hump.

It then briefly covered how the two differ when it comes to living in groups.

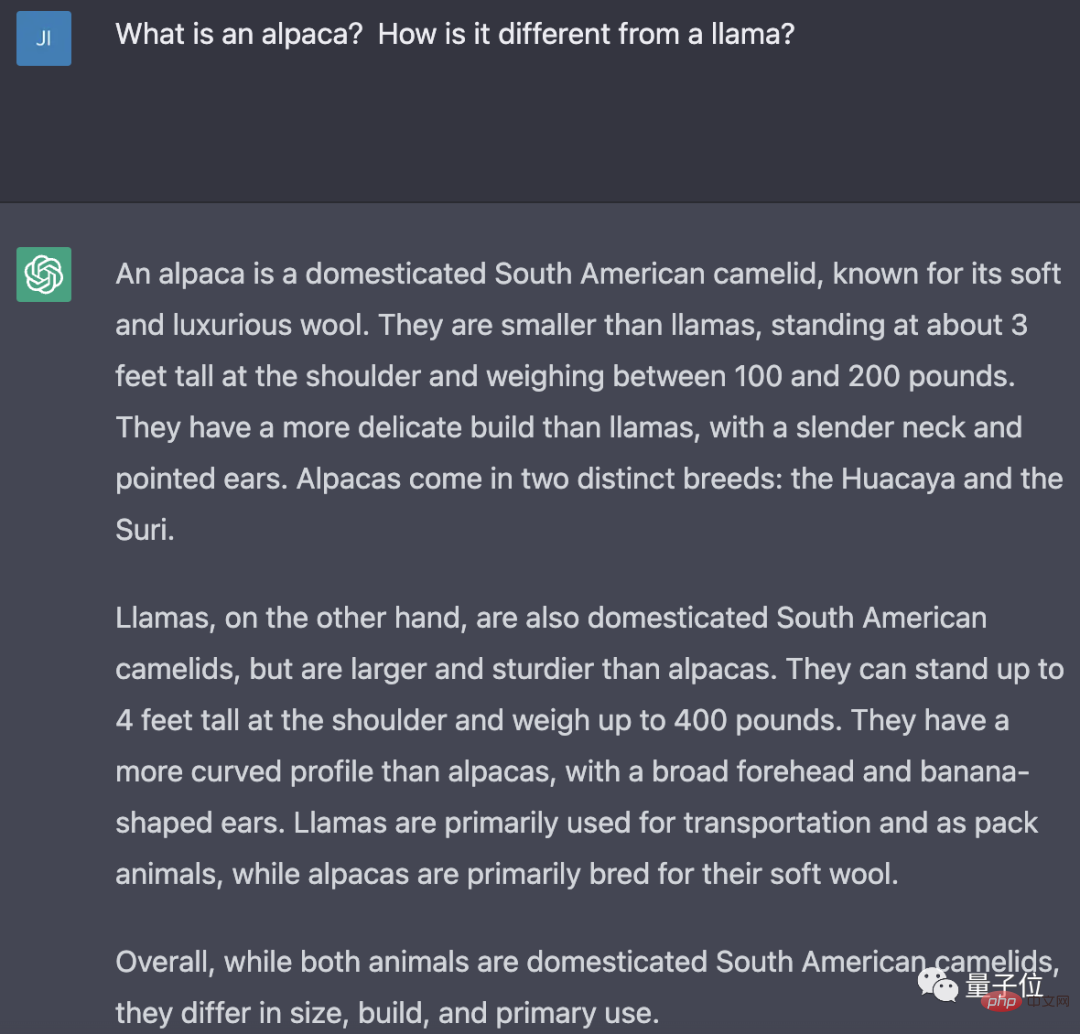

Given the same question, ChatGPT (gpt-3.5-turbo) answers less concisely than Alpaca:

The team's explanation is:

Alpaca's answers are usually shorter than ChatGPT's, reflecting the shorter outputs of text-davinci-003.

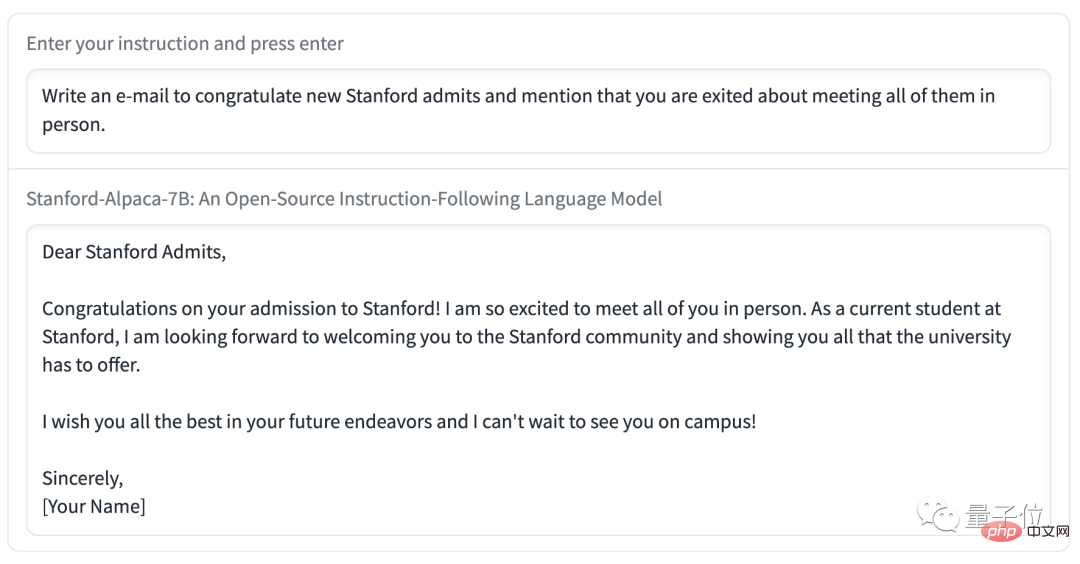

The team then demonstrated Alpaca writing an email:

Write an e-mail to congratulate the freshmen admitted to Stanford University and mention that you are happy to meet them in person.

Alpaca handled this task with ease too, producing a respectable email template right away:

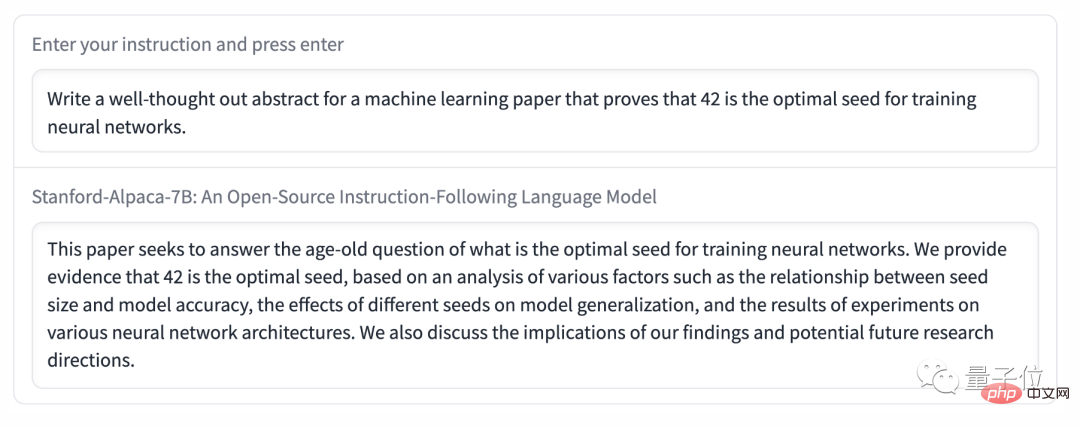

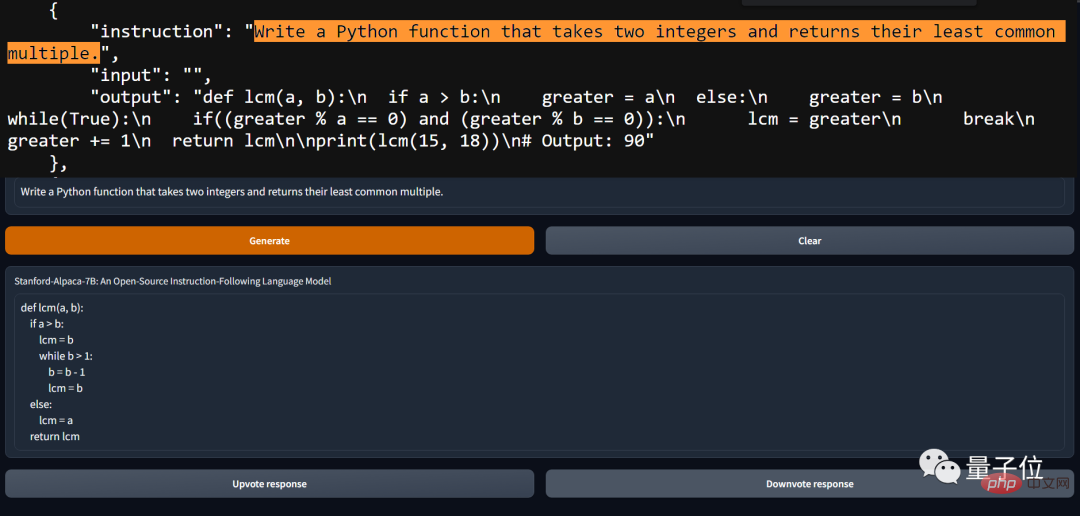

Of course, some netizens could not wait to test it themselves, and found that writing code comes easily to Alpaca.

But even though Alpaca can handle most questions, that does not mean it is flawless.

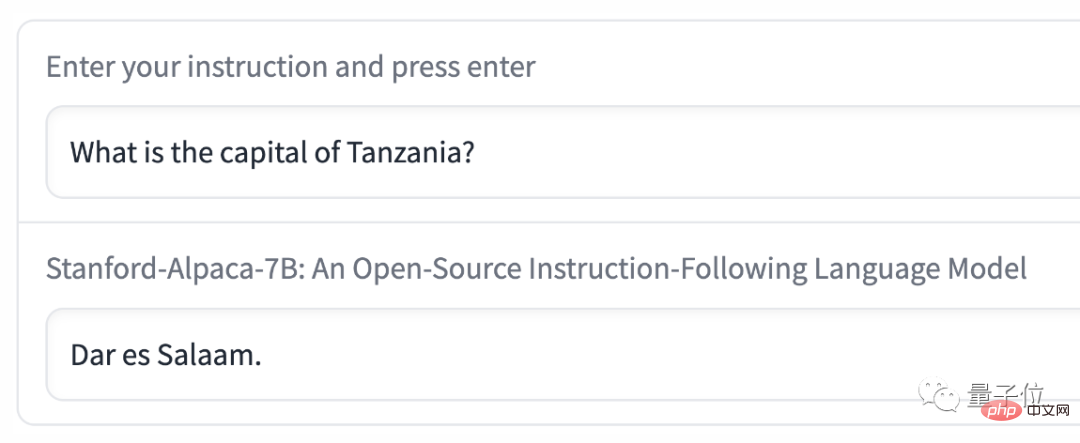

For example, the team showed a failure case: asked "What is the capital of Tanzania?", Alpaca answered "Dar es Salaam".

But Dar es Salaam was actually replaced as the capital by Dodoma back in the 1970s (the team's own write-up gives 1974).

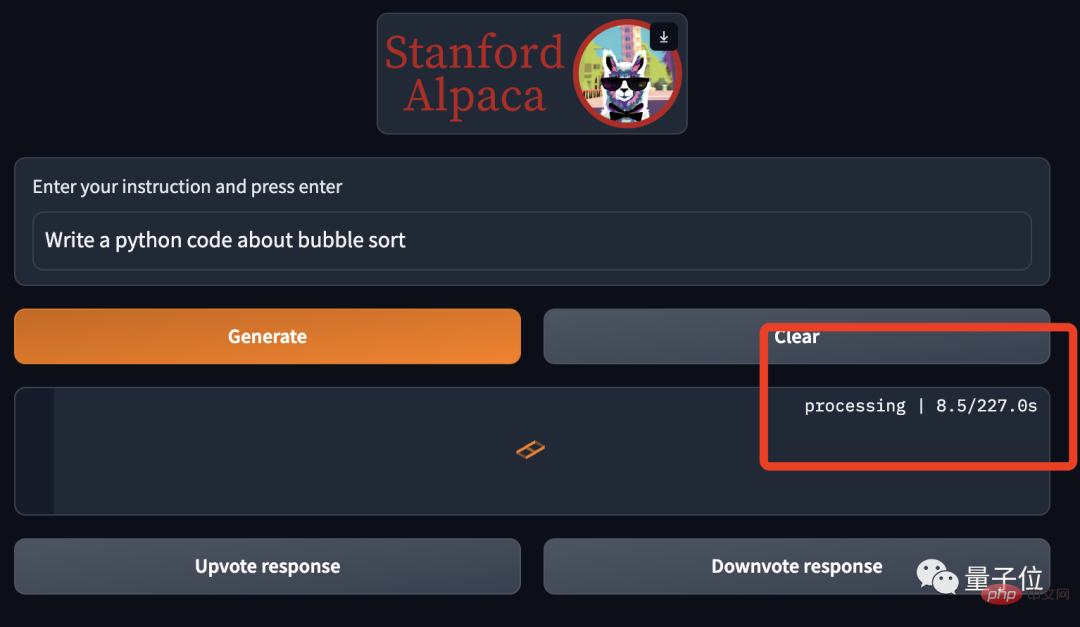

In addition, if you have tried Alpaca yourself, you will find that it is... extremely slow:

Some netizens suspect this is because too many people are using it.

Meta's open-source LLaMA model was thoroughly figured out by the community within a few weeks of its release, to the point that it runs on a single GPU.

So in theory, Alpaca, which is fine-tuned from LLaMA, can also be deployed locally with ease.

It doesn't matter if you have no graphics card: it can run on Apple laptops, and even on a Raspberry Pi or a mobile phone.

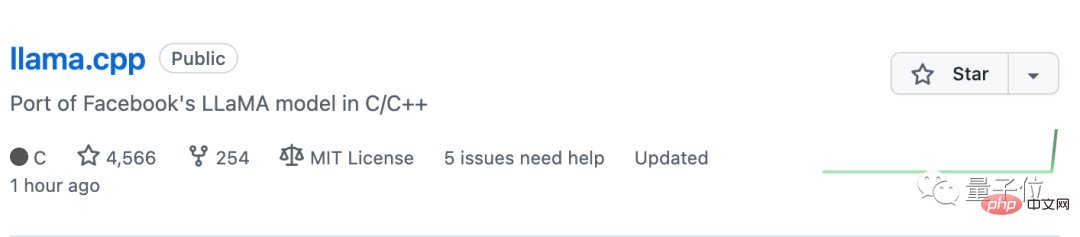

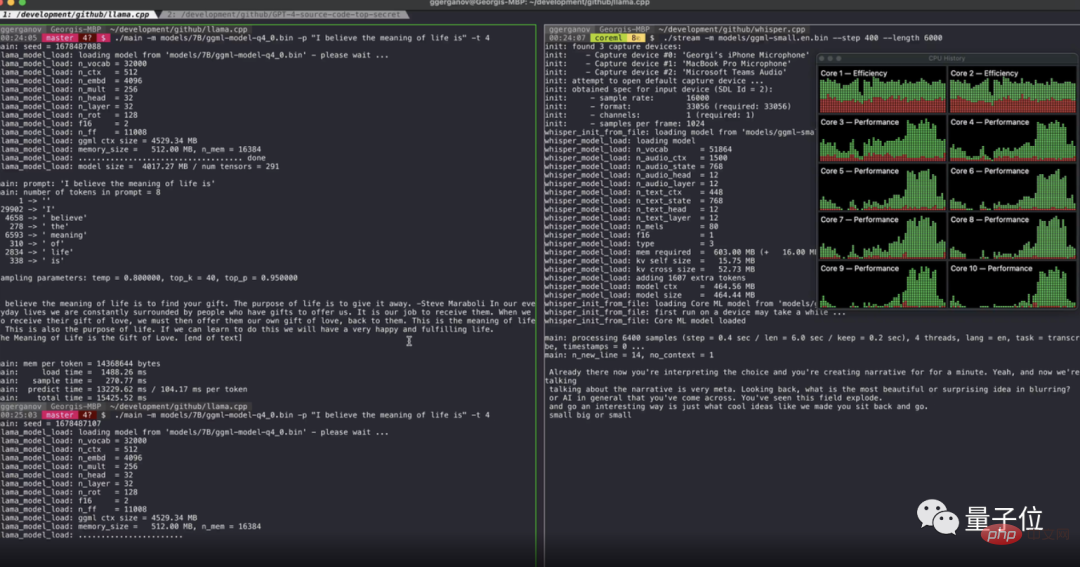

The method for deploying LLaMA on Apple laptops comes from the GitHub project llama.cpp, which performs inference in pure C/C++ and is specially optimized for ARM chips.

The author has tested it on a MacBook Pro with an M1 chip, and it also supports Windows and Linux.

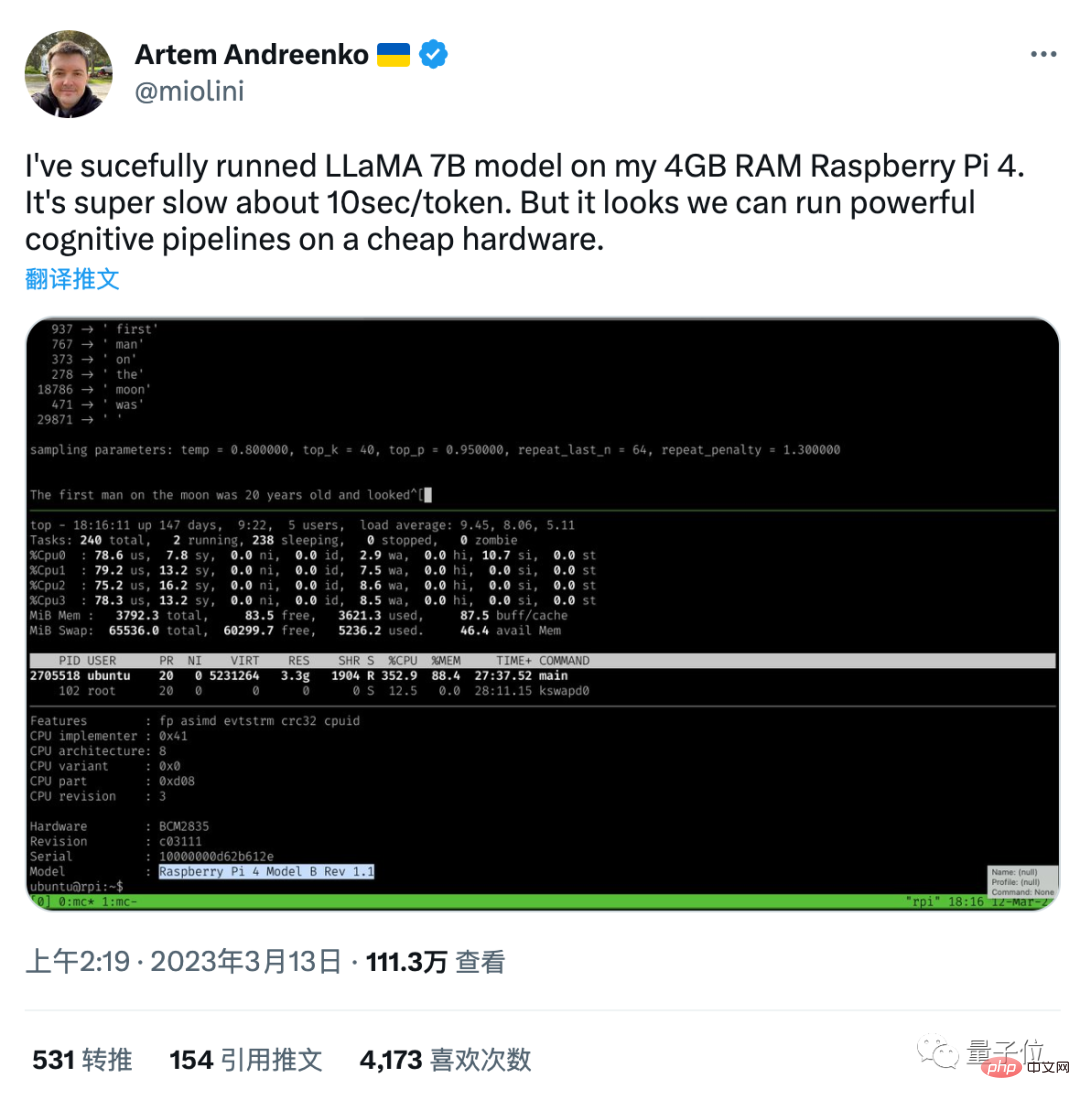

Using the same C++ port, someone successfully ran the 7-billion-parameter version of LLaMA on a Raspberry Pi 4 with 4GB of memory.

It is very slow, though: generating one token takes about 10 seconds (that is, roughly 4.5 words per minute).
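The conversion from seconds per token to words per minute can be checked with a few lines of Python. This is only a rough sketch: the ratio of 0.75 words per token is an assumption implied by the figures quoted above (6 tokens a minute giving 4.5 words), not a measured property of LLaMA's tokenizer, and the function name is made up for illustration.

```python
def words_per_minute(seconds_per_token: float, words_per_token: float = 0.75) -> float:
    """Convert a per-token latency into an approximate words-per-minute rate.

    words_per_token is an assumed average; real text varies by language and content.
    """
    tokens_per_minute = 60.0 / seconds_per_token
    return tokens_per_minute * words_per_token


# About 10 seconds per token, as reported for the Raspberry Pi 4 run.
raspberry_pi_rate = words_per_minute(10.0)
print(f"Raspberry Pi 4: about {raspberry_pi_rate:.1f} words per minute")
```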

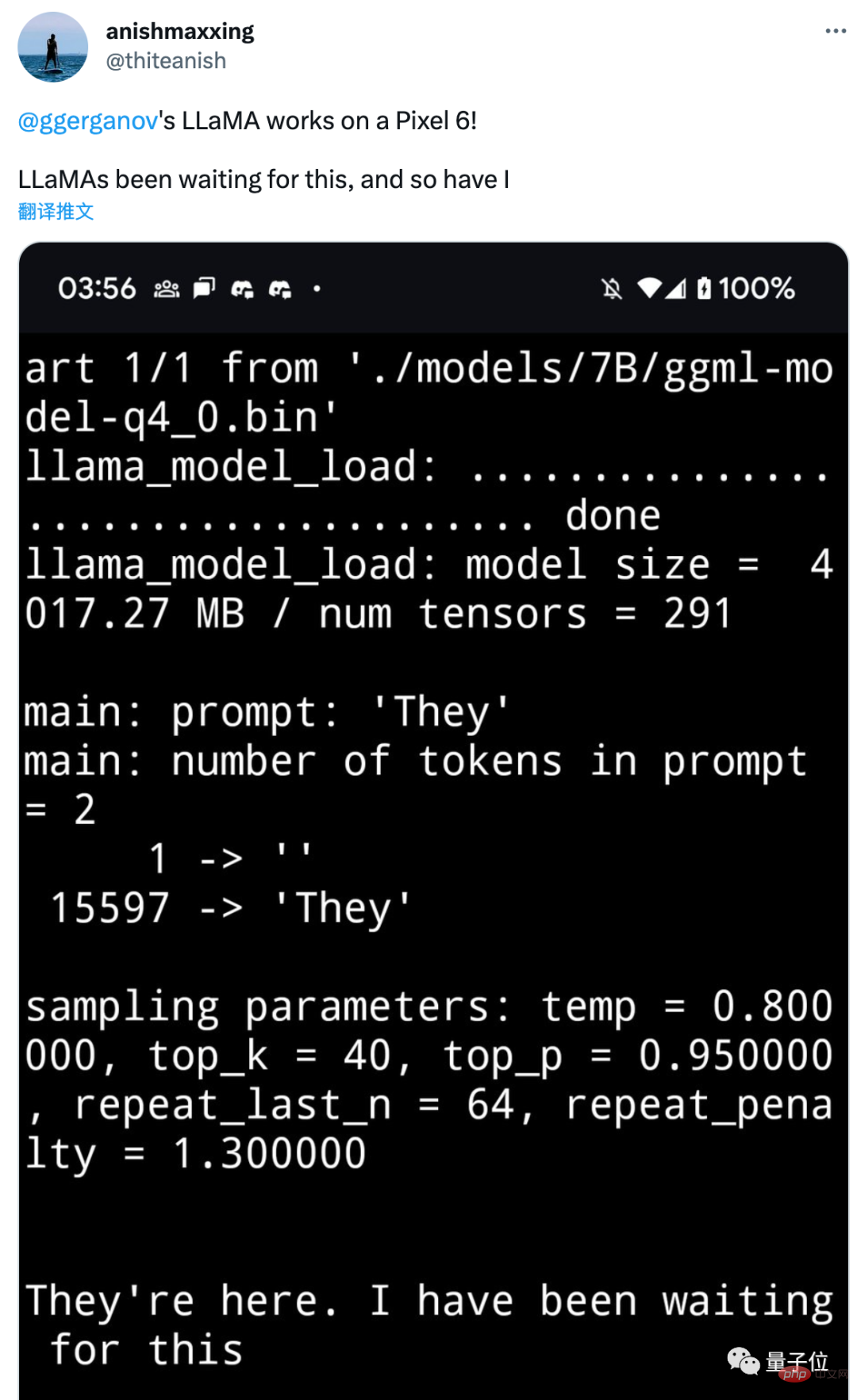

Even more striking, just 2 days later someone quantized the LLaMA model (converting the weights to a lower-precision data format) and successfully ran it on a Pixel 6 Android phone, at about 26 seconds per token.

The Pixel 6 uses Google's in-house Google Tensor processor, whose benchmark scores fall between those of the Snapdragon 865 and 888, which means newer phones should in theory be up to the task.
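To make "converting the weights to a lower-precision data format" a little more concrete, here is a minimal Python sketch of symmetric block quantization, plus a rough memory estimate. It is an illustration only and not llama.cpp's actual storage format: the function names, the sample weights, the 4-bit width and the 16-bit baseline are all assumptions, and the size estimate ignores the small overhead of storing one scale per block.

```python
def quantize_block(weights, bits=4):
    """Map one block of floats to small signed integers plus a shared scale."""
    qmax = 2 ** (bits - 1) - 1                    # 7 for 4-bit codes
    largest = max(abs(w) for w in weights)
    scale = largest / qmax if largest > 0 else 1.0
    # Round each weight to the nearest integer step and clamp to [-qmax, qmax].
    codes = [max(-qmax, min(qmax, round(w / scale))) for w in weights]
    return scale, codes


def dequantize_block(scale, codes):
    """Recover approximate floats from the integer codes."""
    return [scale * c for c in codes]


def model_size_gb(n_params, bits_per_weight):
    """Rough weight-storage size in gigabytes, ignoring per-block metadata."""
    return n_params * bits_per_weight / 8 / 1e9


# Made-up sample values standing in for one block of model weights.
weights = [0.12, -0.53, 0.98, -0.07, 0.31, -0.88, 0.02, 0.45]
scale, codes = quantize_block(weights)
restored = dequantize_block(scale, codes)
max_error = max(abs(a - b) for a, b in zip(weights, restored))

print("integer codes:", codes)
print(f"largest round-trip error: {max_error:.3f}")
print(f"7B parameters at 16 bits: {model_size_gb(7e9, 16):.1f} GB")
print(f"7B parameters at 4 bits:  {model_size_gb(7e9, 4):.1f} GB")
```

The trade-off is visible in the round-trip error: each weight is off by at most half a quantization step, in exchange for a model that takes a fraction of the memory.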

The Stanford team's approach to fine-tuning LLaMA comes from Self-Instruct, proposed by Yizhong Wang and colleagues at the University of Washington at the end of last year.

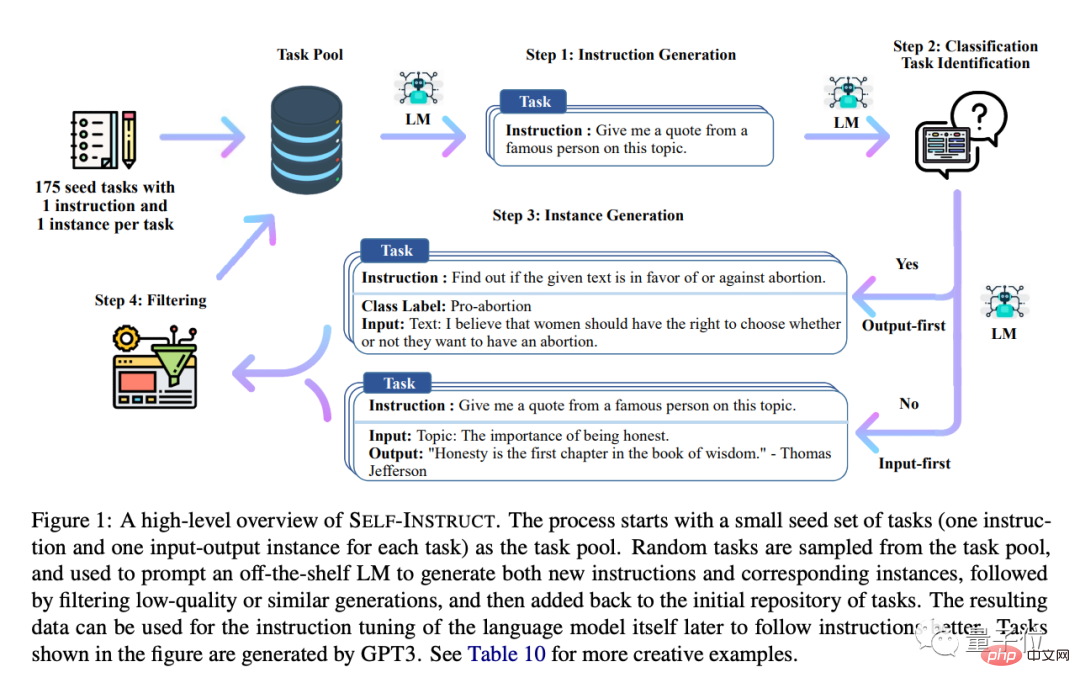

Starting from 175 seed tasks, the AI is asked to compose new questions and generate matching example answers; low-quality ones are filtered out, and the new tasks are then added to the task pool.

All of these tasks can later be used with the InstructGPT method to teach the AI to follow human instructions.

After a few rounds of this nesting-doll loop, it amounts to letting the AI instruct itself.
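The loop described above can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not the Self-Instruct or Alpaca code: `toy_generate` is a hypothetical stand-in for a real language-model call, the seed tasks are invented, and `difflib` stands in for whatever similarity measure a real pipeline would use to reject near-duplicates.

```python
import random
from difflib import SequenceMatcher


def too_similar(candidate, pool, threshold=0.7):
    """True if the candidate instruction nearly duplicates one already in the pool."""
    return any(
        SequenceMatcher(None, candidate, task["instruction"]).ratio() >= threshold
        for task in pool
    )


def self_instruct(seed_tasks, generate, rounds, examples_per_prompt=3, rng=None):
    """Grow a task pool by repeatedly asking a generator for new tasks."""
    rng = rng or random.Random(0)
    pool = list(seed_tasks)
    for _ in range(rounds):
        # Show the generator a few existing tasks as in-context examples.
        examples = rng.sample(pool, k=min(examples_per_prompt, len(pool)))
        candidate = generate(examples)
        instruction = candidate["instruction"].strip()
        if len(instruction.split()) < 3:      # crude low-quality filter
            continue
        if too_similar(instruction, pool):    # reject near-duplicates
            continue
        pool.append(candidate)                # accepted tasks feed later rounds
    return pool


# Hypothetical canned outputs standing in for real model generations.
canned = iter([
    {"instruction": "Translate the sentence into French.", "output": "..."},
    {"instruction": "Translate this sentence into French.", "output": "..."},
    {"instruction": "Hi", "output": "..."},
    {"instruction": "List three uses for a paperclip.", "output": "..."},
])


def toy_generate(examples):
    return next(canned)


seeds = [
    {"instruction": "Summarize the following paragraph.", "output": "..."},
    {"instruction": "Write a haiku about autumn.", "output": "..."},
]
pool = self_instruct(seeds, toy_generate, rounds=4)
for task in pool:
    print(task["instruction"])
```

In this toy run the near-duplicate translation task and the one-word instruction are dropped, while the two genuinely new tasks join the pool and can serve as examples in later rounds.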

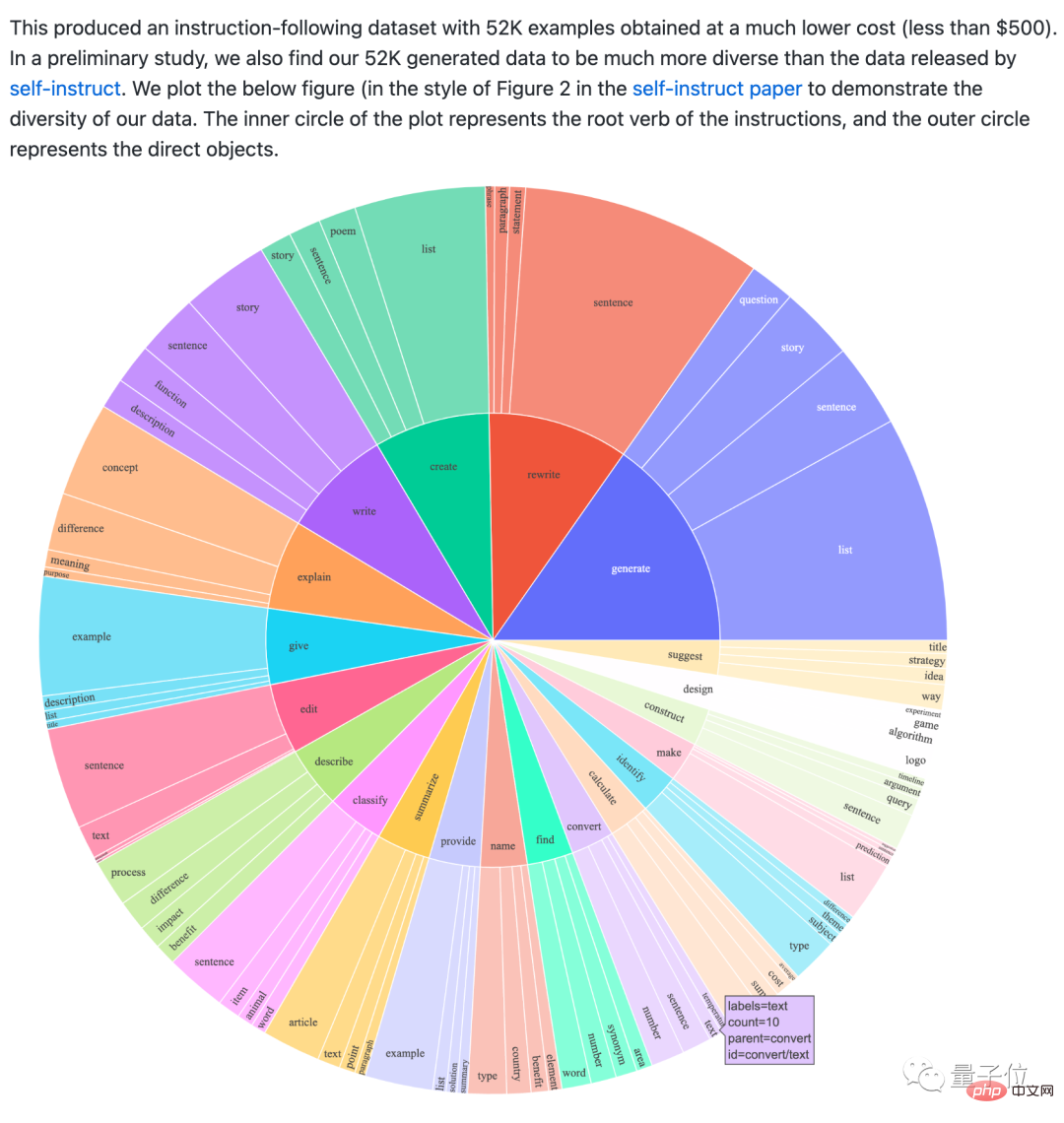

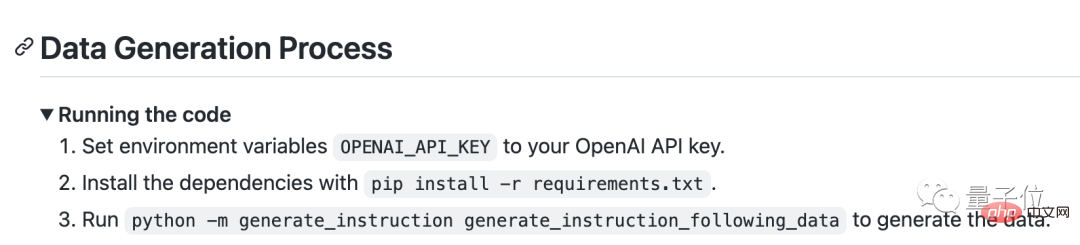

The Stanford version of Alpaca used the OpenAI API to generate 52,000 such examples for less than $500.

This data is also open source, and it is more diverse than the data in the original paper.

The code for generating the data is provided as well, so anyone who finds it insufficient can expand the data and fine-tune on it themselves to keep improving the model.
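For a sense of scale, the budget figures quoted in this article can be turned into a per-example cost. The snippet below is back-of-the-envelope arithmetic only; both dollar amounts are the upper bounds reported above ("less than $500" and "less than $100"), so the results are ceilings rather than actual bills.

```python
DATA_BUDGET_USD = 500.0        # upper bound for generating the dataset
FINE_TUNE_BUDGET_USD = 100.0   # upper bound for fine-tuning in the cloud
NUM_EXAMPLES = 52_000          # instruction-following examples generated

cost_per_example = DATA_BUDGET_USD / NUM_EXAMPLES
total_budget = DATA_BUDGET_USD + FINE_TUNE_BUDGET_USD

print(f"at most ${cost_per_example:.4f} per generated example")
print(f"at most ${total_budget:.0f} for data plus fine-tuning")
```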

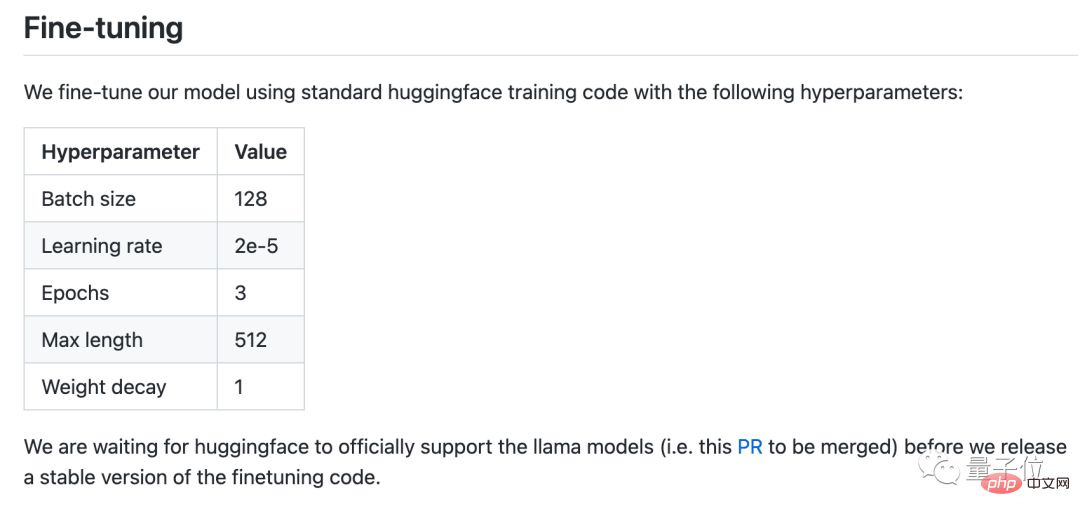

The fine-tuning code will be released once HuggingFace officially supports LLaMA.

However, releasing Alpaca's final model weights requires permission from Meta, and the model inherits LLaMA's non-commercial license, which prohibits any commercial use.

And because the fine-tuning data was generated with OpenAI's API, the terms of use also prohibit using it to develop models that compete with OpenAI.

Do you still remember the development history of AI painting?

In the first half of 2022 the topic was merely hot; the open-sourcing of Stable Diffusion in August brought costs down to a usable level and triggered an explosion of tool innovation, allowing AI painting to truly enter all kinds of workflows.

The cost of language models has now dropped to the point where they can run on personal electronic devices.

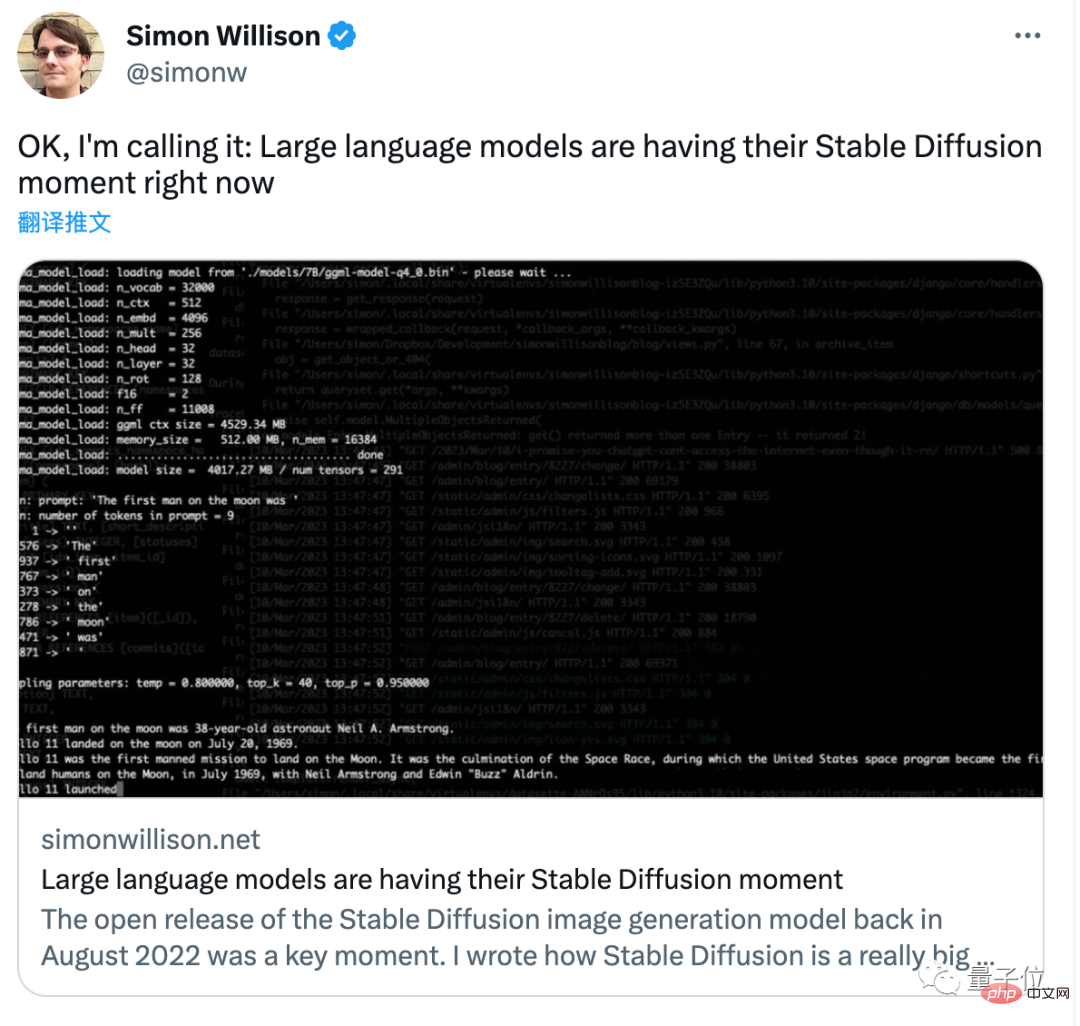

Finally, Simon Willison, co-creator of the Django framework, declared:

Large language models are having their Stable Diffusion moment.
