How do dogs see the world? Human researchers set out to decode visual cognition in dog brains


Dogs and humans have co-evolved over the past 15,000 years, and today many dogs live in human homes as pets. Some of them watch videos at home much as people do, and seem to understand what they see.

So, what does the world look like in the eyes of dogs?

Recently, a study from Emory University decoded visual images from the brains of dogs, revealing for the first time how the dog's brain reconstructs what it sees. The research was published in the Journal of Visualized Experiments.

Paper address: https://www.jove.com/t/64442/through-dog-s-eyes-fmri-decoding-naturalistic-videos-from-dog

The researchers recorded fMRI neural data from two awake, unrestrained dogs while they watched 30-minute videos on three separate occasions for a total of 90 minutes. They then used machine learning algorithms to analyze patterns in the neural data.

Gregory Berns, a professor of psychology at Emory University and one of the authors of the paper, said: "We can monitor the dog's brain activity while it watches the video and reconstruct to some extent what it is looking at. It's remarkable that we were able to do this."

Berns and colleagues pioneered the use of fMRI scanning in dogs, training the animals to remain completely still, without restraint, while their neural activity was measured. Ten years ago, the team published the first fMRI brain images of fully awake, unrestrained dogs, opening the door to what Berns calls "The Dog Project."

Berns and Callie, the first dog to have her brain activity scanned while fully awake and unrestrained.

Over the years, Berns’ lab has published multiple studies on how the canine brain processes vision, language, smells and rewards (such as receiving praise or food).

At the same time, machine learning technology continues to advance, allowing scientists to decode some of the patterns of human brain activity. Berns then began wondering whether similar technology could be applied to dog brains.

The new research combines machine learning with fMRI. fMRI is a neuroimaging technique that uses magnetic resonance imaging to measure the hemodynamic changes caused by neuronal activity. It is non-invasive and plays an important role in localizing brain function. Apart from humans, the technique has been used in only a handful of other species, including some primates.

Research Introduction

Two dogs took part in the experiment. The study demonstrates that machine learning, fMRI and related techniques can be applied to the analysis of canine brains. The researchers also hope that this research will help others gain a deeper understanding of how different animals think.

The experimental process is roughly as follows:

Experimental participants: Bhubo, 4 years old, and Daisy, 11 years old. Both dogs had previously taken part in several fMRI training sessions (Bhubo: 8 sessions, Daisy: 11 sessions), some of which involved viewing visual stimuli projected onto a screen. The two dogs were chosen because they could stay inside the scanner for extended periods without moving, even when their owners were out of sight.

Video shooting: The videos were shot from a dog's perspective to capture everyday scenes in a dog's life, including walking, feeding, playing, interacting with humans and interacting with other dogs. The footage was edited into 256 unique scenes, each depicting an event such as a dog cuddling with a human, a dog running, or a walk. Each scene was assigned a unique number and a label based on its content. The scenes were then assembled into five larger compilation videos, each approximately 6 minutes long.
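The labelling step can be pictured as a small data structure: each scene carries a number, a label and a duration, and a compilation's timeline can be expanded into one label per fMRI volume. The sketch below is only an illustration. The `Scene` class, the `labels_per_volume` helper, the example labels and durations, and the 1-second repetition time (`tr_seconds`) are all assumptions made for the example, not details taken from the paper.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Scene:
    scene_id: int      # unique number assigned to the scene
    label: str         # content label, e.g. "walking"
    duration_s: float  # scene length in seconds (made-up values below)


def labels_per_volume(scenes, tr_seconds=1.0):
    """Return one label per fMRI volume for scenes played back to back.

    A volume gets the label of the scene that is on screen at the
    moment the volume's acquisition starts.
    """
    total = sum(s.duration_s for s in scenes)
    n_volumes = int(total // tr_seconds)
    labels = []
    idx, scene_end = 0, scenes[0].duration_s
    for v in range(n_volumes):
        t = v * tr_seconds
        # Advance to the scene that contains time t.
        while t >= scene_end and idx < len(scenes) - 1:
            idx += 1
            scene_end += scenes[idx].duration_s
        labels.append(scenes[idx].label)
    return labels


# Hypothetical three-scene compilation (9 seconds in total).
compilation = [
    Scene(1, "walking", 3.0),
    Scene(2, "eating", 2.0),
    Scene(3, "playing", 4.0),
]
volume_labels = labels_per_volume(compilation, tr_seconds=1.0)
```

With per-volume labels of this kind, each brain volume recorded during a viewing can be paired with what was on screen at that moment, which is the form of data a classifier needs.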

Experimental design: Participants were scanned in a 3T MRI scanner while watching a compilation video projected onto a screen behind the MRI bore. The dogs had been trained in advance to place their heads in a custom-made chin rest, like the one pictured below, to keep the head in a stable position.

The experiment was divided into three viewing sessions of 30 minutes of video each, for a total of 90 minutes.

The dogs were scanned with fMRI throughout the viewing sessions, and the data were analyzed afterwards. The analysis used Ivis, a nonlinear machine learning method based on Siamese neural networks (SNN) that has been successful in analyzing high-dimensional biological data. Other machine learning tools, such as the scikit-learn library and a random forest classifier (RFC), were also used.
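To make the decoding idea concrete, the sketch below trains a classifier on labelled activity patterns and scores it on held-out patterns. It is not the study's pipeline: a simple nearest-centroid classifier stands in for Ivis and the random forest, the "voxel" data are synthetic, and every name (`make_patterns`, `fit_centroids`, `predict`) as well as the action labels and effect size are assumptions chosen for illustration.

```python
import random
from collections import defaultdict


def make_patterns(rng, n_per_class=40, n_voxels=20):
    """Synthetic 'voxel' patterns: each action raises a different block of voxels."""
    data = []
    for k, action in enumerate(["eating", "playing", "sniffing"]):
        for _ in range(n_per_class):
            x = [rng.gauss(0.0, 1.0) for _ in range(n_voxels)]
            for j in range(5 * k, 5 * k + 5):
                x[j] += 3.0  # class-specific signal (assumed effect size)
            data.append((x, action))
    return data


def fit_centroids(train):
    """Average the training patterns of each label."""
    groups = defaultdict(list)
    for x, y in train:
        groups[y].append(x)
    return {y: [sum(col) / len(xs) for col in zip(*xs)] for y, xs in groups.items()}


def predict(centroids, x):
    """Return the label whose centroid is closest in squared Euclidean distance."""
    def dist(c):
        return sum((a - b) ** 2 for a, b in zip(x, c))
    return min(centroids, key=lambda y: dist(centroids[y]))


rng = random.Random(0)
data = make_patterns(rng)
rng.shuffle(data)
split = int(0.75 * len(data))          # 75% for training, 25% held out
train, test = data[:split], data[split:]
centroids = fit_centroids(train)
accuracy = sum(predict(centroids, x) == y for x, y in test) / len(test)
print(f"held-out accuracy: {accuracy:.2f} (chance is about 0.33)")
```

Comparing held-out accuracy with the chance level is the basic check behind decoding results of this kind; real fMRI data are far noisier than this toy example.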

Daisy, who is being scanned, has tape on her ears to hold earplugs in place to eliminate noise.

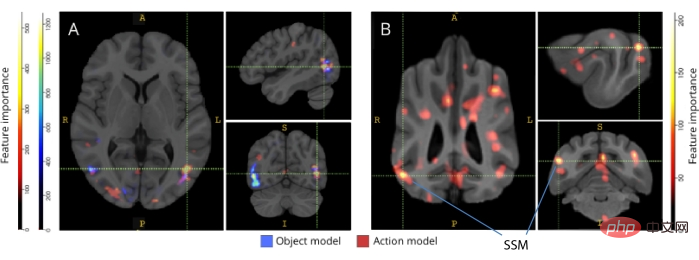

The study also compared how human and dog brains work. Results from two human subjects showed that a model built with neural networks mapped brain data to both object-based and action-based classifiers with 99% accuracy. When the same model was applied to dog brain patterns, it did not work for object classifiers, but it decoded action classes with 75% to 88% accuracy. This points to substantial differences in how human and dog brains work, as shown in the experimental results for humans (A) and dogs (B). Berns concluded: "We humans are very concerned about what we see, but dogs seem to care less about who they see or what they see, and more about the action behavior."

Interested readers can read the original text of the paper to learn more research details.


Hot Topics

Beyond ORB-SLAM3! SL-SLAM: Low light, severe jitter and weak texture scenes are all handled

May 30, 2024 am 09:35 AM

Beyond ORB-SLAM3! SL-SLAM: Low light, severe jitter and weak texture scenes are all handled

May 30, 2024 am 09:35 AM

Written previously, today we discuss how deep learning technology can improve the performance of vision-based SLAM (simultaneous localization and mapping) in complex environments. By combining deep feature extraction and depth matching methods, here we introduce a versatile hybrid visual SLAM system designed to improve adaptation in challenging scenarios such as low-light conditions, dynamic lighting, weakly textured areas, and severe jitter. sex. Our system supports multiple modes, including extended monocular, stereo, monocular-inertial, and stereo-inertial configurations. In addition, it also analyzes how to combine visual SLAM with deep learning methods to inspire other research. Through extensive experiments on public datasets and self-sampled data, we demonstrate the superiority of SL-SLAM in terms of positioning accuracy and tracking robustness.

What is NeRF? Is NeRF-based 3D reconstruction voxel-based?

Oct 16, 2023 am 11:33 AM

What is NeRF? Is NeRF-based 3D reconstruction voxel-based?

Oct 16, 2023 am 11:33 AM

1 Introduction Neural Radiation Fields (NeRF) are a fairly new paradigm in the field of deep learning and computer vision. This technology was introduced in the ECCV2020 paper "NeRF: Representing Scenes as Neural Radiation Fields for View Synthesis" (which won the Best Paper Award) and has since become extremely popular, with nearly 800 citations to date [1 ]. The approach marks a sea change in the traditional way machine learning processes 3D data. Neural radiation field scene representation and differentiable rendering process: composite images by sampling 5D coordinates (position and viewing direction) along camera rays; feed these positions into an MLP to produce color and volumetric densities; and composite these values using volumetric rendering techniques image; the rendering function is differentiable, so it can be passed

The first pure visual static reconstruction of autonomous driving

Jun 02, 2024 pm 03:24 PM

The first pure visual static reconstruction of autonomous driving

Jun 02, 2024 pm 03:24 PM

A purely visual annotation solution mainly uses vision plus some data from GPS, IMU and wheel speed sensors for dynamic annotation. Of course, for mass production scenarios, it doesn’t have to be pure vision. Some mass-produced vehicles will have sensors like solid-state radar (AT128). If we create a data closed loop from the perspective of mass production and use all these sensors, we can effectively solve the problem of labeling dynamic objects. But there is no solid-state radar in our plan. Therefore, we will introduce this most common mass production labeling solution. The core of a purely visual annotation solution lies in high-precision pose reconstruction. We use the pose reconstruction scheme of Structure from Motion (SFM) to ensure reconstruction accuracy. But pass

Base64 encoding and decoding using Python

Sep 02, 2023 pm 01:49 PM

Base64 encoding and decoding using Python

Sep 02, 2023 pm 01:49 PM

Let's say you have a binary image file that you want to transfer over the network. You're surprised that the other party didn't receive the file correctly - it just contains weird characters! Well, it looks like you're trying to send the file in raw bits and bytes format, while the media you're using is designed for streaming text. What are the solutions to avoid such problems? The answer is Base64 encoding. In this article, I will show you how to encode and decode binary images using Python. The program is explained as a standalone local program, but you can apply the concept to different applications, such as sending encoded images from a mobile device to a server and many other applications. What is Base64? Before diving into this article, let’s define Base6

Point cloud registration is inescapable for 3D vision! Understand all mainstream solutions and challenges in one article

Apr 02, 2024 am 11:31 AM

Point cloud registration is inescapable for 3D vision! Understand all mainstream solutions and challenges in one article

Apr 02, 2024 am 11:31 AM

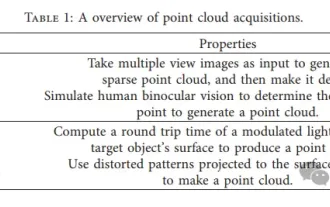

Point cloud, as a collection of points, is expected to bring about a change in acquiring and generating three-dimensional (3D) surface information of objects through 3D reconstruction, industrial inspection and robot operation. The most challenging but essential process is point cloud registration, i.e. obtaining a spatial transformation that aligns and matches two point clouds obtained in two different coordinates. This review introduces the overview and basic principles of point cloud registration, systematically classifies and compares various methods, and solves the technical problems existing in point cloud registration, trying to provide academic researchers outside the field and Engineers provide guidance and facilitate discussions on a unified vision for point cloud registration. The general method of point cloud acquisition is divided into active and passive methods. The point cloud actively acquired by the sensor is the active method, and the point cloud is reconstructed later.

Take a look at the past and present of Occ and autonomous driving! The first review comprehensively summarizes the three major themes of feature enhancement/mass production deployment/efficient annotation.

May 08, 2024 am 11:40 AM

Take a look at the past and present of Occ and autonomous driving! The first review comprehensively summarizes the three major themes of feature enhancement/mass production deployment/efficient annotation.

May 08, 2024 am 11:40 AM

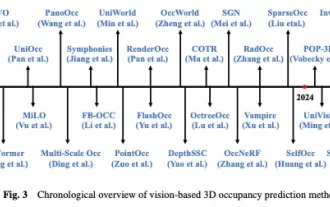

Written above & The author’s personal understanding In recent years, autonomous driving has received increasing attention due to its potential in reducing driver burden and improving driving safety. Vision-based three-dimensional occupancy prediction is an emerging perception task suitable for cost-effective and comprehensive investigation of autonomous driving safety. Although many studies have demonstrated the superiority of 3D occupancy prediction tools compared to object-centered perception tasks, there are still reviews dedicated to this rapidly developing field. This paper first introduces the background of vision-based 3D occupancy prediction and discusses the challenges encountered in this task. Next, we comprehensively discuss the current status and development trends of current 3D occupancy prediction methods from three aspects: feature enhancement, deployment friendliness, and labeling efficiency. at last

How to implement encoding and decoding of Chinese characters in C language programming?

Feb 19, 2024 pm 02:15 PM

How to implement encoding and decoding of Chinese characters in C language programming?

Feb 19, 2024 pm 02:15 PM

In modern computer programming, C language is one of the most commonly used programming languages. Although the C language itself does not directly support Chinese encoding and decoding, we can use some technologies and libraries to achieve this function. This article will introduce how to implement Chinese encoding and decoding in C language programming software. First, to implement Chinese encoding and decoding, we need to understand the basic concepts of Chinese encoding. Currently, the most commonly used Chinese encoding scheme is Unicode encoding. Unicode encoding assigns a unique numeric value to each character so that when calculating

You can play Genshin Impact just by moving your mouth! Use AI to switch characters and attack enemies. Netizen: 'Ayaka, use Kamiri-ryu Frost Destruction'

May 13, 2023 pm 07:52 PM

You can play Genshin Impact just by moving your mouth! Use AI to switch characters and attack enemies. Netizen: 'Ayaka, use Kamiri-ryu Frost Destruction'

May 13, 2023 pm 07:52 PM

When it comes to domestic games that have become popular all over the world in the past two years, Genshin Impact definitely takes the cake. According to this year’s Q1 quarter mobile game revenue survey report released in May, “Genshin Impact” firmly won the first place among card-drawing mobile games with an absolute advantage of 567 million U.S. dollars. This also announced that “Genshin Impact” has been online in just 18 years. A few months later, total revenue from the mobile platform alone exceeded US$3 billion (approximately RM13 billion). Now, the last 2.8 island version before the opening of Xumi is long overdue. After a long draft period, there are finally new plots and areas to play. But I don’t know how many “Liver Emperors” there are. Now that the island has been fully explored, grass has begun to grow again. There are a total of 182 treasure chests + 1 Mora box (not included). There is no need to worry about the long grass period. The Genshin Impact area is never short of work. No, during the long grass