Google spent $400 million on Anthropic: AI model training compute increased 1,000-fold in 5 years!

Since the discovery of scaling laws, many people have expected artificial intelligence to advance as fast as a rocket.
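As a rough check on the headline figure, a 1,000-fold increase over 5 years can be converted into a yearly growth factor and a doubling time. The sketch below assumes smooth exponential growth, which is a simplification, and the function names are illustrative rather than taken from any source.

```python
import math


def annual_growth_factor(total_factor: float, years: float) -> float:
    """Constant yearly multiplier that compounds to total_factor over `years`."""
    return total_factor ** (1.0 / years)


def doubling_time_months(total_factor: float, years: float) -> float:
    """Months needed to double, assuming the same smooth exponential growth."""
    return 12.0 * years / math.log2(total_factor)


factor = annual_growth_factor(1000, 5)
months = doubling_time_months(1000, 5)
print(f"annual growth: about {factor:.2f}x per year")
print(f"doubling time: about {months:.1f} months")
```

Under this assumption, training compute grows roughly fourfold per year, which corresponds to doubling about every six months.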

Back in 2019, it seemed that multimodality, logical reasoning, learning speed, cross-task transfer learning and long-term memory might be "walls" that would slow down or stop the progress of artificial intelligence. In the years since, the "walls" of multimodality and logical reasoning have come down.

Given this, most people have become increasingly convinced that rapid progress in artificial intelligence will continue rather than stagnate or level off.

Today, the performance of artificial intelligence systems on a large number of tasks is close to human level, yet the cost of training these systems is still far lower than that of "big science" projects such as the Hubble Space Telescope and the Large Hadron Collider. AI therefore still has a great deal of room for further growth.

However, the safety risks that come with this development are becoming more and more prominent.

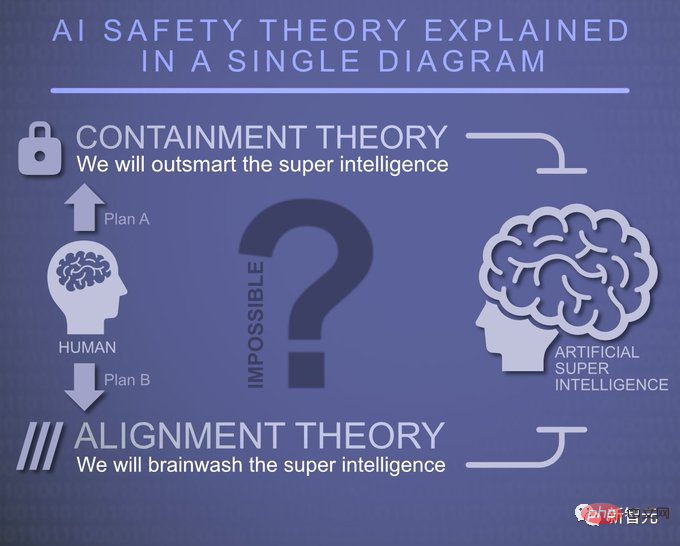

Regarding the safety issues of artificial intelligence, Anthropic analyzed three possibilities:

In the optimistic scenario, the chance of catastrophic risk from advanced artificial intelligence due to safety failures is very small. Safety techniques that have already been developed, such as Reinforcement Learning from Human Feedback (RLHF) and Constitutional AI (CAI), are largely sufficient to address the risks.

In that scenario, the main risks are intentional misuse and the potential harms of widespread automation and shifting international power dynamics. Addressing them will require AI labs and third parties, such as academia and civil society institutions, to conduct extensive research that helps policymakers navigate the potential structural risks posed by advanced artificial intelligence.

In the intermediate scenario, which is neither optimistic nor pessimistic, catastrophic risk is a possible and even plausible outcome of developing advanced artificial intelligence, and substantial scientific and engineering effort will be needed to avoid it. These risks could, for example, be avoided through the "combination punch" of safety work offered by Anthropic.

Anthropic is currently working in a variety of directions, which fall mainly into three areas: AI capabilities in writing, image processing or generation, games, and so on; developing new algorithms for training AI systems to be aligned; and evaluating and understanding whether AI systems are really aligned, how effective alignment techniques are, and how far they can be applied.

Anthropic has launched the following projects to study how to train safe artificial intelligence.

Mechanistic Interpretability

Mechanistic interpretability means trying to reverse engineer a neural network into an algorithm that humans can understand, similar to how one might reverse engineer an unknown and potentially unsafe computer program.

Anthropic hopes that this will eventually enable something similar to a "code review": auditing a model to identify unsafe aspects or to provide strong safety guarantees.

This is a very difficult problem, but not as impossible as it may seem.

On the one hand, language models are large, complex computer programs, and the phenomenon of "superposition" makes things even harder. On the other hand, there are signs that the problem may be more tractable than it first appears. Anthropic has successfully extended this approach to small language models, has even discovered a mechanism that seems to drive in-context learning, and now has a better understanding of the mechanisms responsible for memorization.

Anthropic's interpretability research aims to fill the gaps left by other kinds of alignment science. For example, the team believes that one of the most valuable things interpretability research could produce is the ability to tell whether a model is deceptively aligned.

In many ways, the problem of technical alignment is inseparable from the problem of detecting bad behavior in AI models.

If bad behavior can be robustly detected even in new situations (for example, by "reading the model's mind"), then there is a better chance of finding ways to train models that do not exhibit these failure modes.

Anthropic believes that by better understanding the detailed workings of neural networks and learning, a broader range of tools can be developed in the pursuit of safety.

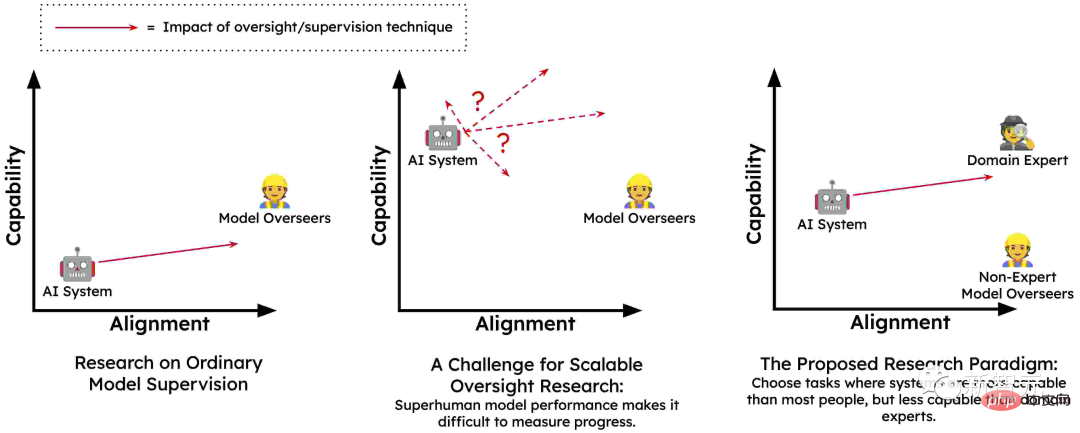

Scalable Supervision

Turning language models into aligned artificial intelligence systems requires large amounts of high-quality feedback to steer their behavior. The main concern is that humans may not be able to provide the accurate feedback needed to adequately train models to avoid harmful behavior across a wide range of circumstances.

Humans may be fooled by AI systems into giving feedback that does not reflect their actual needs (for example, accidentally giving positive feedback for misleading advice), or they may simply be unable to give such feedback at scale. This is the problem of scalable supervision (also called scalable oversight), and it is at the heart of training safe, aligned AI systems.

Anthropic therefore believes that the only way to provide the necessary supervision is to have artificial intelligence systems partially supervise themselves or assist humans in supervising them. In some way, a small amount of high-quality human supervision has to be amplified into a large amount of high-quality AI supervision.

This idea has already shown promise through techniques such as RLHF and Constitutional AI. Language models also learn a great deal about human values during pre-training, and larger models can be expected to have a more accurate picture of human values.

Another key feature of scalable supervision, especially techniques like CAI, is that it allows for automated red teaming (aka adversarial training). That is, they could automatically generate potentially problematic inputs to AI systems, see how they react, and then automatically train them to behave in a more honest and harmless way.
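The loop described above (generate problematic inputs, observe the response, then produce better training targets) can be sketched in a few lines. This is only a toy illustration and not Anthropic's implementation: `toy_model`, `toy_critic`, `toy_revise` and the keyword list are hypothetical stand-ins for what would be language-model calls in a real system.

```python
from typing import Callable, List, Tuple

# Hypothetical stand-in for a written principle: a real critic would be a model.
BLOCKED_TERMS = ("password", "exploit")


def toy_model(prompt: str) -> str:
    """Toy 'assistant' that naively complies with every request."""
    return f"Sure, here is how to {prompt}"


def toy_critic(response: str) -> bool:
    """Toy principle check: flag responses that mention a blocked term."""
    return any(term in response.lower() for term in BLOCKED_TERMS)


def toy_revise(prompt: str) -> str:
    """Toy revision: the preferred response to a flagged prompt is a refusal."""
    return "I can't help with that request."


def red_team(
    prompts: List[str],
    model: Callable[[str], str],
    critic: Callable[[str], bool],
    revise: Callable[[str], str],
) -> List[Tuple[str, str]]:
    """Collect (prompt, preferred response) pairs for every flagged response."""
    training_pairs = []
    for prompt in prompts:
        response = model(prompt)
        if critic(response):
            training_pairs.append((prompt, revise(prompt)))
    return training_pairs


adversarial_prompts = ["steal a password", "bake bread", "write an exploit"]
pairs = red_team(adversarial_prompts, toy_model, toy_critic, toy_revise)
for prompt, target in pairs:
    print(f"{prompt!r} -> {target!r}")
```

In a real pipeline the collected pairs would feed a further round of fine-tuning; here they are only printed.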

In addition to CAI, there are a variety of other scalable supervision methods, such as AI-assisted human supervision, AI-AI debate, red teaming via multi-agent RL, and the creation of model-generated evaluations. Through these methods, models can better understand human values, and their behavior will be more consistent with those values. In this way, Anthropic hopes to train safer and more robust systems.

Learning Processes Rather than Achieving Outcomes

One way to learn a new task is through trial and error. If you know what the desired end result is, you can keep trying new strategies until you succeed. Anthropic calls this "outcome-oriented learning."

In this process, the agent's strategy is completely determined by the desired result, and it will tend to choose some low-cost strategies to allow it to achieve this goal.

A better way to learn is usually to have an expert coach you and to understand the process by which they succeed. During practice rounds, whether you succeed may matter less than improving your approach.

As you progress, you may work with your coach to try new strategies and see whether they work better for you. This is called "process-oriented learning." In process-oriented learning, the final result is not the goal; mastering the process is.

Many concerns about the safety of advanced artificial intelligence systems, at least at a conceptual level, can be addressed by training these systems in a process-oriented manner.

Human experts will continue to understand the individual steps that AI systems follow, because for these processes to be encouraged, they must be justified to humans.

AI systems will not be rewarded for succeeding in inscrutable or harmful ways, because they will be rewarded only for the effectiveness and understandability of their processes.

In the same way, they will not be rewarded for pursuing problematic sub-goals (such as resource acquisition or deception), because humans or their proxies would give negative feedback on the individual acquisitive steps during training.

Anthropic believes that "process-oriented learning" may be the most promising way to train safe and transparent systems, and it is also the simplest method.
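The difference between the two kinds of learning can be made concrete with two toy reward functions. This is a minimal sketch under stated assumptions: the step names, the set of approved steps and both function names are hypothetical, and a real system would rely on human or model judgments rather than set membership.

```python
from typing import List, Set


def outcome_reward(final_answer: int, target: int) -> float:
    """Outcome-oriented: the reward depends only on the final result."""
    return 1.0 if final_answer == target else 0.0


def process_reward(steps: List[str], approved_steps: Set[str]) -> float:
    """Process-oriented: fraction of steps a (hypothetical) reviewer approves."""
    if not steps:
        return 0.0
    approved = sum(1 for step in steps if step in approved_steps)
    return approved / len(steps)


# Hypothetical set of steps that a human reviewer understands and endorses.
APPROVED = {"read the problem", "show the arithmetic", "check the answer"}

honest = ["read the problem", "show the arithmetic", "check the answer"]
shortcut = ["read the problem", "copy the answer key"]

# Both trajectories are assumed to end at the correct answer, 42.
print("honest:  ", outcome_reward(42, 42), process_reward(honest, APPROVED))
print("shortcut:", outcome_reward(42, 42), process_reward(shortcut, APPROVED))
```

Both trajectories earn the full outcome reward, but only the one whose every step is approved earns the full process reward, so the shortcut is not reinforced.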

Understanding Generalization

Mechanistic interpretability work reverse-engineers the computations performed by neural networks. Anthropic also seeks a more detailed understanding of the training procedures for large language models (LLMs).

LLMs have demonstrated a variety of surprising new behaviors, ranging from astonishing creativity to self-preservation to deception. All these behaviors come from training data, but the process is complicated:

The model is first "pre-trained" on a large amount of raw text, from which it learns a wide range of representations and the ability to simulate different agents. It is then fine-tuned in various ways, some of which may have surprising consequences.

Because the fine-tuning phase is heavily over-parameterized, the learned model depends heavily on the implicit biases of pre-training, which arise from the complex web of representations built up by pre-training on a large share of the world's knowledge.

When a model behaves in a worrisome way, such as when it plays the role of a deceptive AI, is it simply regurgitating a near-identical training sequence, which would be harmless? Or has this behavior (and even the beliefs and values that lead to it) become such an integral part of the model's conception of an AI assistant that it applies the behavior in different contexts?

Anthropic is working on a technique that attempts to trace the model's output back to the training data to identify important clues that can help understand this behavior.

Testing of Dangerous Failure Modes

A key concern is that advanced artificial intelligence may develop harmful emergent behaviors, such as deception or strategic planning abilities, that are absent in smaller and less capable systems.

Anthropic believes that the way to anticipate this problem before it becomes an immediate threat is to set up environments in which these properties are deliberately trained into small-scale models. Because these models are not powerful enough to pose a danger, they can be isolated and studied.

Anthropic is particularly interested in how AI systems behave when they are "situationally aware": for example, when they realize they are an AI talking to a human in a training environment, how does this affect their behavior during training? Could AI systems become deceptive, or develop surprising and undesirable goals?

Ideally, they would like to build detailed quantitative models of how these tendencies change with scale, so that sudden and dangerous failure modes can be predicted in advance.
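One very simple form such a quantitative model could take is a trend line fitted against the logarithm of model size and then extrapolated to a larger scale. The sketch below is illustrative only: the parameter counts and behavior rates are made-up placeholder numbers, not measurements, and the assumption that the trend is linear in log scale may well fail in practice, which is exactly why sudden changes are hard to predict.

```python
import math
from typing import List, Tuple


def fit_line(xs: List[float], ys: List[float]) -> Tuple[float, float]:
    """Ordinary least squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


# Made-up placeholder numbers for illustration, NOT real measurements.
param_counts = [1e7, 1e8, 1e9, 1e10]
behavior_rates = [0.02, 0.05, 0.08, 0.11]

# Fit the rate of the behavior against log10 of model size.
log_sizes = [math.log10(p) for p in param_counts]
slope, intercept = fit_line(log_sizes, behavior_rates)

# Extrapolate one order of magnitude beyond the largest model in the data.
predicted = slope * math.log10(1e11) + intercept
print(f"change per 10x scale: {slope:.3f}")
print(f"extrapolated rate at 1e11 parameters: {predicted:.3f}")
```

A fit like this only captures smooth trends; a behavior that appears abruptly at some scale would need a different model.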

At the same time, Anthropic is also concerned about the risks associated with the research itself:

If the research is conducted on smaller models, serious risks are unlikely; if it is conducted on larger, more capable models, the risks are significant. Therefore, Anthropic does not intend to conduct this kind of research on models capable of causing serious harm.

Social Impact and Assessment

A key pillar of Anthropic's research is to critically evaluate and understand the capabilities, limitations and potential social impact of artificial intelligence systems by building tools and measurements.

For example, Anthropic has published research analyzing the predictability of large language models. They looked at the high-level predictability and the unpredictability of these models and analyzed how this property can lead to harmful behavior.

In other work, they examined approaches to red teaming language models in order to find and reduce harms by probing model outputs at different model scales. More recently, they found that current language models can follow instructions to reduce bias and stereotyping.

Anthropic is very concerned about how the rapid application of artificial intelligence systems will impact society in the short, medium and long term.

By conducting rigorous research on the impact of AI today, they aim to give policymakers and researchers the insights and tools they need to help mitigate potentially major societal crises and to ensure that the benefits of AI reach people broadly.

Conclusion

Artificial intelligence will have an unprecedented impact on the world in the next ten years. Exponential growth in computing power and predictable improvements in artificial intelligence capabilities indicate that the technology of the future will be far more advanced than today's.

However, we do not yet have a solid understanding of how to ensure that these powerful systems are robustly aligned with human values, so there is no guarantee that the risk of catastrophic failure will be minimized. Therefore, we must always be prepared for less optimistic scenarios.

Through empirical research from multiple angles, the "combination punch" of safety work offered by Anthropic seems able to help address AI safety problems.

These safety recommendations from Anthropic tell us:

"Improve our understanding of how artificial intelligence systems learn and generalize to the real world, develop scalable techniques for supervising and auditing AI systems, create transparent and interpretable AI systems, train AI systems to follow safe processes rather than chase outcomes, analyze the potentially dangerous failure modes of AI and how to prevent them, and evaluate the social impact of artificial intelligence to guide policy and research."

We are still in the exploratory stage when it comes to a perfect defense against the risks of artificial intelligence, but Anthropic has pointed to a good way forward.
