Turing Award winner Jack Dongarra: How the integration of high-performance computing and AI will subvert scientific computing

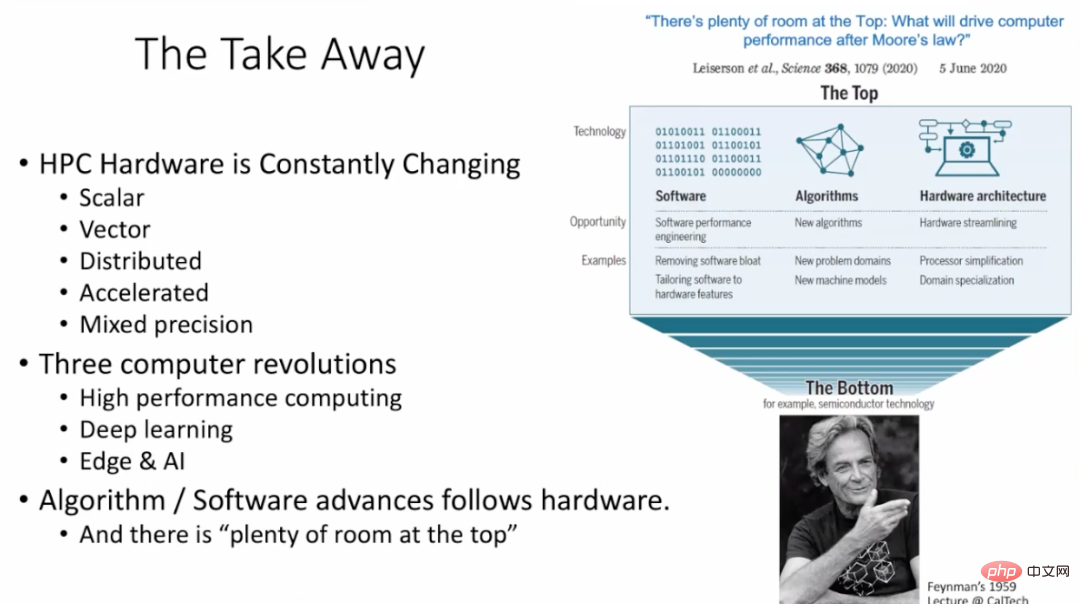

Over the past three decades, high-performance computing (HPC) has advanced rapidly and has come to play an important role in scientific computing and other fields. Cloud computing and mobile computing are gradually becoming mainstream computing paradigms, and the disruptive impact of AI methods such as deep learning has brought new challenges and opportunities for the integration of HPC and AI. At the 10th National Social Media Processing Conference (SMP 2022), Turing Award winner Jack Dongarra reviewed the most important applications and developments of high-performance computing in recent years.

Jack Dongarra is a high-performance computing expert, winner of the 2021 Turing Award, and director of the Innovative Computing Laboratory at the University of Tennessee. His pioneering contributions to numerical algorithms and libraries have enabled high-performance computing software to keep pace with exponential improvements in hardware for more than four decades. His many honors include the 2019 SIAM/ACM Prize in Computational Science and Engineering and the 2020 IEEE Computer Pioneer Award for leadership in high-performance mathematical software. He is a Fellow of AAAS, ACM, IEEE, and SIAM, a Foreign Member of the Royal Society, and a member of the National Academy of Engineering.

1 High performance computing is widely used in the "third pole" of science

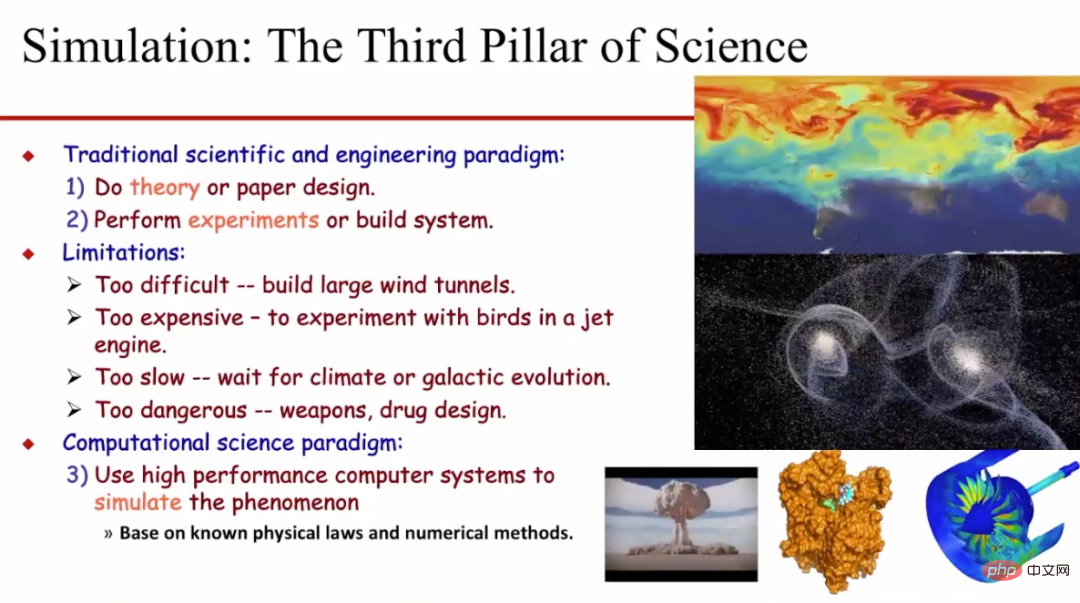

Currently, high-performance computing (HPC) methods are widely used in scientific research simulation, and simulation is also known as the "third pole" of scientific research. Historically, scientific research and engineering research have usually adopted paradigms based on theory and experiment. However, these two methods have many inherent limitations. For example, it is usually very difficult to build large-scale wind tunnels, the cost of testing aircraft engines and bird collisions will be very expensive, and waiting to observe climate change will be very time-consuming and slow. Experiments on drugs and weapons will be very dangerous and so on. In addition, we sometimes cannot study certain problems experimentally, such as studying the movement of galaxies and developing new drugs. Therefore, researchers are gradually using scientific computing methods to conduct simulations and study such problems. This method is usually based on known physical laws and digital calculation methods, and simulates the corresponding physical phenomena through high-performance computer systems.

2 The pinnacle of computing power - supercomputer

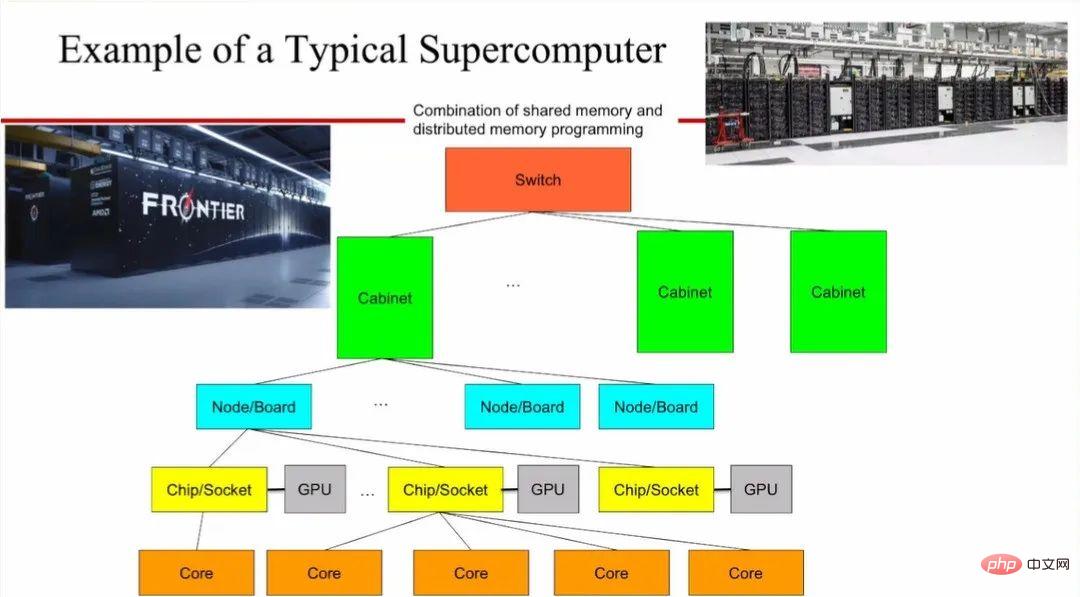

A typical supercomputer is generally built from mature commodity chips. Multiple chips are integrated on a single board, and each chip has multiple cores. A graphics processing unit (GPU) is usually added to the board as an accelerator to boost computing power. Within a rack cabinet, boards communicate over high-speed links, and cabinets are interconnected through switches. A supercomputer built this way may occupy a space as large as two tennis courts.

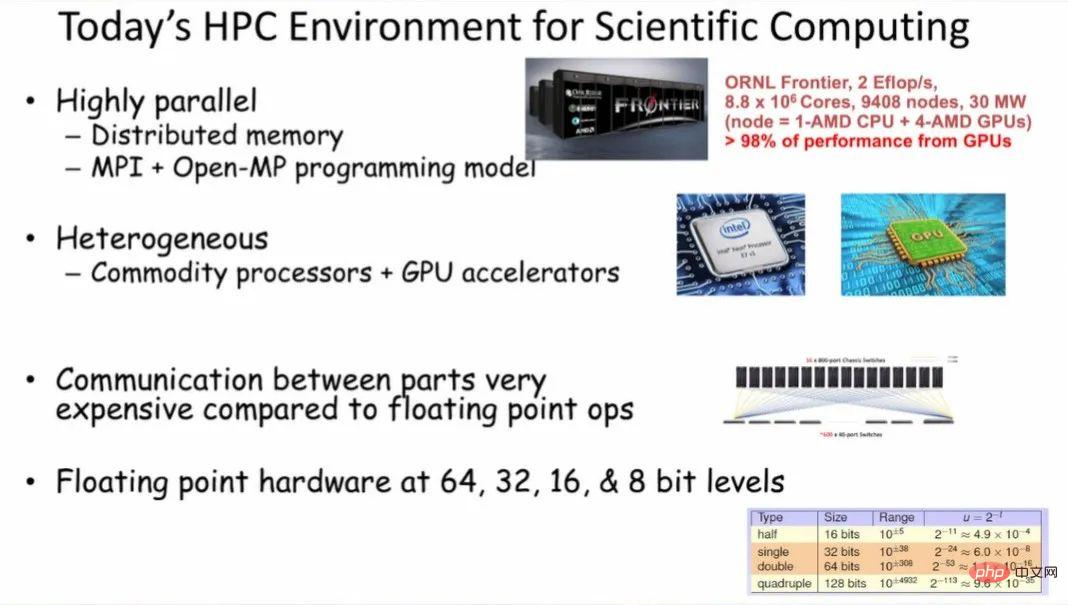

Such supercomputers are highly parallel and usually use distributed memory with the "MPI + OpenMP" programming paradigm. Moving data between different parts of an HPC system is very expensive compared with performing floating-point operations on that data. Existing supercomputers support floating-point arithmetic at several precisions, including 64-, 32-, 16-, and 8-bit widths.
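One common way to reason about the cost of data movement is a roofline-style bound, in which attainable performance is limited either by peak compute or by how fast data can be delivered. The sketch below is only an illustration: the roofline framing is not taken from the talk, and the peak rate, the bandwidth, and the 50 flops-per-byte figure are all hypothetical values chosen for the example.

```python
def attainable_flops(peak_flops, bandwidth_bytes_per_s, flops_per_byte):
    """Roofline-style bound: capped by compute or by data movement."""
    return min(peak_flops, bandwidth_bytes_per_s * flops_per_byte)

# Hypothetical device, not a real machine.
PEAK = 10e12        # 10 Tflop/s peak compute (assumed)
BANDWIDTH = 1e12    # 1 TB/s memory bandwidth (assumed)

# 64-bit vector add c = a + b: one flop for every 24 bytes moved
# (two 8-byte loads and one 8-byte store).
vector_add = attainable_flops(PEAK, BANDWIDTH, 1 / 24)

# A kernel that reuses data heavily, e.g. a blocked matrix multiply;
# 50 flops per byte is an assumed figure for illustration.
matmul = attainable_flops(PEAK, BANDWIDTH, 50)

print(f"vector add: {vector_add / PEAK:.2%} of peak")
print(f"matmul:     {matmul / PEAK:.2%} of peak")
```

Under these assumed numbers, the vector add is limited by data movement and reaches well under 1% of peak, while the data-reusing kernel is limited only by compute.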

Currently, the fastest supercomputers deliver Exaflop/s-level performance (10^18 floating-point operations per second). This is an enormous number: if every person completed one multiply-add operation per second, it would take everyone in the world four years to finish what such a supercomputer completes in one second. Keeping such a machine running also costs tens of millions of dollars in electricity every year.
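The "four years" comparison can be checked with a few lines of arithmetic. This is a rough sketch: the world population of about 8 billion and the 365.25-day year are assumptions made here, not figures from the talk.

```python
# Rough check of the "four years" comparison.
EXAFLOP_OPERATIONS = 10**18            # operations the machine completes in one second
WORLD_POPULATION = 8 * 10**9           # assumed: about 8 billion people
SECONDS_PER_YEAR = 365.25 * 24 * 3600  # assumed: average year length

def years_for_humanity(operations, people):
    """Years needed if every person performs one operation per second."""
    seconds = operations / people
    return seconds / SECONDS_PER_YEAR

years = years_for_humanity(EXAFLOP_OPERATIONS, WORLD_POPULATION)
print(f"{years:.2f} years")  # prints "3.96 years"
```

With these assumptions the result is just under four years, which matches the figure quoted above.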

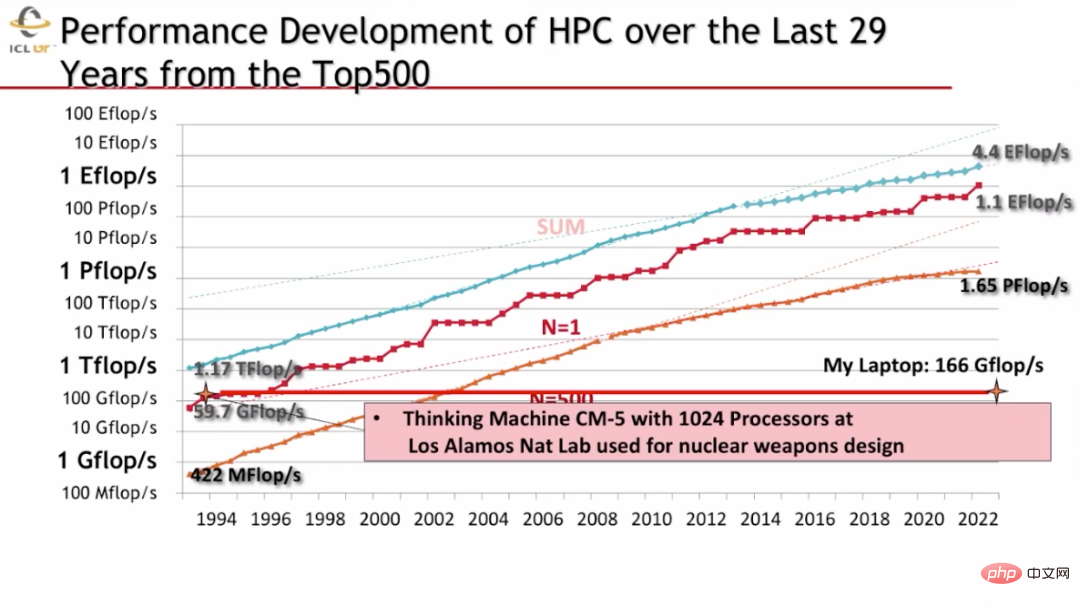

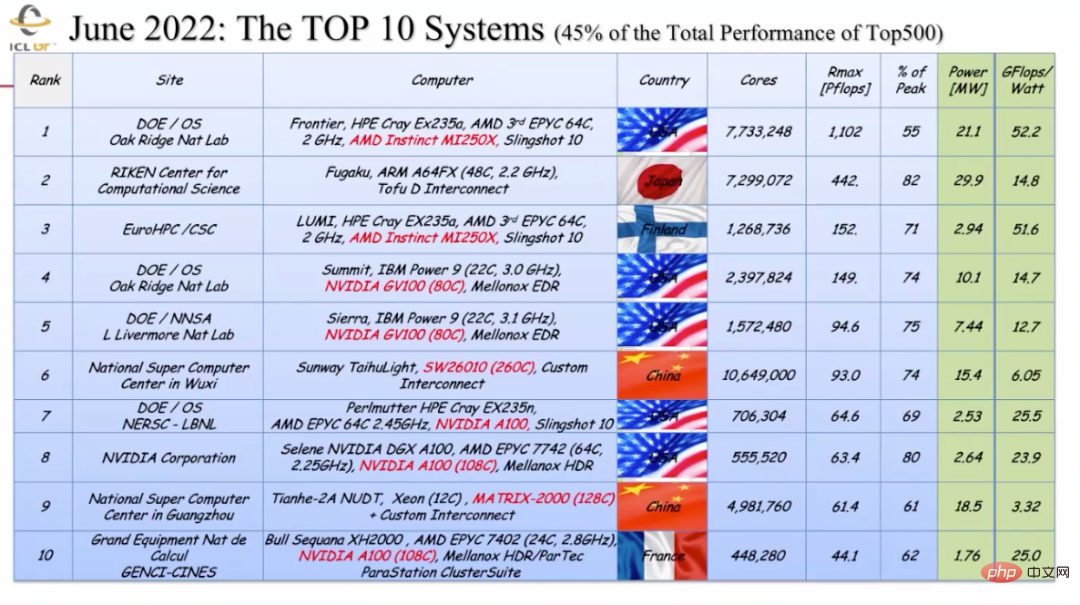

The performance of the world's TOP500 supercomputers over the past thirty years shows that supercomputing performance has grown at an almost exponential rate. Interestingly, a MacBook in use today is more powerful than the world's most advanced supercomputer of 1993, which was built at Los Alamos National Laboratory and was used mainly for nuclear weapons design. Data from June 2022 show that among the top 10 supercomputers in the world, 5 are from the United States, 2 are from China (located in Wuxi and Guangzhou), and the remaining 3 are from Finland, Japan and France.

3 “Harmonious but Different” HPC & ML/AI

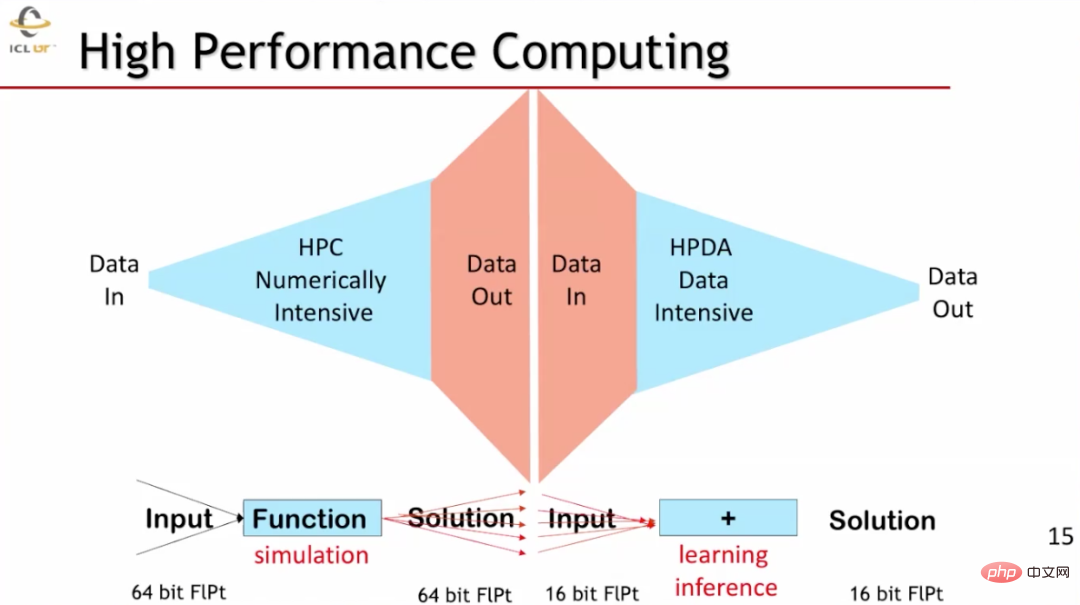

HPC and ML workloads have similar yet distinct characteristics. HPC is numerically intensive: it usually takes a very limited amount of input data and, after a very large number of numerical calculations, outputs a large amount of data. High-performance data analytics (HPDA) in the ML field usually takes a large amount of input data but outputs relatively little. The numerical precision used by the two is also very different. High-performance computing scenarios such as scientific simulation usually use 64-bit floating-point data, while machine learning scenarios often use 16-bit floating-point data.
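The difference between these precisions can be seen by storing the same value at each width and reading it back. The sketch below uses only the Python standard library `struct` module, whose `d`, `f`, and `e` format codes correspond to IEEE 754 64-bit, 32-bit, and 16-bit floats; the test value is an arbitrary choice for illustration.

```python
import struct

def round_trip(value, fmt):
    """Store value in the given IEEE 754 format and read it back."""
    return struct.unpack(fmt, struct.pack(fmt, value))[0]

# 1 + 2**-12 needs 12 fraction bits: it fits in 64-bit (52 fraction bits)
# and 32-bit (23 fraction bits), but not in 16-bit (10 fraction bits).
x = 1.0 + 2**-12

fp64 = round_trip(x, "<d")
fp32 = round_trip(x, "<f")
fp16 = round_trip(x, "<e")

print(fp64 == x)  # True: exactly representable
print(fp32 == x)  # True: exactly representable
print(fp16)       # 1.0: the small increment is rounded away
```

A long-running simulation that accumulates many small increments would lose them at 16-bit width, which is one reason scientific codes tend to stay at 64 bits while ML training can tolerate less.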

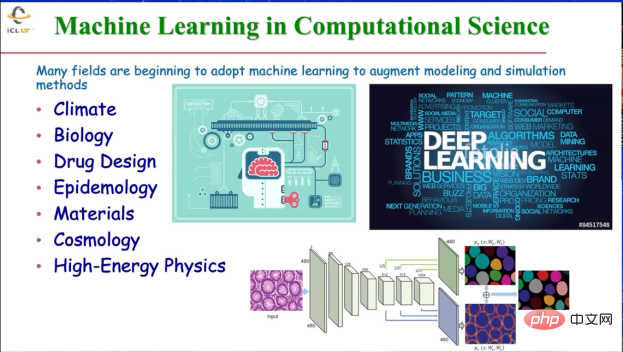

AI plays a very important role in many different aspects of scientific research: it can assist scientific discovery in different fields, improve the performance of computing architectures, and manage and process large amounts of data at the edge. In scientific computing, techniques such as machine learning are therefore being applied in many disciplines, including climatology, biology, pharmacology, epidemiology, materials science, cosmology and even high-energy physics, to provide enhanced models and more advanced simulation methods. For example, deep learning is used to assist drug development, predict epidemics, and classify tumors based on medical imaging.

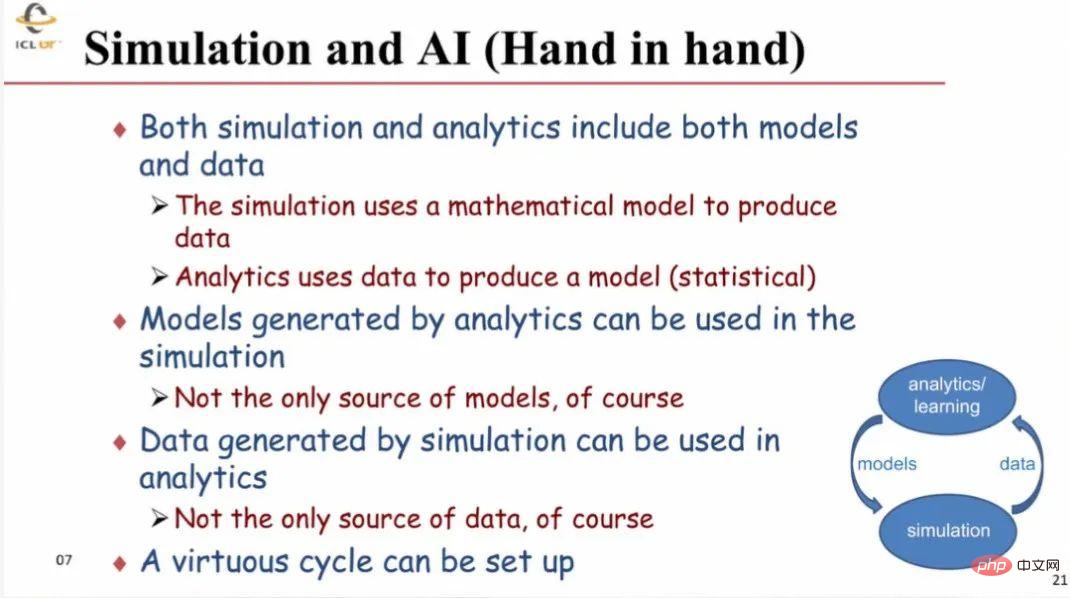

Scientific research simulation and AI computing can be combined very effectively because both require models and data. Typically, simulation uses (mathematical) models to generate data, and (AI) analytics uses data to generate models. Models obtained using analytical methods can be used in simulations together with other models; data generated by simulations can be used in analysis together with data from other sources. This forms a virtuous cycle of mutual promotion.
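The cycle described above can be sketched in miniature. In this toy example a simple linear law stands in for the mathematical model, and a least-squares fit stands in for AI analytics; both choices, along with the function names and the numbers, are assumptions made purely for illustration.

```python
def simulate(slope, intercept, times):
    """Simulation step: a known mathematical model generates data."""
    return [slope * t + intercept for t in times]

def fit_line(times, values):
    """Analytics step: a least-squares fit turns data back into a model."""
    n = len(times)
    mean_t = sum(times) / n
    mean_v = sum(values) / n
    cov = sum((t - mean_t) * (v - mean_v) for t, v in zip(times, values))
    var = sum((t - mean_t) ** 2 for t in times)
    slope = cov / var
    return slope, mean_v - slope * mean_t

times = [0.0, 1.0, 2.0, 3.0, 4.0]
data = simulate(2.0, 1.0, times)                # model -> data
slope, intercept = fit_line(times, data)        # data -> model
prediction = simulate(slope, intercept, [5.0])  # fitted model drives a new simulation

print(slope, intercept, prediction)  # 2.0 1.0 [11.0]
```

In practice the data would also include measurements from other sources, and the learned model would be far richer than a straight line, but the flow of models and data between the two steps is the same.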

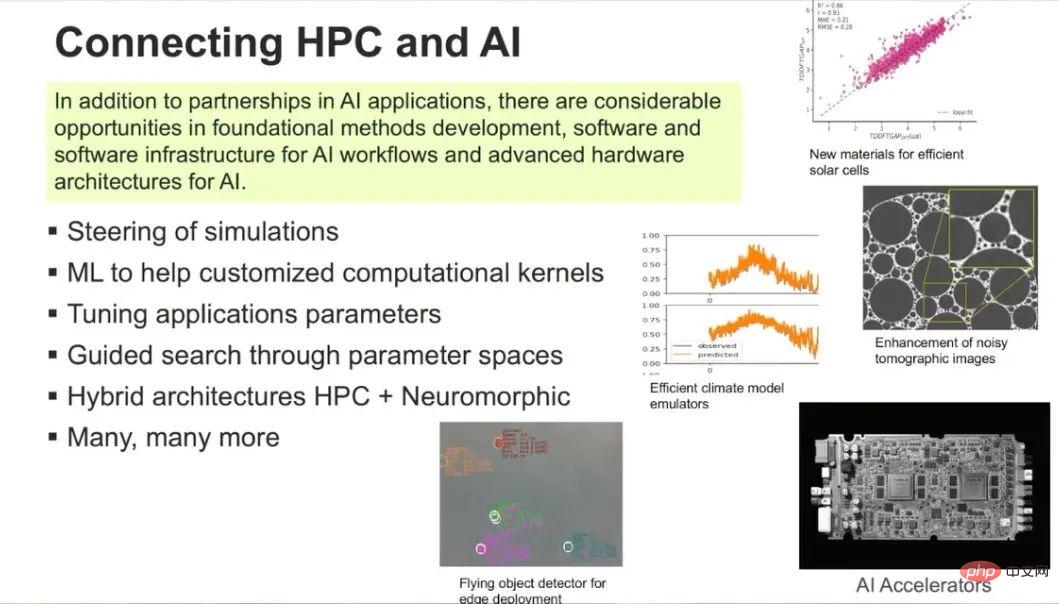

In addition to complementing each other in specific application fields, HPC and AI are closely connected in basic development methods, software and software infrastructure, and AI hardware architecture. The connections extend further still: for example, AI can be used to guide simulations, tune the parameters of simulation applications faster, provide customized computing kernels, and combine traditional HPC with neuromorphic computing. AI and ML have a disruptive influence; as is often said: "AI and ML will not replace scientists, but scientists who use AI and ML tools will replace those who do not."

4 Looking to the future: HPC systems will be more customized

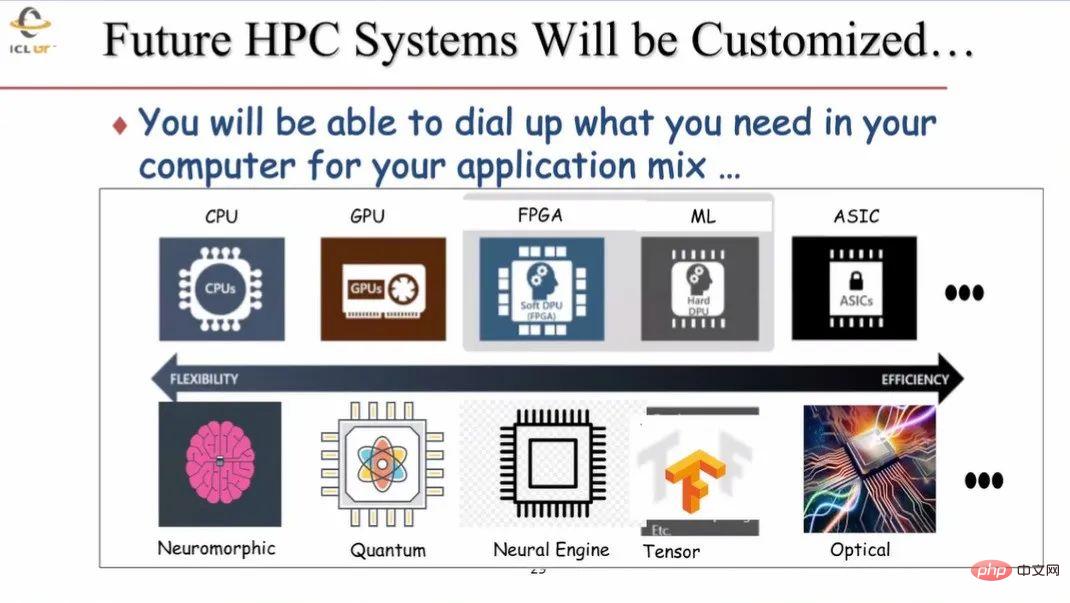

Future HPC systems will be customizable. Today HPC relies mainly on two types of processors, CPUs and GPUs. In the future, a wider variety of units will be used, such as FPGAs, ML accelerators and ASICs. Processors based on different structures and paradigms, such as neuromorphic processing, quantum computing and optical computing, will increasingly be added to HPC systems and may play an increasingly important role. When building new HPC systems, people will be able to select the modules and functions they need.

5 Summary

HPC hardware is constantly evolving, from scalar machines and vector machines to distributed systems, accelerators and mixed-precision machines. Three major changes are currently taking place in the computer field: high-performance computing, deep learning, and edge computing and artificial intelligence. Algorithms and software should continue to evolve along with the hardware. As the paper by Leiserson et al. argues, even after Moore's Law there is still a lot of room to improve the ultimate performance of HPC systems through algorithms, software and hardware architecture.

6 Q&A

Question 1: Industry, government and academia are all paying more attention to the training of large neural network models. For example, GPT-3 has more than 170 billion parameters, which usually requires 100 high-performance GPUs to train for 1 to 3 months. Will high-performance computers be able to complete such training in a few days or hours in the future?

Answer 1: GPUs give computers powerful numerical computing capabilities. For example, 98% of the computing power in supercomputers comes from GPUs. However, moving data between the CPU and the GPU is very time-consuming. To reduce this costly data movement, the GPU and CPU can be brought closer together, using chip design methods such as chiplets or more practical implementation paths. In addition, bringing the data directly closer to the units that process it will also help greatly in reducing the high cost of data movement.

Question 2: We have observed a phenomenon that many current machine learning algorithms can evolve together with the hardware and influence each other. For example, Nvidia and other companies have specially designed a dedicated architecture for the Transformer model, which currently has the best performance in the ML field, making the Transformer easier to use. Have you observed such a phenomenon and what are your comments?

Answer 2: This is a very good example of how hardware design and other aspects reinforce each other. Currently, many hardware researchers pay close attention to changes in the industry and make judgments on trends. Co-designing applications with hardware can significantly improve performance and sell more hardware. I agree with this statement of "algorithms and hardware co-evolving".

Question 3: You pointed out that the future of high-performance computing will be a heterogeneous hybrid. Integrating these parts will be a very difficult problem and may even lead to performance degradation. If we just purely use the GPU, it may result in better performance. What do you think?

Answer 3: Currently, the CPU and GPU are very loosely coupled in high-performance computers, and data needs to be transferred from the CPU to the GPU for calculation. In the future, the trend of coupling different kinds of hardware together will continue. For example, specialized hardware for ML calculations can further enhance the GPU. ML-related algorithms are loaded onto the corresponding accelerator, the details of the algorithm are executed there, and the results are transmitted back to the corresponding processor. In the future, pluggable quantum accelerators could also be added to execute the corresponding quantum algorithms.

Question 4: HPC is very expensive, especially for researchers and small and medium-sized enterprises. Are there methods like cloud computing that can make HPC affordable for teachers, students and small and medium-sized enterprises engaged in research?

Answer 4: In the United States, using HPC requires submitting a relevant application to the relevant department, describing the problem being studied and the amount of calculation required. If approved, there is no need to worry about the cost of HPC use. A study was conducted in the United States on whether all HPC should be converted to cloud-based systems. The results show that cloud-based solutions are 2-3 times more expensive than directly using HPC systems. It is important to note the economic assumptions behind this: HPC is used by enough people, and the problems that need to be solved sometimes require the use of the entire HPC system. In this case, having a dedicated HPC is better than purchasing cloud services. This is the current situation observed in the United States and Europe.
