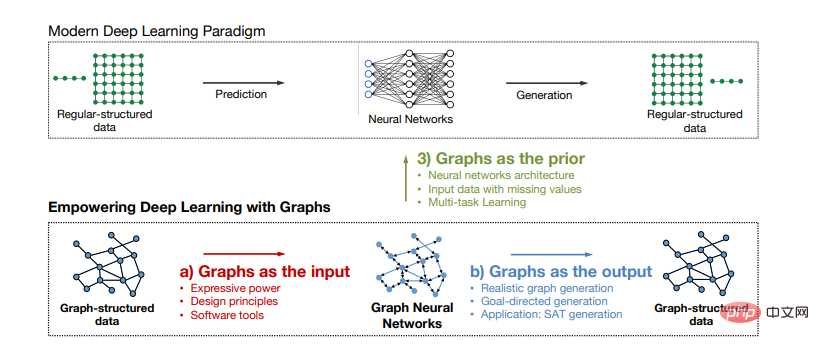

Topological aesthetics in deep learning: GNN foundation and application

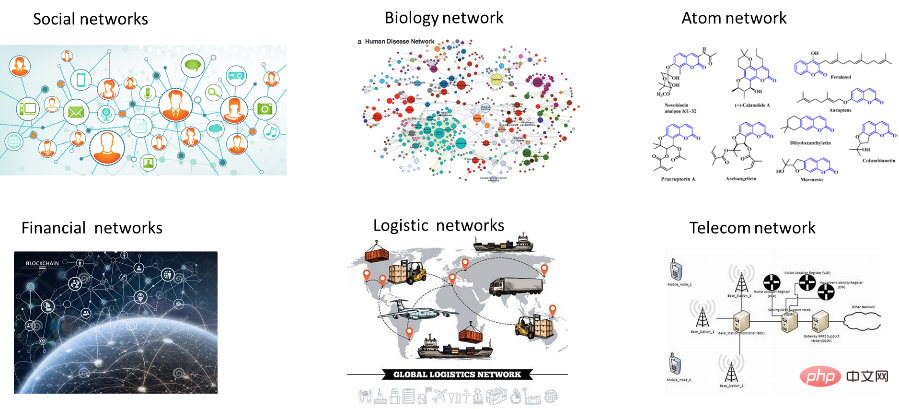

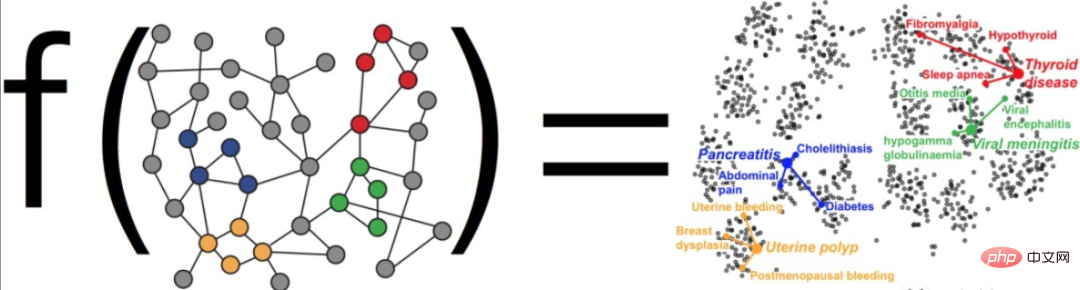

Introduction: In the real world, much data naturally takes the form of graphs, such as social networks, e-commerce shopping records, and protein interaction networks. In the past few years, neural-network-based methods for graph data analysis and mining have received widespread attention thanks to their excellent performance. They have become a research hotspot in academia and have also shone in a variety of applications. This article draws on the relevant literature, talks by experts in the field, and the author's own limited experience to give a rough summary. Although it is mostly a relay of existing knowledge, it is also mixed with personal judgment, so bias and omissions are inevitable; please read it with caution. I happened to finish writing on Christmas Eve, so I also take this opportunity to wish everyone a safe and happy new year in which all their wishes come true.

1. Overview of the Development of Graph Neural Networks

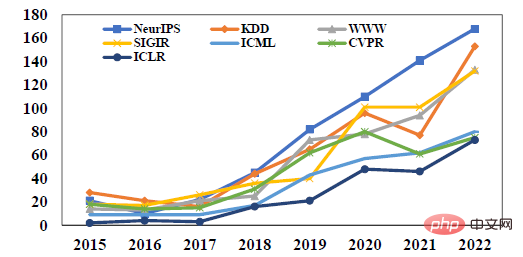

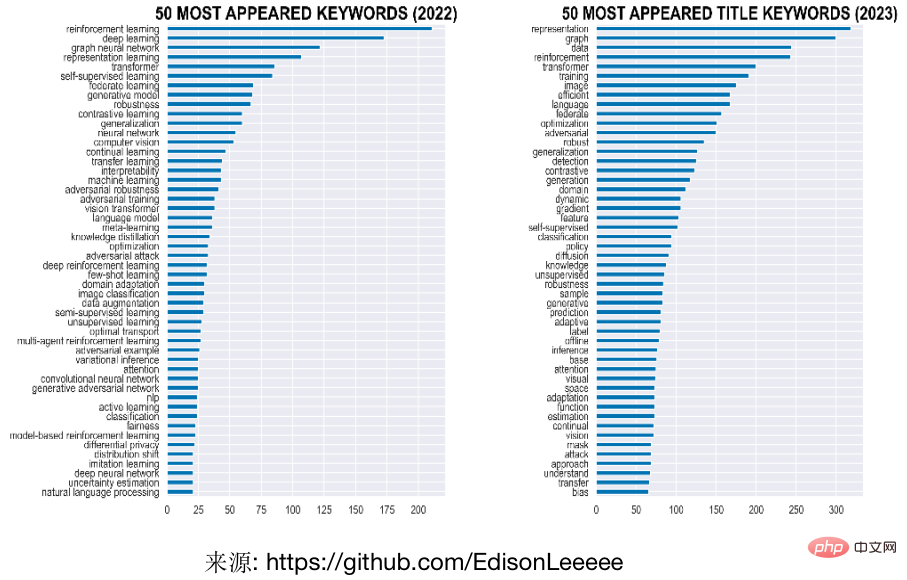

In recent years, research on modeling and analyzing graph structures has attracted more and more attention. Among these methods, the Graph Neural Network (GNN), a deep-learning-based approach to graph modeling, has become one of the research hotspots in academia thanks to its excellent performance. For example, as shown in the figure below, the number of graph neural network papers at top machine learning conferences keeps rising, and "graph" has been one of the most popular title and keyword terms at ICLR, the top conference on representation learning, for the past two years. In addition, graph neural networks featured in the best paper awards at many conferences this year. For example, the winner and runner-up of the best doctoral dissertation award at KDD, the top data mining conference, were both young scholars working on graph machine learning, and the best research paper and best applied paper were on causal learning on hypergraphs and federated graph learning respectively. Graph neural networks also have many practical applications in e-commerce search, recommendation, online advertising, financial risk control, traffic prediction, and other fields, and major companies are working hard to build graph learning platforms and capabilities.

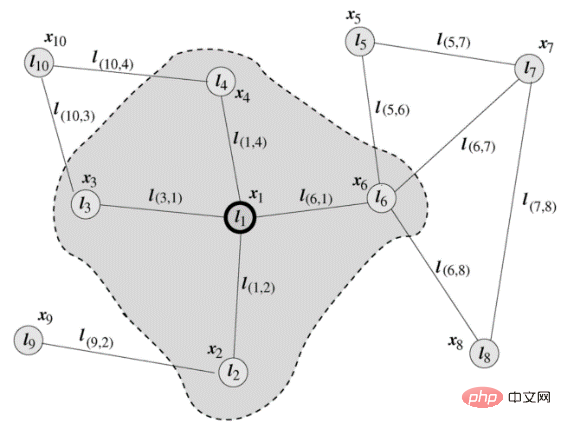

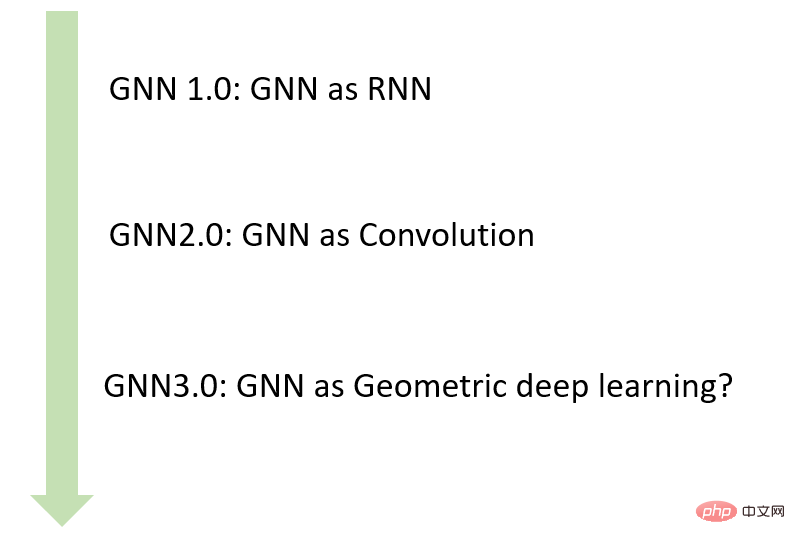

Although graph neural networks have only become a research hotspot in the past five years, the concept itself was proposed by the Italian scholars Marco Gori and Franco Scarselli back in 2005. A typical diagram from Scarselli's paper is shown below. Early GNNs mainly used an RNN as the main framework: they took the information of a node's neighbors as input to update the node's state, and defined the local transfer function as a recurrent function. Each node uses its neighboring nodes and connecting edges as source information to update its own representation.

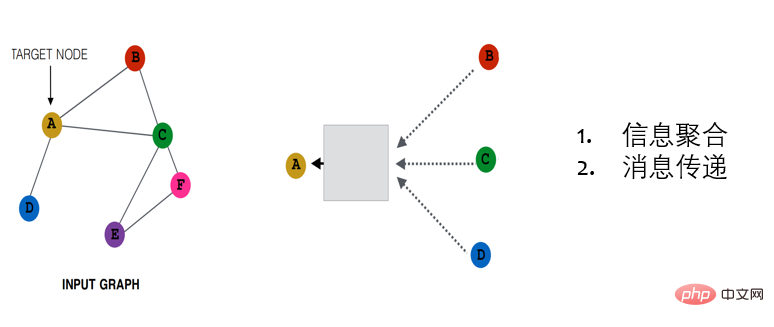

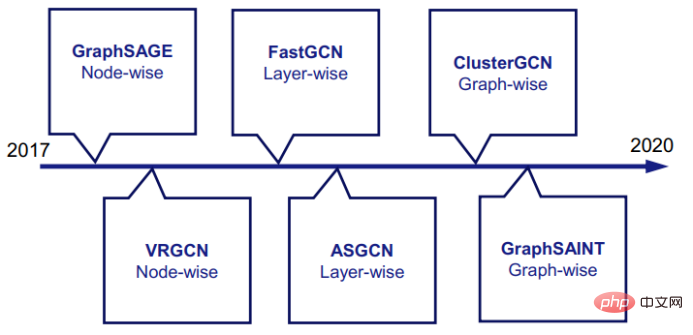

Bruna, a student of LeCun, and colleagues proposed applying CNNs to graphs in 2014. Through a clever reformulation of the convolution operator, they proposed two kinds of information aggregation for graph convolutional networks, one based on the frequency (spectral) domain and one on the spatial domain. Spectral methods introduce filters from the perspective of graph signal processing to define graph convolution, where the graph convolution operation is interpreted as removing noise from the graph signal. Spatial methods are more in line with the CNN paradigm and express graph convolution as aggregating feature information from a node's neighborhood. In the following years, new models were proposed sporadically, but the area remained a relatively niche research direction. It was not until 2017 that a series of works represented by the "three musketeers" of graph models, GCN, GAT, and GraphSAGE, broke down the computational barrier between graph data and convolutional neural networks. They gradually turned graph neural networks into a research hotspot and laid the foundation for today's basic paradigm of graph neural network models, the message-passing neural network (MPNN).

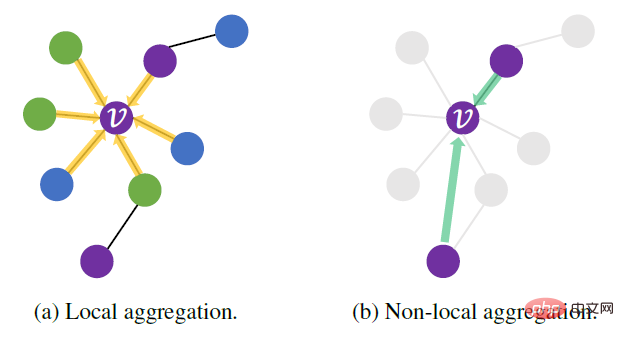

A typical MPNN architecture consists of several propagation layers, in which each node is updated based on an aggregation function of its neighbors' features. According to the aggregation function, MPNNs can be divided into three types: information aggregation (a linear combination of neighbor features whose weights depend only on the graph structure, as in GCN), attention (a linear combination whose weights depend on both the graph structure and the features, as in GAT), and message passing (general nonlinear functions, as in GraphSAGE), shown from left to right in the figure below.
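To make the difference between the first two aggregation types concrete, here is a minimal pure-Python sketch. It is an illustration under stated assumptions, not the published GCN or GAT implementations: the function names are made up for this example, learned weight matrices and nonlinearities are omitted, and a plain dot product stands in for GAT's learned attention scoring.

```python
import math

def gcn_aggregate(adj, feats):
    """GCN-style aggregation: weights depend only on the graph structure."""
    n = len(adj)
    # Add self-loops, then weight edge (i, j) by 1 / sqrt(deg_i * deg_j).
    a_hat = [[adj[i][j] + (1 if i == j else 0) for j in range(n)] for i in range(n)]
    deg = [sum(row) for row in a_hat]
    dim = len(feats[0])
    out = []
    for i in range(n):
        row = [0.0] * dim
        for j in range(n):
            if a_hat[i][j]:
                w = a_hat[i][j] / math.sqrt(deg[i] * deg[j])
                for k in range(dim):
                    row[k] += w * feats[j][k]
        out.append(row)
    return out

def attention_weights(adj, feats, i):
    """Attention-style weights for node i: they depend on structure AND features.

    Assumption: a dot product replaces the learned scoring function of GAT.
    """
    nbrs = [j for j in range(len(adj)) if adj[i][j] or j == i]
    scores = [sum(a * b for a, b in zip(feats[i], feats[j])) for j in nbrs]
    top = max(scores)  # subtract the max for a numerically stable softmax
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return {j: e / total for j, e in zip(nbrs, exps)}

def attention_aggregate(adj, feats):
    """Linear combination of neighbor features using the attention weights."""
    dim = len(feats[0])
    out = []
    for i in range(len(adj)):
        w = attention_weights(adj, feats, i)
        out.append([sum(w[j] * feats[j][k] for j in w) for k in range(dim)])
    return out

# Path graph 0 - 1 - 2 with 2-dimensional node features.
ADJ = [[0, 1, 0], [1, 0, 1], [0, 1, 0]]
FEATS = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
```

In `gcn_aggregate` the weights would stay the same if the features changed, while in `attention_weights` node 1 gives more weight to itself and to node 2 than to node 0, because their features are more similar to its own.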

From the perspective of inference, GNNs can also be divided into transductive methods (such as GCN) and inductive methods (such as GraphSAGE). A transductive method learns a unique representation for each node, but the limitation of this approach is obvious: in most industrial business scenarios, the structure and nodes of the graph are not fixed but keep changing. For example, new users keep appearing in the user set, the set of follow relationships between users keeps growing, and a large number of new articles are added to content platforms every day. In such scenarios, transductive learning requires constant retraining to learn representations for new nodes. An inductive method instead learns the "aggregation function" over a node's neighbor features, so it can be applied in more flexible settings, such as representing new nodes or handling changes in graph structure, and is therefore suitable for the dynamically changing graphs found in practice.
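The inductive idea can be sketched in a few lines. The example below is a simplified, GraphSAGE-inspired mean aggregator with hypothetical names and toy data; a real model would also apply a learned weight matrix and a nonlinearity after the concatenation. The point it illustrates is that the same function works for a node that did not exist when the function was defined.

```python
def mean_aggregate(node, neighbors, feats):
    """Concatenate a node's own features with the mean of its neighbors' features.

    Nothing here is tied to a particular node identity, so the function
    applies unchanged to nodes added later.
    """
    nbrs = neighbors[node]
    dim = len(feats[node])
    if nbrs:
        mean = [sum(feats[n][k] for n in nbrs) / len(nbrs) for k in range(dim)]
    else:
        mean = [0.0] * dim
    return feats[node] + mean  # list concatenation: [self features, neighbor mean]

# Toy graph with two existing users (hypothetical data).
FEATS = {"u1": [1.0, 0.0], "u2": [0.0, 1.0]}
NEIGHBORS = {"u1": ["u2"], "u2": ["u1"]}

# A new user arrives and connects to both existing users.
FEATS["u3"] = [0.5, 0.5]
NEIGHBORS["u3"] = ["u1", "u2"]
NEIGHBORS["u1"].append("u3")
NEIGHBORS["u2"].append("u3")

# No retraining step: the same aggregation function handles the new node.
NEW_USER_REPR = mean_aggregate("u3", NEIGHBORS, FEATS)
```

A transductive model, by contrast, would hold one embedding row per known node and would have no entry for `u3` until it was retrained.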

As graph neural networks have developed, new models have been proposed one generation after another to address the accuracy and scalability of graph computation. Although the ability of graph neural networks to represent graph data is beyond doubt, new model design has relied mainly on empirical intuition, heuristics, and experimental trial and error. GIN (Graph Isomorphism Network), a 2019 work involving Jure Leskovec's group, established a connection between GNNs and the classic Weisfeiler-Lehman (WL) heuristic for graph isomorphism testing, and proved theoretically that the expressive power of GNNs is upper-bounded by 1-WL. (Jure is currently an associate professor of computer science at Stanford University. The SNAP lab he leads is one of the best-known labs in the graph field, and his course CS224W, "Machine Learning with Graphs", is highly recommended learning material.) However, the WL test has very limited expressive power in many data scenarios, such as the two examples in the figure below. For the Circular Skip Link (CSL) graphs in (a), 1-WL assigns the same color to every node in both graphs. In other words, although these are clearly two graphs with completely different structures, the 1-WL test gives them the same labeling. The second example is the decalin molecule shown in (b). 1-WL gives a and b the same color, and c and d the same color, so in a link prediction task the pairs (a, d) and (b, d) are indistinguishable.
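The limitation of 1-WL can be reproduced with a small color refinement sketch. The graphs below are simpler stand-ins chosen for this illustration, not the CSL graphs from the figure: a 6-cycle and two disjoint triangles are both 2-regular, so 1-WL cannot tell them apart even though they are not isomorphic. The function name and data layout are assumptions made for the example.

```python
from collections import Counter

def wl_histograms(graph_a, graph_b, rounds=3):
    """Run 1-WL color refinement on two graphs with a shared color palette.

    Each graph is a dict mapping a node to the list of its neighbors.
    Returns the color histogram of each graph after the given number of rounds.
    """
    # Refine both graphs together so that color ids are comparable.
    union = {("a", v): [("a", u) for u in nbrs] for v, nbrs in graph_a.items()}
    union.update({("b", v): [("b", u) for u in nbrs] for v, nbrs in graph_b.items()})
    colors = {v: 0 for v in union}
    for _ in range(rounds):
        # New color = own color plus the sorted multiset of neighbor colors.
        sigs = {v: (colors[v], tuple(sorted(colors[u] for u in union[v]))) for v in union}
        palette = {s: i for i, s in enumerate(sorted(set(sigs.values())))}
        colors = {v: palette[sigs[v]] for v in union}
    hist_a = Counter(c for (g, _), c in colors.items() if g == "a")
    hist_b = Counter(c for (g, _), c in colors.items() if g == "b")
    return hist_a, hist_b

SIX_CYCLE = {i: [(i - 1) % 6, (i + 1) % 6] for i in range(6)}
TWO_TRIANGLES = {0: [1, 2], 1: [0, 2], 2: [0, 1], 3: [4, 5], 4: [3, 5], 5: [3, 4]}
PATH = {0: [1], 1: [0, 2], 2: [1, 3], 3: [2, 4], 4: [3, 5], 5: [4]}

# Same histograms: 1-WL cannot separate the cycle from the two triangles.
CYCLE_VS_TRIANGLES = wl_histograms(SIX_CYCLE, TWO_TRIANGLES)
# Different histograms: the path has two degree-1 end nodes.
CYCLE_VS_PATH = wl_histograms(SIX_CYCLE, PATH)
```

Equal histograms only mean that 1-WL fails to distinguish the graphs, not that they are isomorphic, which is exactly the gap the text describes.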

The WL test performs poorly on many datasets with triangular or cyclic structures. However, in fields such as biochemistry, cyclic structures are very common and very important, and they determine the corresponding properties of molecules, which greatly limits the applicability of graph neural networks in these scenarios. Michael Bronstein and others have argued that the current "node- and edge-centric" way of thinking in graph deep learning has serious limitations. On this basis, they proposed rethinking the development of graph learning, and possible new paradigms, from the perspective of geometric deep learning. (Michael is currently the DeepMind Professor of Artificial Intelligence at the University of Oxford, chief scientist of the graph learning research group at Twitter, and one of the main promoters of geometric deep learning.) Many scholars have also begun to explore new tools from differential geometry, algebraic topology, and differential equations, proposing equivariant graph neural networks, topological graph neural networks, subgraph neural networks, and other works that have achieved eye-catching results on many problems. Following the development of graph neural networks described here, we can make a simple summary, as shown below.

2. Complex graph model

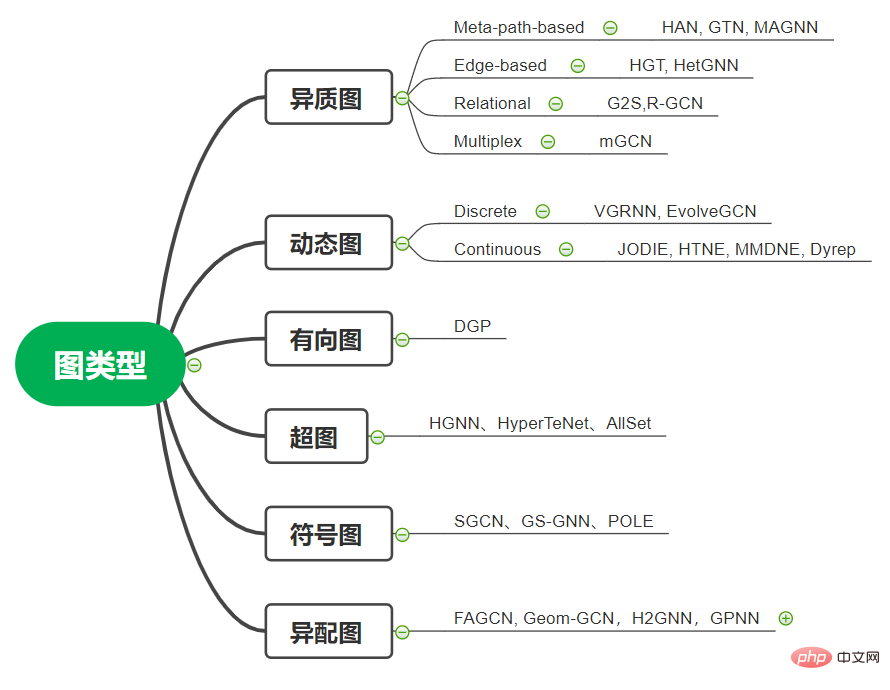

In the previous section we outlined the development of graph neural networks. The models mentioned there are basically set in the scenario of undirected, homogeneous graphs. However, real-world graphs are often more complex, and researchers have proposed methods for directed graphs, heterogeneous graphs, dynamic graphs, hypergraphs, signed graphs, and other scenarios. We briefly introduce these forms of graph data and the related models in turn:

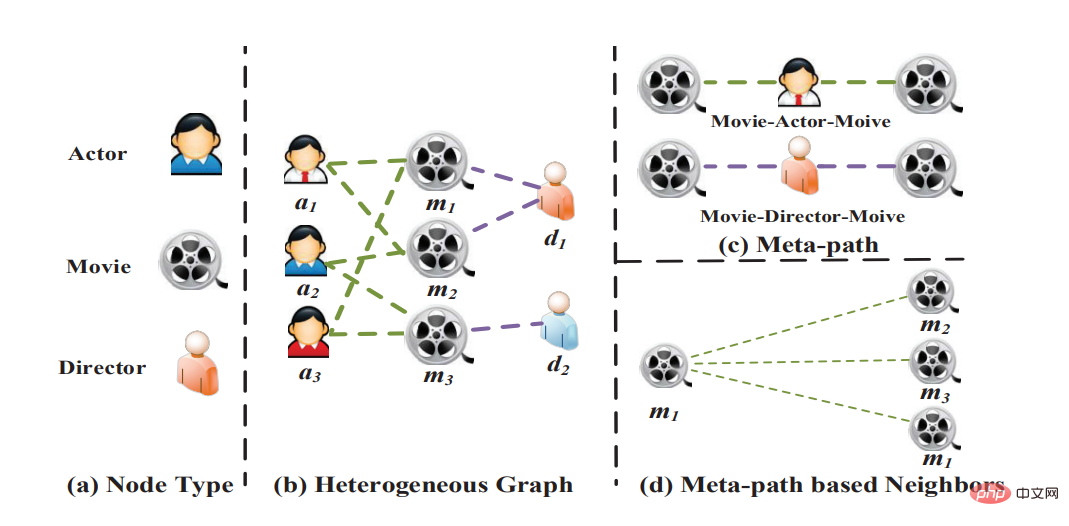

1. Heterogeneous graph: A heterogeneous graph is one in which nodes and edges fall into multiple categories and multiple modalities coexist. For example, in an e-commerce scenario, nodes can be products, stores, users, and so on, and edge types can be clicks, favorites, transactions, and so on. Specifically, in a heterogeneous graph every node and every edge carries type information. Ordinary GNN models cannot model this heterogeneous information: on the one hand, the embedding dimensions of different node types cannot be aligned; on the other hand, the embeddings of different node types lie in different semantic spaces. The most widely used learning approach for heterogeneous graphs is based on meta-paths. A meta-path specifies the node type at each position in a path. During training, meta-paths are instantiated as node sequences, and by linking the nodes at the two ends of a meta-path instance we capture the similarity of two nodes that may not be directly connected. In this way, a heterogeneous graph can be reduced to several homogeneous graphs, on which ordinary graph learning algorithms can be applied. In addition, some works propose edge-based methods for heterogeneous graphs, which use different sampling and aggregation functions for different types of neighbor nodes and edges; representative works include HetGNN and HGT. We sometimes also need to handle relational graphs, whose edges may carry information beyond their category or whose number of edge categories is very large, which makes methods based on meta-paths or meta-relations hard to use. Readers interested in heterogeneous graphs can follow the series of work by Shi Chuan and Wang Xiao of Beijing University of Posts and Telecommunications.

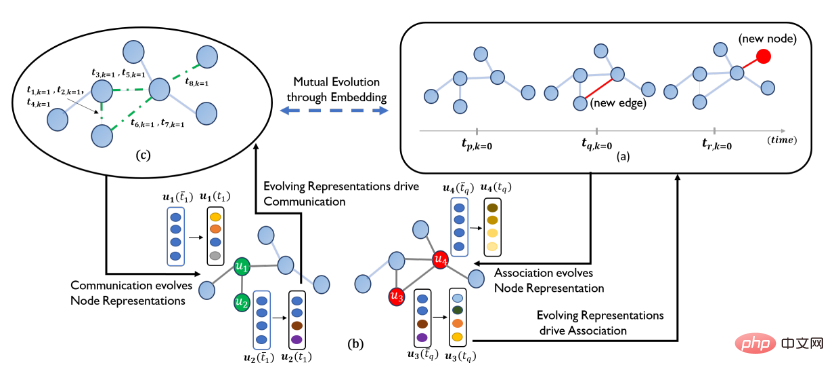
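As an illustration of the meta-path idea for heterogeneous graphs, the sketch below instantiates a User-Item-User meta-path on a toy purchase graph and reduces it to a homogeneous user-user graph. The function name and the data are hypothetical, and counting shared items as the edge weight is just one simple choice.

```python
from collections import defaultdict
from itertools import combinations

def metapath_user_item_user(purchases):
    """Link the two end nodes of every User-Item-User meta-path instance.

    purchases: iterable of (user, item) edges from the heterogeneous graph.
    Returns {(user_a, user_b): number of shared items} with user_a < user_b.
    """
    buyers = defaultdict(set)
    for user, item in purchases:
        buyers[item].add(user)
    counts = defaultdict(int)
    for users in buyers.values():
        # Every pair of users who bought the same item is one path instance.
        for u, v in combinations(sorted(users), 2):
            counts[(u, v)] += 1
    return dict(counts)

PURCHASES = [
    ("alice", "book"), ("bob", "book"),
    ("bob", "pen"), ("carol", "pen"), ("alice", "pen"),
]
USER_GRAPH = metapath_user_item_user(PURCHASES)
```

The resulting `USER_GRAPH` contains only user nodes, so an ordinary homogeneous graph algorithm can be run on it; alice and carol are linked even though they share no direct edge in the original graph.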

2. Dynamic graph: A dynamic graph is graph data whose nodes and topology evolve over time, and it is also common in practice. For example, an academic citation network keeps expanding over time, the interaction graph between users and products changes with user interests, and transportation networks and traffic flows change constantly. GNN models on dynamic graphs aim to generate node representations at a given time. Depending on the granularity of time, dynamic graphs can be divided into discrete-time dynamic graphs (also called snapshot-based) and continuous-time dynamic graphs (event-based). In a discrete-time dynamic graph, time is divided into multiple slices (for example, by day or hour), and each time slice corresponds to a static graph. GNN models for discrete-time dynamic graphs usually apply a GNN separately on each time slice and then use an RNN to aggregate each node's representations across time; representative works include DCRNN, STGCN, DGNN, and EvolveGCN. In a continuous-time dynamic graph, every edge carries a timestamp indicating the moment when the interaction event occurred. Compared with static graphs, the message function in a continuous-time dynamic graph also depends on the timestamp of the given sample and the timestamp of the edge. In addition, neighbors must respect time: for example, nodes that appear only after a given time cannot be counted among the neighbors at that time. From a modeling perspective, point processes are often used for continuous-time dynamic graphs, and the arrival rate of events is obtained by optimizing the conditional intensity function of the sequence generated from the neighborhood. This approach can also predict the specific moment at which an event occurs (such as when a particular link in the network disappears). Representative works on continuous-time dynamic graphs include JODIE, HTNE, MMDNE, and DyRep.

Source: DyRep

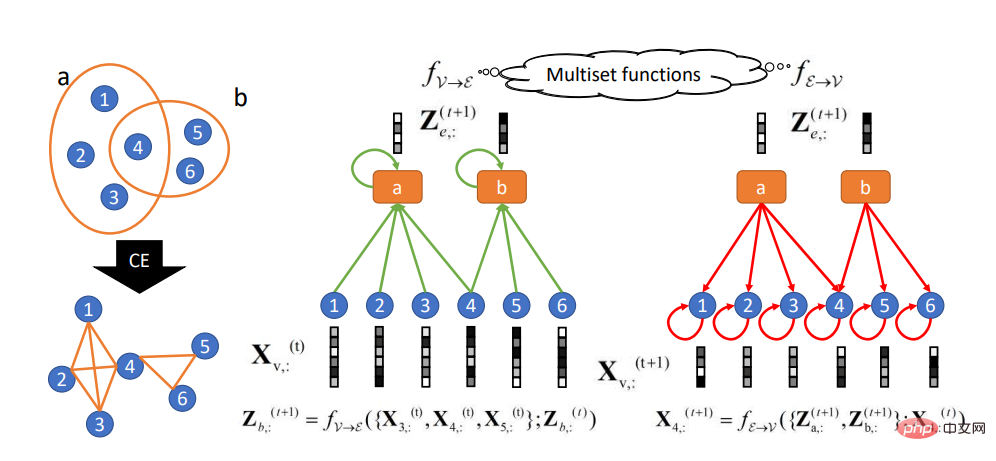
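The two views of a dynamic graph described above can be sketched on a toy event list. The function names, the event format `(src, dst, timestamp)`, and the data are assumptions for this illustration; it shows only the data handling, not any of the cited models.

```python
from collections import defaultdict

def temporal_neighbors(events, node, t):
    """Continuous-time view: neighbors of `node` from events strictly before time t.

    Returns (neighbor, timestamp) pairs ordered by time, so that interactions
    from the future never leak into the representation computed at time t.
    """
    result = []
    for src, dst, ts in events:
        if ts >= t:
            continue
        if src == node:
            result.append((dst, ts))
        elif dst == node:
            result.append((src, ts))
    return sorted(result, key=lambda pair: pair[1])

def snapshots(events, width):
    """Discrete-time view: bucket events into time slices of the given width."""
    slices = defaultdict(list)
    for src, dst, ts in events:
        slices[ts // width].append((src, dst))
    return dict(slices)

EVENTS = [("u1", "i1", 1), ("u1", "i2", 5), ("u2", "i1", 7), ("u1", "i3", 12)]

NEIGHBORS_AT_6 = temporal_neighbors(EVENTS, "u1", 6)   # only events before t = 6
SLICES = snapshots(EVENTS, 10)                         # slice 0: t in [0, 10), slice 1: [10, 20)
```

A snapshot-based model would run a static GNN on each entry of `SLICES`, while an event-based model would call something like `temporal_neighbors` for every prediction time.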

3. Hypergraph: A hypergraph is a generalized graph in which a single edge can connect any number of vertices. Early research on hypergraphs was mainly tied to computer vision applications; recently hypergraphs have also attracted attention in the graph neural network community, where the main application area is recommendation systems. For example, a pair of nodes in a graph can be associated through multiple edges of different types. By using the different edge types, we can organize the graph into several layers, each representing one type of relationship. Representative works include HGNN and AllSet.

Source: AllSet

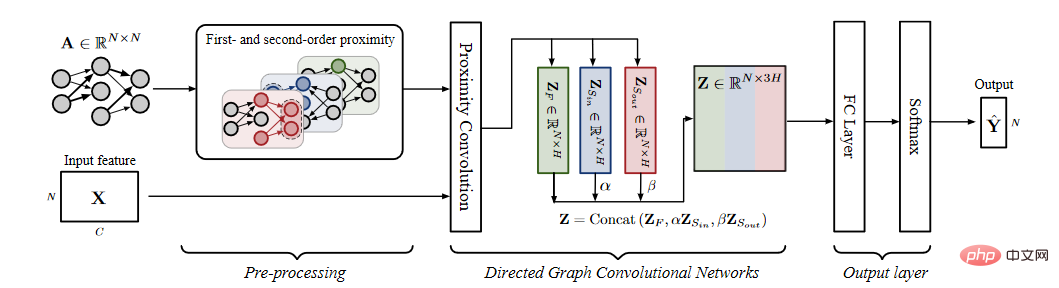
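Because a hyperedge can connect any number of vertices, propagation on a hypergraph is often described in two steps: from nodes to hyperedges, then from hyperedges back to nodes. The sketch below uses plain means for both steps. It is a simplified illustration with hypothetical names and scalar features, not the degree-normalized, learnable propagation used by models such as HGNN.

```python
def hypergraph_propagate(hyperedges, feats):
    """One round of node -> hyperedge -> node mean aggregation.

    hyperedges: list of sets of nodes; feats: dict node -> scalar feature.
    """
    # Step 1: each hyperedge takes the mean feature of the nodes it contains.
    edge_feat = [sum(feats[v] for v in edge) / len(edge) for edge in hyperedges]
    # Step 2: each node takes the mean over the hyperedges it belongs to.
    out = {}
    for v in feats:
        incident = [edge_feat[i] for i, edge in enumerate(hyperedges) if v in edge]
        out[v] = sum(incident) / len(incident) if incident else feats[v]
    return out

# One hyperedge joins three nodes at once; an ordinary edge could only join two.
HYPEREDGES = [{"a", "b", "c"}, {"c", "d"}]
FEATS = {"a": 1.0, "b": 2.0, "c": 3.0, "d": 5.0}
UPDATED = hypergraph_propagate(HYPEREDGES, FEATS)
```

Node `c` belongs to both hyperedges, so its update mixes information from all four nodes in a single round.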

4. Directed graph: In a directed graph, the connections between nodes have a direction, and directed edges often carry more information than undirected ones. For example, in a knowledge graph, if the head entity is the parent class of the tail entity, the direction of the edge conveys this partial order. For directed graphs, besides simply using an asymmetric adjacency matrix in the convolution operation, one can also model the two directions of an edge separately to obtain better representations. Representative works include DGP.

Source: DGP

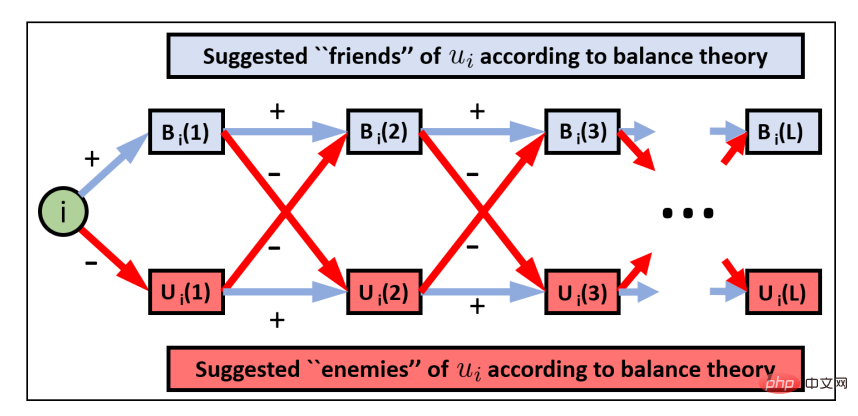
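Modeling the two directions of an edge separately can be as simple as keeping one aggregate for incoming edges and another for outgoing edges. The following sketch does this with scalar features on a tiny parent-to-child hierarchy; the function name and data are hypothetical, and it is not the DGP architecture.

```python
def directional_aggregate(edges, feats):
    """Return node -> (mean over in-neighbors, mean over out-neighbors).

    edges: list of (src, dst) pairs; feats: dict node -> scalar feature.
    A node with no neighbors in one direction gets 0.0 for that direction.
    """
    incoming = {v: [] for v in feats}
    outgoing = {v: [] for v in feats}
    for src, dst in edges:
        outgoing[src].append(feats[dst])
        incoming[dst].append(feats[src])

    def mean(values):
        return sum(values) / len(values) if values else 0.0

    return {v: (mean(incoming[v]), mean(outgoing[v])) for v in feats}

# Edges point from parent class to child class.
EDGES = [("animal", "dog"), ("animal", "cat"), ("dog", "puppy")]
FEATS = {"animal": 1.0, "dog": 2.0, "cat": 3.0, "puppy": 4.0}
DIRECTIONAL = directional_aggregate(EDGES, FEATS)
```

For `dog`, the first component summarizes its parent and the second its child, information that would be merged and lost if the graph were treated as undirected.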

5. Signed graph: A signed graph is one in which the relationships between nodes include both positive and negative ones. For example, in social networks, interactions include positive relationships, such as friendship, agreement, and support, as well as negative relationships, such as enmity, disagreement, and resistance. Compared with ordinary graphs, signed graphs contain richer node interactions. The main problems in modeling signed graphs are how to model negative edges and how to aggregate the information from the two types of edges. SGCN builds on the assumptions of balance theory (friends of friends are friends, and friends of enemies are enemies) and defines corresponding balanced paths for modeling. Other representative works include POLE, a polarized embedding model for signed networks; SBGNN, a neural network model for signed bipartite graphs; and GS-GNN, a signed graph neural network based on k-group theory.

Source: SGCN
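Under balance theory, the implied relationship along a path is the product of the edge signs on it, so a path with an even number of negative edges is "balanced" (friend-like). The sketch below computes these implied signs for two-hop paths only. It is a simplified illustration with hypothetical names and data, not SGCN's full aggregation over balanced and unbalanced sets.

```python
def two_hop_signs(signed_edges, start):
    """Implied signs of nodes reachable from `start` via a two-edge path.

    signed_edges: dict {(u, v): +1 or -1} for an undirected signed graph.
    Returns {node: set of implied signs}, where a sign is the product of the
    two edge signs along the path.
    """
    nbrs = {}
    for (u, v), sign in signed_edges.items():
        nbrs.setdefault(u, []).append((v, sign))
        nbrs.setdefault(v, []).append((u, sign))
    result = {}
    for mid, first_sign in nbrs.get(start, []):
        for end, second_sign in nbrs.get(mid, []):
            if end != start:  # skip paths that walk straight back
                result.setdefault(end, set()).add(first_sign * second_sign)
    return result

SIGNED_EDGES = {
    ("a", "b"): +1, ("b", "c"): +1,   # c is a friend of a's friend
    ("b", "f"): -1,                   # f is an enemy of a's friend
    ("a", "d"): -1, ("d", "e"): -1,   # e is an enemy of a's enemy
}
IMPLIED = two_hop_signs(SIGNED_EDGES, "a")
```

Nodes with implied sign +1 would be aggregated together with positive neighbors, and nodes with implied sign -1 together with negative ones.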

6. Heterophilous graph: This notion differs slightly from the other graph types above. Heterophily is a measure that describes a characteristic of graph data, and a heterophilous graph is one in which neighboring nodes have relatively low similarity. The counterpart of heterophily is homophily, which means that linked nodes usually belong to the same category or have similar features ("birds of a feather flock together"). For example, a person's friends may have similar political beliefs or ages, and a paper tends to cite papers in the same research field. However, real-world networks do not always satisfy the assumption of high homophily. For example, in protein molecules, different types of amino acids are linked together. The way graph neural networks aggregate and propagate features along links rests on the homophily assumption, so GNNs often perform poorly on data with high heterophily. Many works have tried to generalize graph neural networks to heterophilous graphs, such as Geom-GCN, which uses structural information to select neighbors for nodes; H2GCN, which improves expressiveness by modifying the message-passing mechanism; GPNN, which builds a pointer network that reorders neighbors by their relevance to the central node before aggregating information (as shown in the figure below, where different colors represent different node types); and FAGCN, which combines high-frequency and low-frequency signal processing.

Source: GPNN

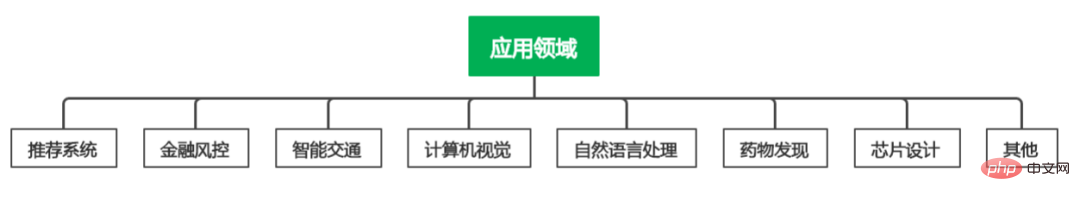

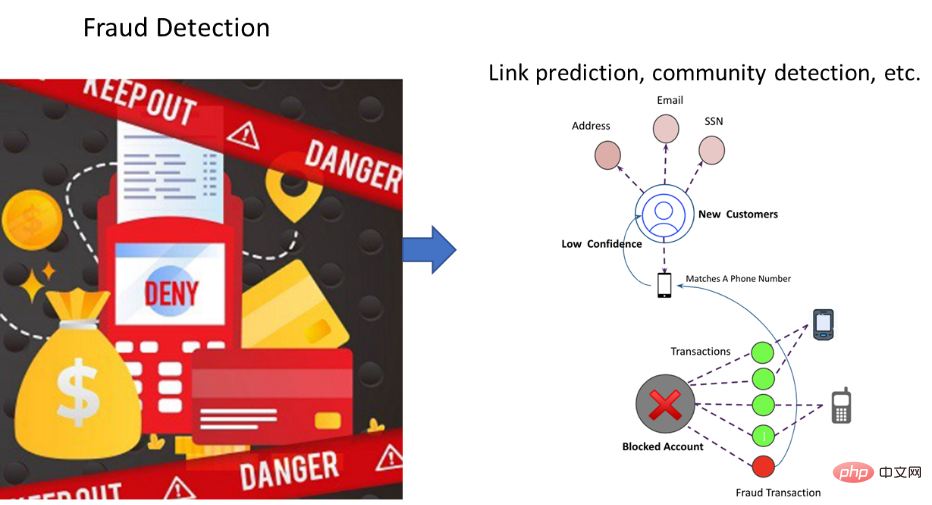
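One common way to quantify how homophilous or heterophilous a labeled graph is uses the edge homophily ratio: the fraction of edges whose two endpoints share a label. The sketch below computes it on toy data; the function name and the example graphs are made up for illustration.

```python
def edge_homophily(edges, labels):
    """Fraction of edges that connect two nodes with the same label.

    Values near 1 indicate homophily; values near 0 indicate heterophily.
    """
    if not edges:
        raise ValueError("the graph has no edges")
    same = sum(1 for u, v in edges if labels[u] == labels[v])
    return same / len(edges)

# A 4-cycle in which half of the edges stay within a research field.
CITATION_EDGES = [(0, 1), (1, 2), (2, 3), (3, 0)]
CITATION_LABELS = {0: "ml", 1: "ml", 2: "bio", 3: "bio"}

# The same 4-cycle with alternating labels: every edge joins different types.
ALTERNATING_LABELS = {0: "A", 1: "B", 2: "A", 3: "B"}

MIXED_RATIO = edge_homophily(CITATION_EDGES, CITATION_LABELS)
HETEROPHILOUS_RATIO = edge_homophily(CITATION_EDGES, ALTERNATING_LABELS)
```

On a graph like the second one, averaging neighbor features mixes the two classes together at every node, which is the failure mode that motivates the heterophily-aware models listed above.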

3. Graph Neural Network Application

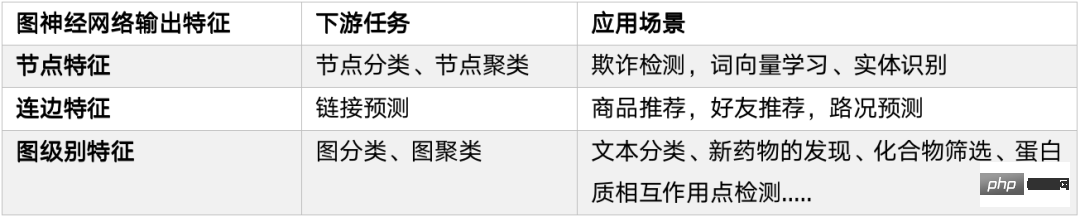

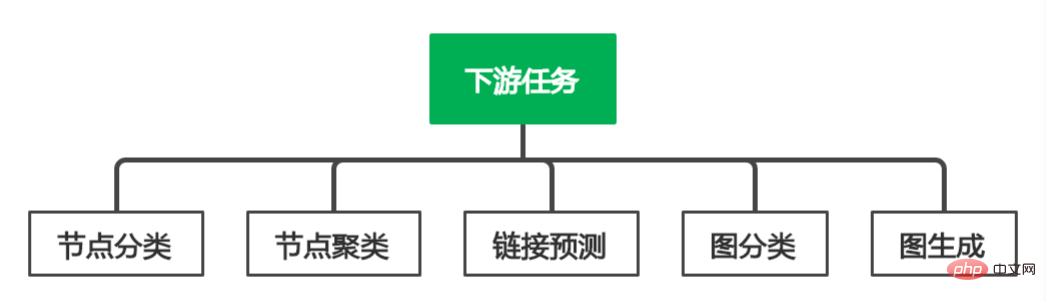

Because graph neural networks learn the characteristics of graph-structured data well, they have been widely applied and explored in many graph-related fields. In this section, we classify and summarize them from the perspectives of downstream tasks and application fields.

1. Downstream tasks

Node classification: Given node attributes (categorical or numerical), edge information, edge attributes (if any), and the labels of some known nodes, predict the category of nodes whose labels are unknown. For example, OGB's ogbn-products dataset is an undirected product co-purchase network. Nodes represent products sold in e-commerce, and an edge between two products indicates that they have been purchased together. Node attributes are obtained by extracting bag-of-words features from the product descriptions and then reducing their dimensionality with principal component analysis. The task is to predict the missing category of a product.

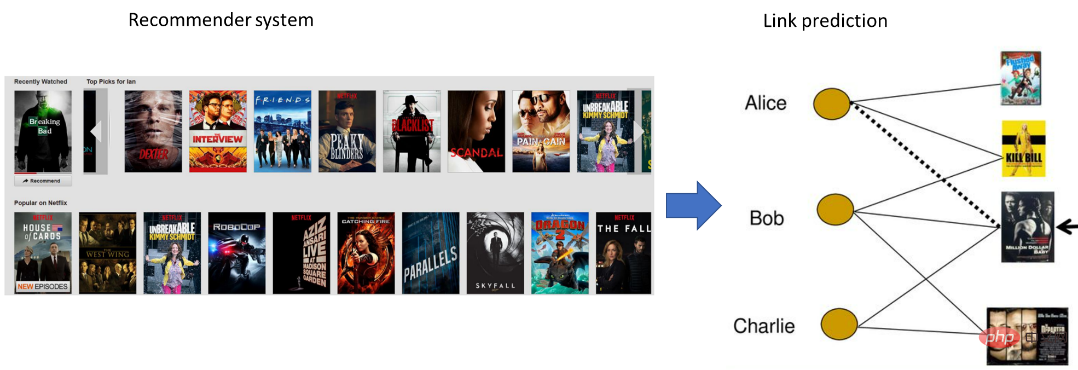

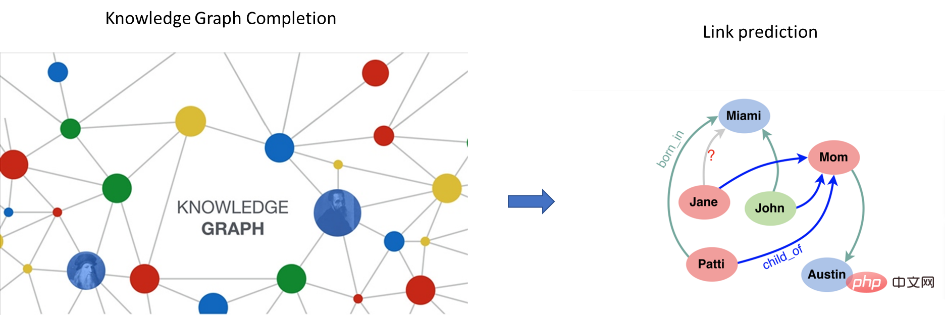

Link prediction: Link prediction refers to using known information such as the network's nodes and structure to predict how likely a link is between two nodes that are not yet connected. This includes predicting both unknown (unobserved) links and future links. Link prediction is widely used in recommendation systems, biochemical experiments, and other scenarios. For example, in product recommendation on a bipartite graph of users and products, a link exists between a user and a product if the user has purchased it, and similar users may want the same product. Therefore, predicting whether links such as "purchase" or "click" are likely to form between a user and a product, and recommending products accordingly, can increase the purchase rate. In addition, knowledge graph completion in natural language processing and traffic prediction in intelligent transportation can also be modeled as link prediction problems.

Graph classification: Graph classification is similar to node classification, except that the goal is to predict the label of a whole graph. Based on the characteristics of a graph (such as its density and topology) and the labels of known graphs, the model predicts the category of graphs with unknown labels. Applications are found in bioinformatics and cheminformatics, such as training graph neural networks to predict the properties of protein structures.

Graph generation: The goal of graph generation is to generate new graphs given a set of observed graphs. For example, in bioinformatics, new molecular structures are generated, and in natural language processing, semantic graphs or knowledge graphs are generated from given sentences.
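Of the downstream tasks above, link prediction is the easiest to sketch end to end. A common pattern is to score a candidate link with the sigmoid of the dot product of the two node embeddings. In the example below the embeddings are hand-written toy values standing in for the output of a trained GNN, and the function names are hypothetical.

```python
import math

def link_score(z_u, z_v):
    """Probability-like score for a link: sigmoid of the embedding dot product."""
    dot = sum(a * b for a, b in zip(z_u, z_v))
    return 1.0 / (1.0 + math.exp(-dot))

def recommend(user, candidates, emb, top_k=1):
    """Rank candidate items for a user by their predicted link score."""
    ranked = sorted(candidates, key=lambda item: link_score(emb[user], emb[item]), reverse=True)
    return ranked[:top_k]

# Assumption: these vectors would normally be produced by a trained GNN encoder.
EMB = {"user": [1.0, 0.0], "book": [2.0, 0.0], "pen": [-2.0, 0.0]}

BOOK_SCORE = link_score(EMB["user"], EMB["book"])
PEN_SCORE = link_score(EMB["user"], EMB["pen"])
TOP_ITEM = recommend("user", ["pen", "book"], EMB)
```

In the user-product bipartite graph from the text, `recommend` would be called with the products the user has not yet purchased as candidates.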

2. Application fields

We introduce the different application scenarios in turn.

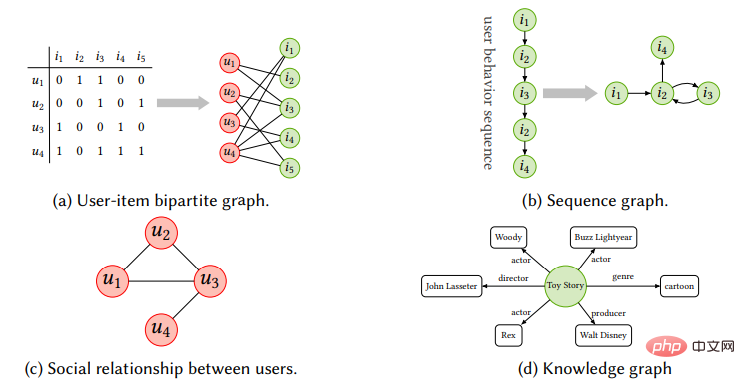

Recommendation system: The development of the mobile Internet has greatly accelerated progress in information retrieval, and recommendation systems, as one of its most important directions, have received widespread attention. The main purpose of a recommendation system is to learn effective user and item representations from historical interactions and side information, so as to recommend items (goods, music, videos, movies, etc.) that the user is more likely to prefer. It is therefore natural to construct a bipartite graph with items and users as nodes, so that graph neural networks can be applied to improve recommendation quality. Building on GraphSAGE, Pinterest proposed PinSage, the first industrial-scale recommendation system based on GCN, which supports large-scale image recommendation on a graph with 3 billion nodes and 18 billion edges. After it went online, views of Pinterest's Shop the Look product increased by 25%. In addition, Alibaba, Amazon, and many other e-commerce platforms use GNNs to build their recommendation algorithms.

Beyond the bipartite graph formed by user-item interactions, the social relationships, knowledge graphs, and item transition graphs within sequences in a recommendation system all exist as graph data. Heterogeneous data is also widespread in e-commerce recommendation scenarios: nodes can be queries, items, shops, users, and so on, and edge types can be clicks, favorites, transactions, and so on. By exploiting relationship and content information between items, between users, and between users and items, graph models built on multi-source, heterogeneous, and multi-modal data are being continuously explored to deliver higher-quality recommendations. In addition, sequential recommendation driven by changes in user behavior over time, and incremental learning required by the arrival of new users and products, bring new challenges and opportunities to the development of GNN models.

Natural language processing: Many problems and scenarios in natural language processing describe association relationships and can therefore be modeled naturally as graph data. The most direct application is knowledge graph (KG) completion and reasoning. For example, researchers at Mila proposed NBFNet, which models the single-hop reasoning problem as a path representation learning problem and thereby achieves inductive reasoning on knowledge graphs. Graph neural networks use deep neural networks to integrate the topological information and attribute features in graph data, providing more refined representations of nodes or substructures. They can be combined easily with downstream tasks in a decoupled or end-to-end manner, meeting the needs of knowledge graphs in different application scenarios for learning the attribute and structural features of entities and relations.

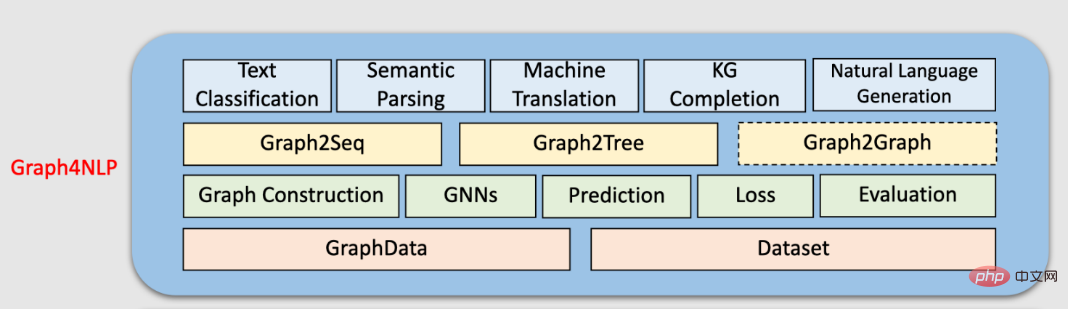

In addition, graph neural networks are applied to many natural language processing problems, such as text classification, semantic analysis, machine translation, knowledge graph completion, named entity recognition, and machine classification. For more on these applications, we recommend Dr. Wu Lingfei's Graph4NLP tutorials and surveys.

Source: https://github.com/graph4ai/graph4nlp

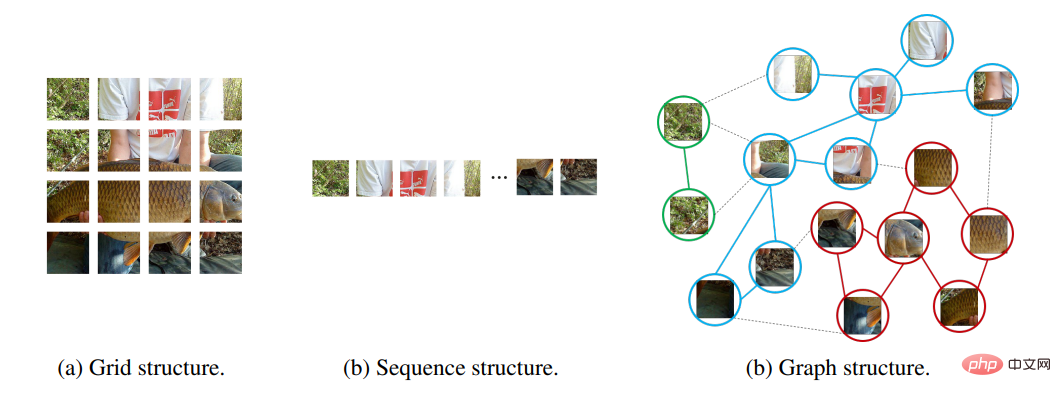

Computer vision: Computer vision is one of the largest application areas of machine learning and deep learning. Compared with recommendation systems and natural language processing, graph neural networks are not mainstream in computer vision. The reason is that the strength of GNNs is modeling and learning relationships, whereas most data in computer vision is regular image data. When using GNNs in CV scenarios, the key lies in how to construct the graph: what are the vertices and their features, and how are the connections between vertices defined? Early work mainly targeted scenes from which a graph structure can be abstracted intuitively. For example, in ST-GCN, an action recognition method for dynamic skeletons, the human skeleton is naturally treated as a graph structure to build a spatial graph. In scene graph generation, the semantic relationships between objects help in understanding the meaning behind a visual scene: given an image, a scene graph generation model detects and recognizes objects and predicts the semantic relationships between pairs of objects. In point cloud classification and segmentation, point clouds are converted into k-nearest-neighbor graphs or superpoint graphs so that graph networks can learn the related tasks. Recently, applications of graph neural networks in computer vision have been increasing, and some researchers have explored general computer vision tasks such as object detection. For example, Huawei proposed ViG, a new general vision architecture based on graph representation, in which the input image is divided into many small patches that become the nodes of a graph. Experimental results show that, compared with matrices or grids, graph structures can represent the relationships between object parts more flexibly and thereby achieve better results.

Source: Vision GNN@NeurIPS 2022

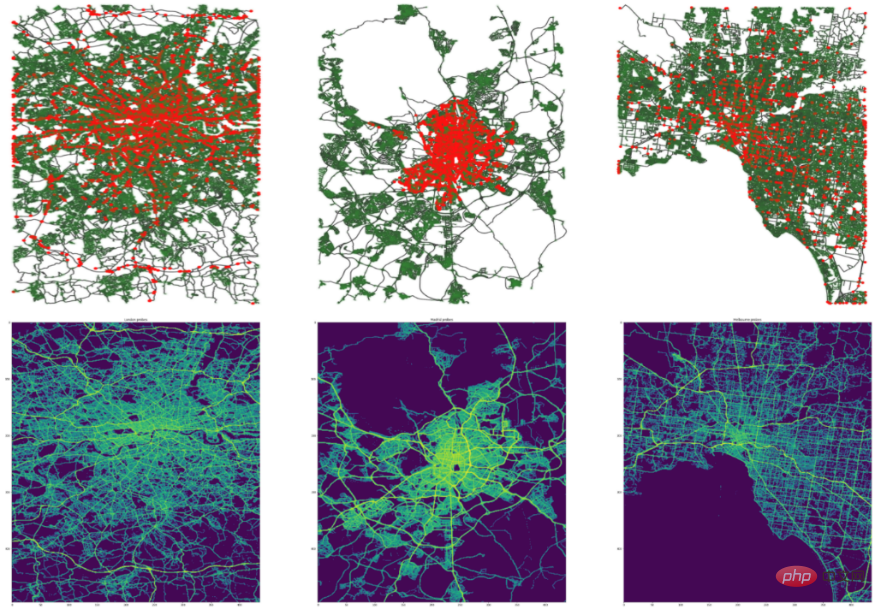

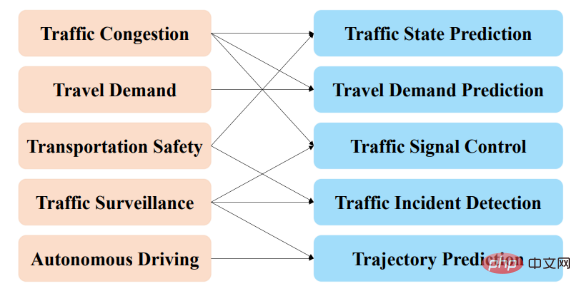
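For vision data, the graph has to be constructed before any GNN can run. A typical construction for point clouds (and, with feature vectors in place of coordinates, for image patches) is the k-nearest-neighbor graph. The sketch below is a brute-force version with a hypothetical function name and toy coordinates; real pipelines would use a spatial index or a batched GPU routine.

```python
import math

def knn_graph(points, k):
    """Build directed k-NN edges (i, j): j is one of the k points nearest to i.

    points: list of coordinate tuples. Brute force, O(n^2) distance computations.
    """
    edges = []
    for i, p in enumerate(points):
        # Sort all other points by Euclidean distance to point i.
        dists = sorted((math.dist(p, q), j) for j, q in enumerate(points) if j != i)
        edges.extend((i, j) for _, j in dists[:k])
    return edges

# Four 3D points; the last one lies far away from the first three.
POINTS = [(0, 0, 0), (1, 0, 0), (0, 2, 0), (5, 5, 5)]
KNN_EDGES = knn_graph(POINTS, 1)
```

Note that k-NN edges are not symmetric: the far point links to its nearest neighbor, but no point links back to it, so some pipelines symmetrize the edge list afterwards.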

Intelligent transportation: Intelligent traffic management is a pressing issue in modern cities. Accurately predicting traffic speed, traffic volume, or road density in a transportation network is crucial for route planning and flow control. Because traffic flow is highly nonlinear and complex, traditional machine learning methods struggle to learn spatial and temporal dependencies at the same time. The boom in online travel platforms and logistics services has provided rich data for intelligent transportation, and how to use neural networks to automatically learn the spatio-temporal correlations in traffic data for better traffic flow analysis and management has become a research hotspot. Since urban traffic (as shown in the figure below) naturally takes the form of an irregular grid, using graph neural networks for intelligent traffic management is a very natural direction to explore.

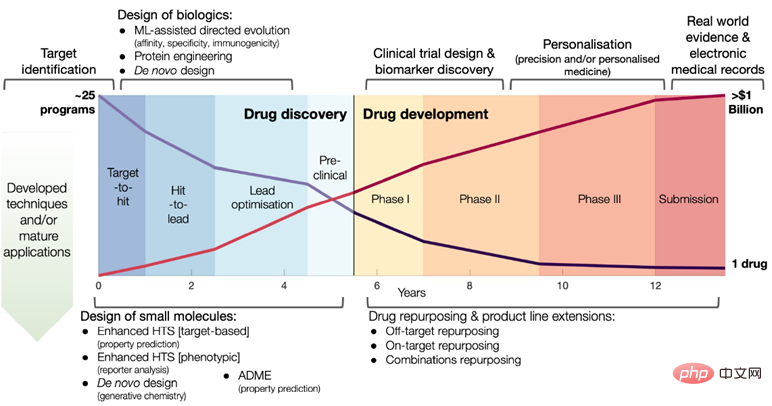

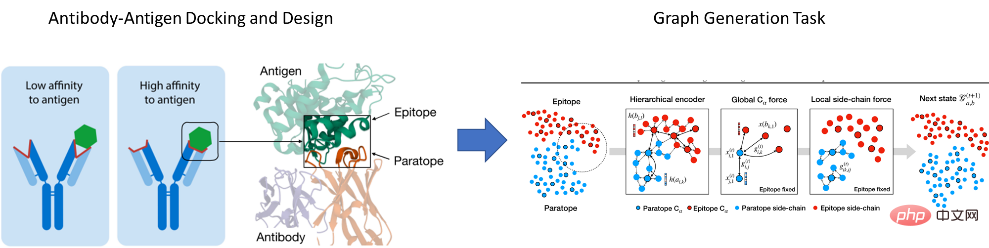

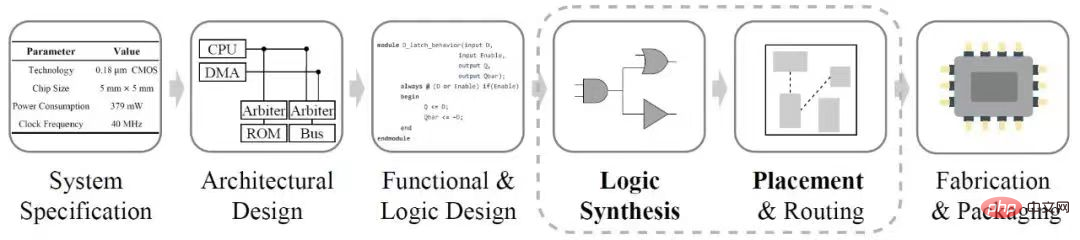

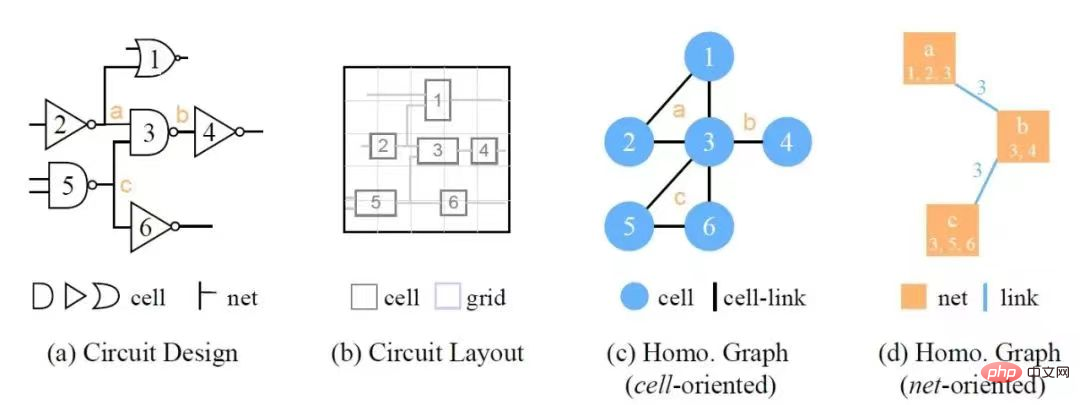

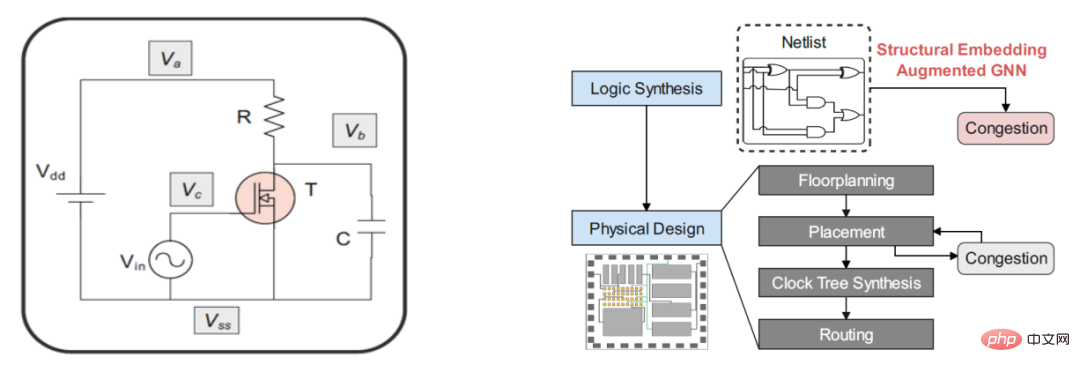

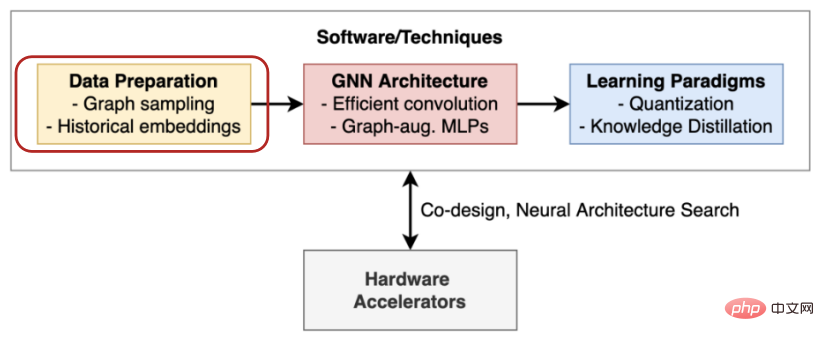

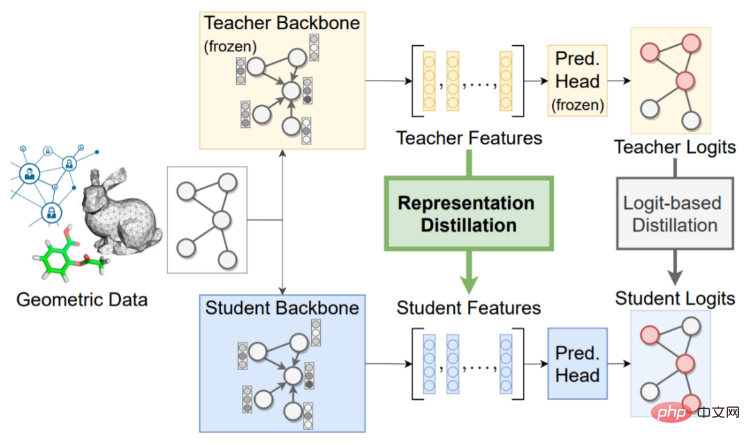

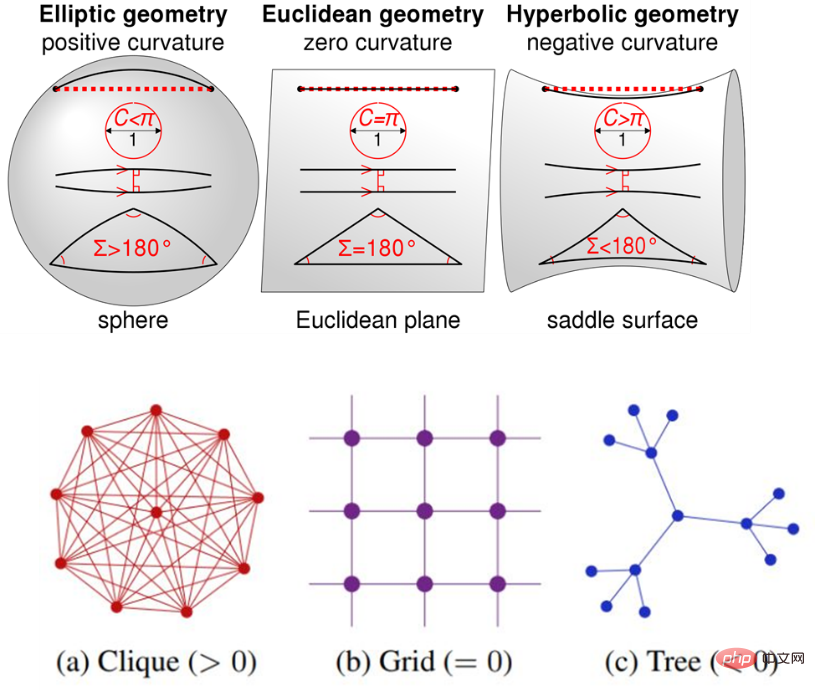

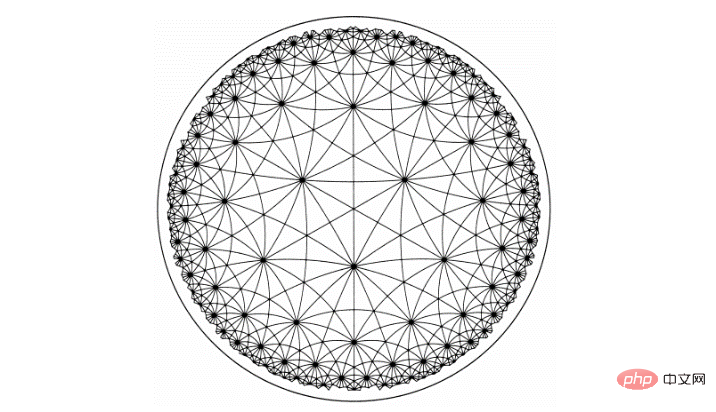

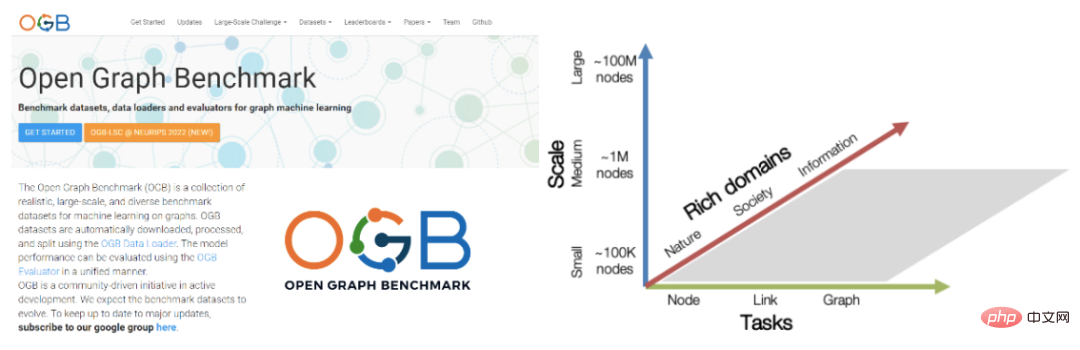

For example, the classic spatio-temporal network STGCN uses GCN to capture spatial features from the traffic flow graph at each moment and captures the temporal features of each node through convolution along the time dimension. These two operations are interleaved to learn features in both the spatial and temporal dimensions end to end. Other work uses multi-source information to construct node association graphs from different perspectives and aggregates information across them for more accurate predictions. Besides traffic prediction, graph neural networks are also used for signal light management, traffic event detection, vehicle trajectory prediction, road congestion prediction, and more. In recent years, competitions at top conferences such as KDD and NeurIPS have included traffic prediction tasks, and the winning solutions almost always involve graph neural networks. Because spatial and temporal dynamics coexist, it is no exaggeration to say that the application demands of intelligent transportation are the most important driver of the development of spatio-temporal graph neural networks.

Financial risk control: With the development of the market economy and the digitization of industry, a large number of traditional businesses are migrating online, while new online products and services are increasing day by day. Massive data and complex relationships pose great challenges to financial transactions and the related auditing. Bank credit management and risk management of listed companies play an important role in maintaining order in the financial market. With the spread of emerging global payment systems such as Alipay and PayPal, the payment risk control systems behind them play a vital role in protecting users' funds, preventing card and account theft, and reducing platform losses.
However, traditional algorithms are not sufficient for analyzing graph network data with relational information. Thanks to the ability of graph neural networks to process graph data, a series of practices has emerged across financial risk control scenarios, such as pre-loan and post-loan risk assessment during transactions and the detection of fake accounts, scams, and fraud. Although graph deep learning has proven effective and necessary in risk control, it has only been developed for a short time and is still at an early stage overall. Because industry data is private, the main technical innovations still come from the companies themselves, with Ant Financial and Amazon being the most prominent. For example, Ant Financial's GeniePath algorithm treats the task as a binary classification problem over accounts, and GEM, also from Ant Financial and the first algorithm to use graph convolution to identify malicious accounts, is mainly used in account login and registration scenarios. Data privacy and the diversity of scenarios also mean that the industry lacks unified standards for comparing and validating models. Recently, Xinye Technology and Zhejiang University jointly released DGraph, a large-scale dynamic graph dataset from a real-world scenario for validating fraud and other anomaly detection methods. Its nodes represent the financial lending users served by Xinye Technology, its directed edges represent emergency contact relationships, and each node contains desensitized attribute features and a label indicating whether the user is a financial fraudster.
Despite problems such as data barriers, the widespread class imbalance in financial risk control, the difficulty of obtaining labels, and the demand for model interpretability have also brought new ideas and opportunities to the development of graph neural networks.

Drug discovery: Drug development is a long, expensive, and risky undertaking. From initial drug design and molecular screening to later safety testing and clinical trials, developing a new drug takes about 10-15 years, and the average research and development cost per drug is nearly 3 billion US dollars. About one third of this time and cost is spent in the drug discovery stage. Especially in the face of outbreaks such as COVID-19, how to use deep learning models to quickly discover diverse candidate molecules and accelerate the development of new drugs has drawn the attention and participation of many researchers. Molecular compounds, proteins, and other substances involved in drug research naturally have graph structures. For example, the edges of a graph can be bonds between atoms in a molecule or interactions between amino acid residues in a protein. On a larger scale, graphs can represent interactions between more complex structures such as proteins, mRNA, or metabolites. In a cell network, nodes can represent cells, tumors, and lymphocytes, and edges the spatial proximity between them. Graph neural networks therefore have broad prospects in molecular property prediction, high-throughput screening, new drug design, protein engineering, and drug repurposing. For example, researchers from MIT CSAIL and their collaborators published work in Cell (2020) that uses graph neural networks to predict whether molecules have antibiotic properties.
This year, members of the same group proposed a series of works, such as an antigen-conditioned generative model built on graph generation methods to design antibodies that closely match specific antigens. The Mila lab is also a pioneer in applying graph learning to drug discovery, and building on that exploration it recently open sourced TorchDrug, a PyTorch-based machine learning platform for drug discovery. In addition, major technology companies have invested in AI-driven pharmaceuticals in recent years and achieved notable results. Tencent AI Lab's "Yunshen" platform released DrugOOD, the industry's first large-scale out-of-distribution research framework for drug AI, to promote research on the distribution shift problem in pharmaceutical scenarios and support the drug research and development industry. Baidu Biotech, founded by Baidu founder Robin Li, is committed to combining advanced AI technology with cutting-edge biotechnology for target discovery and drug design.

Chip design: Chips are the soul of the digital age and one of the three elements of the information industry. Graph-structured data runs through multiple stages of chip design. For example, in the logic synthesis stage, digital circuits are represented as and-inverter graphs (AIGs). In the physical design stage, the relevant constraints are derived from the circuit netlist produced by logic synthesis, and engineers complete the placement and routing of the chip under given density and congestion limits.

As circuits continue to grow in size and complexity, the design efficiency and accuracy of electronic design automation (EDA) tools have become a crucial issue, which has attracted researchers to adopt deep learning to assist the circuit design process.
If circuit quality and usability can be predicted in the early stages of chip design, chip iteration becomes more efficient and design costs fall. For example, predicting circuit congestion in the physical design stage helps detect defects and avoid producing defective chips. If such predictions can be made as early as the logic synthesis stage, the design and production cycle can be shortened further. Teams from Google and Stanford University have successfully combined GNNs with reinforcement learning in hardware design, for example to optimize the power consumption, area, and performance of Google TPU chip blocks. To handle the heterogeneous information in chip netlist representations, the Circuit GNN proposed by Huawei and Peking University constructs its graph by integrating topological and geometric information, improving performance on a range of EDA tasks for cell and net attribute prediction.

The previous sections introduced some basic paradigms of graph models and their application scenarios. Graph neural networks, as a new deep learning architecture, shine in fields as different as social networks, recommendation systems, and biomedical discovery. In real applications, however, the scalability and usability of graph models still face many theoretical and engineering challenges.

The first is memory limitations. As originally designed, the convolution in GCN is performed on the entire graph, that is, the convolution in every layer traverses the whole graph, and in practical applications the memory and time this requires are unacceptable. Moreover, in the traditional machine learning framework the loss function decomposes into a sum of losses over individual samples, so mini-batches and stochastic optimization can be used to handle training sets much larger than GPU memory.
In GNN training, however, samples are not independent as they are in standard machine learning datasets: the relational structure of network data creates statistical dependencies between samples. Running mini-batch training directly through random sampling often degrades model quality considerably, and ensuring that a sampled subgraph retains the semantics of the complete graph and provides reliable gradients for training is not simple.

The second is hardware limitations. Compared with image and text data, graphs are inherently sparse, so their sparsity must be exploited for efficient and scalable computation. Current deep learning processors and related hardware, however, are designed for dense matrix operations.

This section summarizes work on the scalability of graph models. Following the summary by Chaitanya K. Joshi, a doctoral student at the University of Cambridge, related work falls into four areas: data preprocessing, efficient model architectures, new learning paradigms, and hardware acceleration (as shown in the figure below). Data preprocessing generally makes computation on large-scale graph data feasible by sampling or simplifying the original data (expanded on below). Efficient architectures are new, leaner designs motivated by specific tasks or data. For example, LightGCN removes the feature transformation and nonlinear activation, together with the interaction term between adjacent nodes, to speed up computation. Other work has found that running an MLP on node features and then applying label propagation can also achieve good results. In addition, lightweight learning paradigms such as knowledge distillation or quantization-aware training can improve GNN performance and reduce latency.
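To make the LightGCN idea mentioned above more concrete, here is a minimal sketch of a propagation step that keeps only normalized neighbor aggregation and has no weight matrix and no activation. The function name `propagate`, the dictionary-based graph format, and the toy data are assumptions made for illustration; this is not the reference implementation.

```python
from math import sqrt


def propagate(adj, emb, num_layers=2):
    """Propagate embeddings with no learned weights and no activation.

    adj: dict mapping node -> list of neighbour nodes (undirected graph).
    emb: dict mapping node -> list of floats (initial embedding).
    Returns the average of the embeddings from layer 0 to layer num_layers.
    """
    deg = {u: len(nbrs) for u, nbrs in adj.items()}
    dim = len(next(iter(emb.values())))
    layers = [emb]
    for _ in range(num_layers):
        prev, nxt = layers[-1], {}
        for u, nbrs in adj.items():
            acc = [0.0] * dim
            for v in nbrs:
                # Symmetric normalisation 1 / sqrt(deg(u) * deg(v)).
                w = 1.0 / sqrt(deg[u] * deg[v])
                for d in range(dim):
                    acc[d] += w * prev[v][d]
            nxt[u] = acc
        layers.append(nxt)
    # Combine all layers with equal weights.
    return {
        u: [sum(layer[u][d] for layer in layers) / len(layers) for d in range(dim)]
        for u in adj
    }


# Toy graph (assumed data): two nodes joined by one edge.
adj = {0: [1], 1: [0]}
emb = {0: [1.0, 0.0], 1: [0.0, 1.0]}
out = propagate(adj, emb, num_layers=1)
print(out)
```

Because each layer is only a weighted sum over neighbors, the cost per layer is proportional to the number of edges, which is where the speed-up over a layer with dense feature transformations comes from.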
It is worth noting that the methods above for accelerating graph neural network training are decoupled from one another, so in practice several of them can be used at the same time.

Source: G-CRD@TNNLS

Compared with model optimization and new learning paradigms, data preprocessing is a more general and more widely applicable approach, so we briefly analyze it here. Generally speaking, data preprocessing methods use sampling or graph simplification to reduce the size of the original graph so that it fits within memory constraints. Sampling-based methods can be divided into three subcategories: node-wise sampling, layer-wise sampling, and graph-wise sampling.

Node-wise sampling: First proposed by GraphSAGE, this is a common, effective, and the most widely used method. One GraphSAGE layer aggregates information from 1-hop neighbors, and stacking k layers enlarges the receptive field to the subgraph induced by k-hop neighbors. Neighbors are sampled uniformly, which controls the cost of aggregation: fewer neighbors means less computation. Note, however, that the number of sampled neighbors still grows exponentially with the number of layers. In the end this is still message aggregation on the subgraph induced by k-hop neighbors, and the time complexity is not substantially improved.

Layer-wise sampling: First proposed by FastGCN. Unlike GraphSAGE, it directly limits the range from which neighbors are sampled, using importance sampling to draw a small batch of nodes from all nodes for each layer. In GraphSAGE the neighbor set of each sampled node is independent, whereas in FastGCN all sampled nodes share the same neighbor set, so the computational complexity can be kept at a linear level.
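The node-wise sampling described above can be sketched in a few lines. This is a simplified illustration, assuming an adjacency-list graph and uniform sampling without replacement; the function name `sample_k_hop`, the `fanouts` parameter, and the toy ring graph are hypothetical and not taken from the GraphSAGE code base.

```python
import random


def sample_k_hop(adj, seeds, fanouts, seed=0):
    """Return the nodes touched at each hop when sampling neighbours uniformly.

    adj: dict mapping node -> list of neighbour nodes.
    seeds: nodes of the mini-batch.
    fanouts: maximum number of neighbours kept per node, one entry per hop.
    """
    rng = random.Random(seed)
    layers = [list(seeds)]
    for fanout in fanouts:
        sampled = []
        for u in layers[-1]:
            nbrs = adj[u]
            # Uniformly keep at most `fanout` neighbours of u.
            picked = nbrs if len(nbrs) <= fanout else rng.sample(nbrs, fanout)
            sampled.extend(picked)
        # Drop duplicates while keeping first-seen order.
        layers.append(list(dict.fromkeys(sampled)))
    return layers


# Toy ring lattice (assumed data): each node links to 5 nodes on each side.
n = 100
adj = {i: [(i + d) % n for d in range(-5, 6) if d != 0] for i in range(n)}
layers = sample_k_hop(adj, seeds=[0], fanouts=[3, 3, 3])
print([len(layer) for layer in layers])
```

With a fanout of 3 per hop, the frontier can hold up to 3, 9, and 27 nodes after one, two, and three hops, which is the exponential growth in the number of layers that the text warns about.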
Note, however, that when the graph is large and sparse, the nodes that layer-wise sampling draws for adjacent layers may not be connected at all, so learning fails.

Graph-wise sampling: Unlike neighbor sampling, graph sampling techniques sample subgraphs from the original graph. Cluster-GCN, for example, uses clustering to divide the graph into small blocks for training. Graph clustering algorithms (such as METIS) group similar nodes together, which makes the node distribution within a cluster deviate from that of the original graph. To address this, Cluster-GCN draws several clusters together as one batch to balance the node distribution. However, structure-based sampling loses a relatively large amount of information, results on most datasets are worse than those of full-batch GNN training, and sampling has to be repeated every epoch, which adds noticeable time overhead.

Besides sampling, graph reduction is another feasible direction: it shrinks the original graph while retaining key properties for subsequent processing and analysis. Graph reduction mainly comprises graph sparsification, which reduces the number of edges, and graph coarsening, which reduces the number of vertices. Graph coarsening, which merges subgraphs into super-nodes, is a natural framework for reducing the scale of the original graph. Using graph coarsening to accelerate GNN training was first proposed in a KDD 2021 paper. The process is shown in the figure below: first a graph coarsening algorithm (such as spectral clustering coarsening) coarsens the original graph, then the model is trained on the coarsened graph G′, which reduces the number of parameters needed for training as well as the training time and memory overhead. The method is general and simple, with linear training time and space. The authors' theoretical analysis also shows that training APPNP on a graph coarsened by spectral clustering is equivalent to training a restricted APPNP on the original graph. Like graph sampling, however, graph coarsening requires data preprocessing, and both its time overhead and its experimental results depend on the choice of coarsening algorithm.

The sampling and reduction methods introduced above are likewise decoupled from one another, so several can be combined, for example Cluster-GCN with GraphSAGE. In essence, aggregating messages over the subgraph induced by k-hop neighbors is an exponential operation, and it is difficult for node-sampling algorithms to reach linear time complexity without losing information. Shrinking the original graph in preprocessing is a good alternative, because once the whole graph fits in memory the time complexity of GCN is linear, but the preprocessing cost cannot be ignored. There is no free lunch: accelerating GNN training still requires a trade-off between information loss and preprocessing overhead, and the right method has to be chosen case by case.

In addition, a graph is inherently a sparse object, so efficiency and scalability should be designed more from the perspective of data sparsity. This is easier said than done, because modern GPUs are designed for dense matrix operations. Although customized hardware accelerators for sparse matrices can significantly improve the speed and scalability of GNNs, related work is still at an early stage.
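As a rough illustration of the graph coarsening step discussed earlier in this section, the sketch below merges nodes into super-nodes according to a given cluster assignment. The assignment itself is assumed to come from some coarsening algorithm (for example spectral clustering) and is simply hard-coded here. The function name `coarsen`, the choice of mean-pooled features, and the toy data are illustrative assumptions, not the procedure of the KDD 2021 paper.

```python
from collections import defaultdict


def coarsen(edges, features, assignment):
    """Build a coarse graph from a node -> cluster assignment.

    edges: list of undirected edges (u, v) of the original graph.
    features: dict mapping node -> list of floats.
    assignment: dict mapping node -> integer cluster id (the super-node).
    Returns (coarse_weights, coarse_features).
    """
    members = defaultdict(list)
    for node, cluster in assignment.items():
        members[cluster].append(node)

    # Super-node feature = mean of the member features (an assumed choice).
    coarse_features = {}
    for cluster, nodes in members.items():
        dim = len(features[nodes[0]])
        coarse_features[cluster] = [
            sum(features[n][d] for n in nodes) / len(nodes) for d in range(dim)
        ]

    # Edge weight between super-nodes = number of original edges between them.
    # Edges inside one cluster become a weighted self-loop on that super-node.
    coarse_weights = defaultdict(float)
    for u, v in edges:
        cu, cv = assignment[u], assignment[v]
        coarse_weights[(min(cu, cv), max(cu, cv))] += 1.0
    return dict(coarse_weights), coarse_features


# Toy path graph 0-1-2-3 (assumed data), split into two clusters.
edges = [(0, 1), (1, 2), (2, 3)]
features = {0: [1.0], 1: [3.0], 2: [5.0], 3: [7.0]}
assignment = {0: 0, 1: 0, 2: 1, 3: 1}
weights, coarse_feats = coarsen(edges, features, assignment)
print(weights, coarse_feats)
```

A model trained on the coarse graph sees two nodes instead of four, which is how coarsening reduces memory and time, at the price of the preprocessing pass and whatever detail the merge discards.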
In addition, the design of communication strategies for graph computing has attracted much attention recently. For example, Best Research Paper and Best Student Paper awards at VLDB 2022 and WebConf 2022 went to systems or algorithms that accelerate the processing of graph models. SANCUS@VLDB2022 proposed a distributed training framework (SANCUS) that aims to reduce communication volume and uses a decentralized mechanism to accelerate distributed GNN training. The paper proves theoretically that SANCUS converges at a rate close to that of full-graph training and verifies its training efficiency and accuracy through experiments on a large number of real-world graphs. PASCA@Webconf2022 separates the message aggregation and update operations of the message passing framework and defines a new paradigm of pre-processing, training, and post-processing to reduce communication overhead in distributed scenarios.

Source: PASCA@Webconf2022

Thanks to the rapid growth of computing resources and the powerful representation ability of deep neural networks, deep learning has become an important tool for knowledge mining. Graphs are a versatile and powerful data structure that represents entities and their relationships concisely and is ubiquitous in the natural and social sciences. However, real-world graph data varies widely in structure, content, and task, and the GNN architecture that performs best on one task may not suit another. Quickly obtaining a well-performing model for a given dataset and prediction task is therefore very valuable for researchers and applied algorithm engineers. Which neural network architectures are effective for a given dataset and prediction task? Can we build a system that automatically predicts good GNN designs?
With these questions in mind, Jure Leskovec's group defined the design space of GNNs at three levels in their 2020 work on the GNN design space. This work also laid the foundation for later research on automated machine learning on graphs and transfer learning for graph models.

Given a task and a dataset, the corresponding GNN design space can first be constructed along three directions:

(1) Intra-layer design: the design of a single GNN layer.
(2) Inter-layer design: how GNN layers are connected.
(3) Learning configuration: how the training hyperparameters are set.

The performance differences between designs on a specific task are then quantified by ranking the models, which reveals the best model design for the given data. For new tasks and data, we can also identify the most similar existing task by computing the similarity between the new dataset and the existing collection in the task space, and transfer that task's best model to the new dataset for training. In this way a good model can be obtained quickly on datasets that have never been seen before. Automated machine learning on graphs and the transferability of graph models are important issues in both academic research and industrial applications, and the past two years have seen a great deal of exploration, which we will not detail here. For more on automated machine learning on graphs, we suggest the survey by Professor Zhu Wenwu's group at Tsinghua University and their open-source toolkit AutoGL, as well as related work from 4Paradigm in industry.

Source: AutoGL

The model design space described above focuses mainly on model structure, but there is another very important dimension that needs to be added: the representation or learning space of the model.
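Returning briefly to the three-level design space above, the idea of enumerating candidate designs and ranking them can be sketched as follows. Every option name and value in `DESIGN_SPACE` is a hypothetical placeholder chosen for illustration, and `toy_score` stands in for a real train-and-validate run; none of this reproduces the configuration used in the original work.

```python
from itertools import product

# Hypothetical options, grouped by the three levels named in the text.
DESIGN_SPACE = {
    "aggregation": ["mean", "max", "sum"],          # intra-layer design
    "activation": ["relu", "prelu"],                # intra-layer design
    "num_layers": [2, 4, 6],                        # inter-layer design
    "skip_connection": ["none", "sum", "concat"],   # inter-layer design
    "learning_rate": [0.01, 0.001],                 # learning configuration
    "batch_size": [32, 64],                         # learning configuration
}


def enumerate_designs(space):
    """Yield every combination of options as a dict (Cartesian product)."""
    keys = list(space)
    for values in product(*(space[k] for k in keys)):
        yield dict(zip(keys, values))


def rank_designs(designs, score_fn):
    """Sort designs from best to worst according to score_fn."""
    return sorted(designs, key=score_fn, reverse=True)


def toy_score(design):
    # Placeholder for validation performance; prefers shallow models here.
    return -design["num_layers"]


designs = list(enumerate_designs(DESIGN_SPACE))
ranked = rank_designs(designs, toy_score)
print(len(designs), ranked[0])
```

Even this small example yields 216 combinations, which is why ranking designs on a few representative tasks and transferring the best one to similar tasks is attractive compared with searching from scratch each time.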
Graph machine learning is a form of representation learning on graph data. The goal is not to predict an observation directly from the raw data but to learn the underlying structure of the data, so that features of the raw data can be learned and expressed better and downstream tasks achieve better results. Most current representation learning is performed in Euclidean space, because Euclidean space is a natural generalization of our intuitive visual space and offers great computational advantages. But graphs are well known to have non-Euclidean structure. For example, research on complex networks shows that real network data (social networks, commodity networks, telecommunications networks, disease networks, semantic networks, and so on) is largely scale-free, which means that tree-like or hierarchical structures are ubiquitous. Using Euclidean space as the prior space for representation learning therefore inevitably introduces distortion, and representation learning in spaces of different curvature has recently attracted attention.

Curvature measures how curved a space is: the closer the curvature is to zero, the flatter the space. In the science fiction novel "The Three-Body Problem", humans exploit changes in the curvature of space to build curvature-drive spacecraft. As shown in the figure below, Euclidean space is uniform and flat everywhere, is isotropic and translation invariant, and is therefore suitable for modeling grid data. In spherical space, which has positive curvature, the distance measure is equivalent to an angle measure and is rotation invariant, so it is suitable for modeling ring data or dense, uniform graph structures.
In hyperbolic space, which has negative curvature, the distance metric is equivalent to a power-law distribution, so it is suitable for modeling scale-free networks or tree structures.

Because real network data is largely scale-free, tree-like or hierarchical structures are ubiquitous in practice. Hyperbolic space is regarded in traditional network science as the continuous counterpart of tree or hierarchical structures, so it is better suited to modeling real data, and many excellent works have emerged recently. In addition, unlike in Euclidean space, the volume of hyperbolic space grows exponentially with the radius, so it offers more room for embedding.

Unlike Euclidean space, hyperbolic space can be described by several models. We briefly introduce it using the Poincaré ball as an example. The Poincaré ball is a model of hyperbolic space that restricts the embedding space to a unit ball. In the Poincaré ball model, all the light and dark triangles in the figure above are the same size, but from our Euclidean perspective the triangles near the boundary look smaller. Put another way, viewed from a Euclidean perspective with the center of the circle as the origin, the number of triangles keeps increasing as the radius grows.

We can think of modeling in hyperbolic space as "blowing up a balloon". Suppose a deflated balloon has a billion nodes on its surface, which is a very dense state. As the balloon inflates, its surface becomes more and more "curved" and the nodes move further apart. Alimama's technical team applied curvature spaces (CurvLearn) to Taobao search advertising. After the system was fully launched, storage consumption fell by 80% and user-side request matching accuracy rose by 15%.
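The observation that regions near the boundary of the Poincaré ball look small in Euclidean terms but are not small in hyperbolic terms can be checked numerically. The sketch below uses the standard distance formula of the Poincaré ball with curvature -1; the function name and the sample points are chosen only for illustration.

```python
import math


def poincare_distance(u, v):
    """Hyperbolic distance between two points inside the unit ball."""
    def sq_norm(x):
        return sum(c * c for c in x)

    if sq_norm(u) >= 1.0 or sq_norm(v) >= 1.0:
        raise ValueError("points must lie strictly inside the unit ball")
    diff = sq_norm([a - b for a, b in zip(u, v)])
    denom = (1.0 - sq_norm(u)) * (1.0 - sq_norm(v))
    return math.acosh(1.0 + 2.0 * diff / denom)


# Two pairs of points with the same Euclidean gap of 0.1 (assumed examples).
near_center = poincare_distance([0.0, 0.0], [0.1, 0.0])
near_boundary = poincare_distance([0.8, 0.0], [0.9, 0.0])
print(near_center, near_boundary)
```

The pair near the boundary is several times further apart in hyperbolic distance than the pair near the center, even though both pairs are 0.1 apart in Euclidean distance. This is the extra room near the boundary that lets tree-like data be embedded with low distortion.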
Beyond recommendation systems, hyperbolic graph models have shown excellent results in many other scenarios. Readers interested in this topic can refer to our tutorial on hyperbolic graph representation learning at ECML-PKDD this year (homepage: https://hyperbolicgraphlearning.github.io/) or to the hyperbolic neural network tutorial given at WebConf by scholars from Virginia Tech and Amazon.

Graph neural network algorithms combine deep neural network operations (such as convolution and gradient computation) with iterative graph propagation: the features of each vertex are computed from the features of its neighboring vertices through a set of deep neural networks. However, existing deep learning frameworks cannot express and execute graph propagation models, and therefore lack the ability to train graph neural networks efficiently. In addition, real-world graphs are huge and have complex dependencies between vertices. For example, Facebook's social network graph contains more than 2 billion vertices and 1 trillion edges, and a graph of this size may generate 100 TB of data. Unlike in traditional graph algorithms, a balanced graph partition depends not only on the number of vertices in each partition but also on the number of neighbors of those vertices. In a multi-layer GNN, the number of multi-hop neighbors can differ enormously between vertices, and partitions need to exchange data frequently. How to partition graph data sensibly so that distributed training performs well is a major challenge for distributed systems. Moreover, graph data is very sparse, which leads to frequent cross-node access in distributed processing and a large amount of message passing overhead.
How to reduce system overhead by exploiting the special properties of graphs is therefore a major challenge for improving system performance.

A craftsman who wants to do good work must first sharpen his tools. To support graph neural networks on large-scale graphs and the exploration of more complex GNN architectures, dedicated training systems for graph neural networks are needed. The two best-known open-source frameworks are PyG (PyTorch Geometric) and DGL (Deep Graph Library). The former is a PyTorch-based graph neural network library jointly developed by Stanford University and TU Dortmund University. It implements many GNN methods from the literature, ships commonly used datasets, and offers a simple, easy-to-use interface. The latter is a graph learning framework jointly developed by New York University and Amazon Research Institute. As the earliest open-source frameworks in academia and industry, both have active community support. In addition, many companies have built their own graph neural network frameworks and databases around their business needs, for example NeuGraph, EnGN, PSGraph, AliGraph, Roc, AGL, PGL, Galileo, TuGraph, and Angel Graph. Among them, AliGraph is a graph neural network platform integrating sampling, modeling, and training, developed by Alibaba Computing Platform and the DAMO Academy Intelligent Computing Laboratory. PGL (Paddle Graph Learning) is a graph learning framework based on PaddlePaddle developed by Baidu. Angel Graph is a large-scale, high-performance graph computing platform launched by the Tencent TEG data platform.

Next, the benchmark platforms.
In the core research and application areas of deep learning, benchmark datasets and platforms help identify and quantify which architectures, principles, or mechanisms are universal and generalize to real tasks and large datasets. The latest revolution in neural network models, for example, was triggered by the large-scale image benchmark ImageNet. Compared with grid or sequence data, model development for graph data is still in a relatively unregulated growth stage. First, datasets are often too small to match real-world scenarios, which makes it difficult to evaluate algorithms reliably and rigorously. Second, evaluation schemes are not uniform. Essentially every paper uses its own training/test split and its own performance metrics, so it is difficult to compare performance across papers and architectures. In addition, researchers often use traditional random splits when partitioning datasets.

To address the inconsistent data splits and evaluation schemes in the graph learning community, Jure Leskovec's team at Stanford University launched the Open Graph Benchmark (OGB) in 2020, a foundational benchmark platform for graph neural networks. OGB provides ready-to-use datasets for the key tasks on graphs (node classification, link prediction, graph classification, and so on), along with a common code library and implementations of the performance metrics, which allows rapid model evaluation and comparison. OGB also maintains leaderboards, which make it easy to follow research progress.
In 2021, OGB and KDD Cup jointly held the first OGB-LSC (OGB Large-Scale Challenge), which provided very large real-world graph datasets for the three major tasks of graph learning: node classification, link prediction, and graph regression. It attracted many top universities and technology companies, including Microsoft, DeepMind, Facebook, Alibaba, Baidu, ByteDance, Stanford, MIT, and Peking University. This year, the NeurIPS 2022 competition track hosted the second OGB-LSC, with datasets updated based on the KDD Cup experience, and the winning solutions have now been made public. (A good way to get up to speed in a field quickly is to read the doctoral dissertations of students who have just graduated from the relevant laboratory. Rex Ying and Jiaxuan You of the SNAP laboratory are two names worth knowing. They began research on graph learning under Jure Leskovec in 2016 and 2017 respectively, and many of their results have become landmarks in the development of graph learning. Their doctoral dissertations, "Towards Expressive and Scalable Deep Representation Learning for Graphs" and "Empowering Deep Learning with Graphs", organize this work systematically.)

After more than ten years of development, with industrial deployment across many sectors and continued theoretical iteration in the laboratory, graph neural networks have been shown both theoretically and practically to be an effective method and framework for processing graph-structured data. As a universal, concise, and powerful data structure, a graph can serve not only as the input and output of graph models for mining and learning from non-Euclidean structured data, but also as a prior structure when modeling Euclidean data (text and images).
In the long run, we believe that graph neural networks will move from an emerging research field to a standard data model paradigm in machine learning research and applications, empowering more industries and scenarios.

(An outlook is unavoidable.) Although GNNs have achieved great success in many fields, as application scenarios expand and real environments turn out to be dynamic, open, and unknown, there remain, beyond the problems and challenges discussed above, many directions worth further exploration.

New scenarios and new paradigms for graph neural networks: In the real world, from gravitation between planets to interactions between molecules, almost everything can be seen as connected by some relationship and can therefore be viewed as a graph. From social network analysis to recommendation systems and the natural sciences, we have seen graph neural networks explored in many fields, along with model developments driven by application problems, such as spatiotemporal interaction in intelligent transportation, class imbalance in financial risk control, and structure discrimination problems in biochemistry. How to adaptively learn scenario-specific features in different scenarios therefore remains an important direction. On the one hand, current GNNs are mainly based on the message passing paradigm, with its three steps of message passing, aggregation, and update, and making these steps more reasonable and efficient is an important line of current GNN work. On the other hand, the over-smoothing and information bottleneck problems caused by the message passing framework, together with the assortativity assumption, limit its effectiveness on more complex data and scenarios.
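The three message passing steps named above, and the over-smoothing effect they can cause, can be illustrated with a small sketch. The mean aggregator, the fixed mixing weights `w_self` and `w_nbr`, and the toy path graph are assumptions chosen to keep the example short; real GNN layers use learned weights and nonlinearities.

```python
def message_passing_layer(adj, h, w_self=0.5, w_nbr=0.5):
    """One round of message passing with mean aggregation.

    adj: dict mapping node -> list of neighbour nodes.
    h: dict mapping node -> list of floats (current node states).
    """
    new_h = {}
    for u, nbrs in adj.items():
        dim = len(h[u])
        # 1) Message: every neighbour sends its current state.
        messages = [h[v] for v in nbrs]
        # 2) Aggregate: average the incoming messages (zeros if no neighbour).
        if messages:
            agg = [sum(m[d] for m in messages) / len(messages) for d in range(dim)]
        else:
            agg = [0.0] * dim
        # 3) Update: mix the node's own state with the aggregate.
        new_h[u] = [w_self * h[u][d] + w_nbr * agg[d] for d in range(dim)]
    return new_h


def spread(h):
    """Gap between the largest and smallest first feature across nodes."""
    values = [vec[0] for vec in h.values()]
    return max(values) - min(values)


# Toy path graph 0-1-2 (assumed data) with one distinctive node.
adj = {0: [1], 1: [0, 2], 2: [1]}
h0 = {0: [0.0], 1: [0.0], 2: [3.0]}
h1 = message_passing_layer(adj, h0)

h_deep = h0
for _ in range(50):
    h_deep = message_passing_layer(adj, h_deep)
print(h1, spread(h0), spread(h_deep))
```

After one layer the nodes still differ, but after many layers their states become almost identical. This loss of distinguishing information with depth is the over-smoothing problem that the text mentions.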
Overall, most GNNs borrow ideas from computer vision and natural language processing. How to go beyond borrowing, design more powerful models around the inductive biases of graph data, and give graph neural networks a soul of their own will remain a direction for researchers in the field to think about and work on.

Graph structure learning: The main difference between graph neural networks and traditional neural networks is that GNNs are guided by the graph structure and aggregate neighbor information to learn node representations. This rests on an underlying assumption: the graph structure is correct, that is, the connections in the graph are real and trustworthy. For example, edges in a social graph are assumed to reflect real friendships. In practice, however, graph structure is not that reliable, and noisy or accidental connections are common. A wrong graph structure combined with the diffusion process of a GNN greatly degrades node representations and downstream task performance (garbage in, garbage out). How to learn graph structure better and how to build more credible graph structures in different data scenarios is therefore an important direction.

Trustworthy graph neural networks: Because of the message passing mechanism and the non-IID nature of graph data, GNNs are very fragile against attacks and easily affected by adversarial perturbations of node features and graph structure. For example, fraudsters can evade GNN-based fraud detection by creating transactions with specific high-credit users. It is therefore essential to develop robust graph neural networks for areas with high security risk. At the same time, as society pays increasing attention to privacy protection, the fairness of graph neural networks and data privacy protection have also become hot research topics.
For example, FederatedScope-GNN, a federated learning open-source platform for graph data released by Alibaba DAMO Academy in 2022, won the best application paper award at KDD 2022. In addition, how to make a trained graph model forget the training effect of specific data or specific parameters, so as to protect the data implicitly stored in the model (graph unlearning), is also a direction worth discussing.

Interpretability: Although deep learning models achieve performance beyond the reach of traditional methods on many tasks, their complexity tends to limit interpretability. In many highly sensitive fields such as bioinformatics, health, and financial risk control, however, interpretability matters for evaluating computational models and for better understanding underlying mechanisms. Designing models and architectures that are interpretable or that better visualize complex relationships has therefore attracted growing attention. Existing work mainly follows interpretability methods from text and images, for example methods based on gradient changes or input perturbations (e.g. GNNExplainer). Recently, some researchers have explored explainability measurement frameworks based on causal screening, and have used invariant learning to derive the intrinsic explainability of graph neural networks, which offers new ideas for the explainability of graph models.

Out-of-distribution generalization: A typical learning problem trains a model on a training set and then requires it to produce results on a new test set. When the test data distribution differs significantly from the training distribution, the generalization error of the model is difficult to control.
Most current graph neural network (GNN) methods do not consider the unknown distribution shift between training graphs and test graphs, resulting in poor generalization performance of GNNs on out-of-distribution (OOD) graphs. However, many real-world scenarios require the model to interact with an open and dynamic environment. During the training phase, the model needs to take into account new entities or samples from unknown distributions that will appear in the future, such as new users/items in recommendation systems, the user portraits/behavioral characteristics of new product platforms in online advertising systems, and new nodes or edge relationships in dynamic networks. Therefore, how to use limited observation data to learn a stable GNN model that can generalize to new environments with unknown or limited data is also an important research direction.

Graph data pre-training and general models: The pre-training paradigm has achieved revolutionary success in computer vision and natural language processing, and its capabilities have been proven on many tasks. Although GNNs already have some relatively mature models and successful applications, they are still limited to the deep learning setting in which a large amount of labeled data is used to train a model for a specific task. When the task changes or labels are insufficient, the results are often unsatisfactory. This naturally prompts exploration of general models for graph data. The key to pre-training lies in abundant training data, transferable knowledge, powerful backbone models, and effective training methods. In contrast to the clear semantic information in computer vision and natural language processing, different graph datasets vary widely in structure, so what knowledge in graphs is transferable remains a relatively open question. In addition, although deep and general GNN models have been studied, they have not yet brought revolutionary improvements.
Fortunately, the graph machine learning community has accumulated large-scale graph data and has developed self-supervised training methods such as graph reconstruction. With further research on deep GNNs, more expressive GNNs, and new paradigms of graph self-supervision, it is believed that a general model with strong versatility will eventually be realized.

Software and hardware collaboration: As the application and research of graph learning advance, GNNs are expected to be more deeply integrated into standard frameworks and platforms such as PyTorch, TensorFlow, and MindSpore. To further improve the scalability of graph models, more hardware-friendly algorithm frameworks and hardware acceleration solutions co-designed with software are the general trend. Although dedicated accelerator architectures for graph neural network applications are slowly emerging, and customized computing units and on-chip storage hierarchies for graph neural networks, as well as dedicated chips that optimize computation and memory access behavior, have had some success, these technologies are still in their early stages, facing huge challenges and correspondingly offering many opportunities.
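To make the graph structure learning direction above more concrete, the following is a minimal sketch of how a single noisy edge distorts neighbor aggregation, and how a simple heuristic can remove it. The similarity-threshold pruning used here is an illustrative assumption, not a method from any specific paper, and the function names (`prune_edges`, `mean_aggregate`) and the toy data are hypothetical.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length feature vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0

def prune_edges(features, edges, threshold=0.5):
    """Keep only undirected edges whose endpoints have similar features.

    Assumption: feature similarity is a usable proxy for edge reliability.
    """
    return [(i, j) for i, j in edges
            if cosine(features[i], features[j]) >= threshold]

def mean_aggregate(features, edges):
    """One round of mean aggregation over each node and its neighbors."""
    neighbors = {i: [i] for i in range(len(features))}  # include the node itself
    for i, j in edges:
        neighbors[i].append(j)
        neighbors[j].append(i)
    dim = len(features[0])
    return [
        [sum(features[n][d] for n in neighbors[i]) / len(neighbors[i])
         for d in range(dim)]
        for i in range(len(features))
    ]

# Two feature "communities": nodes 0-1 and nodes 2-3.
features = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]]
edges = [(0, 1), (2, 3), (1, 2)]  # (1, 2) plays the role of a noisy edge

cleaned = prune_edges(features, edges)
noisy_repr = mean_aggregate(features, edges)
clean_repr = mean_aggregate(features, cleaned)

print("kept edges:", cleaned)
print("node 1 with noisy edge:   ", noisy_repr[1])
print("node 1 after edge pruning:", clean_repr[1])
```

In this toy setting the noisy edge pulls the representation of node 1 toward the other community, while pruning keeps it close to its own neighbors. Real graph structure learning methods usually learn the structure jointly with the model rather than relying on a fixed threshold.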
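The input-perturbation idea mentioned under interpretability can also be sketched in a few lines. The example below removes one edge at a time and records how much the output for a target node changes. This is only an illustration of the perturbation principle under assumed toy settings: it is not GNNExplainer itself (which learns a soft mask by optimization), and the "model" (`node_score`, one mean-aggregation step followed by a linear readout) and its weights are hypothetical.

```python
def node_score(features, edges, target, weights):
    """Toy 'GNN': mean-aggregate the target's neighborhood, then a linear readout."""
    neighborhood = [target]
    for i, j in edges:
        if i == target:
            neighborhood.append(j)
        elif j == target:
            neighborhood.append(i)
    dim = len(weights)
    pooled = [sum(features[n][d] for n in neighborhood) / len(neighborhood)
              for d in range(dim)]
    return sum(w * x for w, x in zip(weights, pooled))

def edge_importance(features, edges, target, weights):
    """Score each edge by the absolute output change when that edge is removed."""
    base = node_score(features, edges, target, weights)
    importance = {}
    for edge in edges:
        remaining = [e for e in edges if e != edge]
        perturbed = node_score(features, remaining, target, weights)
        importance[edge] = abs(base - perturbed)
    return importance

features = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]]
edges = [(0, 1), (1, 2), (2, 3)]
weights = [1.0, -1.0]  # hypothetical readout weights

scores = edge_importance(features, edges, target=1, weights=weights)
ranked = sorted(scores, key=scores.get, reverse=True)

for edge in ranked:
    print(edge, round(scores[edge], 4))
```

Edges that do not touch the one-hop neighborhood of the target node receive an importance of zero here, because the toy model uses a single aggregation step. A deeper model would spread influence over more hops.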

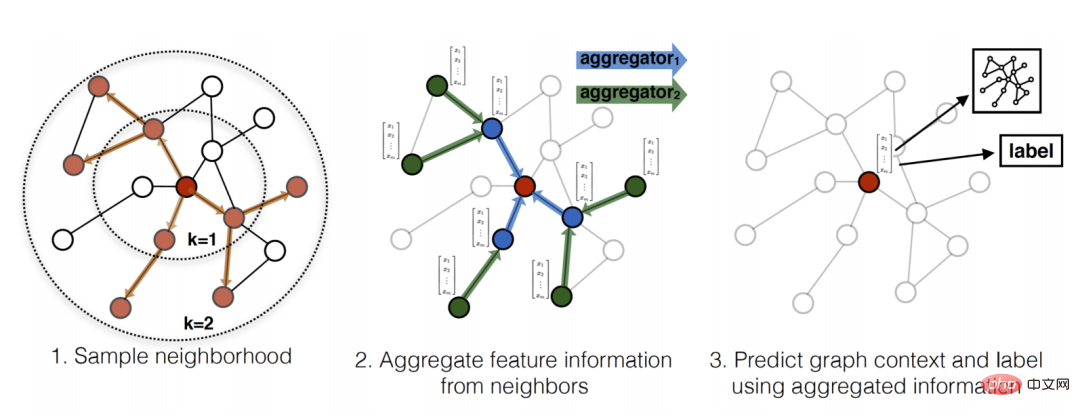

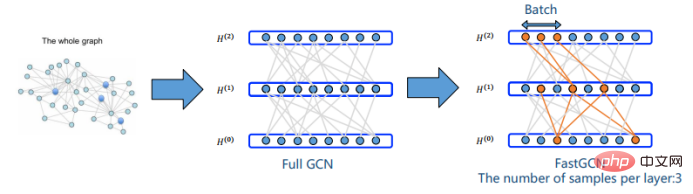

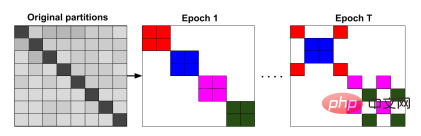

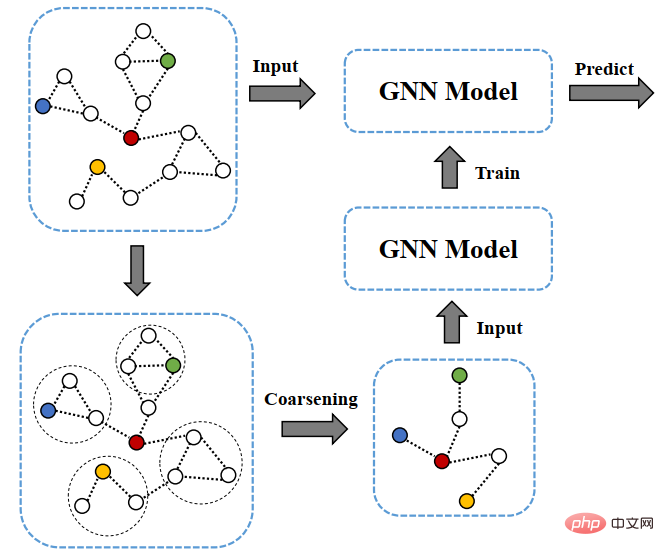

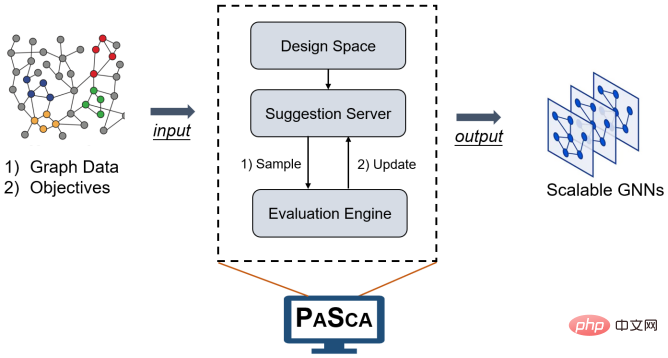

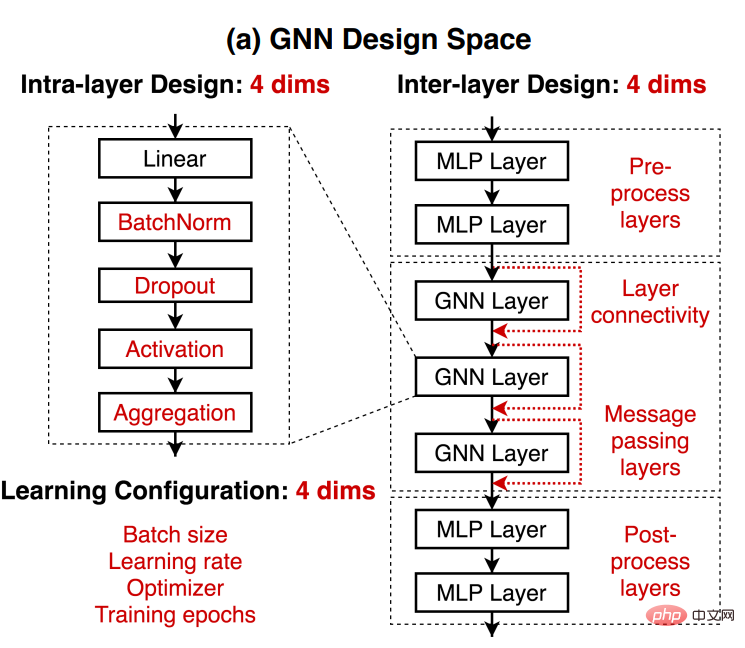

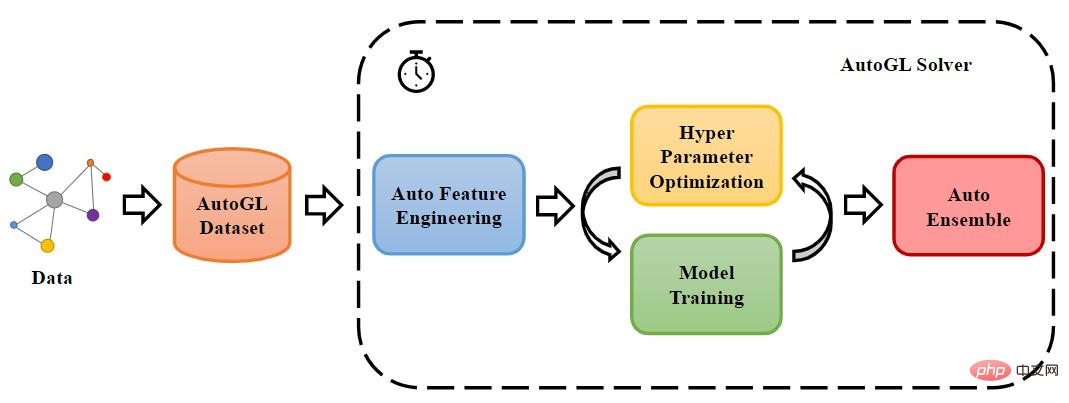


The above is the detailed content of Topological aesthetics in deep learning: GNN foundation and application. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1386

1386

52

52

Methods and steps for using BERT for sentiment analysis in Python

Jan 22, 2024 pm 04:24 PM

Methods and steps for using BERT for sentiment analysis in Python

Jan 22, 2024 pm 04:24 PM

BERT is a pre-trained deep learning language model proposed by Google in 2018. The full name is BidirectionalEncoderRepresentationsfromTransformers, which is based on the Transformer architecture and has the characteristics of bidirectional encoding. Compared with traditional one-way coding models, BERT can consider contextual information at the same time when processing text, so it performs well in natural language processing tasks. Its bidirectionality enables BERT to better understand the semantic relationships in sentences, thereby improving the expressive ability of the model. Through pre-training and fine-tuning methods, BERT can be used for various natural language processing tasks, such as sentiment analysis, naming

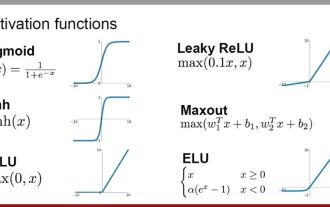

Analysis of commonly used AI activation functions: deep learning practice of Sigmoid, Tanh, ReLU and Softmax

Dec 28, 2023 pm 11:35 PM

Analysis of commonly used AI activation functions: deep learning practice of Sigmoid, Tanh, ReLU and Softmax

Dec 28, 2023 pm 11:35 PM

Activation functions play a crucial role in deep learning. They can introduce nonlinear characteristics into neural networks, allowing the network to better learn and simulate complex input-output relationships. The correct selection and use of activation functions has an important impact on the performance and training results of neural networks. This article will introduce four commonly used activation functions: Sigmoid, Tanh, ReLU and Softmax, starting from the introduction, usage scenarios, advantages, disadvantages and optimization solutions. Dimensions are discussed to provide you with a comprehensive understanding of activation functions. 1. Sigmoid function Introduction to SIgmoid function formula: The Sigmoid function is a commonly used nonlinear function that can map any real number to between 0 and 1. It is usually used to unify the

Beyond ORB-SLAM3! SL-SLAM: Low light, severe jitter and weak texture scenes are all handled

May 30, 2024 am 09:35 AM

Beyond ORB-SLAM3! SL-SLAM: Low light, severe jitter and weak texture scenes are all handled

May 30, 2024 am 09:35 AM

Written previously, today we discuss how deep learning technology can improve the performance of vision-based SLAM (simultaneous localization and mapping) in complex environments. By combining deep feature extraction and depth matching methods, here we introduce a versatile hybrid visual SLAM system designed to improve adaptation in challenging scenarios such as low-light conditions, dynamic lighting, weakly textured areas, and severe jitter. sex. Our system supports multiple modes, including extended monocular, stereo, monocular-inertial, and stereo-inertial configurations. In addition, it also analyzes how to combine visual SLAM with deep learning methods to inspire other research. Through extensive experiments on public datasets and self-sampled data, we demonstrate the superiority of SL-SLAM in terms of positioning accuracy and tracking robustness.

Latent space embedding: explanation and demonstration

Jan 22, 2024 pm 05:30 PM

Latent space embedding: explanation and demonstration

Jan 22, 2024 pm 05:30 PM

Latent Space Embedding (LatentSpaceEmbedding) is the process of mapping high-dimensional data to low-dimensional space. In the field of machine learning and deep learning, latent space embedding is usually a neural network model that maps high-dimensional input data into a set of low-dimensional vector representations. This set of vectors is often called "latent vectors" or "latent encodings". The purpose of latent space embedding is to capture important features in the data and represent them into a more concise and understandable form. Through latent space embedding, we can perform operations such as visualizing, classifying, and clustering data in low-dimensional space to better understand and utilize the data. Latent space embedding has wide applications in many fields, such as image generation, feature extraction, dimensionality reduction, etc. Latent space embedding is the main

Understand in one article: the connections and differences between AI, machine learning and deep learning

Mar 02, 2024 am 11:19 AM

Understand in one article: the connections and differences between AI, machine learning and deep learning

Mar 02, 2024 am 11:19 AM

In today's wave of rapid technological changes, Artificial Intelligence (AI), Machine Learning (ML) and Deep Learning (DL) are like bright stars, leading the new wave of information technology. These three words frequently appear in various cutting-edge discussions and practical applications, but for many explorers who are new to this field, their specific meanings and their internal connections may still be shrouded in mystery. So let's take a look at this picture first. It can be seen that there is a close correlation and progressive relationship between deep learning, machine learning and artificial intelligence. Deep learning is a specific field of machine learning, and machine learning

From basics to practice, review the development history of Elasticsearch vector retrieval

Oct 23, 2023 pm 05:17 PM

From basics to practice, review the development history of Elasticsearch vector retrieval

Oct 23, 2023 pm 05:17 PM

1. Introduction Vector retrieval has become a core component of modern search and recommendation systems. It enables efficient query matching and recommendations by converting complex objects (such as text, images, or sounds) into numerical vectors and performing similarity searches in multidimensional spaces. From basics to practice, review the development history of Elasticsearch vector retrieval_elasticsearch As a popular open source search engine, Elasticsearch's development in vector retrieval has always attracted much attention. This article will review the development history of Elasticsearch vector retrieval, focusing on the characteristics and progress of each stage. Taking history as a guide, it is convenient for everyone to establish a full range of Elasticsearch vector retrieval.

Super strong! Top 10 deep learning algorithms!

Mar 15, 2024 pm 03:46 PM

Super strong! Top 10 deep learning algorithms!

Mar 15, 2024 pm 03:46 PM

Almost 20 years have passed since the concept of deep learning was proposed in 2006. Deep learning, as a revolution in the field of artificial intelligence, has spawned many influential algorithms. So, what do you think are the top 10 algorithms for deep learning? The following are the top algorithms for deep learning in my opinion. They all occupy an important position in terms of innovation, application value and influence. 1. Deep neural network (DNN) background: Deep neural network (DNN), also called multi-layer perceptron, is the most common deep learning algorithm. When it was first invented, it was questioned due to the computing power bottleneck. Until recent years, computing power, The breakthrough came with the explosion of data. DNN is a neural network model that contains multiple hidden layers. In this model, each layer passes input to the next layer and

AlphaFold 3 is launched, comprehensively predicting the interactions and structures of proteins and all living molecules, with far greater accuracy than ever before

Jul 16, 2024 am 12:08 AM

AlphaFold 3 is launched, comprehensively predicting the interactions and structures of proteins and all living molecules, with far greater accuracy than ever before

Jul 16, 2024 am 12:08 AM

Editor | Radish Skin Since the release of the powerful AlphaFold2 in 2021, scientists have been using protein structure prediction models to map various protein structures within cells, discover drugs, and draw a "cosmic map" of every known protein interaction. . Just now, Google DeepMind released the AlphaFold3 model, which can perform joint structure predictions for complexes including proteins, nucleic acids, small molecules, ions and modified residues. The accuracy of AlphaFold3 has been significantly improved compared to many dedicated tools in the past (protein-ligand interaction, protein-nucleic acid interaction, antibody-antigen prediction). This shows that within a single unified deep learning framework, it is possible to achieve