DeepFake has never been so real! How strong is NVIDIA's latest "Implicit Warping"?

In recent years, generative techniques in computer vision have grown increasingly powerful, and the corresponding "forgery" techniques have matured accordingly. From DeepFake face swapping to motion imitation, it is becoming hard to tell the real from the fake.

Recently, NVIDIA made another big move: at NeurIPS 2022 it presented a new framework, Implicit Warping, which uses a set of source images and the motion of a driving video to animate a target.

Paper link: https://arxiv.org/pdf/2210.01794.pdf

Judging by the results, the generated images are more realistic: when the characters move in the video, the background stays stable.

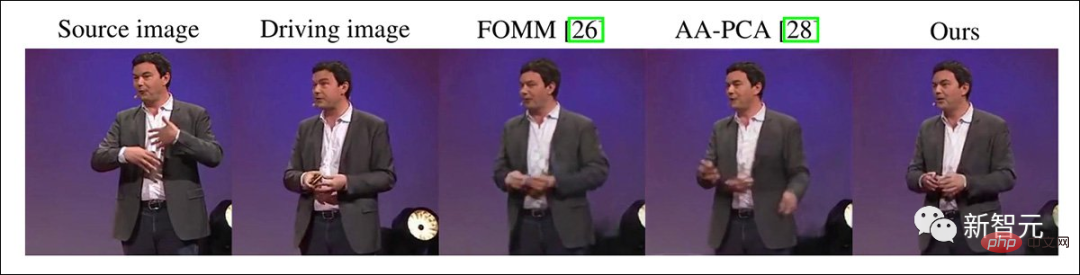

Multiple source images usually provide complementary appearance information, which reduces how much the generator has to "hallucinate". For example, the following two images are used as model input.

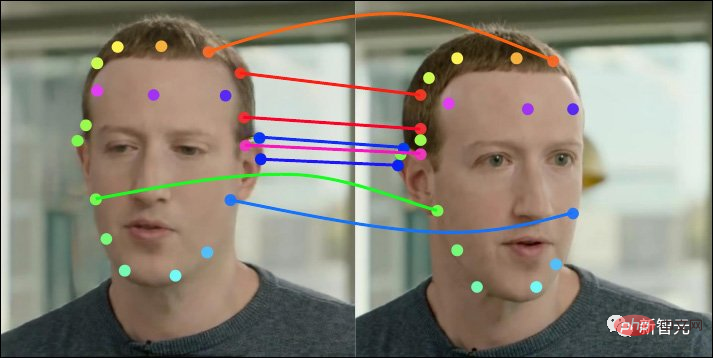

Compared with other models, implicit warping does not produce the kind of spatial distortion seen with beauty filters.

Because characters occlude parts of the scene, multiple source images can also provide a more complete view of the background.

As the video below shows, with only the single picture on the left it is hard to guess whether the letters behind the person read "BD" or "ED", which causes background distortion; with two pictures, the generated result is more stable.

Even with only one source image, implicit warping compares favorably with other models.

Magical Implicit Warping

Not all methods try to generate video from a single image. Some studies instead perform complex computation on every frame of the video, which is essentially the imitation route that DeepFake takes.

However, because the DeepFake model obtains less information, this approach requires training on each video clip, and its performance drops compared with the open-source DeepFaceLab or FaceSwap, both of which can impose an identity onto any number of video clips.

FOMM (First Order Motion Model), released in 2019, lets a character move along with a driving video, giving the video imitation task another shot in the arm.

Other researchers subsequently attempted to derive multiple poses and expressions from a single face image or full-body representation. However, this approach generally worked only for relatively expressionless and immobile subjects, such as a fairly stationary "talking head", because there are no sudden changes in facial expression or gesture for the network to interpret.

NVIDIA's implicit warping instead gathers information across multiple frames, or even just two frames, rather than extracting all the necessary pose information from a single frame. This setup is either absent from competing models or handled very poorly by them.

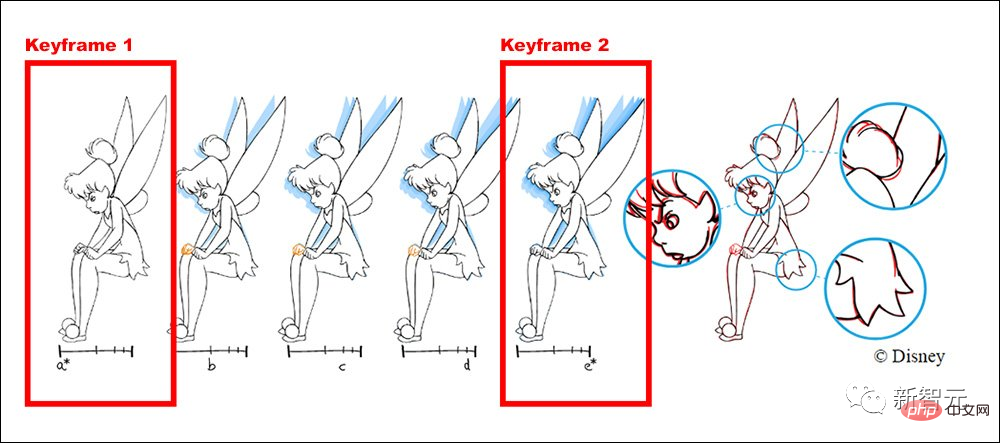

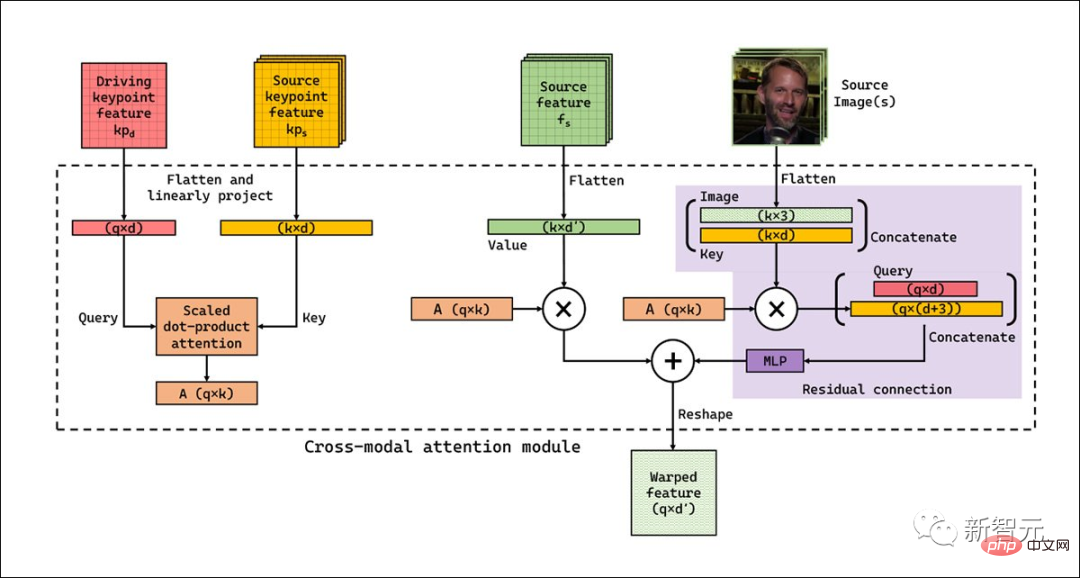

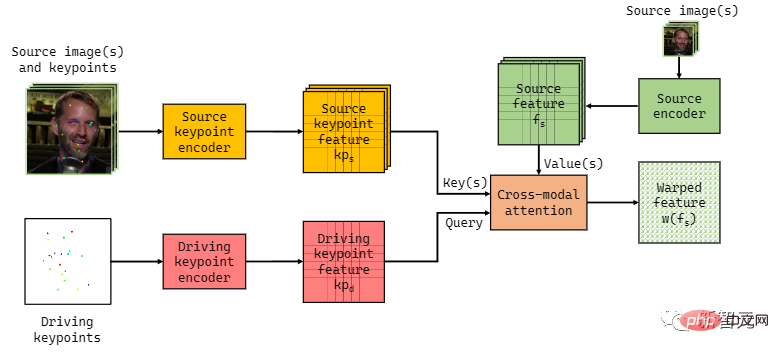

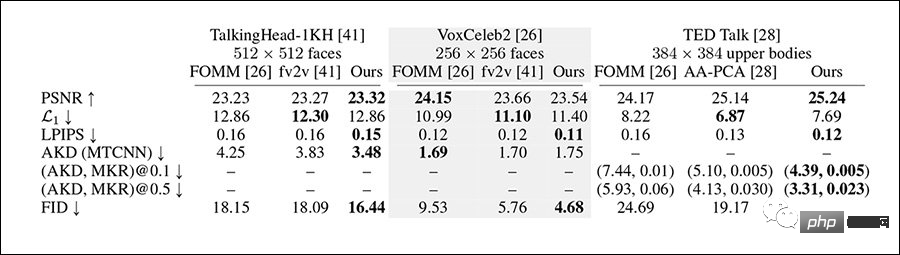

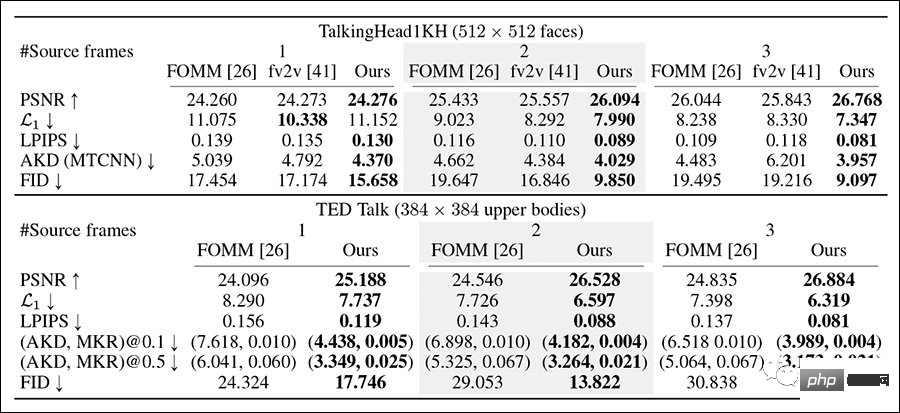

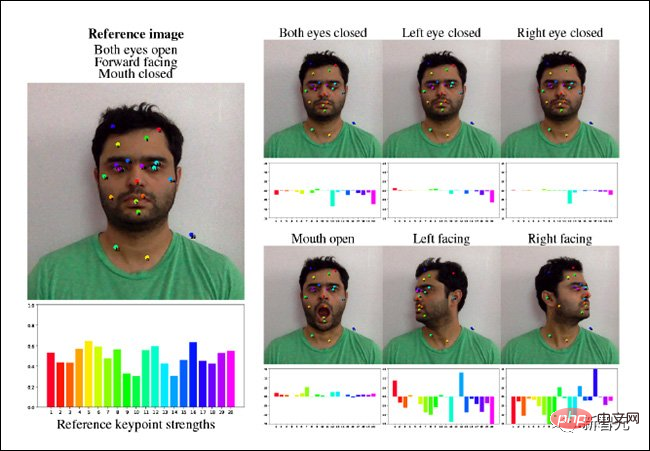

Disney's workflow is a useful analogy: senior animators draw the main frames and keyframes, while junior animators fill in the intermediate frames. Testing earlier methods, the NVIDIA researchers found that result quality deteriorated as additional "keyframes" were added, whereas the new method is consistent with the logic of animation production: its performance improves linearly as the number of keyframes increases. If something changes abruptly in the middle of a clip, such as an event or expression that appears in neither the start frame nor the end frame, a frame can be added at that midpoint, and implicit warping feeds the additional information back to the attention mechanism for the entire clip.

Previous methods such as FOMM, Monkey-Net and face-vid2vid use explicit warping to map out a time series, and the information extracted from the source faces and the driving motion must be adapted to, and kept consistent with, this time series. Under this design, the final mapping of keypoints is quite rigid. In contrast, implicit warping uses a cross-modal attention layer, which needs less predefined bootstrapping in its workflow and can adapt to input from multiple frames. Nor does the workflow require warping on a per-keypoint basis: the system can select the most appropriate features from a series of images.

Implicit warping also reuses some of the keypoint-prediction components of the FOMM framework, and uses a simple U-Net to encode the derived spatial representation of the driving keypoints. A separate U-Net encodes the source image together with the derived spatial representation. Both networks can operate at resolutions ranging from 64px (for 256x256px output) to 384x384px.

Because this mechanism cannot automatically account for every possible change in pose and movement in a given video, additional keyframes are necessary and can be added on the fly.
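
The cross-modal attention described above can be illustrated with a minimal sketch, assuming ordinary scaled dot-product attention: one query from the driving frame attends to the keys of every source image at once, and a single softmax normalizes over all of them, so adding a keyframe simply adds candidate keys. The function `implicit_warp_query` and the nested-list layout are hypothetical illustrations, not NVIDIA's code; keypoint prediction and the U-Net encoders are omitted, and keys and values are plain vectors supplied by the caller.

```python
import math
from typing import List, Sequence

Vector = List[float]


def softmax(scores: Sequence[float]) -> List[float]:
    top = max(scores)  # subtract the max score for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def implicit_warp_query(query: Vector,
                        source_keys: List[List[Vector]],
                        source_values: List[List[Vector]]) -> Vector:
    """Attend from one driving-frame query to all locations of all source images.

    source_keys[i][j] is the key for location j of source image i, and
    source_values uses the same layout. Keys from every source image are
    pooled and normalized by one softmax (global attention), so adding a
    keyframe adds candidates instead of requiring a separate per-frame warp.
    """
    keys = [k for frame in source_keys for k in frame]
    values = [v for frame in source_values for v in frame]
    scale = 1.0 / math.sqrt(len(query))
    weights = softmax([sum(q * k for q, k in zip(query, key)) * scale for key in keys])
    dim = len(values[0])
    return [sum(w * v[d] for w, v in zip(weights, values)) for d in range(dim)]


# Hypothetical example: two source frames, two locations each, 2-D features.
keys = [[[1.0, 0.0], [0.0, 1.0]],
        [[1.0, 1.0], [-1.0, 0.0]]]
values = [[[10.0, 0.0], [0.0, 10.0]],
          [[5.0, 5.0], [0.0, 0.0]]]
print(implicit_warp_query([3.0, 0.0], keys, values))
```

Because the softmax runs over the pooled keys of all source frames rather than frame by frame, each output location can draw its features from whichever source image matches best, which is the property the article contrasts with per-frame explicit warping.
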

Without the ability to add such keyframes, keys that are not similar enough to the target motion point would be used automatically, lowering output quality. The researchers explain that even the key most similar to the query within a given set of keyframes may not be good enough to produce a good output. For example, suppose the source image shows a face with closed lips, while the driving image shows a face with open lips and exposed teeth. In this case, there is no appropriate key (and value) in the source image to drive the mouth region. The method overcomes this problem by learning additional image-independent key-value pairs, which compensate for information missing from the source image.

Although the current implementation is quite fast, at around 10 FPS on 512x512px images, the researchers believe a future version of the pipeline could be optimized with a factorized 1-D attention layer or a Spatial Reduction Attention (SRA) layer, as used in the Pyramid Vision Transformer. Because implicit warping uses global rather than local attention, it can predict factors that previous models cannot.

The researchers tested the system on the VoxCeleb2 dataset, the more challenging TED Talk dataset, and the TalkingHead-1KH dataset, comparing against baselines at 256x256px and at full 512x512px resolution, with metrics including FID, AlexNet-based LPIPS, and peak signal-to-noise ratio (PSNR). The compared frameworks include FOMM, face-vid2vid and AA-PCA. Since previous methods have little or no ability to use multiple keyframes, which is the main innovation of implicit warping, the researchers also devised comparable testing methods for them. Implicit warping outperforms most of the compared methods on most metrics. In the multi-keyframe reconstruction test, where the researchers used sequences of up to 180 frames and sampled frames at intervals, implicit warping came out ahead overall.
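
The image-independent key-value pairs can be sketched the same way, again assuming plain scaled dot-product attention: the extra pairs are simply appended to the keys and values taken from the source image before the softmax. In the trained model they would be learned parameters; here `extra_keys` and `extra_values` are hypothetical constants, and the 2-D vectors standing for "closed lips" and "open mouth" are toy values chosen only to mirror the researchers' example.

```python
import math
from typing import List

Vector = List[float]


def attend(query: Vector, keys: List[Vector], values: List[Vector]) -> Vector:
    """Scaled dot-product attention of a single query over a flat list of keys."""
    scale = 1.0 / math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) * scale for key in keys]
    top = max(scores)  # subtract the max score for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    dim = len(values[0])
    return [sum(e * v[d] for e, v in zip(exps, values)) / total for d in range(dim)]


def attend_with_extra_pairs(query: Vector,
                            source_keys: List[Vector],
                            source_values: List[Vector],
                            extra_keys: List[Vector],
                            extra_values: List[Vector]) -> Vector:
    """Attend over source key-value pairs plus image-independent extra pairs.

    In a trained model extra_keys/extra_values would be learned parameters
    shared across all inputs; here they are ordinary lists passed in.
    """
    return attend(query, source_keys + extra_keys, source_values + extra_values)


# Toy example (hypothetical values): the source only shows closed lips,
# while the driving frame asks for an open mouth.
closed_lips_key, closed_lips_value = [4.0, 0.0], [1.0, 0.0]
open_mouth_query = [0.0, 4.0]

# Without extra pairs, the only available key wins by default.
print(attend(open_mouth_query, [closed_lips_key], [closed_lips_value]))  # → [1.0, 0.0]

# A (hypothetically learned) extra pair supplies the missing appearance,
# so the output is dominated by its value instead.
print(attend_with_extra_pairs(open_mouth_query,
                              [closed_lips_key], [closed_lips_value],
                              extra_keys=[[0.0, 4.0]], extra_values=[[0.0, 1.0]]))
```

The first call shows the failure mode the researchers describe: a softmax always assigns full weight to the best available key, however poor the match. Appending extra pairs gives the attention an alternative when no source location fits.
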

In that test, the method's reconstructions improve as the number of source images increases, with scores improving on every metric, whereas the reconstructions of previous methods get worse as source images are added, contrary to expectations. A qualitative study with Amazon Mechanical Turk (AMT) workers also rated the results of implicit warping above those of the other methods.

Access to this kind of framework would let users create more coherent and longer video simulations and full-body deepfake videos, with a much greater range of motion than any of the frameworks the system was compared against.

However, research into more realistic image synthesis also raises concerns, because these techniques can easily be used for forgery, and the paper includes the standard disclaimer: "If our method is used to create DeepFake products, it may have negative impacts. Malicious speech synthesis creates false images of people by transferring and conveying false information across identities, leading to identity theft or the spread of fake news." In controlled settings, however, the same technology can also be used for entertainment.

The paper also points out the system's potential for neural video reconstruction, as in Google's Project Starline. In such a framework, reconstruction happens mainly on the client side, using sparse motion information from the person at the other end. This approach is attracting growing interest from the research community, and some companies intend to implement low-bandwidth video calls by sending pure motion data or sparsely spaced keyframes, which are interpreted and interpolated into full HD video when they reach the target client.


The above is the detailed content of DeepFake has never been so real! How strong is Nvidia's latest 'implicit distortion”?. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

AI Hentai Generator

Generate AI Hentai for free.

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1377

1377

52

52

The Stable Diffusion 3 paper is finally released, and the architectural details are revealed. Will it help to reproduce Sora?

Mar 06, 2024 pm 05:34 PM

The Stable Diffusion 3 paper is finally released, and the architectural details are revealed. Will it help to reproduce Sora?

Mar 06, 2024 pm 05:34 PM

StableDiffusion3’s paper is finally here! This model was released two weeks ago and uses the same DiT (DiffusionTransformer) architecture as Sora. It caused quite a stir once it was released. Compared with the previous version, the quality of the images generated by StableDiffusion3 has been significantly improved. It now supports multi-theme prompts, and the text writing effect has also been improved, and garbled characters no longer appear. StabilityAI pointed out that StableDiffusion3 is a series of models with parameter sizes ranging from 800M to 8B. This parameter range means that the model can be run directly on many portable devices, significantly reducing the use of AI

Have you really mastered coordinate system conversion? Multi-sensor issues that are inseparable from autonomous driving

Oct 12, 2023 am 11:21 AM

Have you really mastered coordinate system conversion? Multi-sensor issues that are inseparable from autonomous driving

Oct 12, 2023 am 11:21 AM

The first pilot and key article mainly introduces several commonly used coordinate systems in autonomous driving technology, and how to complete the correlation and conversion between them, and finally build a unified environment model. The focus here is to understand the conversion from vehicle to camera rigid body (external parameters), camera to image conversion (internal parameters), and image to pixel unit conversion. The conversion from 3D to 2D will have corresponding distortion, translation, etc. Key points: The vehicle coordinate system and the camera body coordinate system need to be rewritten: the plane coordinate system and the pixel coordinate system. Difficulty: image distortion must be considered. Both de-distortion and distortion addition are compensated on the image plane. 2. Introduction There are four vision systems in total. Coordinate system: pixel plane coordinate system (u, v), image coordinate system (x, y), camera coordinate system () and world coordinate system (). There is a relationship between each coordinate system,

This article is enough for you to read about autonomous driving and trajectory prediction!

Feb 28, 2024 pm 07:20 PM

This article is enough for you to read about autonomous driving and trajectory prediction!

Feb 28, 2024 pm 07:20 PM

Trajectory prediction plays an important role in autonomous driving. Autonomous driving trajectory prediction refers to predicting the future driving trajectory of the vehicle by analyzing various data during the vehicle's driving process. As the core module of autonomous driving, the quality of trajectory prediction is crucial to downstream planning control. The trajectory prediction task has a rich technology stack and requires familiarity with autonomous driving dynamic/static perception, high-precision maps, lane lines, neural network architecture (CNN&GNN&Transformer) skills, etc. It is very difficult to get started! Many fans hope to get started with trajectory prediction as soon as possible and avoid pitfalls. Today I will take stock of some common problems and introductory learning methods for trajectory prediction! Introductory related knowledge 1. Are the preview papers in order? A: Look at the survey first, p

DualBEV: significantly surpassing BEVFormer and BEVDet4D, open the book!

Mar 21, 2024 pm 05:21 PM

DualBEV: significantly surpassing BEVFormer and BEVDet4D, open the book!

Mar 21, 2024 pm 05:21 PM

This paper explores the problem of accurately detecting objects from different viewing angles (such as perspective and bird's-eye view) in autonomous driving, especially how to effectively transform features from perspective (PV) to bird's-eye view (BEV) space. Transformation is implemented via the Visual Transformation (VT) module. Existing methods are broadly divided into two strategies: 2D to 3D and 3D to 2D conversion. 2D-to-3D methods improve dense 2D features by predicting depth probabilities, but the inherent uncertainty of depth predictions, especially in distant regions, may introduce inaccuracies. While 3D to 2D methods usually use 3D queries to sample 2D features and learn the attention weights of the correspondence between 3D and 2D features through a Transformer, which increases the computational and deployment time.

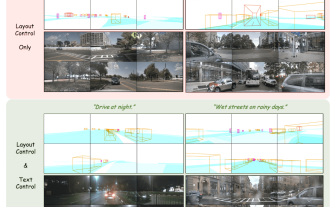

The first multi-view autonomous driving scene video generation world model | DrivingDiffusion: New ideas for BEV data and simulation

Oct 23, 2023 am 11:13 AM

The first multi-view autonomous driving scene video generation world model | DrivingDiffusion: New ideas for BEV data and simulation

Oct 23, 2023 am 11:13 AM

Some of the author’s personal thoughts In the field of autonomous driving, with the development of BEV-based sub-tasks/end-to-end solutions, high-quality multi-view training data and corresponding simulation scene construction have become increasingly important. In response to the pain points of current tasks, "high quality" can be decoupled into three aspects: long-tail scenarios in different dimensions: such as close-range vehicles in obstacle data and precise heading angles during car cutting, as well as lane line data. Scenes such as curves with different curvatures or ramps/mergings/mergings that are difficult to capture. These often rely on large amounts of data collection and complex data mining strategies, which are costly. 3D true value - highly consistent image: Current BEV data acquisition is often affected by errors in sensor installation/calibration, high-precision maps and the reconstruction algorithm itself. this led me to

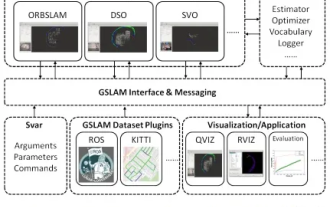

GSLAM | A general SLAM architecture and benchmark

Oct 20, 2023 am 11:37 AM

GSLAM | A general SLAM architecture and benchmark

Oct 20, 2023 am 11:37 AM

Suddenly discovered a 19-year-old paper GSLAM: A General SLAM Framework and Benchmark open source code: https://github.com/zdzhaoyong/GSLAM Go directly to the full text and feel the quality of this work ~ 1 Abstract SLAM technology has achieved many successes recently and attracted many attracted the attention of high-tech companies. However, how to effectively perform benchmarks on speed, robustness, and portability with interfaces to existing or emerging algorithms remains a problem. In this paper, a new SLAM platform called GSLAM is proposed, which not only provides evaluation capabilities but also provides researchers with a useful way to quickly develop their own SLAM systems.

'Minecraft' turns into an AI town, and NPC residents role-play like real people

Jan 02, 2024 pm 06:25 PM

'Minecraft' turns into an AI town, and NPC residents role-play like real people

Jan 02, 2024 pm 06:25 PM

Please note that this square man is frowning, thinking about the identities of the "uninvited guests" in front of him. It turned out that she was in a dangerous situation, and once she realized this, she quickly began a mental search to find a strategy to solve the problem. Ultimately, she decided to flee the scene and then seek help as quickly as possible and take immediate action. At the same time, the person on the opposite side was thinking the same thing as her... There was such a scene in "Minecraft" where all the characters were controlled by artificial intelligence. Each of them has a unique identity setting. For example, the girl mentioned before is a 17-year-old but smart and brave courier. They have the ability to remember and think, and live like humans in this small town set in Minecraft. What drives them is a brand new,

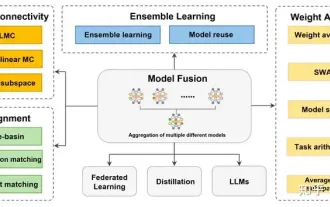

Review! Deep model fusion (LLM/basic model/federated learning/fine-tuning, etc.)

Apr 18, 2024 pm 09:43 PM

Review! Deep model fusion (LLM/basic model/federated learning/fine-tuning, etc.)

Apr 18, 2024 pm 09:43 PM

In September 23, the paper "DeepModelFusion:ASurvey" was published by the National University of Defense Technology, JD.com and Beijing Institute of Technology. Deep model fusion/merging is an emerging technology that combines the parameters or predictions of multiple deep learning models into a single model. It combines the capabilities of different models to compensate for the biases and errors of individual models for better performance. Deep model fusion on large-scale deep learning models (such as LLM and basic models) faces some challenges, including high computational cost, high-dimensional parameter space, interference between different heterogeneous models, etc. This article divides existing deep model fusion methods into four categories: (1) "Pattern connection", which connects solutions in the weight space through a loss-reducing path to obtain a better initial model fusion