Who can make a Chinese version of ChatGPT? How to do it?

In December 2022, ChatGPT was born. With a nuclear-bomb-level result, OpenAI changed the paradigm of both scientific research and engineering applications. In China, ChatGPT has drawn widespread attention and deep discussion. Over the past month, I visited major universities, research institutes, big tech companies, startups, and venture capital firms; from Beijing to Shanghai, Hangzhou, and Shenzhen, I talked with all the leading players. The Game of Scale has already begun in China. Given the huge gap between China's domestic technology and ecosystem and the world's leading edge, how can the players at the center of the storm pull this off? Who can do it?

"When Qin lost its deer, all under heaven gave chase." (Records of the Grand Historian, "Biography of the Marquis of Huaiyin")

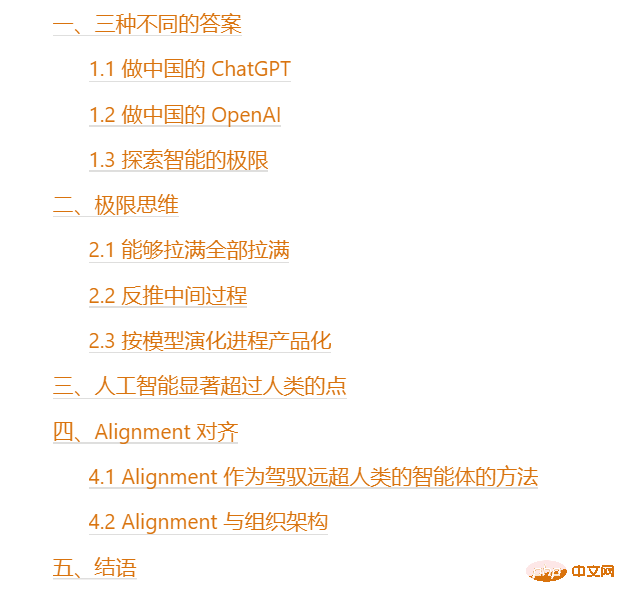

1. Three Answers

Whenever I meet a startup, I ask the same question: "ChatGPT is right there. What do you want to do?" I get roughly three different answers. The first answer is clear-cut: to build China's ChatGPT.

1.1 Make China’s ChatGPT

Because it is there, I want to reproduce it and build a domestic version. This is classic product-oriented Chinese Internet thinking, and it has also been the standard business model of the Chinese Internet for the past two decades: Silicon Valley builds something first, and then we copy it.

The problem, first of all, is that ChatGPT is not like a ride-hailing app: it is far harder to reproduce. From the standpoint of people, GPT is the product of continuous research since 2015 by the world's top scientists and engineers. OpenAI's chief scientist, Ilya Sutskever[1], firmly believes that AGI can be achieved. A student of Turing Award winner Geoffrey Hinton, he has worked on deep learning since 2007. His papers have 370,000 citations and land precisely on every key milestone of deep learning over the past decade. Even with such a strong team, it took four years to go from GPT-2 to GPT-3.5, which gives a sense of how hard the science and engineering are.

Moreover, the first-generation ChatGPT was a demo that OpenAI put out after finetuning its GPT-3.5 base model on dialogue data for two weeks. The real strength here is not the ChatGPT product but the underlying GPT-3.5 base model, and that model is still evolving. In 2022 alone, the GPT-3.5 series went through three major versions[2], each significantly stronger than the last; likewise, in the two months since its release, ChatGPT has received four minor updates[3], each clearly better than the previous one along a single dimension. All of OpenAI's models keep evolving and getting stronger over time.

This also means that focusing only on the current ChatGPT product is like the man who carved a mark on the side of his boat to find the sword he had dropped overboard. When ChatGPT appeared, it crushed existing voice assistants as if striking from a higher dimension. If you cannot see the base model evolving, then even if you spend a year or two building something similar, OpenAI's base model will have kept getting stronger in the meantime. If OpenAI then finetunes an even stronger product on that stronger base model, will you be crushed all over again?

Carving a mark on the boat to look for the sword will not work.

1.2 To be China’s OpenAI

The second answer is to be China's OpenAI. Players who give this answer have stepped outside classic Chinese Internet product thinking. They see not just a single product but also the powerful force driving the continuous evolution of the base model behind it, a force that comes from a high density of cutting-edge talent and an advanced organizational structure.

- Density of cutting-edge talent: not one person pooling resources to lead a team and handing packaged tasks down the hierarchy, but a group of top people who combine science and engineering working side by side.

- Advanced organizational structure: the Language team and the Alignment team iterate together, while the Scaling team and the Data team beneath them provide infrastructure. Each team is very small but has clear goals and a clear path, with highly concentrated resources, all heading toward AGI.

So if you want to do this, you must look beyond the product to the talent and organizational structure behind it. Ranked by scarcity: people >> GPUs >> money.

The problem, however, is that different soils encourage innovation to different degrees. When OpenAI was founded in 2015, its investors believed in AGI even though no profit was in sight. Now that GPT exists, domestic investors also believe in AGI, but perhaps in a different sense: do you believe that AGI can make money, or that AGI can advance human development?

Furthermore, even if a Chinese OpenAI were founded here tomorrow, could it strike the kind of deal with a domestic cloud provider that OpenAI struck with Microsoft? Training and serving large models is enormously expensive and needs a cloud computing engine behind it. Microsoft can go all in and put the whole of Azure behind OpenAI[4]. In China, would Alibaba Cloud do the same for a startup?

Organizational structure matters a great deal. Only cutting-edge talent and an advanced organizational structure can drive the continuous iteration and evolution of intelligence; but the organization must also adapt to the soil it grows in and find its own way to flourish.

1.3 Exploring the limits of intelligence

The third answer is to explore the limits of intelligence. This is the best answer I have heard. It goes far beyond the carve-the-boat thinking of classic Internet products, and it also recognizes the importance of organizational structure and talent density. More importantly, it sees the future: it sees model evolution and product iteration, and it asks how to solve the deepest and hardest problems with the most innovative methods.

This involves thinking about the extremes of large models.

2. Extreme Thinking

The current ChatGPT / GPT-3.5 is clearly an intermediate state. It still has plenty of room for significant improvement that can be realized soon. Points that can be strengthened include:

- Longer input box: GPT-3.5 initially had a context of up to 8,000 tokens; the current ChatGPT appears to model contexts of more than 10,000. This length can clearly keep growing. By incorporating efficient attention[5] and recursive encoding[6], context length should be able to scale to one hundred thousand or even one million tokens.

- Larger models and more data: model size has not reached its limit and can keep scaling to the trillion-parameter (T) scale[7]; data size has not reached its limit either, and human feedback data grows every day.

- Multimodality: after adding multimodal data (audio, images), and especially video, the overall training data can grow by another two orders of magnitude. This lets known capabilities keep increasing linearly according to the scaling law, and new emergent capabilities may keep appearing. For example, after seeing pictures of various geometric shapes alongside algebra problems, the model may learn analytic geometry on its own.

- Specialization: existing models are roughly at graduate-student level in the liberal arts but only at high-school or first- to second-year undergraduate level in the sciences. Existing work has shown that a model's skill points can be shifted from one direction to another, which means that even without any scaling, we can push the model toward a target direction by sacrificing other abilities. For example, by sacrificing the model's science ability, its liberal-arts ability could be pushed from graduate-student level to the level of an expert professor.

These four points are only what is visible at this stage: they can be strengthened immediately but have not been yet. As time passes and models evolve, more dimensions along which scale can be expressed will emerge. This means we need extreme thinking: what will the model look like once every dimension that can be filled has been filled?

2.1 Fill everything that can be filled

The model's input box can be lengthened, its size can keep growing, its data can keep increasing, multimodal data can be integrated, and its degree of specialization can keep rising; every one of these dimensions can keep being pushed upward, and the model has not yet reached its limit. Approaching the limit is a process. How will the model's capabilities develop along the way?

- Log-linear curve: some capabilities will grow along a log-linear curve[8], for example finetuning on a particular task. As the finetuning data grows exponentially, the model's ability on the corresponding task grows linearly. These capabilities will grow stronger predictably.

- Phase change curve: some capabilities will keep emerging with scaling[9], as in the analytic geometry example above. As the dimensions that can be filled keep being filled, new and unpredictable emergent abilities will appear.

- Polynomial curve? Once the model is strong enough and aligned with humans well enough, perhaps the data required for linear growth in some capabilities will break out of exponential growth and drop to polynomial. In other words, a sufficiently strong model may need only polynomial rather than exponential amounts of data to generalize. Human professional learning hints at this: someone who is not yet a domain expert needs exponential amounts of data to learn the domain, while someone who already is an expert needs only a very small amount of data to produce new insights and knowledge on their own.
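The log-linear curve in the first bullet can be made concrete with a little arithmetic. The sketch below assumes a hypothetical curve score = a + b * log10(n), where n is the number of finetuning examples; the constants `A_INTERCEPT` and `B_SLOPE` and both function names are made-up placeholders for illustration, not measurements from any model. Under this assumption every 10x increase in data buys the same fixed gain, so a linear rise in capability costs exponentially more data.

```python
import math

# Hypothetical log-linear curve: score = a + b * log10(n_examples).
# Both constants are illustrative placeholders, not measured values.
A_INTERCEPT = 20.0  # hypothetical score with a single finetuning example
B_SLOPE = 10.0      # hypothetical points gained per 10x more data


def log_linear_score(n_examples: int, a: float = A_INTERCEPT, b: float = B_SLOPE) -> float:
    """Task score predicted by the assumed log-linear curve."""
    if n_examples < 1:
        raise ValueError("n_examples must be >= 1")
    return a + b * math.log10(n_examples)


def examples_needed(target_score: float, a: float = A_INTERCEPT, b: float = B_SLOPE) -> float:
    """Invert the curve: required data grows exponentially with the target score."""
    return 10 ** ((target_score - a) / b)


if __name__ == "__main__":
    for n in (10, 100, 1_000, 10_000):
        print(f"{n:>6} examples -> score {log_linear_score(n):.1f}")
    # Each additional B_SLOPE points of score costs 10x more data.
    print(examples_needed(50.0), examples_needed(60.0))
```

Reading the curve backward with `examples_needed` is the useful direction for planning: under this assumed shape, each additional fixed step in task score multiplies the data bill by ten.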

Therefore, under extreme thinking, if every dimension that can be filled is filled, the model is bound to keep getting stronger, with more and more emergent capabilities.

2.2 Work backward to the intermediate process

Once the limit process is clear, you can work backward from the limit state to the intermediate stages. Take lengthening the input box as an example:

- To grow the input box from the order of thousands to the order of tens of thousands, you may only need more GPUs plus GPU-memory optimization.

- To grow it further from tens of thousands to one hundred thousand, you may need linear attention[10] methods, because at that point adding GPU memory probably cannot keep up with the attention computation, which grows quadratically with the length of the input box.

- To grow it further from one hundred thousand to one million, you may need recursive encoding[11] and long-term memory[12] methods, because at that point linear attention may no longer keep GPU-memory growth in check.

In this way, we can infer which technologies each stage of scaling requires. This analysis applies not only to the length of the input box but also to the scaling of the other factors.
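The quadratic-versus-linear argument above can be illustrated with a small sketch. It assumes one particular linear-attention variant, the kernelized form with feature map elu(x) + 1 from Katharopoulos et al. (2020); the article's reference [10] may describe a different method. Both functions compute the same kernelized attention output, but `quadratic_kernel_attention` stores the full n x n similarity matrix, while `linear_attention` uses associativity to keep only a d x d_v running state. The helper `score_matrix_bytes` is a hypothetical name that estimates the size of one n x n score matrix in fp16.

```python
import math
import random
from typing import List

Matrix = List[List[float]]


def phi(x: float) -> float:
    """elu(x) + 1: a strictly positive feature map (one common linear-attention choice)."""
    return x + 1.0 if x > 0 else math.exp(x)


def feature_map(m: Matrix) -> Matrix:
    return [[phi(x) for x in row] for row in m]


def quadratic_kernel_attention(q: Matrix, k: Matrix, v: Matrix) -> Matrix:
    """Kernelized attention that materializes the full n x n similarity matrix."""
    fq, fk = feature_map(q), feature_map(k)
    sims = [[sum(a * b for a, b in zip(qi, kj)) for kj in fk] for qi in fq]  # n x n
    dv = len(v[0])
    out = []
    for row in sims:
        total = sum(row)
        out.append([sum(s * vj[c] for s, vj in zip(row, v)) / total for c in range(dv)])
    return out


def linear_attention(q: Matrix, k: Matrix, v: Matrix) -> Matrix:
    """Same output via associativity, phi(Q) (phi(K)^T V); the kv state is only d x d_v."""
    fq, fk = feature_map(q), feature_map(k)
    d, dv = len(fk[0]), len(v[0])
    kv = [[0.0] * dv for _ in range(d)]  # running sum of outer(phi(k_j), v_j)
    ksum = [0.0] * d                     # running sum of phi(k_j), for normalization
    for kj, vj in zip(fk, v):
        for a in range(d):
            ksum[a] += kj[a]
            for c in range(dv):
                kv[a][c] += kj[a] * vj[c]
    out = []
    for qi in fq:
        denom = sum(qi[a] * ksum[a] for a in range(d))
        out.append([sum(qi[a] * kv[a][c] for a in range(d)) / denom for c in range(dv)])
    return out


def score_matrix_bytes(n_tokens: int, bytes_per_entry: int = 2) -> int:
    """Bytes for one n x n attention score matrix (one head, one layer); fp16 by default."""
    return n_tokens * n_tokens * bytes_per_entry


def rand_matrix(rows: int, cols: int) -> Matrix:
    return [[random.gauss(0.0, 1.0) for _ in range(cols)] for _ in range(rows)]


if __name__ == "__main__":
    random.seed(0)
    n, d = 6, 4
    q, k, v = rand_matrix(n, d), rand_matrix(n, d), rand_matrix(n, d)
    exact = quadratic_kernel_attention(q, k, v)
    fast = linear_attention(q, k, v)
    diff = max(abs(x - y) for ra, rb in zip(exact, fast) for x, y in zip(ra, rb))
    print("max difference:", diff)
    for length in (10_000, 100_000, 1_000_000):
        print(f"{length:>9} tokens: {score_matrix_bytes(length) / 1e9:,.1f} GB per score matrix")
```

Both functions agree up to floating-point rounding; what differs is what they store. At 100,000 tokens, one fp16 score matrix is already 2 x 10^10 bytes (20 GB) per head per layer, whereas the `kv` summary in the linear form stays d x d_v regardless of sequence length. The trade-off is that this linear form replaces softmax with a kernel, so it changes the attention function itself.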

In this way, we can map out a clear technology roadmap for each intermediate stage, from current technology all the way to the limit of scaling.

2.3 Productization following the model's evolution

The model keeps evolving, but productization need not wait until the final model is complete: every major version of the model can be commercialized. Take OpenAI's productization path as an example:

- In 2020, training of the first-generation GPT-3 was completed, and the OpenAI API was opened[13].

- In 2021, training of the first-generation Codex was completed, and GitHub Copilot was opened[14].

- In 2022, training of GPT-3.5 was completed; it was finetuned on dialogue data into ChatGPT and released.

As these milestones show, every important version in the intermediate stage brings stronger model capabilities, and with them opportunities for productization.

More importantly, following the model's evolution, products can be adapted to the market at the productization stage. Learn from OpenAI's organizational structure to drive model evolution itself, but build products around the characteristics of the local market. This approach may let us learn from OpenAI's advanced experience while avoiding the problem of failing to adapt to local conditions.

3. The point at which artificial intelligence significantly surpasses humans

So far, we have argued that the model should be analyzed from the perspective of its evolution, and that its evolution should be discussed with extreme thinking. The points that can be strengthened immediately at this stage include the length of the input box, larger models and data, multimodal data, and the model's degree of specialization. Now let us take a longer-term view and think about how the model could be pushed further toward its limit over larger spans of time and space. We discuss:

From these perspectives, it is not unimaginable that artificial intelligence will surpass humans. This raises the next question: how do we control strong artificial intelligence that far exceeds humans?

4. Alignment

This is the problem that Alignment technology truly aims to solve. At the current stage, apart from AlphaGo surpassing the strongest humans at Go, AI has not surpassed the strongest humans in other areas (though ChatGPT may already have surpassed 95% of humans in the liberal arts, and it keeps improving). Before the model surpasses humans, Alignment's task is to make the model conform to human values and expectations; once the model keeps evolving past humans, Alignment's task becomes finding ways to control intelligent agents that far exceed humans.

4.1 Alignment as a method to control intelligent agents that far exceed humans

An obvious question: once AI surpasses humans, can human feedback still make it stronger and more disciplined? Is it out of control at that point? Not necessarily. Even if the model is far better than humans, we can still control it. Consider the relationship between athletes and coaches: a gold-medal athlete is already the strongest human in their event, but this does not mean the coach cannot train them. On the contrary, even a coach who is not as good as the athlete can still make the athlete stronger and more disciplined through various feedback mechanisms.

Similarly, in the middle and later stages of AI development, the relationship between humans and strong artificial intelligence may become that between coach and athlete. At that point, the ability humans need is not to accomplish a goal but to set a good goal, then measure whether the machine has achieved it well enough and suggest improvements. Research in this direction is still at a very early stage; this new discipline is called Scalable Oversight[15].

4.2 Alignment and Organizational Structure

On the road to strong artificial intelligence, not only must humans and AI be aligned; humans must also be highly aligned with one another. From the perspective of organizational structure, alignment involves:

5. Conclusion

In 2017, when I first entered NLP, I spent a great deal of effort on controllable generation. At that time, most so-called text style transfer meant changing a sentence's sentiment label: turning "good" into "bad" counted as a complete transfer. In 2018, I spent a lot of time studying how to make models change the style of sentences at the level of sentence structure, and I once mistakenly believed style transfer was nearly impossible. Now ChatGPT makes style transfer very easy. Tasks that once seemed impossible, things that were once extremely difficult, can now be done very easily with large language models. Throughout 2022, I tracked every version iteration from GPT-3 to GPT-3.5[11] and watched with my own eyes as it evolved step by step from weak to strong. This rate of evolution is not slowing down; it is accelerating. What once seemed like science fiction has become reality. Who knows what the future holds?

"The millet stands in rows; the sorghum is in sprout. I walk on slowly, my heart is shaken. The millet stands in rows; the sorghum is in ear. I walk on slowly, my heart is as if drunk." (The Book of Songs, "Shu Li")
