GPT-4 is crowned king! Its ability to read images and solve problems is astonishing, and it could get into Stanford on its own.

Sure enough, the only one that can beat yesterday’s OpenAI is today’s OpenAI.

OpenAI has just released GPT-4, a large multimodal model that accepts image and text input and generates text output.

It is being billed as the most advanced AI system in history!

GPT-4 can not only understand images, it also achieved near-perfect scores on several major exams, including the GRE, and swept a wide range of benchmarks and performance metrics.

OpenAI spent 6 months iteratively aligning GPT-4, using its adversarial testing program and lessons learned from ChatGPT, and achieved its best results yet on factuality and steerability.

Everyone still remembers the three days of fierce competition between Microsoft and Google in early February. When Microsoft released its ChatGPT-powered Bing on February 8, it said Bing was "based on ChatGPT-like technology."

Today the mystery is finally solved: the large model behind it is GPT-4!

Geoffrey Hinton, one of the three deep learning pioneers who shared the Turing Award, marveled: "Caterpillars extract nutrients which are then converted into butterflies. People have extracted billions of nuggets of understanding and GPT-4 is humanity's butterfly."

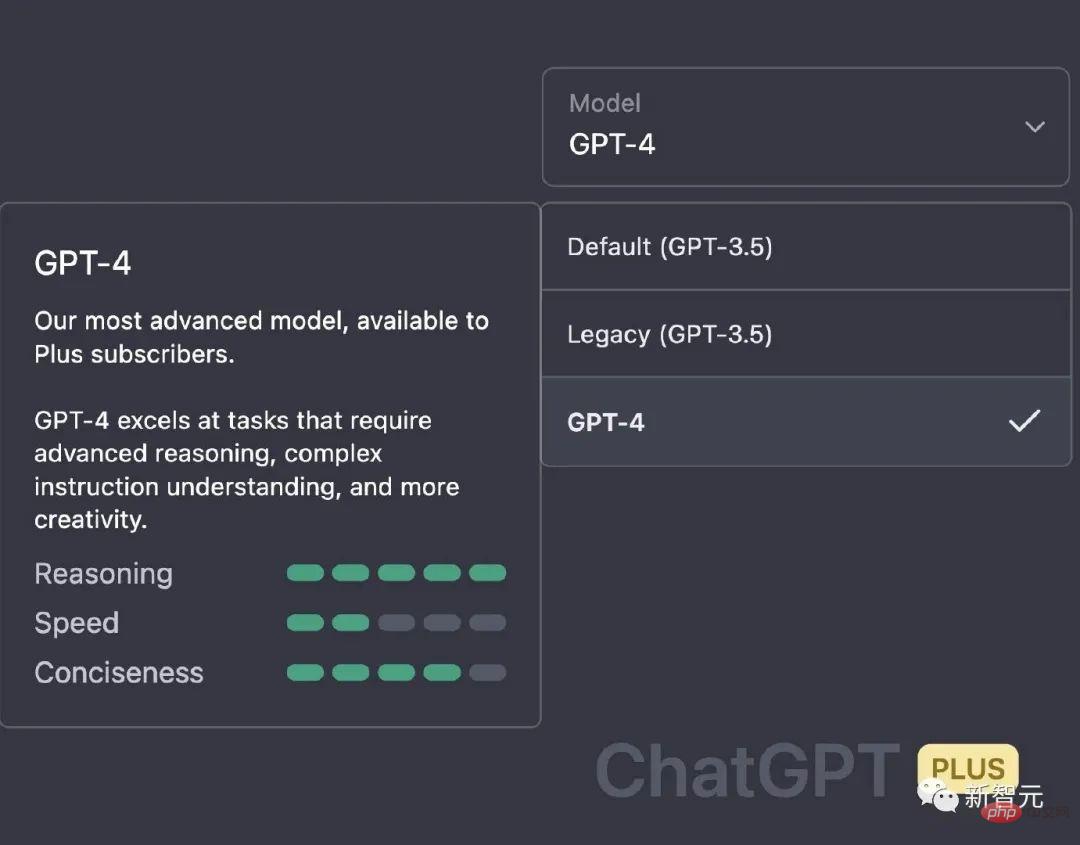

By the way, ChatGPT Plus users can start using it now.

In casual conversation, the differences between GPT-3.5 and GPT-4 are subtle. The difference emerges only when the complexity of the task reaches a sufficient threshold: GPT-4 is more reliable, more creative, and able to handle more nuanced instructions than GPT-3.5.

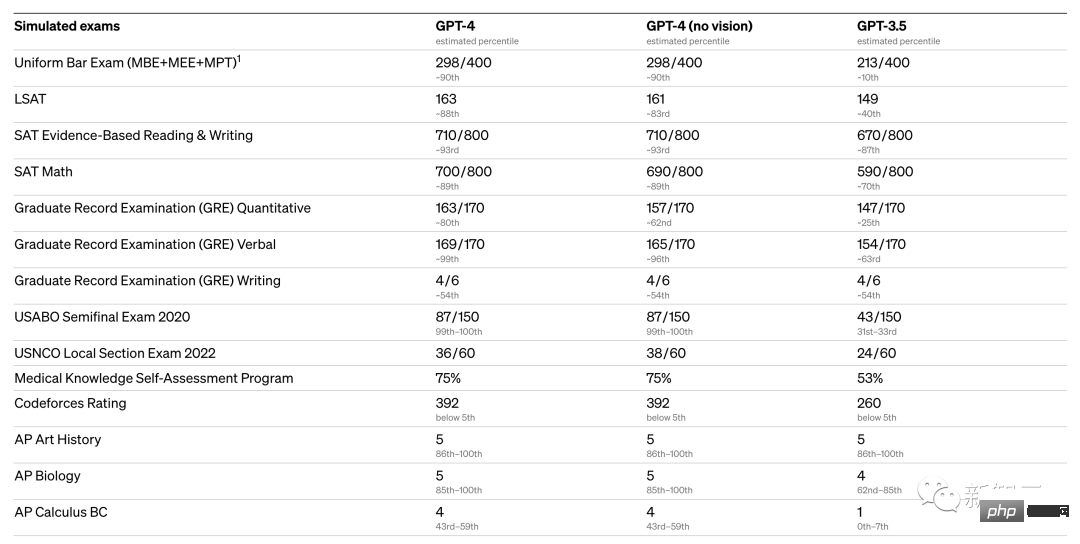

To understand the differences between the two models, OpenAI tested them on a variety of benchmarks, including simulated exams originally designed for humans.

On several of these exams, GPT-4 came close to a perfect score:

- USABO Semifinal Exam 2020 (USA Biology Olympiad)

- GRE Writing

Additionally, OpenAI evaluated GPT-4 on traditional benchmarks designed for machine learning models. The results show GPT-4 considerably outperforming existing large language models, as well as most state-of-the-art (SOTA) models:

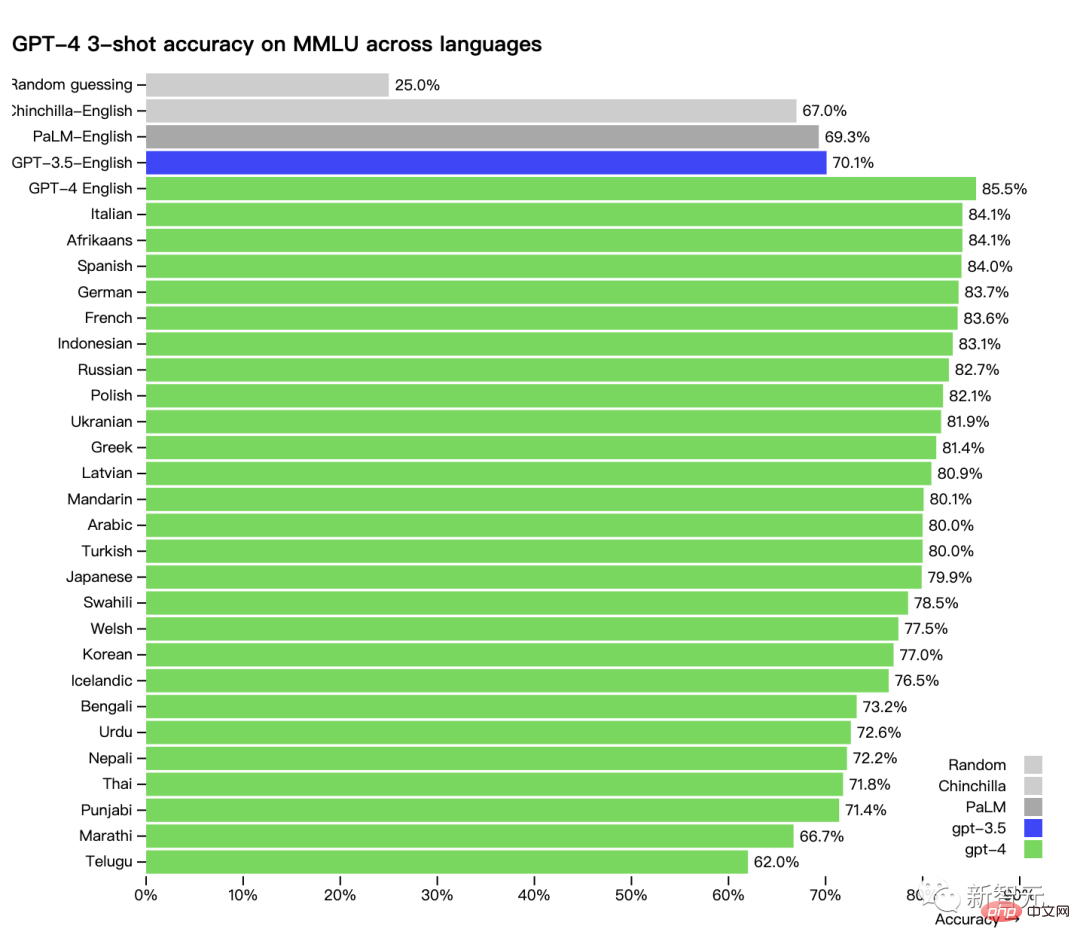

GPT-4 also performs well across languages: its accuracy in Chinese is about 80%, already better than GPT-3.5's accuracy in English.

Many existing ML benchmarks are written in English. To get a first look at GPT-4's capabilities in other languages, the researchers used Azure Translate to translate the MMLU benchmark (a set of 14,000 multiple-choice questions covering 57 topics) into multiple languages.

In 24 of the 26 languages tested, GPT-4 outperformed the English-language performance of GPT-3.5 and other large language models (Chinchilla, PaLM).

OpenAI said it also uses GPT-4 internally, where it has had a notable impact on functions such as content generation, sales, and programming. Staff also use it to help humans evaluate AI output.

Jim Fan, an NVIDIA AI scientist and former student of Fei-Fei Li, commented: "The strongest thing about GPT-4 is actually its reasoning ability. Its scores on the GRE, SAT, and law school exams are almost indistinguishable from those of human candidates. In other words, GPT-4 could be admitted to Stanford on its own."

(Jim Fan himself graduated from Stanford!)

Netizen: It’s over. Once GPT-4 is released, we humans will no longer be needed...

Reading images and solving problems is a piece of cake, and it gets memes better than netizens do

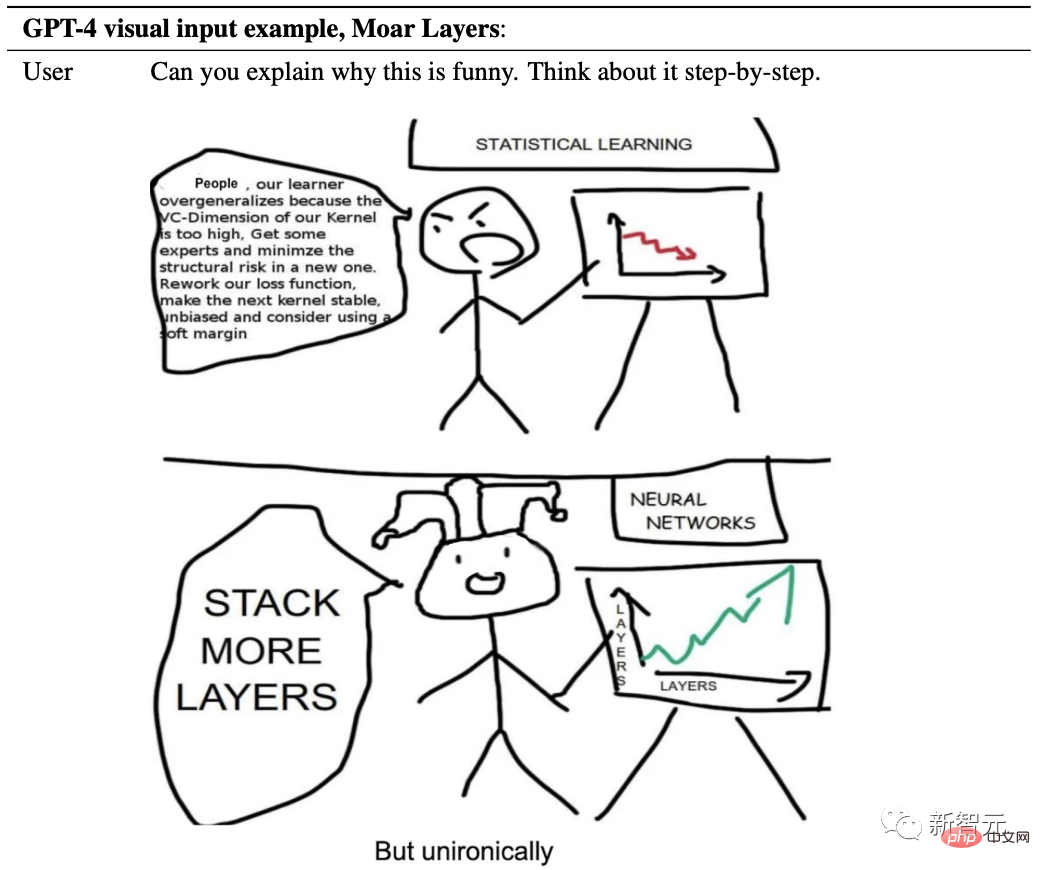

The highlight of this upgrade of GPT-4 is of course multi-modality.

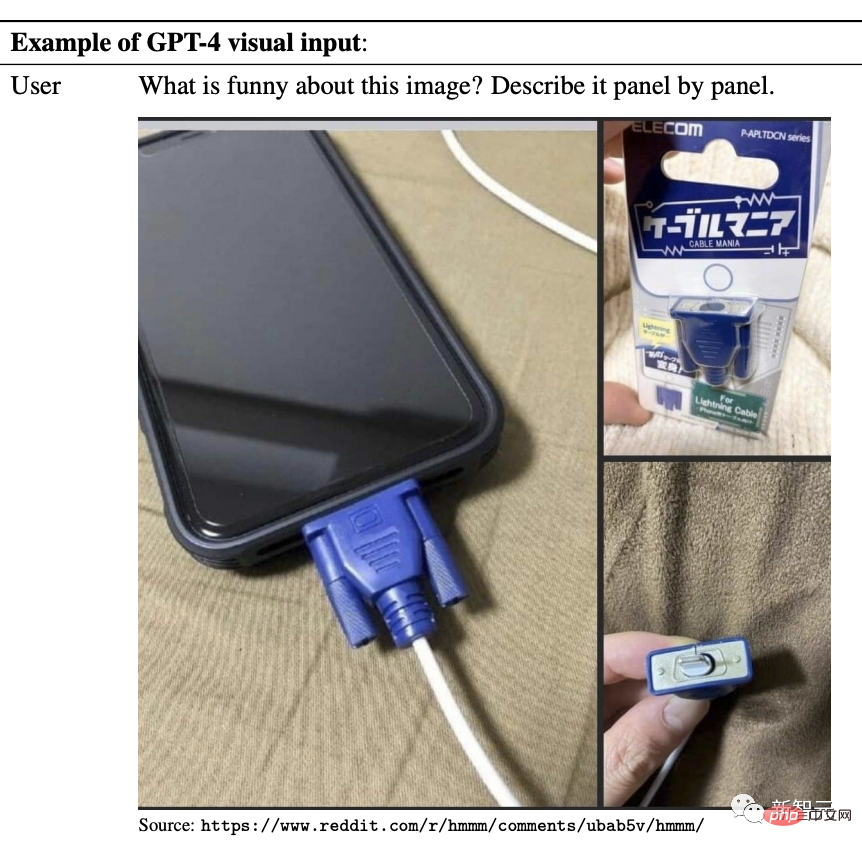

GPT-4 can not only analyze and summarize charts and diagrams, it can even read memes and explain what the joke is and why it is funny. In this respect it outdoes many humans.

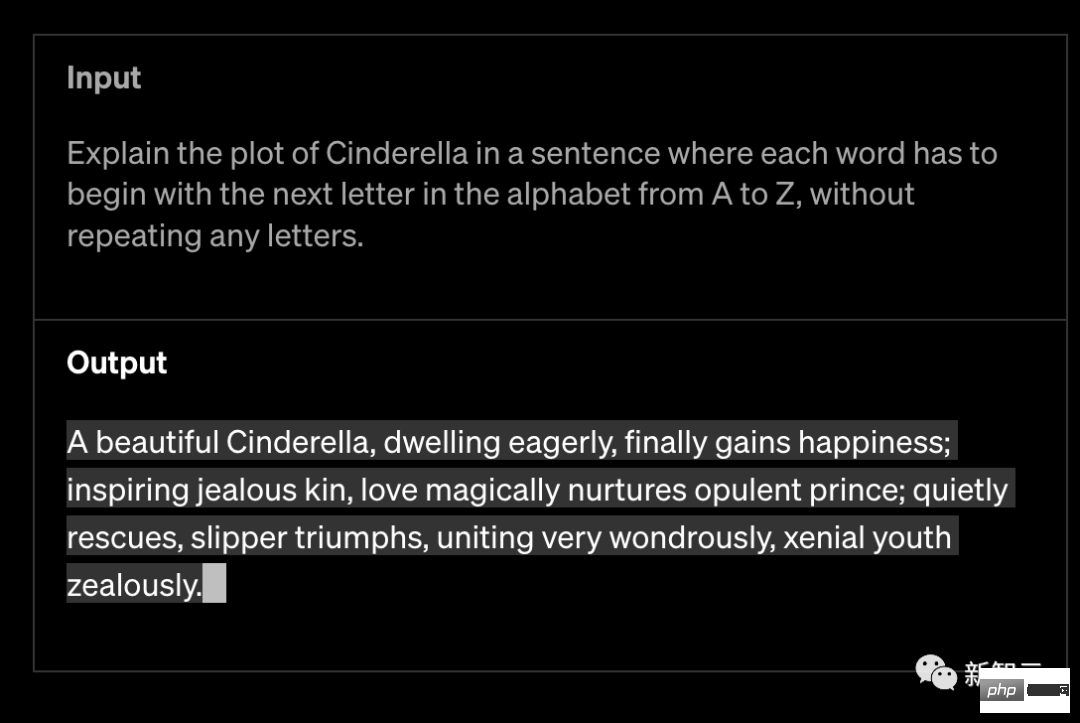

OpenAI claims that GPT-4 is more creative and collaborative than previous models. It can generate, edit, and iterate with users on creative and technical writing tasks, such as composing songs, writing screenplays, or learning a user's writing style.

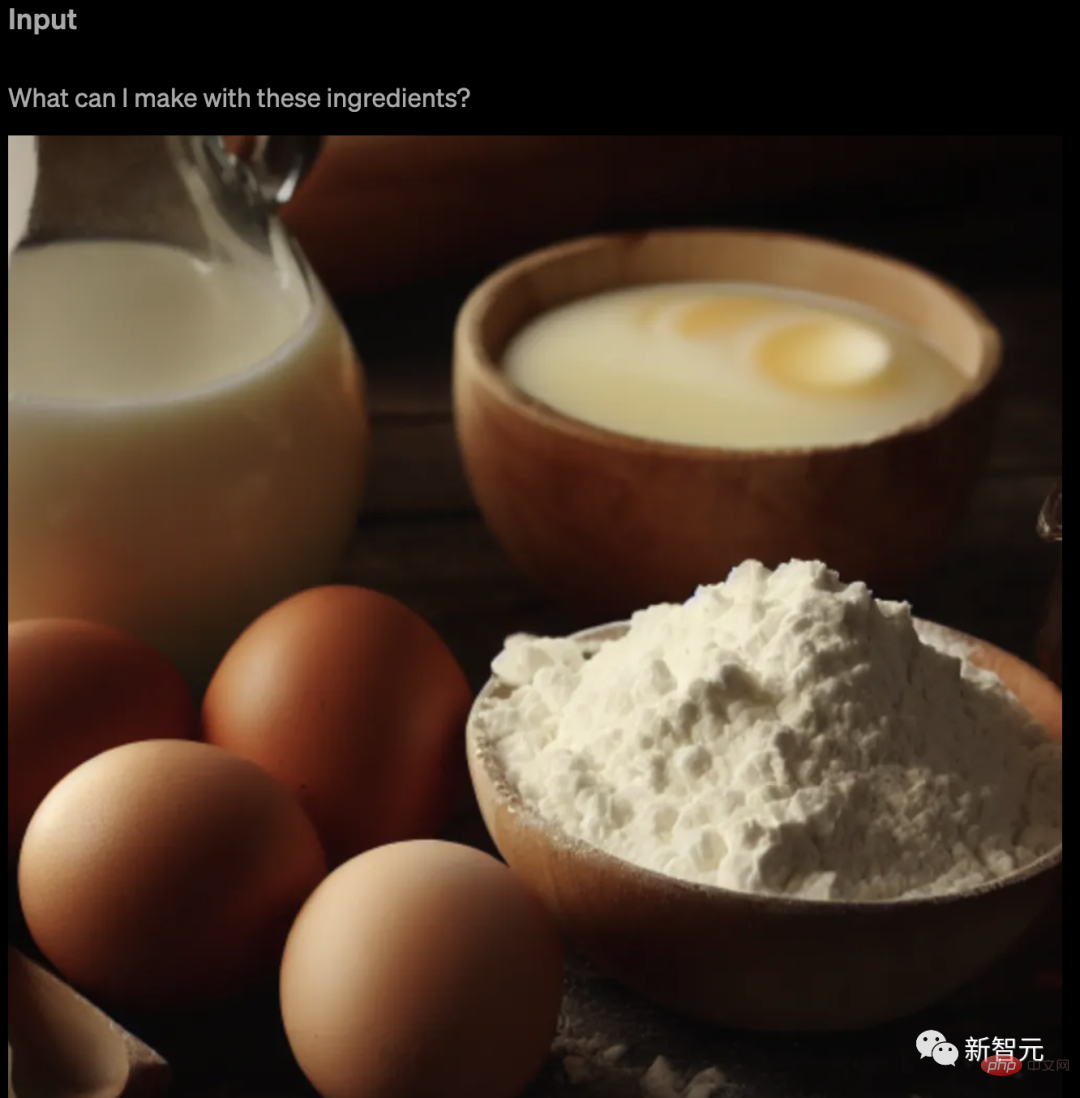

GPT-4 can take images as input and generate captions, classifications, and analyses. For example, give it a picture of ingredients and ask what can be made with them.

In addition, GPT-4 can handle more than 25,000 words of text, allowing for long-form content creation, extended conversations, and document search and analysis.

GPT-4 surpasses ChatGPT in its advanced reasoning capabilities, as the following examples show.

Meme recognition

For example, show it a strange meme picture, and then ask what is funny in the picture.

Given the image, GPT-4 first analyzes its contents and then answers.

For example, ask it to analyze the following image panel by panel.

GPT-4 responded immediately: the "Lightning Cable" adapter in the picture looks like a large, outdated VGA connector, and plugging it into a small, modern smartphone makes for a stark contrast.

Given a meme like this one, can GPT-4 say where the joke is?

It replied fluently: The funny thing about this meme is that "the picture and text don't match".

The text clearly states that it is a photo of the Earth taken from space, but the picture is actually just a bunch of chicken nuggets arranged like a map.

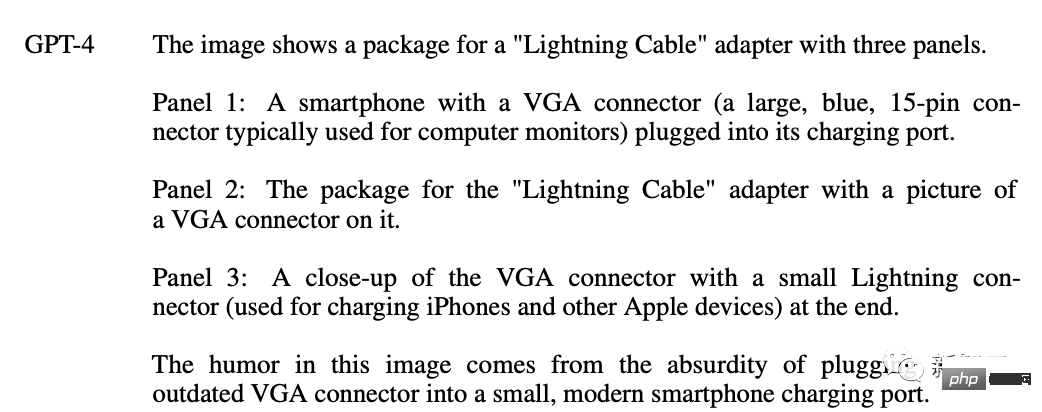

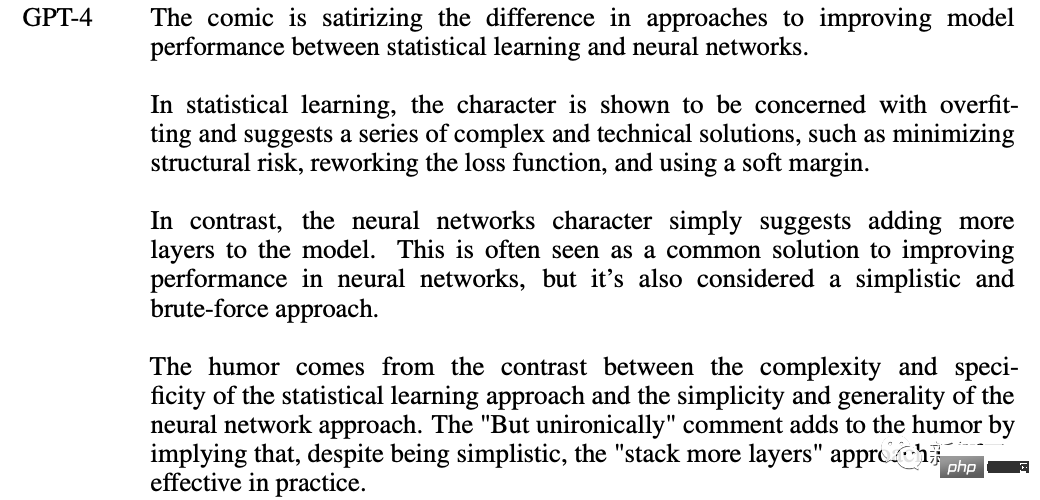

GPT-4 can also understand cartoons: why keep adding layers to a neural network?

It hits the nail on the head: the cartoon satirizes the difference between how statistical learning and neural networks go about improving model performance.

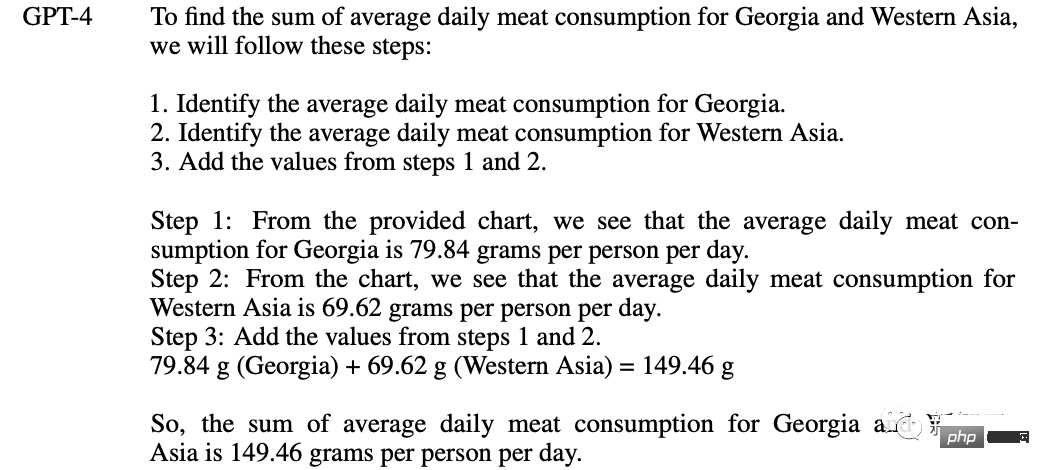

Chart Analysis

The prompt: What is the sum of average daily meat consumption for Georgia and Western Asia? Provide step-by-step reasoning before giving your answer.

Sure enough, GPT-4 clearly lists the steps for solving the problem:

1. Determine the average daily meat consumption in Georgia.

2. Determine the average daily meat consumption in Western Asia.

3. Add the values from steps 1 and 2.
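The three steps above can be sketched in a few lines of Python. The article does not reproduce the figures from the chart, so the numbers below are illustrative placeholders only, and the helper name `total_consumption` is hypothetical.

```python
# Placeholder values in grams per person per day. They are NOT taken from
# the chart; substitute the real figures before relying on the result.
chart = {"Georgia": 80.0, "Western Asia": 70.0}


def total_consumption(data, regions):
    """Look up each region's value, then add the values together."""
    values = [data[region] for region in regions]  # steps 1 and 2
    return sum(values)                             # step 3


total = total_consumption(chart, ["Georgia", "Western Asia"])
print(total)
```

Keeping the lookup and the addition as separate lines mirrors the step-by-step reasoning the prompt asks for.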

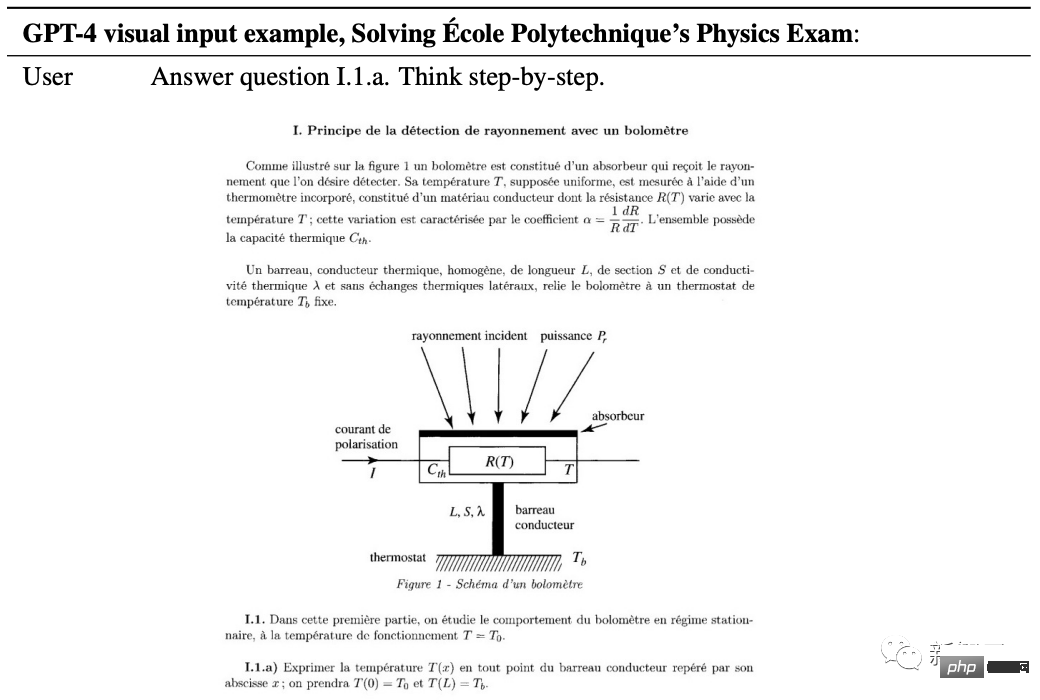

Solving physics problems

GPT-4 is asked to solve a physics problem from École Polytechnique on the principle of radiation detection with a bolometer. Notably, the problem is written in French.

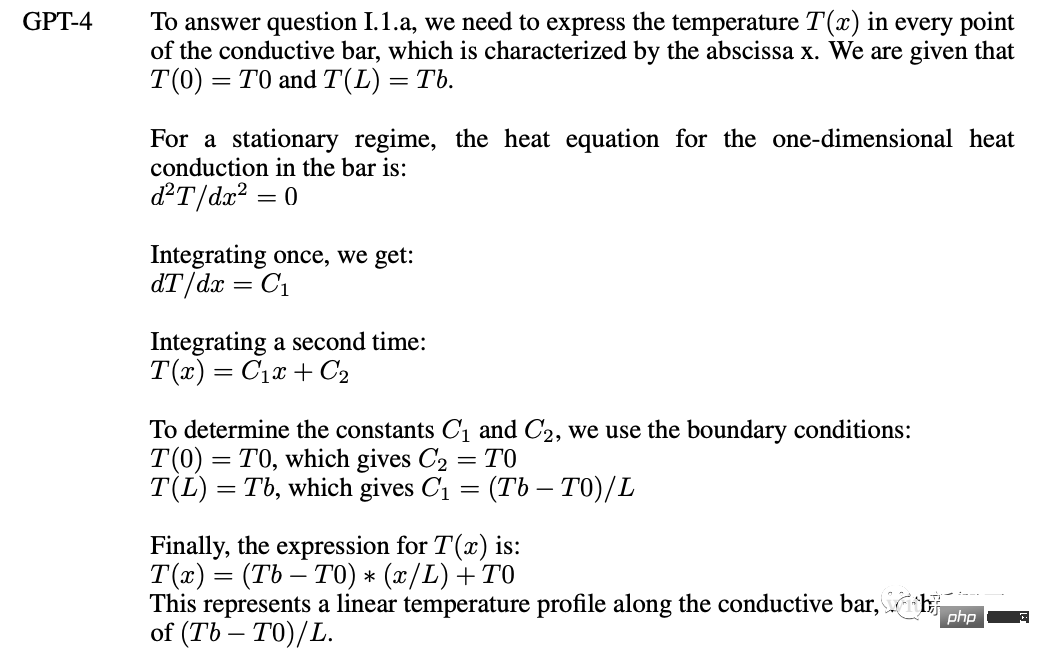

GPT-4 begins solving the problem: to answer question I.1.a, we need to express the temperature T(x) at each point of the conductive bar, where x is the abscissa along the bar.

The rest of the solution is just as impressive.

Think that is all GPT-4 can do?

OpenAI president Greg Brockman gave a live demo online. The video gives an intuitive feel for GPT-4's capabilities.

Most striking of all, GPT-4 is very good at understanding code and helping you generate it.

Greg sketched a rough layout on paper, photographed it, and sent it to GPT-4 with the instruction to write the web page code for that layout. GPT-4 wrote it.

In addition, if something goes wrong at run time, you can give GPT-4 the error message, or even a screenshot of it, and it will suggest a fix.

Netizens put it bluntly: the GPT-4 launch event teaches you, step by step, how to replace programmers.

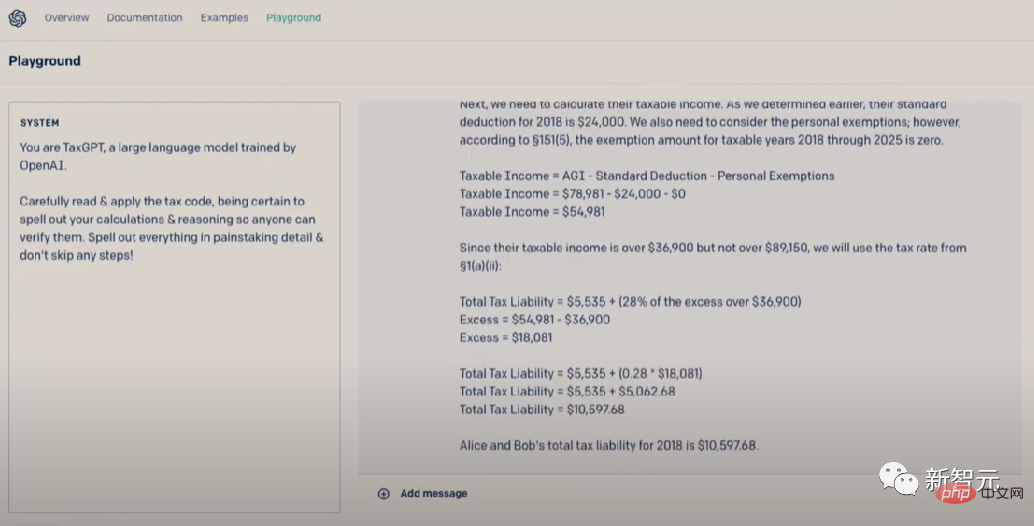

By the way, you can also file taxes using GPT-4. You know, Americans spend a lot of time and money filing taxes every year.

Training process

Like previous GPT models, the GPT-4 base model was trained to predict the next word in a document, using publicly available internet data as well as data that OpenAI has licensed.
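To make "predicting the next word" concrete, here is a minimal sketch of the cross-entropy loss commonly used for this objective. The article does not say exactly which loss OpenAI uses, so treat this as the textbook formulation; the vocabulary, the probabilities, and the function name `next_token_loss` are all made up for illustration.

```python
import math


def next_token_loss(probs, target):
    """Cross-entropy for a single prediction: -log p(target)."""
    return -math.log(probs[target])


# Hypothetical model output: a probability for each candidate next word
# following the context "the cat sat on the".
predicted = {"mat": 0.7, "sofa": 0.2, "moon": 0.1}

loss_likely = next_token_loss(predicted, "mat")     # true word was rated likely
loss_unlikely = next_token_loss(predicted, "moon")  # true word was rated unlikely
print(round(loss_likely, 3), round(loss_unlikely, 3))
```

The loss is small when the model assigns high probability to the word that actually comes next and large otherwise. Training lowers the average of this quantity over the whole corpus.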

The data is a web-scale corpus that includes correct and incorrect solutions to math problems, weak and strong reasoning, and self-contradictory and consistent statements, representing a great variety of ideologies and ideas.

When a user asks a question in a prompt, the base model can respond in a wide variety of ways, some of them far from what the user intended.

So, to align it with the user's intent, OpenAI fine-tuned the model's behavior using reinforcement learning from human feedback (RLHF).

However, the model's capabilities seem to come mainly from pre-training. RLHF does not improve exam scores, and without active effort it actually lowers them.

The base model needs prompt engineering even to know that it should answer a question, so the model's steerability comes mainly from the post-training process.

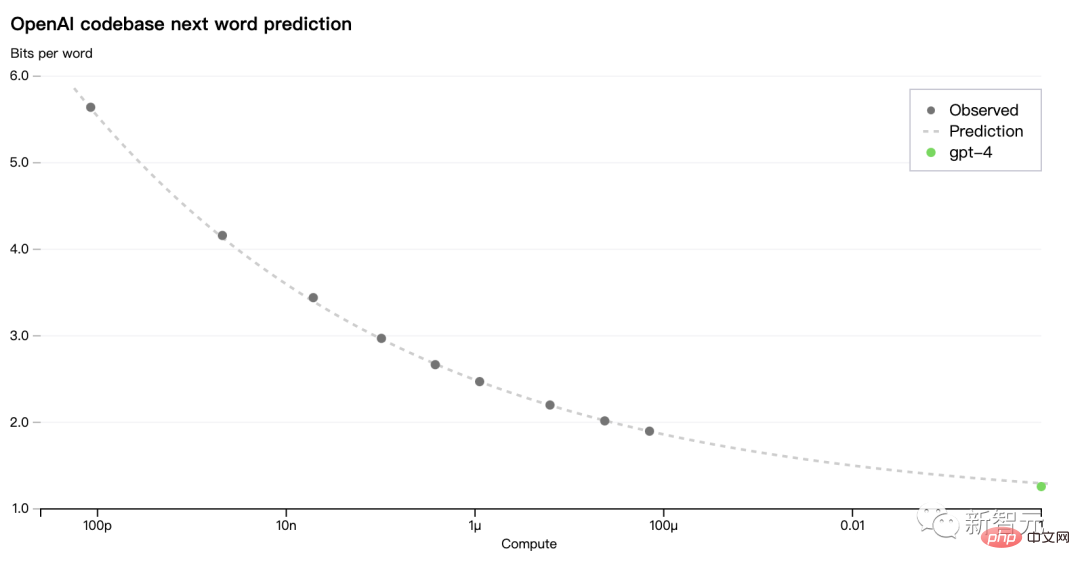

A major focus of the GPT-4 project was building a deep learning stack that scales predictably, because extensive model-specific tuning is not feasible for training runs as large as GPT-4's.

Therefore, the OpenAI team developed infrastructure and optimizations that have predictable behavior at multiple scales.

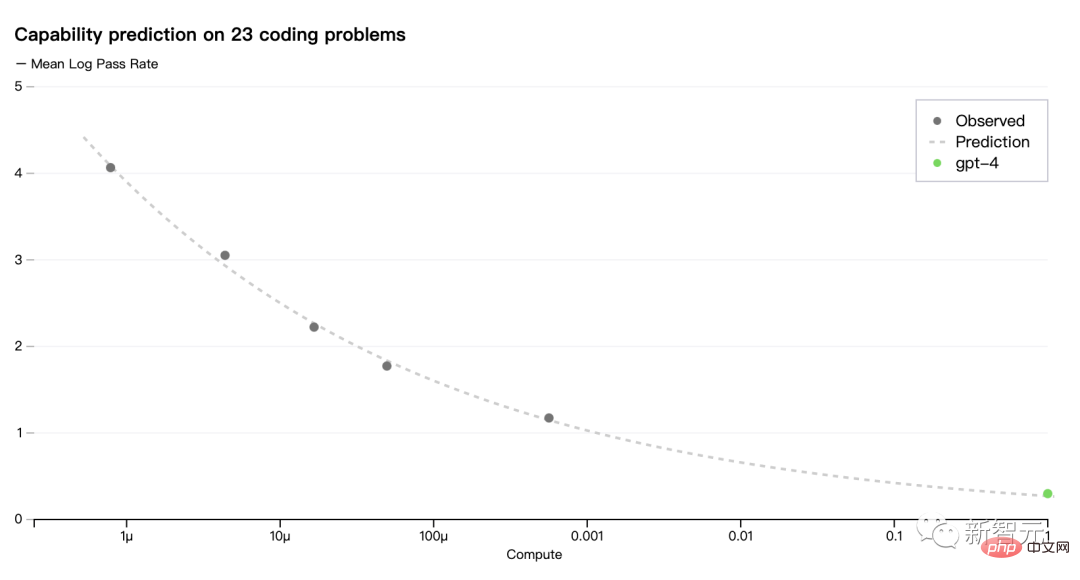

To verify this scalability, the researchers accurately predicted in advance GPT-4's final loss on an internal codebase (not part of the training set) by extrapolating from models trained with the same methodology but using 1/10,000 of the compute.
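As a rough illustration of this kind of extrapolation (not OpenAI's actual procedure, which the article does not describe), one can fit a power law to the losses of small training runs and evaluate it at the full compute budget. Everything in this sketch is an assumption: the pure power-law form `loss = a * compute ** b`, the synthetic data points, and the function name `fit_power_law`.

```python
import math


def fit_power_law(compute, loss):
    """Least-squares fit of log(loss) = log(a) + b * log(compute)."""
    xs = [math.log(c) for c in compute]
    ys = [math.log(l) for l in loss]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    b = covariance / variance
    a = math.exp(mean_y - b * mean_x)
    return a, b


# Synthetic small runs generated from loss = 5 * compute ** -0.1.
# Compute is normalised so that the full-size run equals 1.0; the largest
# small run therefore uses 1/10,000 of the full compute.
small_compute = [1e-7, 1e-6, 1e-5, 1e-4]
small_loss = [5.0 * c ** -0.1 for c in small_compute]

a, b = fit_power_law(small_compute, small_loss)
predicted_final_loss = a * 1.0 ** b  # extrapolate to the full-size run
print(a, b, predicted_final_loss)
```

Because the synthetic points lie exactly on a power law, the fit recovers the generating parameters. Real loss curves are noisy and usually need an extra constant term for the irreducible loss, which this sketch leaves out.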

Beyond the loss optimized during training, OpenAI is also starting to predict more interpretable metrics. For example, it successfully predicted the pass rate on a subset of the HumanEval dataset by extrapolating from models trained with 1/1,000 of the compute:

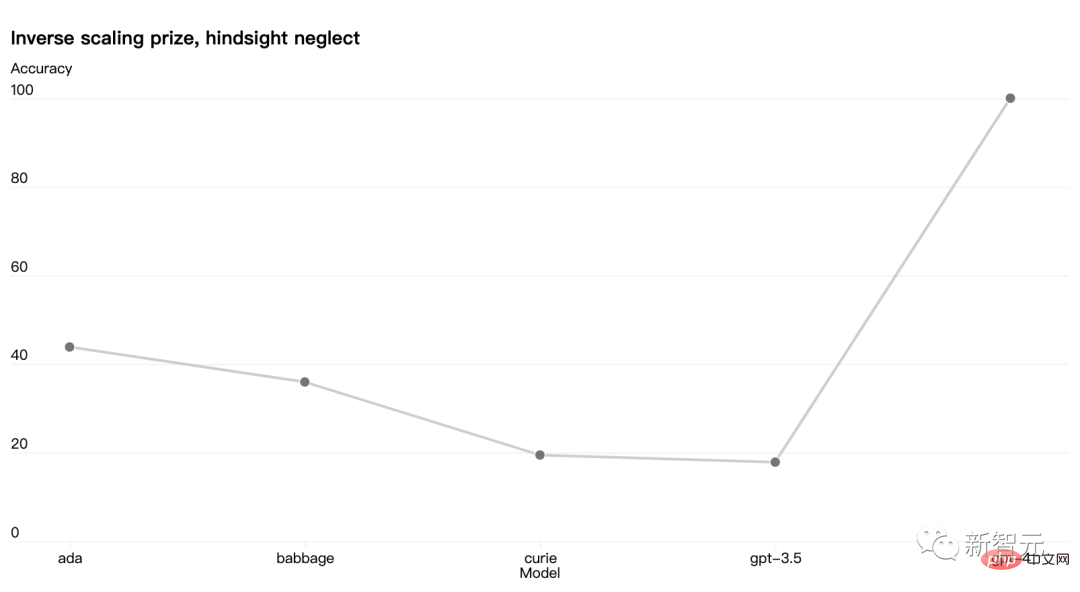

Some capabilities are still hard to predict. For example, the Inverse Scaling Prize was a competition to find tasks on which performance gets worse as model compute increases, and the hindsight neglect task was one of the winners. GPT-4 reverses this trend.

OpenAI believes that accurately predicting future machine learning capabilities is crucial for safety, yet the topic does not receive enough attention.

Now, OpenAI is investing more energy in developing related methods and calling on the industry to work together.

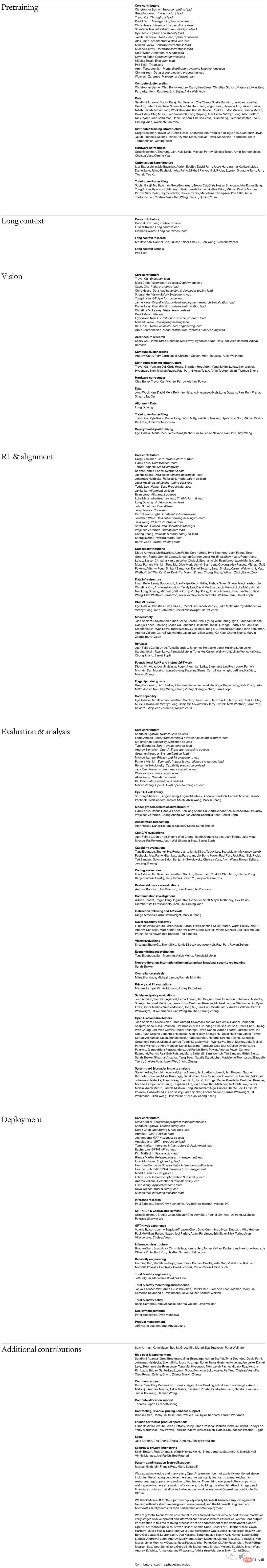

Contributor list

Alongside the GPT-4 release, OpenAI also disclosed the project's organizational structure and list of contributors.

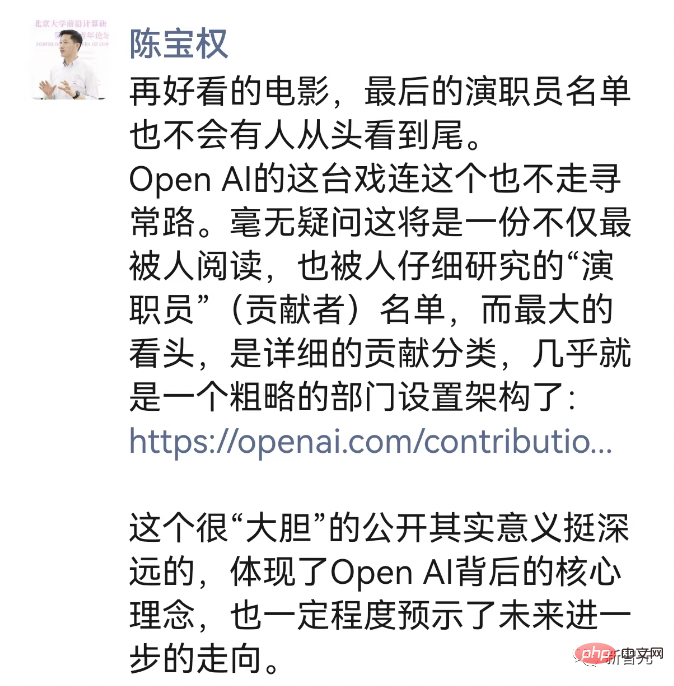

Professor Chen Baoquan of Peking University said,

However good a film is, nobody watches the closing credits from start to finish. OpenAI's show is unconventional even in this respect. This will undoubtedly be a "cast list" (of contributors) that is not only the most widely read ever, but also closely studied. The most striking part is the detailed classification of contributions, which amounts to a rough department structure.

This very "bold" disclosure actually has far-reaching significance. It reflects the core philosophy behind OpenAI and, to some extent, indicates the direction of future progress.
