Can't stop it! A diffusion model can now photoshop photos using only text

Editing a picture just by describing the change in words is something both Party A (the client) and Party B (the designer) wish for, but usually only Party B knows the pain involved. Now AI has taken on this hard problem.

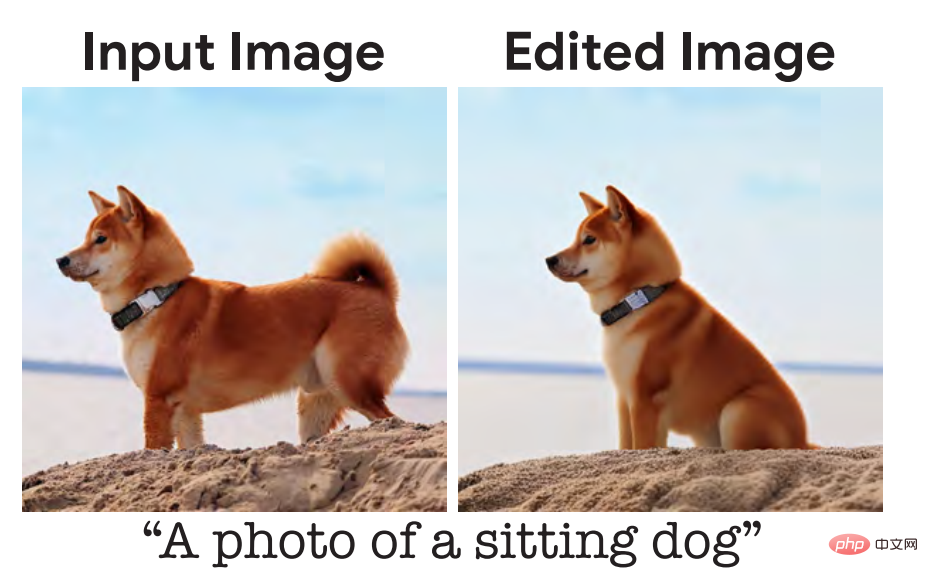

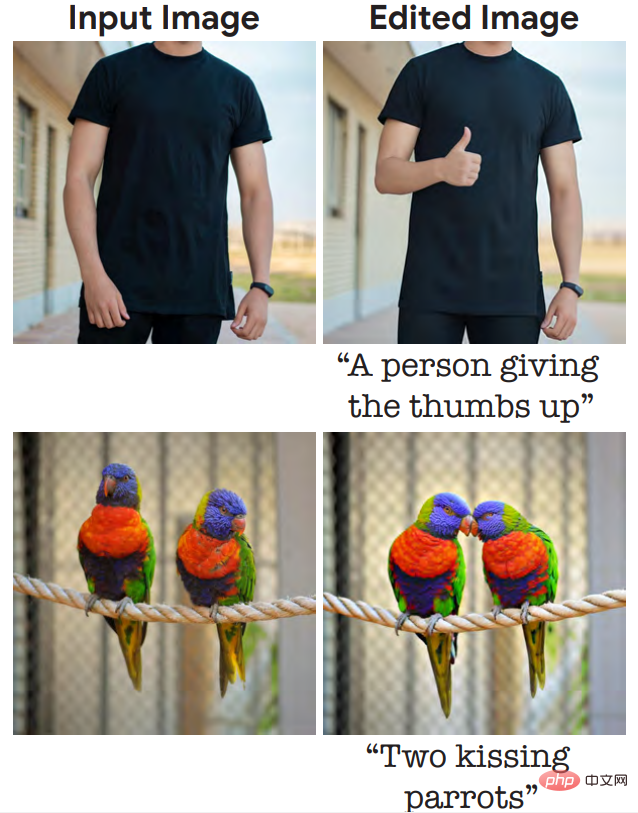

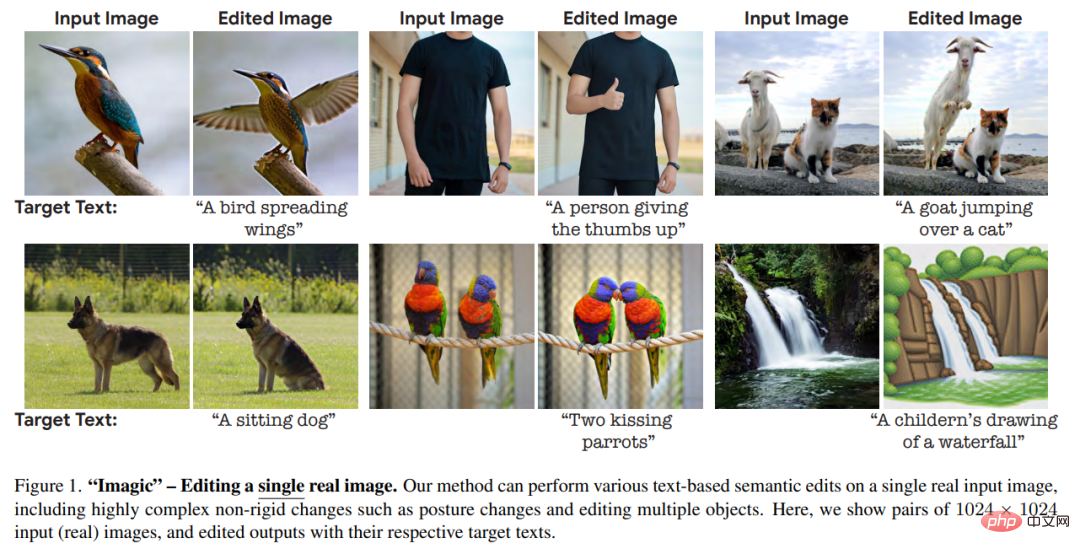

In a paper uploaded to arXiv on October 17, researchers from Google Research, the Technion (Israel Institute of Technology), and the Weizmann Institute of Science in Israel introduced Imagic, a real-image editing method based on diffusion models. It can photoshop real photos using only text, for example making a person give a thumbs up or making two parrots kiss:

"Please give me a thumbs-up." Diffusion model: No problem, leave it to me.

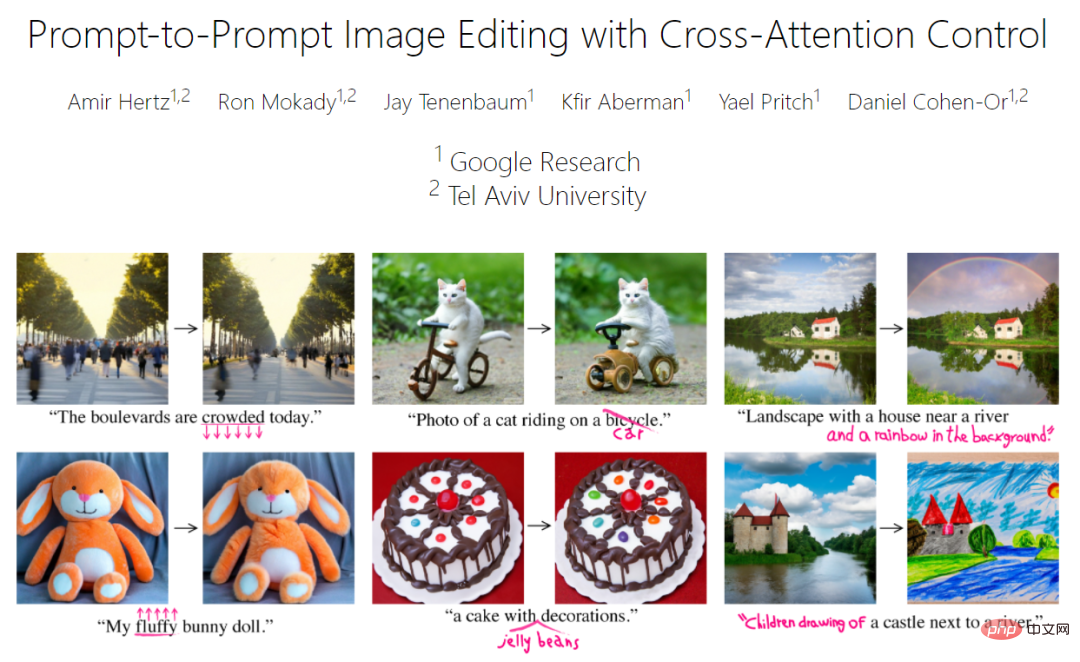

As the images in the paper show, the edited results still look very natural, and content outside the part being edited is not noticeably damaged. Related work includes Prompt-to-Prompt, previously developed by Google Research and Tel Aviv University in Israel (reference [16] in the Imagic paper):

Project link (including papers and code): https://prompt-to-prompt.github.io/

As one commenter remarked, "This field is changing so fast it's almost absurd." From now on, Party A (the client) really can make any change they want just by saying it.

Imagic Paper Overview

Paper link: https://arxiv.org/pdf/2210.09276.pdf

Applying substantial semantic edits to real photos has long been an interesting task in image processing, and it has attracted considerable interest from the research community as deep learning-based systems have advanced rapidly in recent years.

Using a simple natural-language text prompt to describe the edit we want (such as asking a dog to sit) closely matches the way humans communicate. Researchers have therefore developed many text-based image editing methods, and many of them work well.

However, current mainstream methods suffer from one or more of the following problems:

1. They are limited to a specific set of edits, such as painting over the image, adding objects, or transferring styles [6, 28];

2. They can only operate on images from a specific domain or on synthesized images [16, 36];

3. In addition to the input image, they require auxiliary inputs, such as an image mask indicating the desired edit location, multiple images of the same subject, or text describing the original image [6, 13, 40, 44].

The paper proposes a semantic image editing method, Imagic, to alleviate these problems. Given an input image to be edited and a single text prompt describing the target edit, the method performs complex non-rigid edits on real high-resolution images. The output image aligns well with the target text while preserving the overall background, structure, and composition of the original image.

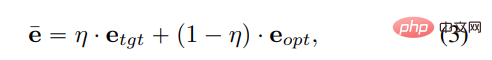

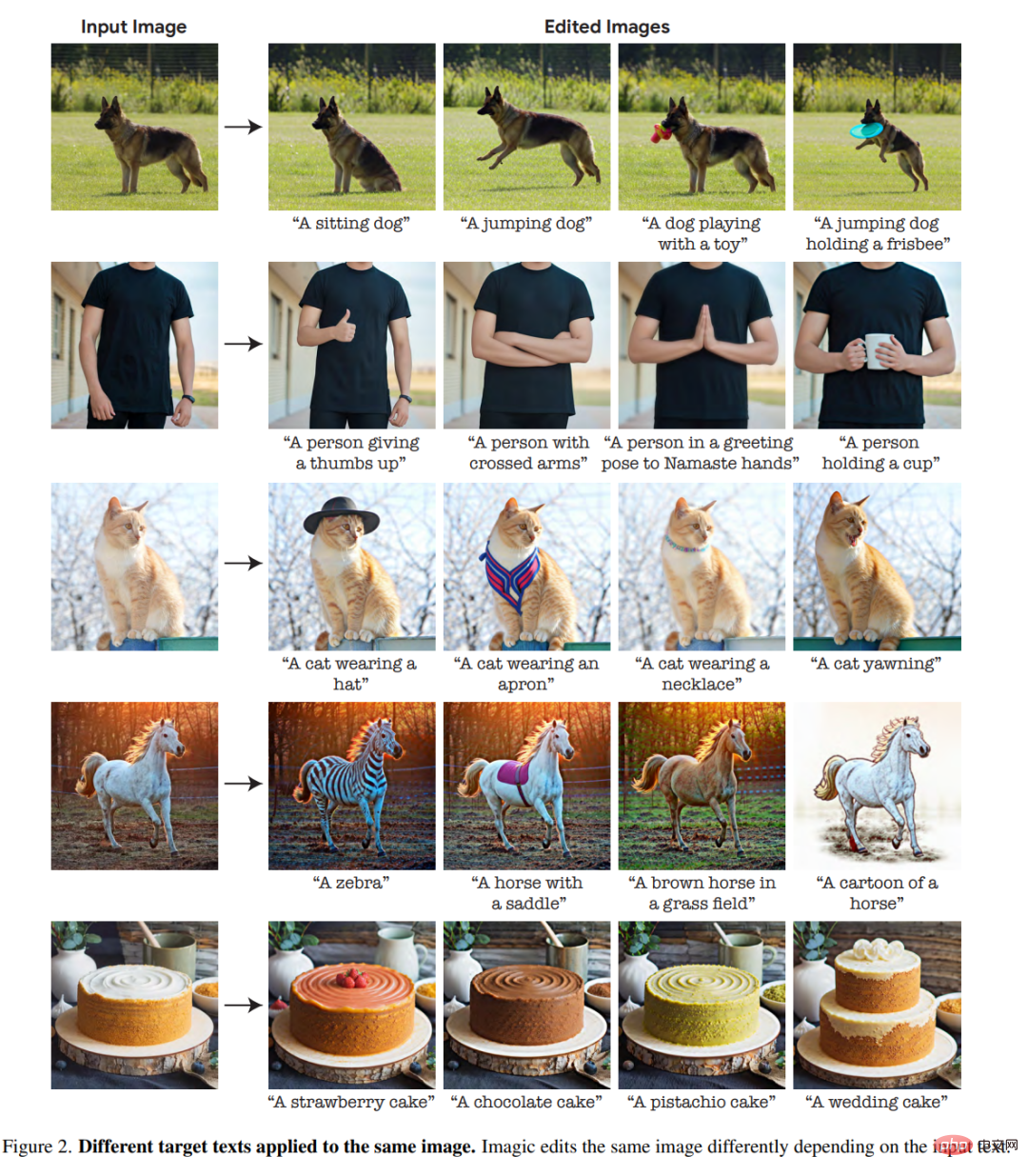

As shown in Figure 1, Imagic can make two parrots kiss or make a person give a thumbs up. According to the authors, this is the first time text-based semantic editing has applied such complex manipulations, including edits involving multiple objects, to a single real high-resolution image. Beyond these complex changes, Imagic supports a wide variety of edits, including style changes, color changes, and object additions.

To achieve this, the researchers leveraged recently successful text-to-image diffusion models. Diffusion models are powerful generative models capable of high-quality image synthesis; when conditioned on a natural-language text prompt, they can generate images consistent with the requested text. In this work, the researchers used them to edit real images rather than to synthesize new ones.

As shown in Figure 3, Imagic needs only three steps to complete this task. First, it optimizes a text embedding so that the embedding produces an image similar to the input image. Next, it fine-tunes the pre-trained generative diffusion model, conditioned on the optimized embedding, to better reconstruct the input image. Finally, it linearly interpolates between the target text embedding and the optimized embedding, yielding a representation that combines the input image and the target text. This representation is passed to the generative diffusion process of the fine-tuned model, which outputs the final edited image.

To demonstrate Imagic's capabilities, the researchers conducted several experiments, applying the method to numerous images from different domains, and report impressive results in all of them. The high-quality images Imagic outputs remain highly similar to the input images while matching the requested target text, which demonstrates the method's versatility and quality. The researchers also conducted an ablation study highlighting the effectiveness of each component of the method. Compared with a range of recent methods, Imagic shows significantly better editing quality and fidelity to the original image, especially on highly complex non-rigid editing tasks.

Method details

Given an input image x and a target text, the goal is to edit the image so that it satisfies the given text while retaining as much of the detail of x as possible. To this end, the paper operates on the text embedding layer of the diffusion model to perform semantic manipulations, in a manner somewhat similar to GAN-based methods. The researchers first look for a meaningful representation that, when passed through the generative process, produces images similar to the input image. The generative model is then optimized to better reconstruct the input image, and the final step manipulates the latent representation to obtain the edited result.

As shown in Figure 3 above, the method consists of three stages: (1) optimizing the text embedding to find the embedding, near the target text embedding, that best matches the given image; (2) fine-tuning the diffusion model to better match the given image; (3) linearly interpolating between the optimized embedding and the target text embedding to find a point that achieves both fidelity to the image and alignment with the target text.
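To make the data flow between the three stages concrete, here is a hypothetical orchestration skeleton in Python. It is not the authors' code: `encode_text`, `optimize_embedding`, `fine_tune`, and `generate` are placeholder callables assumed to wrap the text encoder, the two optimization stages, and the diffusion sampler (each closing over the pre-trained model), and the embedding is flattened to a list of floats rather than the real T × d matrix.

```python
from typing import Any, Callable, List

# Toy stand-in (assumption): the real text embedding is a T x d matrix.
Embedding = List[float]


def imagic_edit(
    image: Any,
    target_text: str,
    encode_text: Callable[[str], Embedding],                     # hypothetical text encoder
    optimize_embedding: Callable[[Any, Embedding], Embedding],   # hypothetical stage 1
    fine_tune: Callable[[Any, Embedding], Any],                  # hypothetical stage 2, returns a model
    generate: Callable[[Any, Embedding], Any],                   # hypothetical diffusion sampler
    eta: float,
) -> Any:
    """Sketch of the order of the three stages, using placeholder callables."""
    # Encode the target text into e_tgt.
    e_tgt = encode_text(target_text)
    # Stage 1: the diffusion model is frozen; e_tgt is optimized toward the input image -> e_opt.
    e_opt = optimize_embedding(image, e_tgt)
    # Stage 2: e_opt is frozen; the model is fine-tuned to reconstruct the input image from e_opt.
    model = fine_tune(image, e_opt)
    # Stage 3: linear interpolation e_bar = eta * e_tgt + (1 - eta) * e_opt.
    e_bar = [eta * a + (1.0 - eta) * b for a, b in zip(e_tgt, e_opt)]
    # Generate the edited image with the fine-tuned model conditioned on e_bar.
    return generate(model, e_bar)
```

Passing simple stubs (for example `encode_text=lambda s: [1.0, 1.0]`) is enough to trace how e_tgt and e_opt flow into the interpolated embedding; in a real system each callable would wrap a pre-trained text-to-image diffusion model.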

More specific details are as follows:

Text embedding optimization

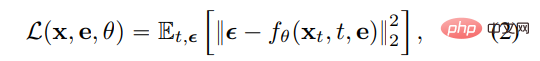

First, the target text is fed into the text encoder, which outputs the corresponding text embedding e_tgt ∈ ℝ^{T×d}, where T is the number of tokens in the target text and d is the token embedding dimension. The researchers then freeze the parameters of the generative diffusion model f_θ and optimize the target text embedding e_tgt using the denoising diffusion objective:

$$\mathcal{L}(x, e, \theta) = \mathbb{E}_{t, \epsilon}\left[\lVert \epsilon - f_\theta(x_t, t, e) \rVert_2^2\right] \qquad (2)$$

where x is the input image, x_t is a noisy version of x obtained by adding Gaussian noise ε at diffusion timestep t, and θ are the pre-trained diffusion model weights. This makes the text embedding match the input image as closely as possible. The optimization runs for relatively few steps, so the result stays close to the original target text embedding; it yields the optimized embedding e_opt.
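To make the objective in Equation (2) concrete, here is a toy sketch of stage 1 in plain Python. It is an illustrative assumption, not the paper's implementation: the "diffusion model" is a hypothetical linear ε-predictor that guesses the clean image as `theta + e`, sampling a timestep t is replaced by drawing a noise level `alpha_bar` uniformly from [0.1, 0.9], and images and embeddings are short vectors of the same length. Only the embedding is updated; `theta` stays frozen, and only a few gradient steps are taken.

```python
import math
import random
from typing import List

Vec = List[float]


def toy_eps_predictor(x_t: Vec, alpha_bar: float, e: Vec, theta: Vec) -> Vec:
    """Hypothetical f_theta(x_t, t, e): guesses the clean image as theta + e, then solves for the noise."""
    return [
        (xt - math.sqrt(alpha_bar) * (th + ei)) / math.sqrt(1.0 - alpha_bar)
        for xt, th, ei in zip(x_t, theta, e)
    ]


def grad_wrt_embedding(x: Vec, e: Vec, theta: Vec, rng: random.Random) -> Vec:
    """One-sample Monte Carlo gradient of ||eps - f_theta(x_t, t, e)||^2 with respect to e."""
    alpha_bar = rng.uniform(0.1, 0.9)  # assumption: stand-in for sampling a timestep t
    eps = [rng.gauss(0.0, 1.0) for _ in x]
    x_t = [math.sqrt(alpha_bar) * xi + math.sqrt(1.0 - alpha_bar) * ni for xi, ni in zip(x, eps)]
    pred = toy_eps_predictor(x_t, alpha_bar, e, theta)
    # For this toy model, d(pred_i)/d(e_i) = -sqrt(alpha_bar / (1 - alpha_bar)).
    scale = math.sqrt(alpha_bar / (1.0 - alpha_bar))
    return [2.0 * (ni - pi) * scale for ni, pi in zip(eps, pred)]


def optimize_embedding(x: Vec, e_tgt: Vec, theta: Vec, steps: int = 20,
                       lr: float = 0.01, seed: int = 0) -> Vec:
    """Stage 1 (toy): theta is frozen; start from e_tgt and take a few gradient steps on e only."""
    rng = random.Random(seed)
    e = list(e_tgt)
    for _ in range(steps):
        grad = grad_wrt_embedding(x, e, theta, rng)
        e = [ei - lr * gi for ei, gi in zip(e, grad)]
    return e
```

In this toy the noise term cancels analytically, so each step simply pulls `theta + e` toward x; with only 20 steps the embedding moves toward the input without reaching it, which loosely mirrors the gap that the next stage addresses. A real denoiser is a neural network and behaves far less simply.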

Model fine-tuning

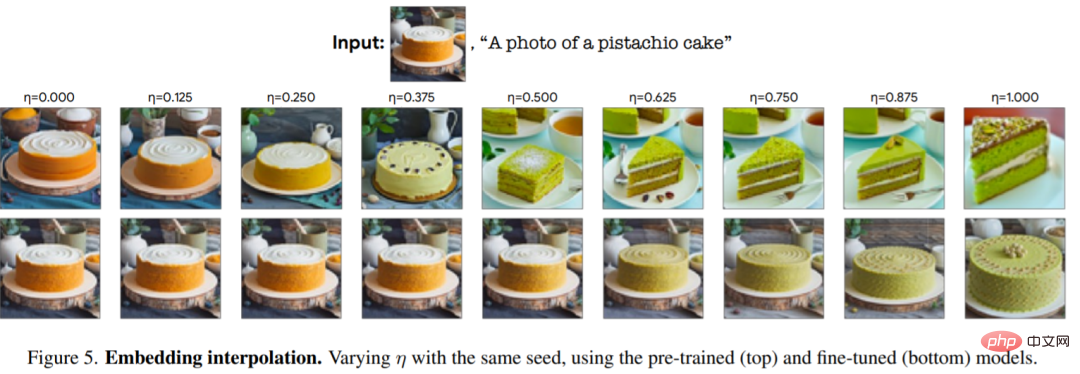

Note that when the optimized embedding e_opt is passed through the generative diffusion process, the resulting images do not necessarily match the input image x exactly, because only a small number of optimization steps are run (see the upper-left image in Figure 5). In the second stage, the authors therefore close this gap by optimizing the model parameters θ with the same loss function as in Equation (2), while keeping the optimized embedding frozen.
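The roles swap in stage 2: the embedding is frozen and the model parameters are trained with the same loss. The toy sketch below illustrates only that swap, under the same assumptions as a simple stand-in model (a hypothetical linear ε-predictor whose clean-image guess is `theta + e`, with a noise level `alpha_bar` drawn from [0.1, 0.9] in place of a timestep); the step count and learning rate are arbitrary illustrative values, not the paper's settings.

```python
import math
import random
from typing import List

Vec = List[float]


def denoising_grad(x: Vec, e: Vec, theta: Vec, rng: random.Random) -> Vec:
    """One-sample gradient of ||eps - f_theta(x_t, t, e)||^2 for a hypothetical toy model.

    The toy f_theta guesses the clean image as theta + e, so its gradient with respect
    to theta equals its gradient with respect to e.
    """
    alpha_bar = rng.uniform(0.1, 0.9)  # assumption: stand-in for sampling a timestep t
    eps = [rng.gauss(0.0, 1.0) for _ in x]
    x_t = [math.sqrt(alpha_bar) * xi + math.sqrt(1.0 - alpha_bar) * ni for xi, ni in zip(x, eps)]
    pred = [
        (xt - math.sqrt(alpha_bar) * (th + ei)) / math.sqrt(1.0 - alpha_bar)
        for xt, th, ei in zip(x_t, theta, e)
    ]
    scale = math.sqrt(alpha_bar / (1.0 - alpha_bar))
    return [2.0 * (ni - pi) * scale for ni, pi in zip(eps, pred)]


def fine_tune(x: Vec, e_opt: Vec, theta: Vec, steps: int = 300,
              lr: float = 0.02, seed: int = 0) -> Vec:
    """Stage 2 (toy): e_opt is frozen; only the model parameters theta are updated."""
    rng = random.Random(seed)
    theta = list(theta)
    for _ in range(steps):
        grad = denoising_grad(x, e_opt, theta, rng)
        theta = [th - lr * gi for th, gi in zip(theta, grad)]
    return theta
```

After fine-tuning, conditioning the toy model on the unchanged e_opt reconstructs x closely, which is the property the interpolation stage relies on.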

Text embedding interpolation

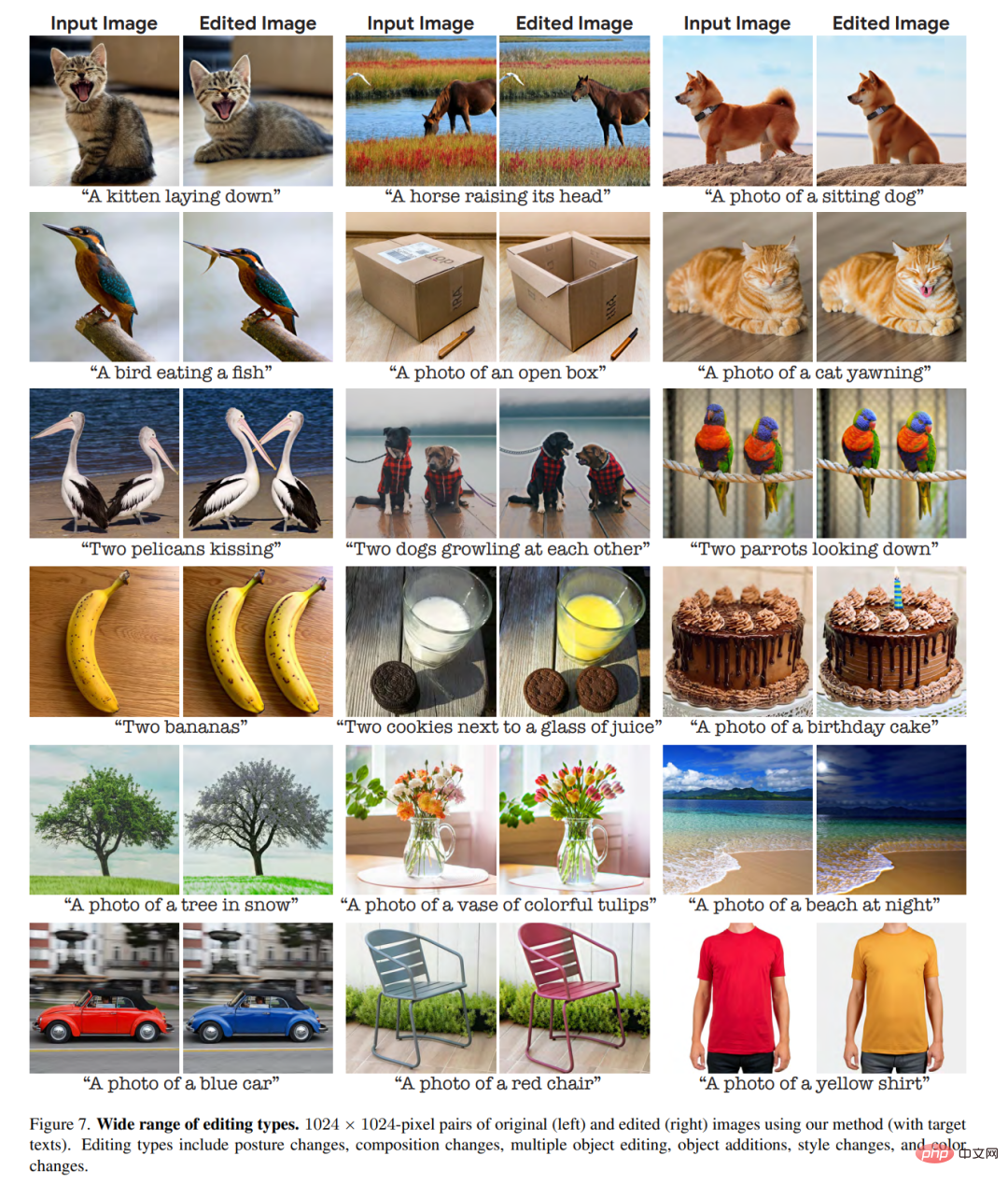

The third stage of Imagic performs a simple linear interpolation between e_tgt and e_opt. For a given hyperparameter η ∈ [0, 1], the authors obtain

$$\bar{e} = \eta \cdot e_{tgt} + (1 - \eta) \cdot e_{opt}$$

They then apply the base generative diffusion process using the fine-tuned model, conditioned on ē. This produces a low-resolution edited image, which is then super-resolved by the fine-tuned auxiliary model conditioned on the target text. This generation process outputs the final high-resolution edited image.
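The interpolation itself is a single element-wise operation. The sketch below applies it to embeddings stored as T × d nested lists (a stand-in for real tensors); the function name `interpolate_embeddings` and the shape checks are illustrative assumptions rather than anything from the paper's code.

```python
from typing import List

Matrix = List[List[float]]  # toy stand-in for a T x d text embedding


def interpolate_embeddings(e_tgt: Matrix, e_opt: Matrix, eta: float) -> Matrix:
    """Return e_bar = eta * e_tgt + (1 - eta) * e_opt, element-wise.

    eta = 0 returns e_opt, which the fine-tuned model maps back to (roughly) the input image;
    eta = 1 returns e_tgt, i.e. conditioning purely on the target text embedding.
    """
    if not 0.0 <= eta <= 1.0:
        raise ValueError("eta must lie in [0, 1]")
    if len(e_tgt) != len(e_opt) or any(len(a) != len(b) for a, b in zip(e_tgt, e_opt)):
        raise ValueError("embeddings must have the same T x d shape")
    return [
        [eta * a + (1.0 - eta) * b for a, b in zip(row_t, row_o)]
        for row_t, row_o in zip(e_tgt, e_opt)
    ]
```

Sweeping eta from 0 toward 1 moves the conditioning from the reconstruction embedding toward the target text, which is the fidelity-versus-alignment trade-off that the third stage is meant to balance.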

Experimental results

To test the method, the researchers applied it to a large number of real images from different domains, using simple text prompts to describe different editing categories such as style, appearance, color, pose, and composition. They collected high-resolution, free-to-use images from Unsplash and Pixabay, ran the optimization for each image, generated each edit with 5 random seeds, and selected the best result. As shown in Figures 1 and 7, Imagic achieves impressive results, applying a wide range of editing categories to general input images and text.

Figure 2 shows the results of applying different text prompts to the same image, illustrating Imagic's versatility.

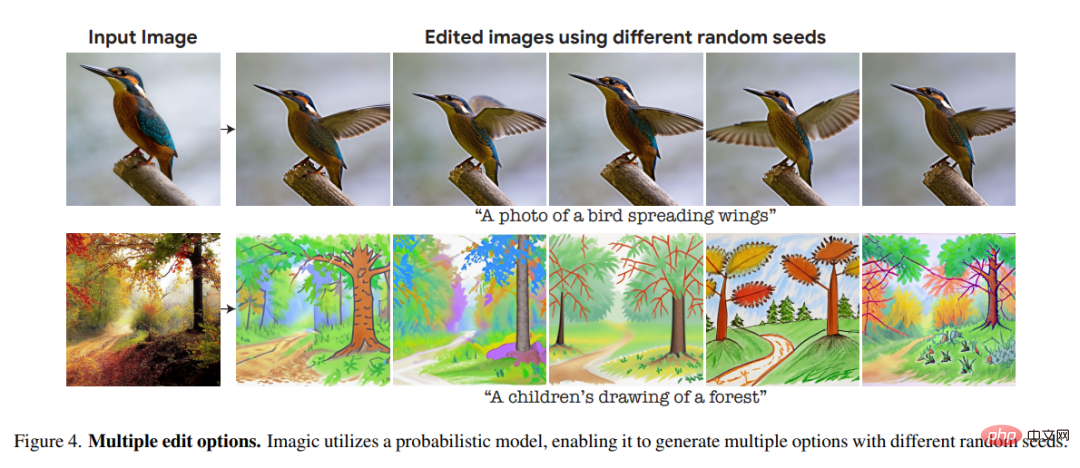

Because the underlying generative diffusion model is probabilistic, the method can produce different results for a single image-text pair. Figure 4 shows several edit options obtained with different random seeds (with slight adjustments to the interpolation hyperparameter η for each seed). This randomness lets users choose among the options, which is useful because natural-language text prompts are generally ambiguous and imprecise.

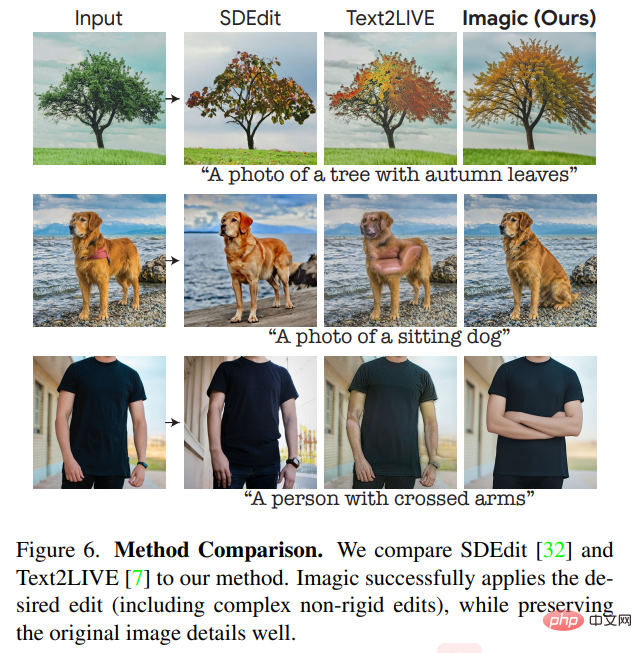

The study compared Imagic with current leading general-purpose methods that take a single real-world input image and edit it according to a text prompt. Figure 6 shows the editing results of different methods, such as Text2LIVE [7] and SDEdit [32].

As the comparison shows, Imagic maintains high fidelity to the input image while correctly performing the requested edits. On complex non-rigid editing tasks, such as "make the dog sit", it significantly outperforms previous techniques. According to the authors, Imagic is the first demonstration of such sophisticated text-based editing applied to a single real-world image.
