You must have heard this famous data science quote:

In a data science project, 80% of the time is spent doing data processing.

If you haven’t heard of it, remember: data cleaning is the foundation of the data science workflow. Machine learning models perform based on the data you provide them. Messy data can lead to poor performance or even incorrect results, while clean data is a prerequisite for good model performance. Of course, clean data does not mean good performance all the time. The correct selection of the model (the remaining 20%) is also important, but without clean data, even the most powerful model cannot achieve the expected level.

In this article, we will list the problems that need to be solved in data cleaning and show possible solutions. Through this article, you can learn how to perform data cleaning step by step.

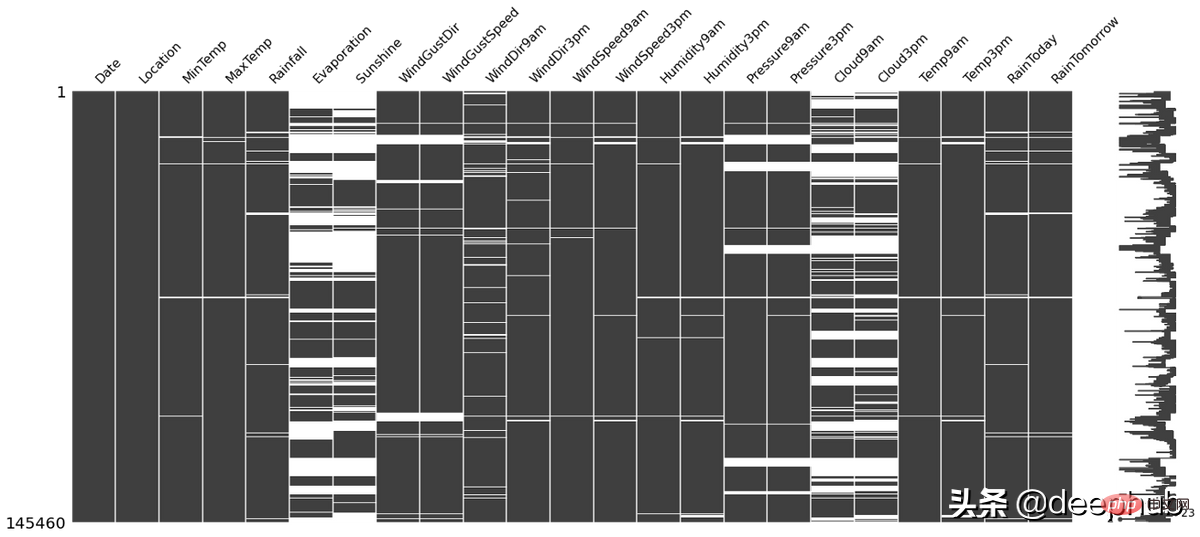

When the data set contains missing data, some data analysis can be performed before filling. Because the position of the empty cell itself can tell us some useful information. For example:

missingno This python library can be used to check the above situation, and it is very simple to use. For example, the white line in the picture below is NA:

import missingno as msno msno.matrix(df)

There are many methods for filling in missing values, such as:

Different methods have advantages and disadvantages over each other, and there is no "best" technique that works in all situations. For details, please refer to our previously published articles

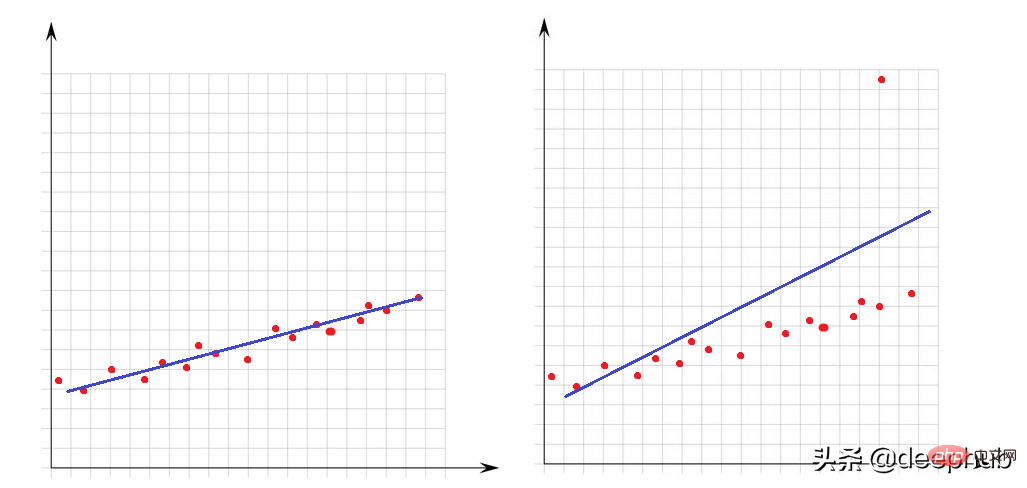

Outliers are very large or very small values relative to other points in the data set. Their presence greatly affects the performance of mathematical models. Let’s look at this simple example:

In the left graph there are no outliers and our linear model fits the data points very well. In the image on the right there is an outlier, when the model tries to cover all points of the dataset, the presence of this outlier changes the way the model fits and makes our model not fit for at least half of the points.

For outliers, we need to introduce how to determine anomalies. This requires clarifying what is maximum or minimum from a mathematical perspective.

Any value greater than Q3 1.5 x IQR or less than Q1-1.5 x IQR can be regarded as an outlier. IQR (interquartile range) is the difference between Q3 and Q1 (IQR = Q3-Q1).

You can use the following function to check the number of outliers in the data set:

def number_of_outliers(df): df = df.select_dtypes(exclude = 'object') Q1 = df.quantile(0.25) Q3 = df.quantile(0.75) IQR = Q3 - Q1 return ((df < (Q1 - 1.5 * IQR)) | (df > (Q3 + 1.5 * IQR))).sum()

One way to deal with outliers is to make them equal to Q3 or Q1. The lower_upper_range function below uses the pandas and numpy libraries to find ranges with outliers outside of them, and then uses the clip function to clip the values to the specified range.

def lower_upper_range(datacolumn): sorted(datacolumn) Q1,Q3 = np.percentile(datacolumn , [25,75]) IQR = Q3 - Q1 lower_range = Q1 - (1.5 * IQR) upper_range = Q3 + (1.5 * IQR) return lower_range,upper_range for col in columns: lowerbound,upperbound = lower_upper_range(df[col]) df[col]=np.clip(df[col],a_min=lowerbound,a_max=upperbound)

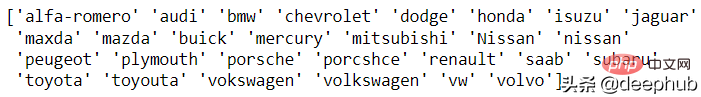

The outlier problem is about numeric features, now let’s look at character type (categorical) features. Inconsistent data means that unique classes of columns have different representations. For example, in the gender column, there are both m/f and male/female. In this case, there would be 4 classes, but there are actually two classes.

There is currently no automatic solution for this problem, so manual analysis is required. The unique function of pandas is prepared for this analysis. Let’s look at an example of a car brand:

df['CarName'] = df['CarName'].str.split().str[0] print(df['CarName'].unique())

maxda-mazda, Nissan-nissan, porcshce-porsche, toyouta-toyota etc. can be merged.

df.loc[df['CarName'] == 'maxda', 'CarName'] = 'mazda' df.loc[df['CarName'] == 'Nissan', 'CarName'] = 'nissan' df.loc[df['CarName'] == 'porcshce', 'CarName'] = 'porsche' df.loc[df['CarName'] == 'toyouta', 'CarName'] = 'toyota' df.loc[df['CarName'] == 'vokswagen', 'CarName'] = 'volkswagen' df.loc[df['CarName'] == 'vw', 'CarName'] = 'volkswagen'

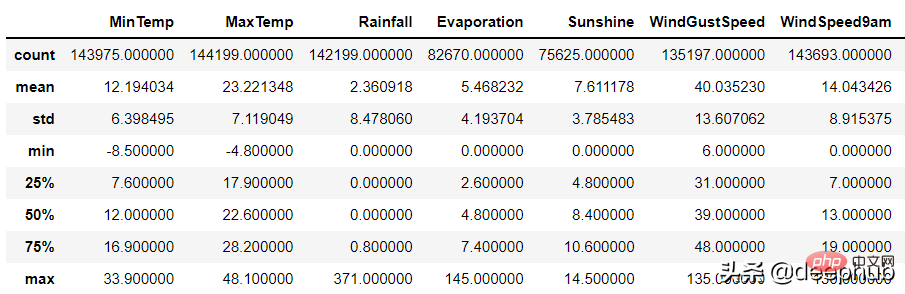

Invalid data represents a value that is not logically correct at all. For example,

For numeric columns, pandas' describe function can be used to identify such errors:

df.describe()

There may be two reasons for invalid data:

1. Data collection errors: For example, the range was not judged during input. When entering height, 1799cm was mistakenly entered instead of 179cm, but the program did not judge the range of the data.

2. Data operation error

数据集的某些列可能通过了一些函数的处理。 例如,一个函数根据生日计算年龄,但是这个函数出现了BUG导致输出不正确。

以上两种随机错误都可以被视为空值并与其他 NA 一起估算。

当数据集中有相同的行时就会产生重复数据问题。 这可能是由于数据组合错误(来自多个来源的同一行),或者重复的操作(用户可能会提交他或她的答案两次)等引起的。 处理该问题的理想方法是删除复制行。

可以使用 pandas duplicated 函数查看重复的数据:

df.loc[df.duplicated()]

在识别出重复的数据后可以使用pandas 的 drop_duplicate 函数将其删除:

df.drop_duplicates()

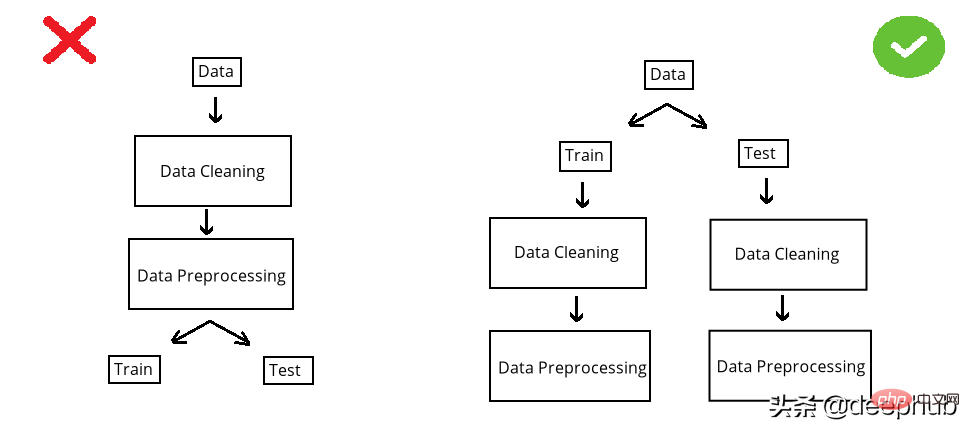

在构建模型之前,数据集被分成训练集和测试集。 测试集是看不见的数据用于评估模型性能。 如果在数据清洗或数据预处理步骤中模型以某种方式“看到”了测试集,这个就被称做数据泄漏(data leakage)。 所以应该在清洗和预处理步骤之前拆分数据:

以选择缺失值插补为例。数值列中有 NA,采用均值法估算。在 split 前完成时,使用整个数据集的均值,但如果在 split 后完成,则使用分别训练和测试的均值。

第一种情况的问题是,测试集中的推算值将与训练集相关,因为平均值是整个数据集的。所以当模型用训练集构建时,它也会“看到”测试集。但是我们拆分的目标是保持测试集完全独立,并像使用新数据一样使用它来进行性能评估。所以在操作之前必须拆分数据集。

虽然训练集和测试集分别处理效率不高(因为相同的操作需要进行2次),但它可能是正确的。因为数据泄露问题非常重要,为了解决代码重复编写的问题,可以使用sklearn 库的pipeline。简单地说,pipeline就是将数据作为输入发送到的所有操作步骤的组合,这样我们只要设定好操作,无论是训练集还是测试集,都可以使用相同的步骤进行处理,减少的代码开发的同时还可以减少出错的概率。

The above is the detailed content of A complete guide to data cleaning with Python. For more information, please follow other related articles on the PHP Chinese website!