Zhou Bowen of Tsinghua University: The popularity of ChatGPT reveals the high importance of the new generation of collaboration and interactive intelligence

The following is Zhou Bowen's speech at the Heart of the Machine AI Technology Annual Conference. Heart of the Machine has edited and organized it without changing the original meaning:

Thank you to Heart of the Machine for the invitation. I am Zhou Bowen from Tsinghua University. It is now the end of the lunar year and the beginning of the Gregorian year, and I am very happy to have this opportunity to share our summary of artificial intelligence development trends over the past period, as well as some thoughts on the future.

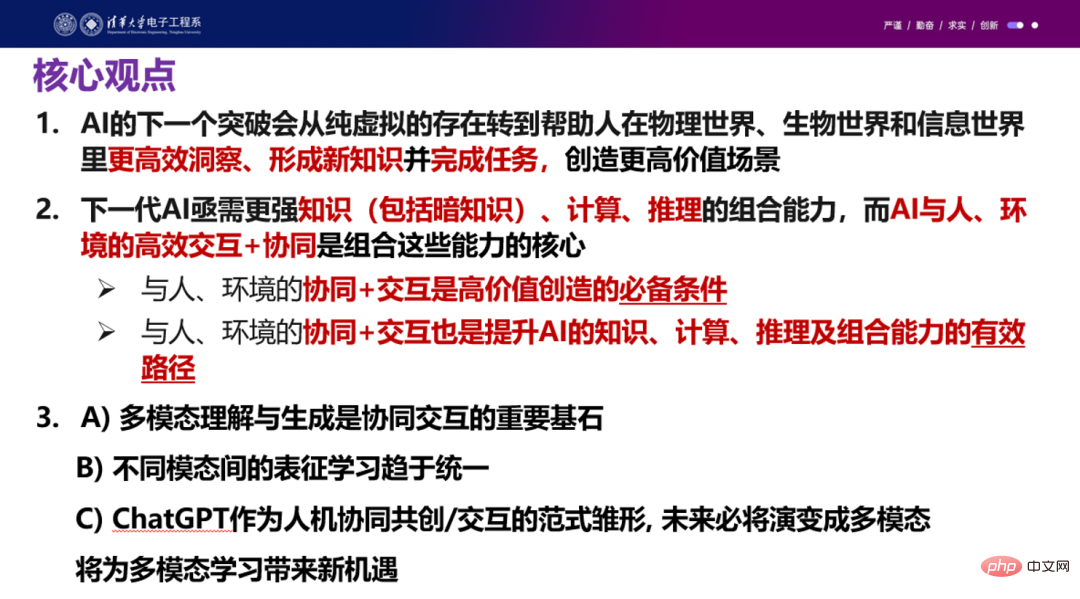

Let me first share the core ideas. If you remember only three points from this speech, please remember these:

First, the next breakthrough of artificial intelligence will shift from a purely virtual existence to helping people gain insights more efficiently, form new knowledge, complete tasks, and create higher-value scenarios in the physical world, the biological world, and the information world.

Second, the next generation of artificial intelligence urgently needs a stronger ability to combine knowledge (including dark knowledge), calculation, and reasoning. This combination is very important, and we believe that efficient interaction and collaboration between AI, people, and the environment is the core of achieving it.

There are two reasons. First, collaboration and interaction with people and the environment are necessary conditions for creating high value: without collaboration with humans, AI cannot handle these high-value scenarios on its own. Second, this kind of collaboration and interaction is also an effective way to improve AI's knowledge, calculation, and reasoning capabilities and their combination. AI has made great progress in calculation, but knowledge, reasoning, and the effective combination of these modules remain major bottlenecks. Adding collaboration and interaction with people and the environment can help make up for some of these bottlenecks.

Third, we have three judgments about multimodality. First, multimodal understanding and generation are important cornerstones of collaboration and interaction. Second, over the past two years, representation learning across different modalities has been moving toward unification, which provides a very good foundation. Third, the recently popular ChatGPT, as the prototype of a future paradigm for human-machine collaborative co-creation and interaction, will surely evolve toward multimodality and bring new opportunities for multimodal learning. Although it still has many naive aspects, this paradigm points us toward the future direction.

Those are the core points. In today's talk, I will discuss collaborative interactive intelligence and multimodal learning, and review the latest progress and opportunities.

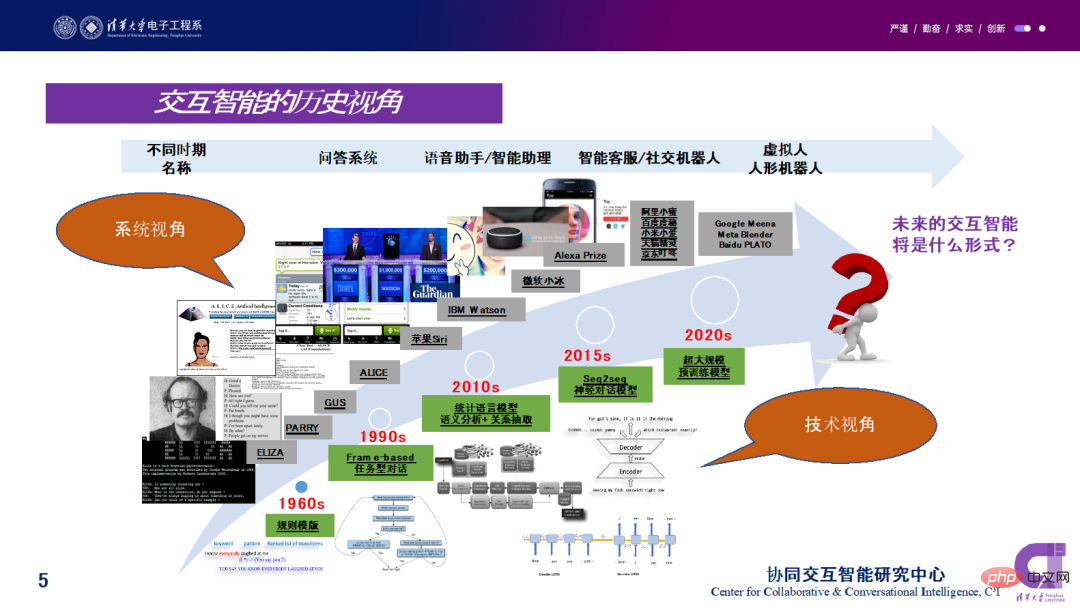

Part One: a look back at traditional interactive intelligence, where we have come a long way. First of all, I want to emphasize that the collaboration and interaction we are talking about today are completely different from earlier interactive intelligence. Historically, interaction meant taking an already trained system and treating the interaction itself as the task to complete, as in ELIZA, IBM Watson, Microsoft Xiaoice, Siri, and JD.com's intelligent customer service. The collaboration and interaction we are talking about today use interaction as a way of learning and collaboration as a division of labor between AI and humans, so that humans and machines together can better gain insights, form new knowledge, and complete tasks. Looking at the history of interactive intelligence as a whole, what drives progress is the change in technical approach: from early rule templates, to frame-based task-oriented dialogue, to statistical language models, Seq2seq models, and ultra-large-scale pre-trained models.

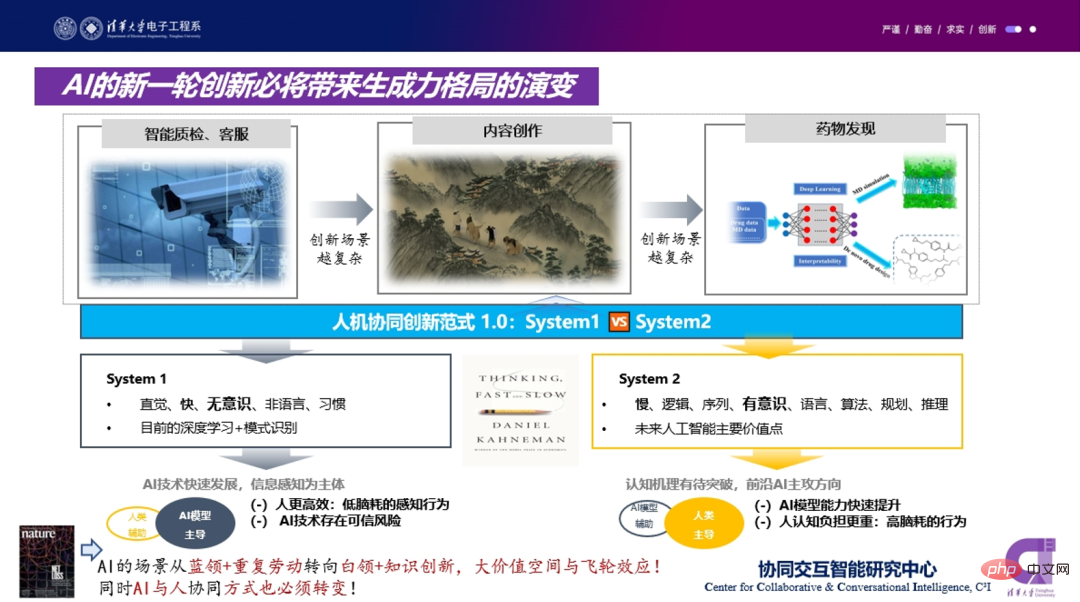

Regarding these changes, our judgment is that a new round of AI innovation will certainly bring about an evolution in the pattern of productivity. A few years ago, discussions of AI application scenarios focused on areas such as intelligent quality inspection and customer service. But now we see that AI innovation scenarios are becoming more and more complex, and are starting to involve artistic content creation, drug discovery, and the discovery of new knowledge. In his best-selling book "Thinking, Fast and Slow", Daniel Kahneman, winner of the 2002 Nobel Prize in Economics, proposed that people think in two ways: System 1 is characterized by intuition and unconscious processing, while System 2 involves language, algorithms, calculation, and logic.

In the past few years, artificial intelligence has mostly been used in System 1 scenarios. But going forward, and already today, AI is actually better suited, from the perspective of human-machine collaboration, to take on more System 2 work. System 1 is efficient for people: it consumes little mental energy and carries a low cognitive load, whereas System 2 places a very heavy cognitive load on people. It is just that, in the past, AI technology could only handle System 1 and could not do System 2 well. The current trend is that AI is getting closer to System 2.

AI's application areas are moving from repetitive work (quality inspection, customer service, etc.) to white-collar work and knowledge innovation. There is no doubt that this will bring greater room for value and a stronger flywheel effect. What is the flywheel effect? AI helps white-collar and knowledge workers better understand, gain insights, and form new knowledge; new knowledge helps design better AI; and better AI generates more new knowledge.

Under this trend, we must clearly realize that the way AI and people collaborate must change, because AI is no longer the original AI of System 1, but has become System 2 AI. In this case, how AI should collaborate and interact is a cutting-edge issue that needs to be considered.

Why does AI need to have the ability to combine knowledge, calculation, and reasoning? Here are some examples of multi-modal calculations for your reference:

For example, in the first picture on the left, the question is where the person in the red jacket will most likely finish at the end of the game, and the answer is fourth place. Answering such a question requires not only very accurate image segmentation and semantic segmentation, but also a great deal of commonsense reasoning and discrete reasoning, which our current AI systems sorely lack. In the second example, the question is what makes these chairs easy to carry, and the answer is "foldable". This also involves logical reasoning. System 2 challenges like these require further iteration and evolution of artificial intelligence.

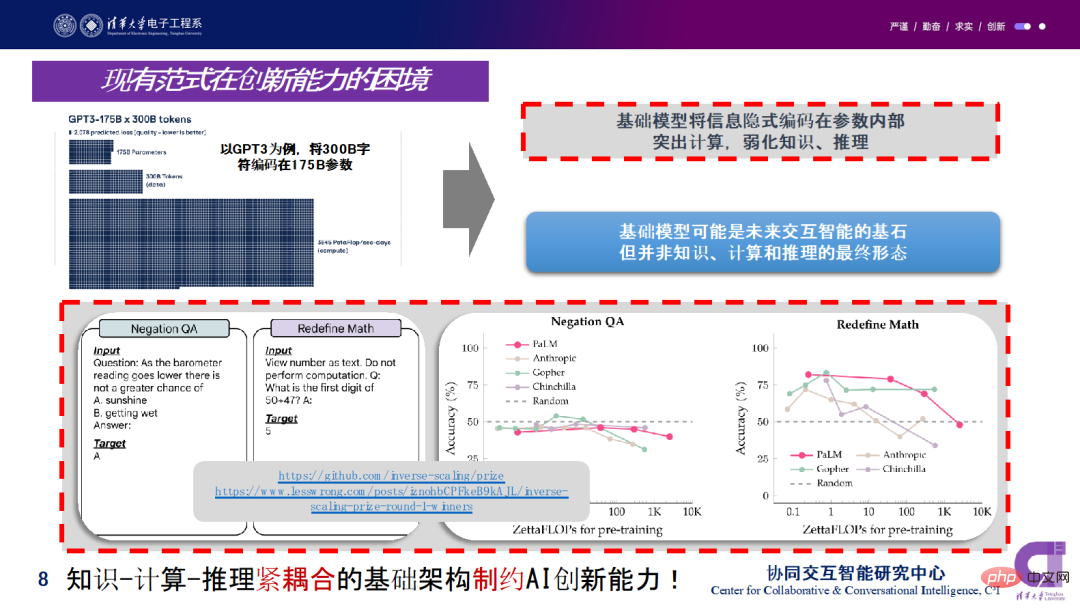

A development that everyone is aware of is the breakthrough of large-scale pre-trained language models. So a natural question is: if we continue to follow this paradigm, can we address high-value application scenarios and the effective integration of knowledge, calculation, and reasoning?

Take GPT-3 as an example. Everyone knows that it has 175 billion parameters. It encodes information in its parameters and model architecture, emphasizing calculation while downplaying knowledge and reasoning. On the one hand, as the "Scaling Law" suggests, with more and more data its capabilities keep getting stronger. On the other hand, several scholars at NYU have run a challenge called "Inverse Scaling", asking everyone to find application scenarios in which the larger the model and the more parameters it has, the worse it performs.

There are two examples in the picture above. One is Negation QA, which uses double negation to test the understanding and reasoning capabilities of pre-trained models. The other is Redefine Math, which redefines mathematical constants in existing calculation problems to test whether the language model can understand the new meaning and calculate correctly. As the two figures on the right show, on these tasks the more parameters the model has, the lower its accuracy.

These examples point out that the foundation model may be the cornerstone of future interactive intelligence. I personally think "foundation model" is a more important term than "big model". A very important point is that the foundation model is not the final form: to solve the problems it encounters, it still needs to be made more concrete. Therefore, I propose that the effective combination of knowledge, calculation, and reasoning is a direction that needs to be researched next. An important aspect of this combination is that human collaboration and interaction can promote the upgrading of these foundation models.
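One way to make the "inverse scaling" pattern concrete is to check whether accuracy falls as parameter count grows. The sketch below is purely illustrative: the function name `scaling_slope` is hypothetical, and the accuracy numbers are made-up placeholders, not results from the challenge or from any real model.

```python
import math

def scaling_slope(param_counts, accuracies):
    """Least-squares slope of accuracy against log10(parameter count).

    A negative slope means accuracy tends to drop as the model grows,
    which is the pattern the "Inverse Scaling" challenge asks people to find.
    """
    xs = [math.log10(p) for p in param_counts]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(accuracies) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, accuracies))
    var = sum((x - mean_x) ** 2 for x in xs)
    return cov / var

# Hypothetical placeholder numbers, NOT measurements from any real model.
sizes = [1e8, 1e9, 1e10, 1e11]
normal_task = [0.40, 0.55, 0.70, 0.85]   # accuracy rises with size
inverse_task = [0.80, 0.70, 0.60, 0.50]  # accuracy falls with size

print(scaling_slope(sizes, normal_task) > 0)   # ordinary scaling
print(scaling_slope(sizes, inverse_task) < 0)  # inverse scaling
```

A single slope is a crude summary; it only flags the direction of the trend and says nothing about why a larger model does worse.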

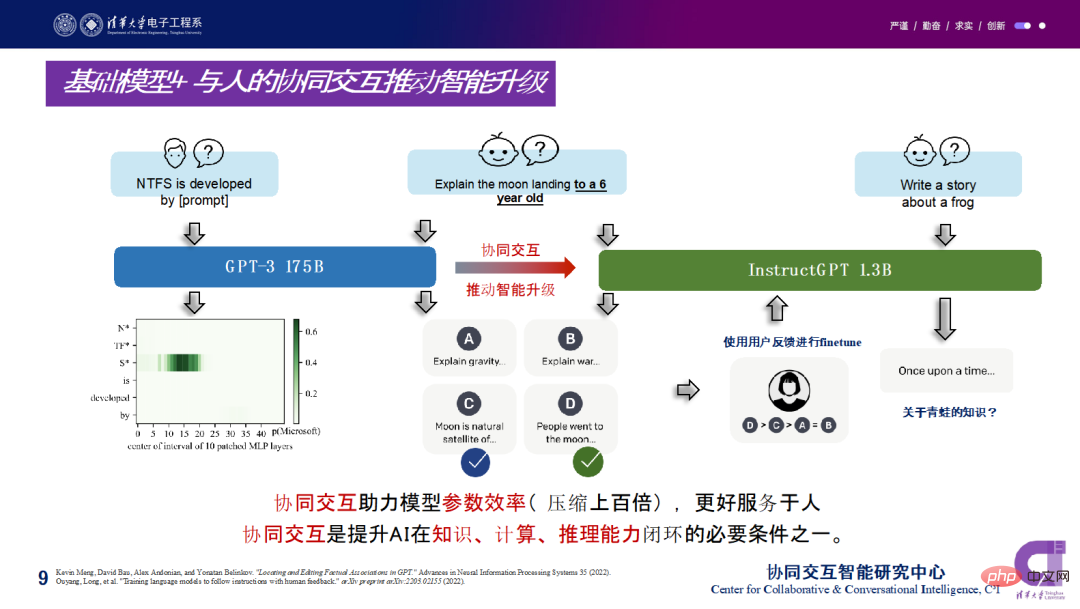

For comparison, consider another example, "InstructGPT", which is based on the GPT-3 model:

On some questions, GPT-3 can learn to answer very well from prompts. But if you ask it to explain the moon landing to a 6-year-old child, the base GPT-3 model could answer from many angles, because it has a huge amount of material behind it. The first is to start from the physical principle of gravity. The second is the historical perspective: the moon landing took place during the Cold War between the United States and the Soviet Union, so the answer would explain how the Cold War came about and how it led to the moon landing project. The third is the astronomical perspective: the moon is a satellite of the Earth. The fourth is the human perspective: humans have always wanted to reach the moon, and there are many beautiful legends about it, such as Chang'e in China, and similar ones in the West.

However, the current GPT-3 model has difficulty judging which approach suits a 6-year-old child. It relies mostly on frequency and prominence in the corpus, so it will most likely explain what the moon landing and the lunar landing project are based on a Wikipedia page, which obviously does not fit the context well. InstructGPT builds on this by having users select and score the four types of answers, a, b, c, and d. Once a ranking is given, this feedback can be used to fine-tune the GPT-3 model. Then, given a new request such as "Write a story about a frog", the model's opening becomes "Once upon a time", a very suitable way to begin a story for children.
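To illustrate how a human ranking of answers a, b, c, and d can become a training signal, here is a minimal sketch of a pairwise ranking loss of the kind commonly used to train a reward model from rankings: for every pair, the preferred answer should score higher. The scores, the ranking, and the function name `pairwise_ranking_loss` are hypothetical; in a real system the scores would come from a learned model, which is not shown here.

```python
import math
from itertools import combinations

def pairwise_ranking_loss(scores, ranking):
    """Average of -log(sigmoid(s_better - s_worse)) over all ranked pairs.

    scores:  dict mapping answer id -> scalar score from a (hypothetical) reward model
    ranking: answer ids ordered from most to least preferred by the human
    """
    losses = []
    # combinations() keeps the input order, so the first id of each pair
    # is always the one the human ranked higher.
    for better, worse in combinations(ranking, 2):
        diff = scores[better] - scores[worse]
        # -log(sigmoid(diff)) is the same as log(1 + exp(-diff))
        losses.append(math.log1p(math.exp(-diff)))
    return sum(losses) / len(losses)

human_ranking = ["d", "a", "c", "b"]  # illustrative preference, not real data

agrees = {"d": 2.0, "a": 1.0, "c": 0.0, "b": -1.0}     # scores match the ranking
disagrees = {"d": -1.0, "a": 0.0, "c": 1.0, "b": 2.0}  # scores reverse the ranking

print(pairwise_ranking_loss(agrees, human_ranking))
print(pairwise_ranking_loss(disagrees, human_ranking))
```

The loss is low when the scores agree with the human ranking and high when they contradict it, so minimizing it pushes the scoring model toward human preferences. The fine-tuning step that then uses such a reward model is outside the scope of this sketch.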

As a result, the model is clearly more efficient, and the approach also helps reduce model parameters. InstructGPT has only 1.3 billion parameters, more than a hundred times fewer than GPT-3's 175 billion, yet it can serve people better in specific scenarios. Collaborative interaction is a necessary condition for improving AI's closed loop of knowledge, calculation, and reasoning.

We believe that intelligence includes three basic abilities: knowledge, calculation, and reasoning. We see that computing is currently progressing very fast. Of course, computing also has challenges in computing power and data, but the lack of knowledge and reasoning is particularly obvious.

So here is a question: How to achieve a closed loop among the three? Can strengthening the active collaborative interaction between AI, people, and the environment better help AI achieve a closed loop among the three? Our academic point of view is that we need to introduce collaboration and interaction between AI, people, and the environment. On the one hand, we can improve the capabilities of each module, and on the other hand, we can combine modules to form collaborative interactions.

Echoing our opening point, the next AI breakthrough will shift from virtual existence to helping people more efficiently gain insights, form new knowledge, and complete tasks in the physical, biological, and information worlds.

At the Collaborative Interactive Intelligence Research Center of Tsinghua University, we mainly propose and do research on these academic issues:

The first is that we propose a new collaborative perspective: we study how to make AI more responsible for System 2 and let people be more responsible for System 1. The first challenge this brings is that AI itself must shift toward tasks involving logical reasoning, heavy calculation, and high complexity, instead of doing only the pattern recognition and intuition work of System 1. The second challenge is how humans and AI can collaborate under this new division of labor. These are two research directions.

The second concerns the collaboration between AI and humans: letting AI learn better through human-in-the-loop reinforcement learning. We need to study better continual learning for AI, and do a lot of work on enhancing multimodal representations in the collaboration between AI, the environment, and people. Multimodality is an important channel for collaboration, and at the same time the enhancement mechanisms for conversational interaction must be strengthened.

There is another very important kind of collaboration: between AI and the environment. AI needs to adapt to different environments, and this adaptation can be summed up in one phrase: cloud-to-edge adaptation and edge-to-cloud self-evolution. Cloud-to-edge adaptation is easy to understand: it concerns how to make foundation models better adapt to environments with different computing power and communication conditions. Edge-to-cloud self-evolution lets the intelligence at the edge help the foundation model iterate better in return. In other words, this is collaboration and interaction between small models and large models. We do not believe this collaboration should be one-way, with the large model merely producing the small model through knowledge distillation and pruning. We believe that the iteration and interaction of small models should have a more effective path back to the foundation model.
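For readers unfamiliar with the large-to-small direction mentioned here, the following is a minimal sketch of a knowledge distillation loss: the small (student) model is trained to match the temperature-softened output distribution of the large (teacher) model. The logits are hypothetical placeholders, and this shows only the one-way path; the edge-to-cloud path the speech argues for is an open research question and is not sketched.

```python
import math

def softmax(logits, temperature=1.0):
    """Softmax over logits divided by a temperature (higher = softer)."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL(teacher || student) between temperature-softened distributions.

    Common formulations additionally scale this by temperature**2;
    that factor is omitted here to keep the sketch short.
    """
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q))

# Hypothetical logits over three classes.
teacher = [4.0, 1.0, 0.5]
good_student = [3.8, 1.1, 0.4]  # close to the teacher
poor_student = [0.5, 4.0, 1.0]  # prefers a different class

print(distillation_loss(teacher, good_student))
print(distillation_loss(teacher, poor_student))
```

The loss is zero when the student reproduces the teacher's distribution exactly and grows as the two diverge.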

We believe that the above three technical paths are very important. Beneath them lies a foundational layer of support: our current research may bring some basic theoretical breakthroughs in trustworthy artificial intelligence, because with a better combination of knowledge, calculation, and reasoning, we can better address the interpretability, robustness, and generalization challenges of the black box that was originally created by fusing knowledge, calculation, and reasoning together. We hope to achieve this progress in trustworthy AI in a decomposable and composable way. If a person cannot transparently see the AI's reasoning process, it is hard to trust the results of the AI's System 2.

Let us look at this issue from another perspective. Everyone knows that ChatGPT has been very popular recently, so we have done a lot of work examining ChatGPT, as well as Galactica, a system released by Facebook some time ago that uses AI to help write scientific papers. We found that they all need collaboration with people and the environment to create value scenarios. These value scenarios did not exist before, but are now becoming possible. Yet once separated from human collaboration and interaction, these AI systems immediately fall short.

Galactica, for instance, can write a very fluent paper, but many basic facts and references are wrong. For example, an author's name may be real while the title is partly real and partly fabricated, or several papers may be merged into one. What I want to emphasize is that current AI cannot complete the full closed loop of knowledge, calculation, and reasoning on its own, so humans must be involved.

Although Galactica was taken offline very quickly, its purpose was never to let people use it to complete papers and research independently, but to better assist people, so people must be in the loop. This is another angle showing that human collaboration and interaction is a very important basic condition.

Next, I will talk about how I view the progress and new opportunities of multi-modal learning in the context of collaborative interaction. First of all, I think multi-modality has been developing very rapidly in recent times, and it has begun to bring about several obvious trends.

First, multimodal models are converging in how they model and represent structure. In the past, everyone used CNNs for images and video, and because text is sequential, most people used RNNs and LSTMs for it. But now, whatever the modality, all tokenized inputs can be treated as a sequence or graph and processed with self-attention plus the multi-head mechanism. The Transformer architecture, popular in recent years, has made the structures of essentially all models converge.
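The idea that any tokenized input can go through the same self-attention plus multi-head mechanism can be sketched in a few lines of plain Python. This is a toy under stated assumptions: the weights are random rather than learned, positional information and layer normalization are omitted, and the "text" and "image patch" embeddings are random placeholders.

```python
import math
import random

def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def softmax(row):
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def random_matrix(rows, cols, rng):
    return [[rng.gauss(0.0, 0.1) for _ in range(cols)] for _ in range(rows)]

def multi_head_self_attention(tokens, num_heads, rng):
    """tokens: list of n vectors of size d, with d divisible by num_heads."""
    d = len(tokens[0])
    head_dim = d // num_heads
    # Random projection weights stand in for learned parameters.
    w_q, w_k, w_v, w_o = (random_matrix(d, d, rng) for _ in range(4))
    q, k, v = matmul(tokens, w_q), matmul(tokens, w_k), matmul(tokens, w_v)

    head_outputs = []
    for h in range(num_heads):
        cols = slice(h * head_dim, (h + 1) * head_dim)
        qh = [row[cols] for row in q]
        kh = [row[cols] for row in k]
        vh = [row[cols] for row in v]
        # Scaled dot-product attention: softmax(Q K^T / sqrt(head_dim)) V
        scores = matmul(qh, [list(c) for c in zip(*kh)])
        weights = [softmax([s / math.sqrt(head_dim) for s in row]) for row in scores]
        head_outputs.append(matmul(weights, vh))

    # Concatenate the heads for each token, then apply the output projection.
    concat = [sum((head[i] for head in head_outputs), []) for i in range(len(tokens))]
    return matmul(concat, w_o)

rng = random.Random(0)
text_tokens = random_matrix(3, 8, rng)    # placeholder embeddings for 3 word tokens
image_patches = random_matrix(4, 8, rng)  # placeholder embeddings for 4 image patches
sequence = text_tokens + image_patches    # one sequence, regardless of modality
out = multi_head_self_attention(sequence, num_heads=2, rng=rng)
print(len(out), len(out[0]))  # 7 8
```

Nothing in the attention function depends on where a token came from, which is the structural convergence described above: once inputs are tokenized into vectors of the same size, text and image tokens are handled identically.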

But a deeper question is why the Transformer architecture has advantages for representing all modalities. We have some thoughts on this, and our conclusion is that the Transformer can model different modalities in a more universal geometric topological space, further reducing the modeling barriers between modalities. This advantage lays the foundation for architectural convergence in the multimodal direction.

Second, we found that multimodal pre-training is also converging. BERT was first proposed in the field of natural language, and its masking approach set off the boom in pre-trained models. Recent work, including Kaiming He's MAE and work in the speech field, has continued to use similar ideas. Through masking, a convergent pre-training architecture is forming across modalities. The pre-training barriers between modalities have now been broken down, and pre-trained models have converged further. For example, MAE brings BERT-style masked pre-training to vision, and similar methods have been applied to speech. The masking mechanism therefore shows universality across modalities.
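The shared masking idea can be sketched as one function that works on any token sequence, whether word tokens or image patch ids. This is a simplified illustration: the function name `mask_sequence` is hypothetical, BERT's actual recipe also sometimes keeps or randomly replaces selected tokens, and MAE drops masked patches from the encoder input rather than substituting a placeholder. The mask ratios used below (15% for text, 75% for image patches) are the ones reported in the BERT and MAE papers.

```python
import random

MASK = "[MASK]"

def mask_sequence(tokens, mask_ratio, rng):
    """Hide a random subset of positions.

    Returns the corrupted sequence and a dict of position -> original token,
    which is what a model would be trained to reconstruct.
    """
    n = len(tokens)
    num_masked = max(1, round(n * mask_ratio))
    masked_positions = set(rng.sample(range(n), num_masked))
    corrupted = [MASK if i in masked_positions else t for i, t in enumerate(tokens)]
    targets = {i: tokens[i] for i in masked_positions}
    return corrupted, targets

rng = random.Random(0)

# Text: mask about 15% of word tokens.
words = ["the", "frog", "sat", "on", "a", "log"]
masked_words, word_targets = mask_sequence(words, 0.15, rng)

# Image: mask 75% of a 4x4 grid of patches (patch ids stand in for pixels).
patches = [f"patch_{i}" for i in range(16)]
masked_patches, patch_targets = mask_sequence(patches, 0.75, rng)

print(masked_words)
print(len(patch_targets), "of", len(patches), "patches hidden")
```

The same corrupt-then-reconstruct objective applies to both inputs; only the tokenization and the mask ratio differ.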

The third trend is the unification of architecture, parameters, and pre-training objectives. Currently, the Transformer architecture is used to model text, images, and audio, and parameters can be shared across multiple tasks.

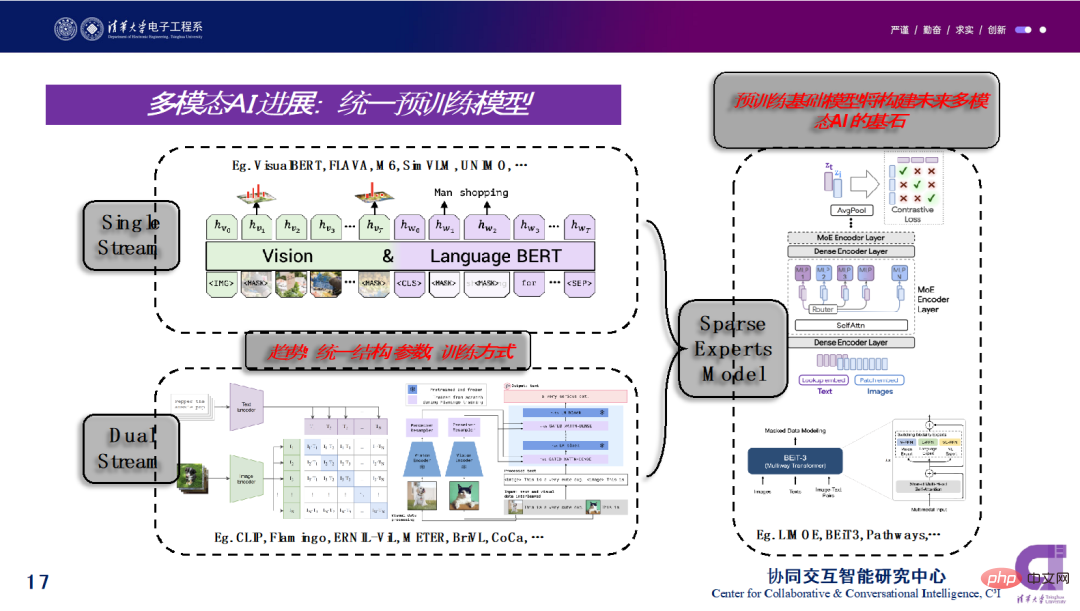

Specifically, current multimodal pre-training models mainly fall into single-stream and dual-stream models. A single-stream architecture assumes that the underlying correlation and alignment between the two modalities is relatively simple. A dual-stream architecture assumes that intra-modal interaction and cross-modal interaction need to be separated in order to obtain better multimodal representations and to encode and fuse information from different modalities.

The question is whether there is a better way to unify these ideas. The current trend suggests that sparsity and modularity may be two key properties of more powerful multimodal, multitask models. A sparse expert model can be viewed as a balance between single-stream and dual-stream architectures, with different experts handling different modalities and tasks.
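A minimal sketch of sparse expert routing may help here: a gate scores every expert for a given token, only the top-k experts run, and their outputs are mixed by renormalized gate weights. Everything below is a toy assumption: the experts are simple scaling functions, and the gate logits are hand-written placeholders, whereas in a real model both would be learned.

```python
import math

def softmax(values):
    m = max(values)
    exps = [math.exp(v - m) for v in values]
    total = sum(exps)
    return [e / total for e in exps]

def sparse_moe(x, gate_logits, experts, k=2):
    """Route input x to the k experts with the highest gate logits.

    Only the selected experts are evaluated (sparsity); their outputs are
    combined with softmax weights computed over the selected logits.
    Returns the mixed output and the indices of the experts that ran.
    """
    top = sorted(range(len(experts)), key=lambda i: gate_logits[i], reverse=True)[:k]
    weights = softmax([gate_logits[i] for i in top])
    output = [0.0] * len(x)
    for idx, w in zip(top, weights):
        expert_out = experts[idx](x)
        output = [o + w * y for o, y in zip(output, expert_out)]
    return output, top

def make_scaling_expert(scale):
    """Toy expert: multiplies every feature by a constant."""
    return lambda x: [scale * v for v in x]

experts = [make_scaling_expert(s) for s in (1.0, 2.0, 3.0, 4.0)]
token = [1.0, -1.0]

# Hypothetical gate logits: text tokens favor experts 0 and 1, image tokens 2 and 3.
text_gate = [4.0, 3.0, -2.0, -2.0]
image_gate = [-2.0, -2.0, 3.0, 4.0]

_, text_experts = sparse_moe(token, text_gate, experts)
_, image_experts = sparse_moe(token, image_gate, experts)
print(text_experts, image_experts)
```

All tokens share one set of experts, as in a single-stream model, yet each modality can end up using its own subset, as in a dual-stream model. That is the sense in which sparse routing sits between the two.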

One question we raised is whether the collaborative interaction approach can compress the Google Pathways model a hundredfold on these specific tasks while retaining its sparse and modular structure. This type of work is well worth follow-up research.

Returning to conversational collaborative interaction, I think ChatGPT is a very important piece of work. Its core value is that it marks a new milestone in the direction of collaborative interaction. It can be used for academic writing, code generation, encyclopedia Q&A, instruction understanding, and more. The pre-trained foundation model provides capabilities such as interactive question answering, writing, and code generation. ChatGPT's core improvement is to add human-in-the-loop reinforcement learning on top of GPT-3, based on humans selecting and ranking different answers.

Although ChatGPT currently uses natural language as its main carrier, the interaction will certainly expand to multiple modalities. Human collaboration and interaction in multimodal scenarios will be more efficient, carry more information, and bring together knowledge from various modalities.

If human-in-the-loop collaborative interaction is integrated with AI generation capabilities, a lot can be done. For example, a collaborative interaction model such as ChatGPT can be combined with a diffusion model for product innovation and design innovation. During the collaborative interaction, models such as ChatGPT continually look for mainstream trends in current design and for specific consumer preferences. Through insights into the emotional experience of consumer scenarios, judgments about design and technology trends, and the analysis of a large number of pictures, co-creation can be achieved through multiple rounds of collaborative interaction with designers or professional product managers.

In some very specific scenarios, such as the smart home field, many people actually have no prior knowledge, but they can generate design ideas through multiple rounds of human-machine collaborative interaction, and then use a Stable Diffusion model to turn the core keywords and scene experiences of human creativity into high-fidelity original design drawings. Collaborative interaction can help people carry out product innovation and design innovation more efficiently, which is what we at Xianyuan Technology are doing.

Multimodal work is becoming more and more important, so our center is taking the lead in launching the TPAMI 2023 special issue on "Large-Scale Multimodal Learning". The goal is to bring together people from multiple disciplines (such as computer vision, natural language processing, machine learning, deep learning, smart healthcare, bioinformatics, and cognitive science) to raise important scientific questions and discover research opportunities that address the outstanding challenges of multimodal learning in the era of deep learning and big data.
