Call for suspension of GPT-5 research and development sparks fierce battle! Andrew Ng and LeCun took the lead in opposition, while Bengio stood in support

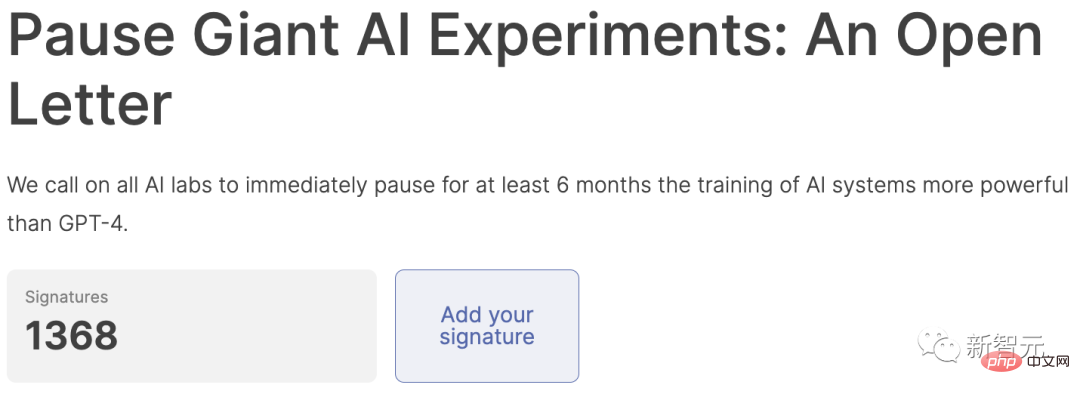

Yesterday, an open letter signed by more than a thousand prominent figures, calling for a six-month pause on training super-powerful AI, exploded like a bomb across the Internet at home and abroad.

After a day of heated debate, several key figures and other big names responded publicly.

Some responses were formal, some were personal, and some sidestepped the question. But one thing is certain: both the views of these figures and the interest groups they represent deserve careful consideration.

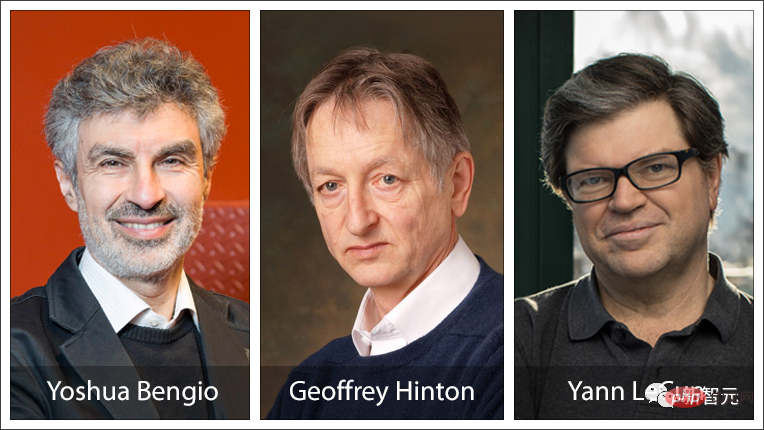

Interestingly, among the three Turing Award giants, one took the lead in signing, one strongly opposed, and one did not say a word.

## Bengio’s signature, Hinton’s silence, LeCun’s opposition

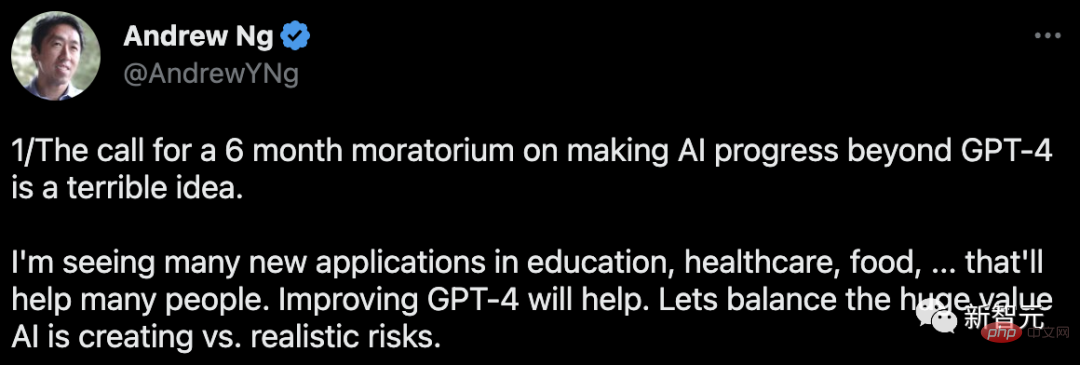

Andrew Ng (opposition)

In this debate, Andrew Ng, a founder of Google Brain and co-founder of the online education platform Coursera, was a clear-cut opponent.

He clearly stated his attitude: suspending "making AI progress beyond GPT-4" for 6 months is a bad idea.

He said that he has seen many new AI applications in education, health care, food and other fields, and many people will benefit from this. And improving GPT-4 would also be beneficial.

What we should do is to strike a balance between the huge value created by AI and the real risks.

Regarding the open letter's statement that "if the training of super-powerful AI cannot be quickly suspended, the government should step in," Ng said the idea is terrible.

He said that having governments pause emerging technologies they do not understand is anti-competitive, sets a bad precedent, and is terrible innovation policy.

He admitted that responsible AI is important and AI does have risks.

But the media's portrayal of "AI companies frantically releasing unsafe code" is clearly exaggerated. The vast majority of AI teams take responsible AI and safety seriously. He also admitted, however, that "unfortunately, not all" do.

Finally, he emphasized again:

A 6-month pause is not a practical proposal. To improve the safety of AI, regulations around transparency and auditing would be more practical and have a greater impact. As we advance the technology, let’s also invest more in safety instead of stifling progress.

Under his tweet, netizens voiced strong disagreement: the reason these big names are so calm is probably that the pain of unemployment will not fall on them.

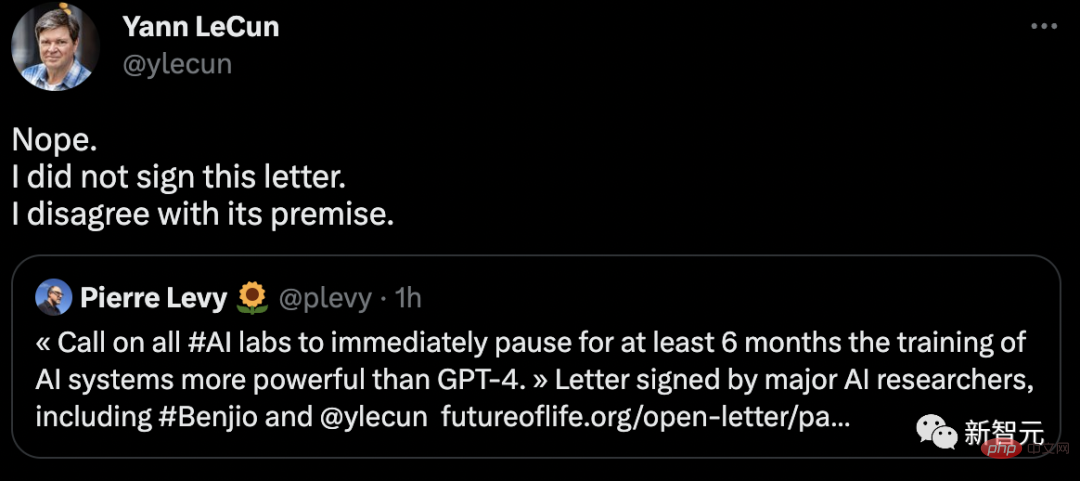

LeCun (opposition)

As soon as the open letter was published, netizens rushed to spread the word: Turing Award giants Bengio and LeCun both signed the letter!

LeCun, who is very active online, immediately denied it: No, I did not sign, and I do not agree with the premise of this letter.

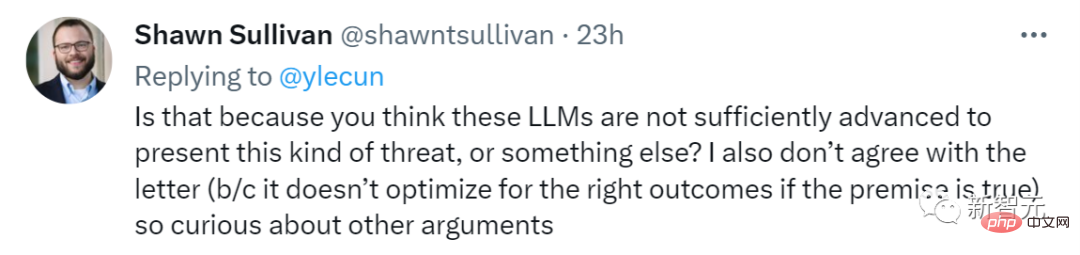

One netizen replied: I don’t agree with this letter either, but I’m curious. Do you disagree because you think LLMs are not advanced enough to threaten humanity at all, or for other reasons?

But LeCun did not answer any of these questions.

After 20 hours of mysterious silence, LeCun suddenly retweeted a netizen’s tweet:

"OpenAI waited long enough. It held GPT-4 for 6 months before releasing it! They even wrote a white paper about this..."

LeCun replied approvingly: That's right, a so-called "pause in research and development" amounts to nothing more than "secret research and development," which is exactly the opposite of what some signatories hope for.

It seems that nothing can be hidden from LeCun’s discerning eyes.

The netizen who asked before also agreed: This is why I oppose this petition - no "bad guy" will really stop.

"So this is like an arms treaty that no one abides by? Are there not many examples of this in history?"

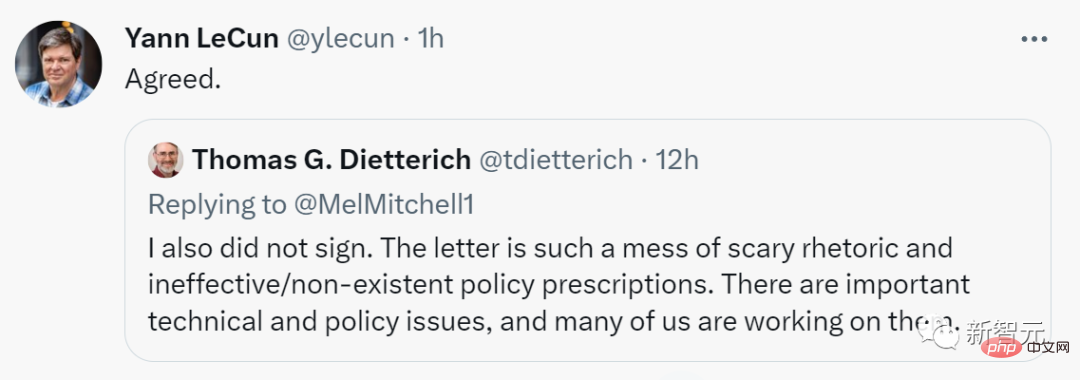

A while later, he retweeted another prominent figure's tweet.

The tweet read: "I didn't sign it either. This letter is filled with a bunch of terrible rhetoric and invalid/non-existent policy prescriptions." LeCun responded: "I agree."

Bengio and Marcus (yes)

The first prominent signatory of the open letter was Turing Award winner Yoshua Bengio.

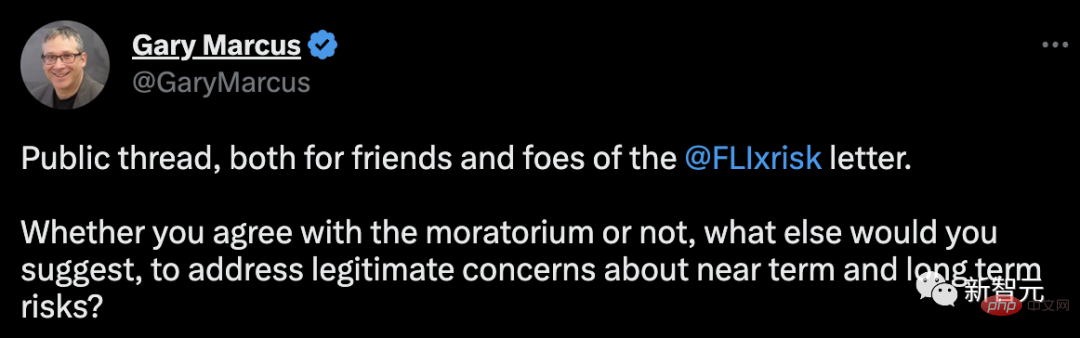

New York University professor Gary Marcus also signed. He seems to have been the first person to reveal the open letter.

As the discussion grew louder, he quickly published a blog post explaining his position, which was full of notable points.

Breaking News: The letter I mentioned earlier is now public. The letter calls for a six-month moratorium on training AI "more powerful than GPT-4." Many famous people signed it. I also joined.

I was not involved in drafting it, and there are other things to quibble with (e.g., which AIs are more powerful than GPT-4? Since the details of GPT-4's architecture and training set have not been announced, how would we know?), but the spirit of the letter is one I support: until we can better handle the risks and benefits, we should proceed with caution.

It will be interesting to see what happens next.

Another opinion that Marcus said he agreed with 100% is also interesting. It reads:

GPT-5 will not be AGI. It is almost certain that no GPT model will be AGI. It is completely impossible for any model optimized with the methods we use today (gradient descent) to be AGI. The upcoming GPT models will definitely change the world, but over-hyping them is crazy.

Altman (non-committal)

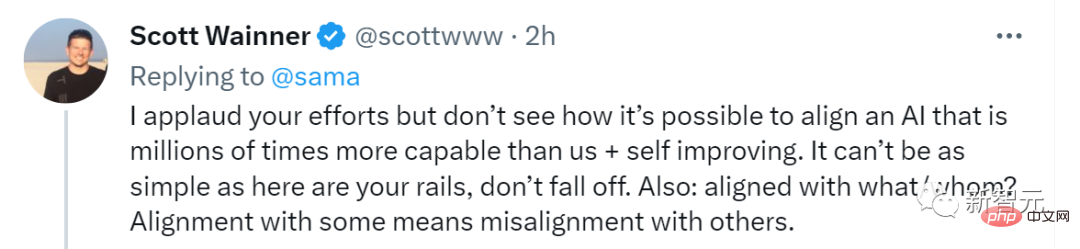

As of now, Sam Altman has not taken a clear position on the open letter.

However, he did share some views on artificial general intelligence (AGI).

What a good AGI future requires:

1. The technical ability to align a superintelligence

2. Sufficient coordination among most of the leading AGI efforts

3. An effective global regulatory framework

Some netizens questioned: "Aligned with what? Aligned with whom? Being aligned with some people means not being aligned with others."

Another comment drew attention: "Then you should make it open."

Greg Brockman, another co-founder of OpenAI, retweeted Altman’s tweet and once again emphasized that OpenAI’s mission “is to ensure that AGI benefits all mankind.”

Once again, netizens got to the point: you keep saying "aligned with the designer's intention" all day long, but beyond that, no one knows what alignment really means.

Yudkowsky (radical)

There is also a decision theorist named Eliezer Yudkowsky, who has a more radical attitude:

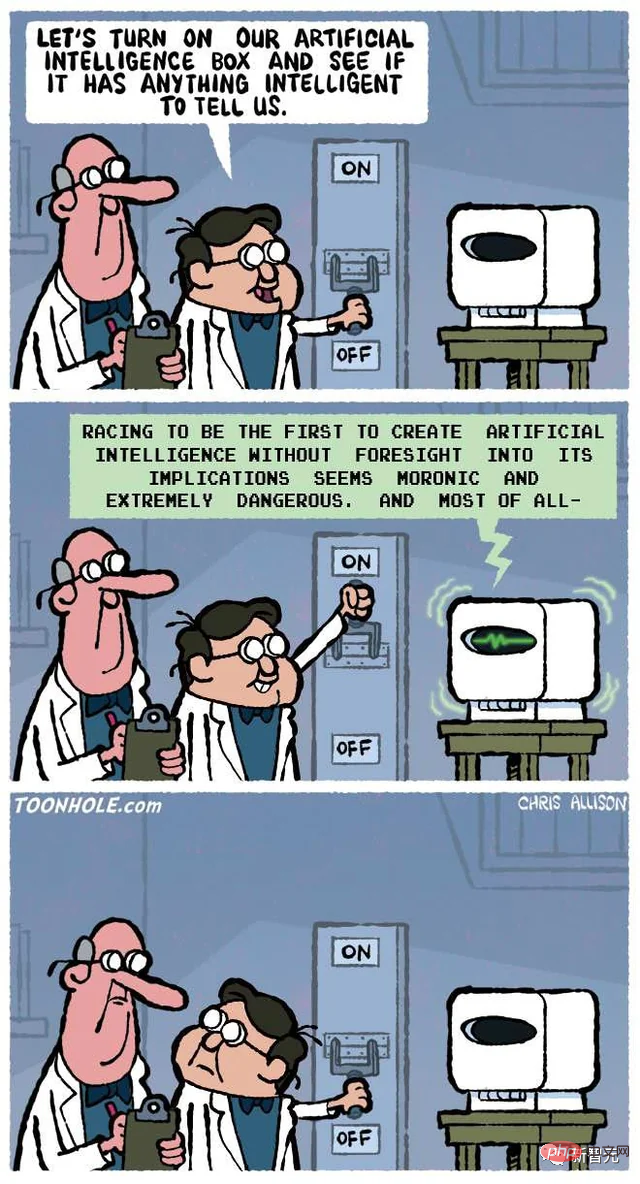

Pausing AI development is not enough. We need to shut it all down!

If this continues, every one of us will die.

Right after the open letter was released, Yudkowsky wrote a long article and published it in TIME magazine.

He said that he did not sign because, in his opinion, the letter was too mild.

The letter underestimates the seriousness of the situation and asks for too little to solve it.

He said that the key issue is not "human-competitive" intelligence. It is what happens once AI becomes smarter than humans.

The key is that many researchers, including him, believe that the most likely consequence of building an AI with superhuman intelligence is that everyone on the planet will die.

Not "maybe," but "definitely."

Without enough precision, the most likely result is that the AI we create will not do what we want, and will care neither about us nor about other sentient life.

Theoretically, we should be able to teach AI to learn this kind of care, but right now we don’t know how.

Without such care, the result is this: the AI does not love you, nor does it hate you. You are just a pile of atoms that it can use for anything.

And if humans try to resist a superhuman AI, they will inevitably fail, just like "the 11th century trying to defeat the 21st century" or "Australopithecus trying to defeat Homo sapiens."

Yudkowsky said that we tend to imagine a malicious AI as a thinker living on the Internet and sending ill-intentioned emails to humans every day. In fact, a hostile superhuman AI is better pictured as an alien civilization that thinks millions of times faster than humans. From its perspective, humans are stupid and slow.

When this AI is smart enough, it will not stay confined to computers. It can email DNA sequences to laboratories, which will produce proteins on demand, and the AI will then have a physical life form. Then all living things on earth will die.

How should humans survive in this situation? We have no plans at the moment.

OpenAI has only made a public call for aligning future AI. And DeepMind, another leading AI laboratory, has no plan at all.

From the OpenAI official blog

These dangers exist regardless of whether the AI is conscious. The danger comes from powerful cognitive systems that optimize hard and compute outputs meeting complex outcome criteria.

Indeed, current AI may simply imitate self-awareness from training data. But we actually know very little about the internal structure of these systems.

If we are still this ignorant about GPT-4, and GPT-5 makes a capability leap as large as the one from GPT-3 to GPT-4, it will be hard for us to know whether it was humans or the AI itself that created GPT-5.

On February 7, Microsoft CEO Nadella gloated publicly that the new Bing had forced Google to "come out and do a little dance."

His behavior is irrational.

We should have thought about this issue 30 years ago. Six months is not enough to bridge the gap.

It has been more than 60 years since the concept of AI was proposed. We should spend at least 30 years ensuring that superhuman AI "doesn't kill anyone."

We simply can’t learn from our mistakes, because once you’re wrong, you’re dead.

If a 6-month pause would allow the earth to survive, I would agree, but it won’t.

What we have to do is these:

1. The training of new large language models must not only be suspended indefinitely, but the suspension must also be enforced globally.

There can be no exceptions, including for governments or militaries.

2. Shut down all large GPU clusters, the large computing facilities used to train the most powerful AI.

Pause all large-scale AI training runs, set a cap on the computing power anyone may use to train an AI system, and gradually lower this cap over the coming years to compensate for more efficient training algorithms.

Governments and militaries are no exception. Multinational agreements should be established immediately to prevent banned activities from moving elsewhere.

Track all GPUs sold. If there is intelligence that GPU clusters are being built in countries outside the agreement, the offending data center should be destroyed through air strikes.

A violation of the moratorium should be more worrying than armed conflict between countries. Don't frame anything as a conflict between national interests, and know that anyone who talks about an arms race is a fool.

In this regard, we all either live together or die together. This is not a policy but a fact of nature.

Poll

Because so many people were signing, the team decided to pause the display of new signatures so that vetting could catch up. (The signatures at the top of the list have all been directly verified.)
