Wear a VR helmet to teach a robot to grasp, and the robot learns it on the spot

In recent years, robotics has produced many interesting developments, such as robot dogs that can dance and play football and bipedal robots that carry objects. These robots typically rely on control policies generated from sensory input. Although this approach avoids the challenges of developing state estimation modules, modeling object properties, and tuning controller gains, it requires significant domain expertise. Despite much progress, learning bottlenecks still make it difficult for robots to perform arbitrary tasks and achieve general-purpose goals.

A core question in robot learning is how to collect training data for robots. One approach is to have the robot collect data itself through a self-supervised data collection strategy. While this approach is relatively robust, it often requires thousands of hours of real-world interaction, even for relatively simple manipulation tasks. Another is to train on simulated data and then transfer to real robots (Sim2Real). This allows robots to learn complex behaviors orders of magnitude faster. However, setting up a simulated robotic environment and specifying simulator parameters often requires extensive domain expertise.

There is also a third approach: ask human teachers to provide demonstrations, and then train the robot to imitate them. This imitation approach has recently shown great potential on a variety of challenging manipulation problems. However, most of this work suffers from a fundamental limitation: it is difficult to collect high-quality demonstration data for robots.

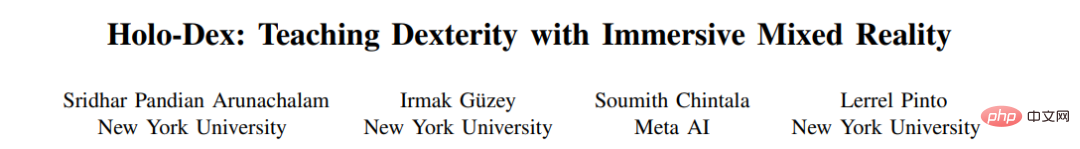

To address these issues, researchers from New York University and Meta AI proposed HOLO-DEX, a new framework for collecting demonstration data and training dexterous robots. It uses a VR headset (such as the Quest 2) to place human teachers in an immersive virtual world. In this virtual world, teachers can see what the robot "sees" through the robot's eyes and control the Allegro robot hand via built-in pose detectors.

The result looks like a human teaching the robot the movements step by step.

Through a low-latency observation feedback system, HOLO-DEX lets humans seamlessly provide high-quality demonstration data to robots. It has the following three advantages:

- Compared with self-supervised data collection methods, HOLO-DEX builds on powerful imitation learning techniques and can be trained quickly without a reward mechanism;

- Compared with the Sim2Real method, the learned policies can be executed directly on the real robot because they are trained on real data;

- Compared with other imitation methods, HOLO-DEX significantly reduces the need for domain expertise and only requires people to operate VR equipment.

Paper link: https://arxiv.org/pdf/2210.06463.pdf

Project link: https://holo-dex.github.io/

Code link: https://github.com/SridharPandian/Holo-Dex

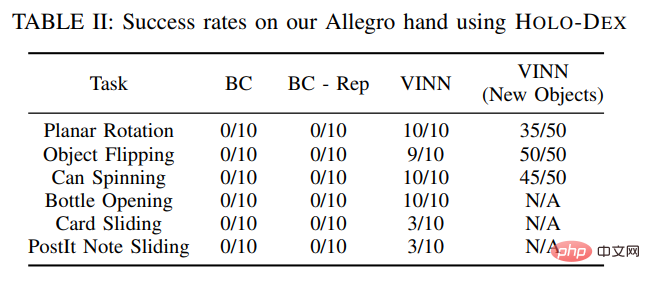

To evaluate HOLO-DEX, the study conducted experiments on six tasks requiring dexterity, including manipulating handheld objects and unscrewing bottle caps with one hand. The study found that human teachers using HOLO-DEX were 1.8 times faster than with previous work on single-image teleoperation. On 4 of the 6 tasks, the success rate of policies learned with HOLO-DEX exceeds 90%. Additionally, the study found that the dexterous policies learned through HOLO-DEX can generalize to new, unseen target objects.

Overall, the contributions of this study include:

- It provides a method for human teachers to achieve high-quality teleoperation in mixed reality with the help of VR headsets;

- Experiments show that the demonstrations collected with HOLO-DEX can be used to train effective and versatile dexterous manipulation behaviors, supporting the utility of its design;

- The mixed reality API, the collected demonstrations, and the training code for HOLO-DEX have been open-sourced: https://holo-dex.github.io/

HOLO-DEX Architecture Overview

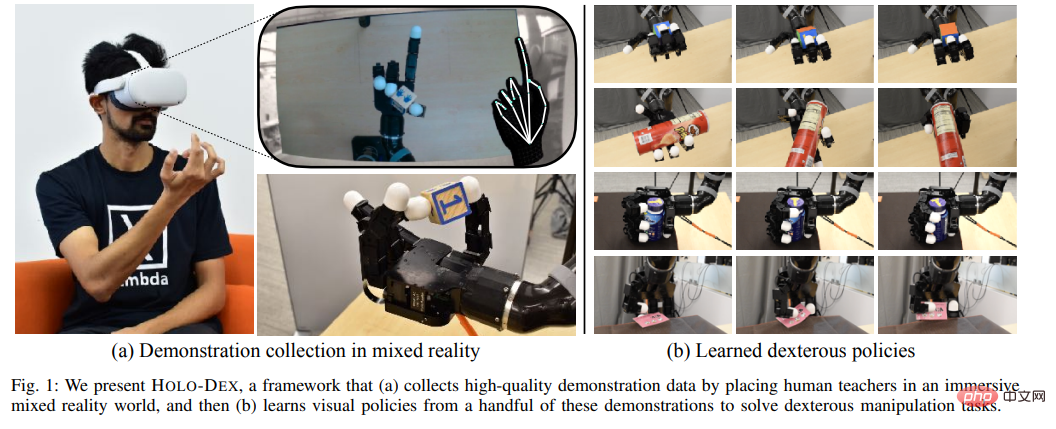

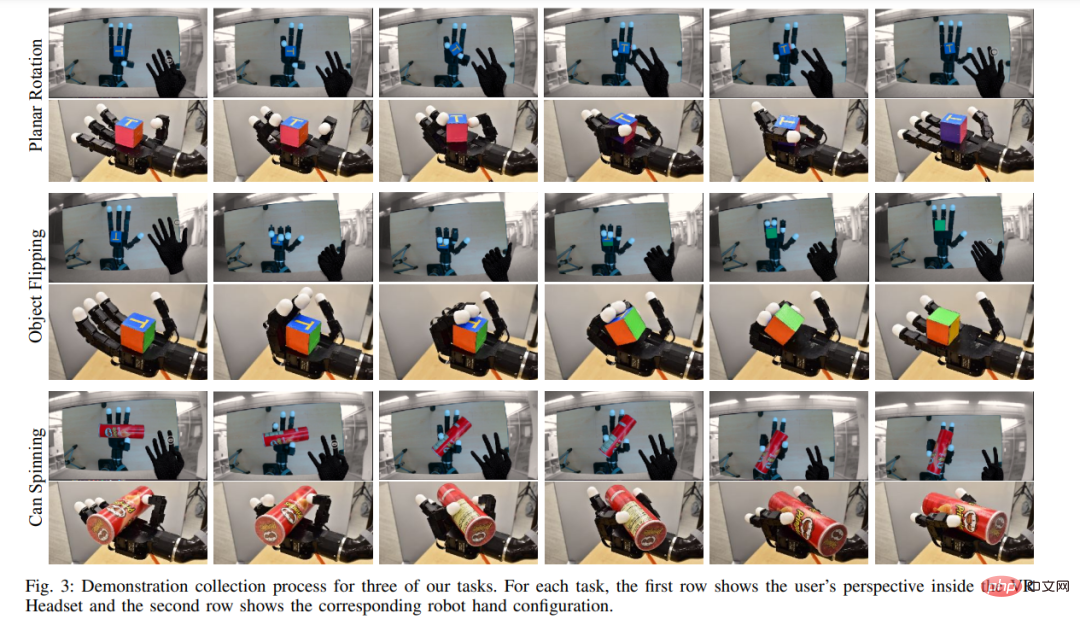

As shown in Figure 1 below, HOLO-DEX operates in two stages. In the first stage, a human teacher uses a virtual reality (VR) headset to provide demonstrations to the robot. This stage includes creating a virtual world for teaching, estimating the teacher's hand pose, retargeting the teacher's hand pose to the robot hand, and finally controlling the robot hand. After some demonstrations have been collected in the first stage, the second stage of HOLO-DEX learns visual policies to solve the demonstrated tasks.

The study used the Meta Quest 2 VR headset, which has a resolution of 1832 × 1920 and a refresh rate of 72 Hz, to place human teachers in the virtual world. The base version of the headset costs $399 and is relatively light at 503 grams, making demonstrations easier and more comfortable for teachers. In addition, the Quest 2 API allows the creation of custom mixed reality worlds that visualize the robotic system alongside diagnostic panels in VR.

Hand Pose Retargeting

Next, the teacher's hand pose extracted from VR needs to be retargeted to the robot hand. This first involves computing the angle of each joint of the teacher's hand; a direct retargeting method is then to command the robot's joints to move to the corresponding angles. This method worked for all fingers in the study except the thumb: the shape of the Allegro robot hand does not exactly match that of a human hand, so direct mapping does not work well for the thumb. To solve this problem, the study maps the spatial coordinates of the teacher's thumb tip to the robot's thumb tip, and then computes the thumb's joint angles with an inverse kinematics solver. Note that since the Allegro hand does not have a pinky finger, the study ignored the angles of the teacher's pinky finger.

The entire pose retargeting process does not require any calibration or teacher-specific adjustments to collect demonstrations. However, the study found that thumb retargeting could be improved by finding a specific mapping from the teacher's thumb to the robot's thumb. The entire process is computationally cheap and can transmit the desired robot hand pose at 60 Hz.
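The direct joint-angle mapping described above can be illustrated with a minimal sketch. It assumes the hand tracker returns an ordered chain of 3D keypoints per finger; the function names and the joint limits are hypothetical and are not taken from the HOLO-DEX code. Thumb retargeting through inverse kinematics is not covered here.

```python
import numpy as np

def joint_angle(parent, joint, child):
    """Bend angle (radians) at `joint`, computed from three 3D keypoints.

    An angle of 0 means the two bones are collinear (finger straight).
    """
    bone_in = np.asarray(joint, dtype=float) - np.asarray(parent, dtype=float)
    bone_out = np.asarray(child, dtype=float) - np.asarray(joint, dtype=float)
    cos_theta = np.dot(bone_in, bone_out) / (
        np.linalg.norm(bone_in) * np.linalg.norm(bone_out)
    )
    # Clip to guard against rounding slightly outside [-1, 1].
    return float(np.arccos(np.clip(cos_theta, -1.0, 1.0)))

def retarget_finger(keypoints, lower, upper):
    """Map a chain of finger keypoints to robot joint commands.

    `lower` and `upper` are hypothetical joint limits (radians) used to keep
    the commanded angles within the robot's range.
    """
    angles = [
        joint_angle(keypoints[i], keypoints[i + 1], keypoints[i + 2])
        for i in range(len(keypoints) - 2)
    ]
    return np.clip(angles, lower, upper)

# A finger bent by 90 degrees at its first joint and straight at the second.
finger = [[0, 0, 0], [1, 0, 0], [1, 1, 0], [1, 2, 0]]
commands = retarget_finger(finger, lower=0.0, upper=1.2)
```

In this sketch the first joint's 90-degree bend exceeds the assumed upper limit and is clipped, while the second joint stays at zero.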

Robot Hand Control

The Allegro Hand is controlled asynchronously through the ROS communication framework. Given the robot hand joint positions computed by the retargeting procedure, the study uses a PD controller to output the required torques at 300 Hz. To reduce steady-state error, the study uses a gravity compensation module to compute offset torques. In latency tests, the study found that latency stayed below 100 milliseconds when the VR headset was on the same local network as the robot hand. Low latency and low error are critical to HOLO-DEX because they allow a human teacher to teleoperate the robot hand intuitively.

While controlling the robot hand, human teachers can see the robot's movements in real time (60 Hz), which allows them to correct the robot hand's execution errors. During teaching, the study recorded observations from three RGBD cameras and the robot's motion information at 5 Hz. The recording frequency had to be reduced because of the large data footprint and the bandwidth required to record multiple cameras.
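As a rough illustration of a PD controller with a gravity-compensation offset, the sketch below computes one torque command per joint. The gains and the gravity torques are placeholder values chosen for the example, not those used in the study, and the ROS plumbing is omitted.

```python
import numpy as np

def pd_torque(q_des, q, q_dot, kp, kd, tau_gravity):
    """Textbook PD law plus a feedforward gravity term.

    q_des, q    : desired and measured joint positions (radians)
    q_dot       : measured joint velocities (radians/second)
    kp, kd      : proportional and derivative gains (hypothetical values)
    tau_gravity : offset torques from a gravity model (assumed given)
    """
    q_des, q, q_dot = (np.asarray(x, dtype=float) for x in (q_des, q, q_dot))
    return kp * (q_des - q) - kd * q_dot + np.asarray(tau_gravity, dtype=float)

# One control step for a two-joint example.
tau = pd_torque(
    q_des=[1.0, 0.0],
    q=[0.5, 0.0],
    q_dot=[0.2, 0.0],
    kp=2.0,
    kd=0.5,
    tau_gravity=[0.1, 0.05],
)
```

Without the gravity term, a pure PD controller settles with a small position error under a constant load, which is why an offset torque helps reduce steady-state error.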

Using HOLO-DEX data for imitation learning

After the data is collected, the second stage begins: HOLO-DEX trains visual policies on the data. The study adopts the nearest-neighbor imitation (INN) algorithm for learning. In previous work, INN was shown to produce dexterous state-based policies on the Allegro hand. HOLO-DEX goes a step further and demonstrates that visual policies learned this way generalize to novel objects in a variety of dexterous manipulation tasks.

To obtain the low-dimensional embeddings, the study tried several state-of-the-art self-supervised learning algorithms and found that BYOL gave the best nearest-neighbor results, so BYOL was selected as the base self-supervised learning method.
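The idea behind nearest-neighbor imitation can be sketched in a few lines: embed the current observation, find the closest demonstration frame, and replay the action recorded with it. The class name, the Euclidean distance, and the toy data below are assumptions for illustration; the study's actual encoder, distance metric, and action format may differ.

```python
import numpy as np

class NearestNeighborPolicy:
    """Non-parametric policy that replays the action of the closest demo frame."""

    def __init__(self, demo_embeddings, demo_actions):
        # One row per recorded demonstration frame.
        self.embeddings = np.asarray(demo_embeddings, dtype=float)
        self.actions = np.asarray(demo_actions, dtype=float)

    def act(self, query_embedding):
        query = np.asarray(query_embedding, dtype=float)
        # Euclidean distance from the query to every stored embedding.
        dists = np.linalg.norm(self.embeddings - query, axis=1)
        return self.actions[int(np.argmin(dists))]

# Toy data: three demo frames with 2D embeddings and 1D actions.
policy = NearestNeighborPolicy(
    demo_embeddings=[[0.0, 0.0], [1.0, 1.0], [5.0, 5.0]],
    demo_actions=[[0.1], [0.2], [0.3]],
)
action = policy.act([0.9, 1.2])
```

Because nothing is fit beyond the embedding itself, the quality of such a policy depends mostly on how well nearby embeddings correspond to similar task states.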
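For readers unfamiliar with BYOL, the sketch below shows its two core pieces as they are commonly formulated: a loss equal to 2 minus twice the cosine similarity between the online network's prediction and the target network's projection, and an exponential-moving-average update of the target weights. This is a generic NumPy illustration and not the study's training code; the decay value `tau` is a placeholder.

```python
import numpy as np

def byol_loss(online_pred, target_proj):
    """Mean over the batch of 2 - 2 * cosine_similarity(prediction, target)."""
    p = np.asarray(online_pred, dtype=float)
    z = np.asarray(target_proj, dtype=float)
    p = p / np.linalg.norm(p, axis=-1, keepdims=True)
    z = z / np.linalg.norm(z, axis=-1, keepdims=True)
    return float(np.mean(2.0 - 2.0 * np.sum(p * z, axis=-1)))

def ema_update(target_params, online_params, tau=0.99):
    """Move the target weights slowly toward the online weights."""
    target_params = np.asarray(target_params, dtype=float)
    online_params = np.asarray(online_params, dtype=float)
    return tau * target_params + (1.0 - tau) * online_params

# Aligned vectors give zero loss; orthogonal vectors give a loss of 2.
aligned = byol_loss([[1.0, 0.0]], [[2.0, 0.0]])
orthogonal = byol_loss([[1.0, 0.0]], [[0.0, 3.0]])
```

In a full implementation the two inputs come from differently augmented views of the same image, and gradients flow only through the online branch.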

Experimental results

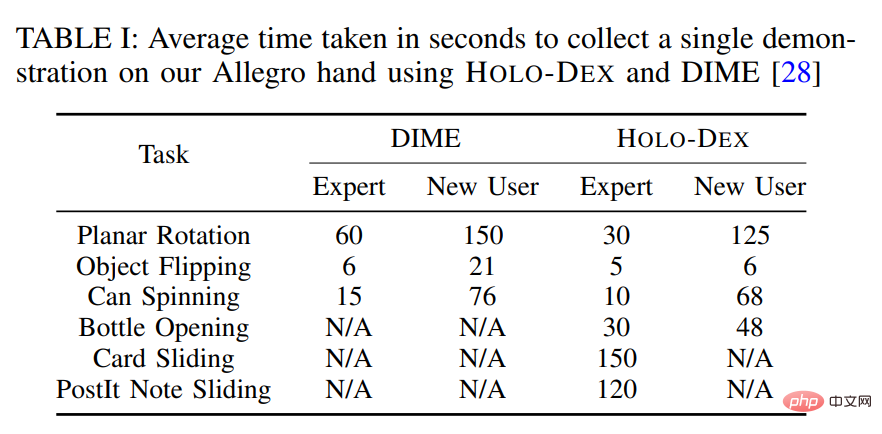

Table 1 below shows that HOLO-DEX collects successful demonstrations 1.8 times faster than DIME, the single-image teleoperation baseline. For the 3 of 6 tasks that require precise 3D motion, the study found that single-image teleoperation was not sufficient to collect even a single demonstration.

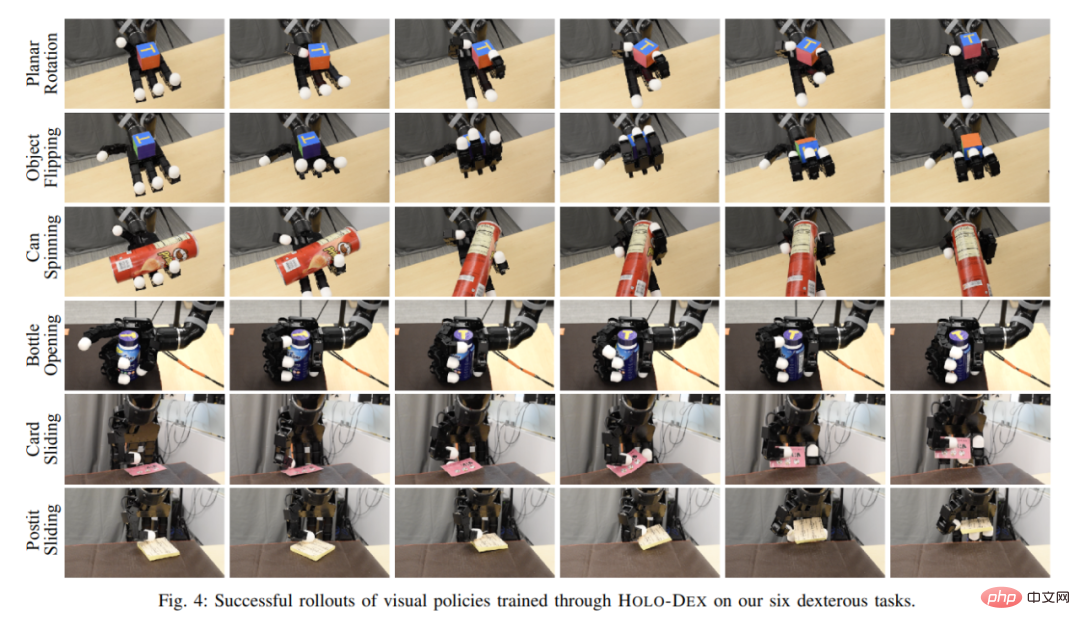

The study examined the performance of various imitation learning methods on the dexterous tasks. The success rate for each task is shown in Table 2 below.

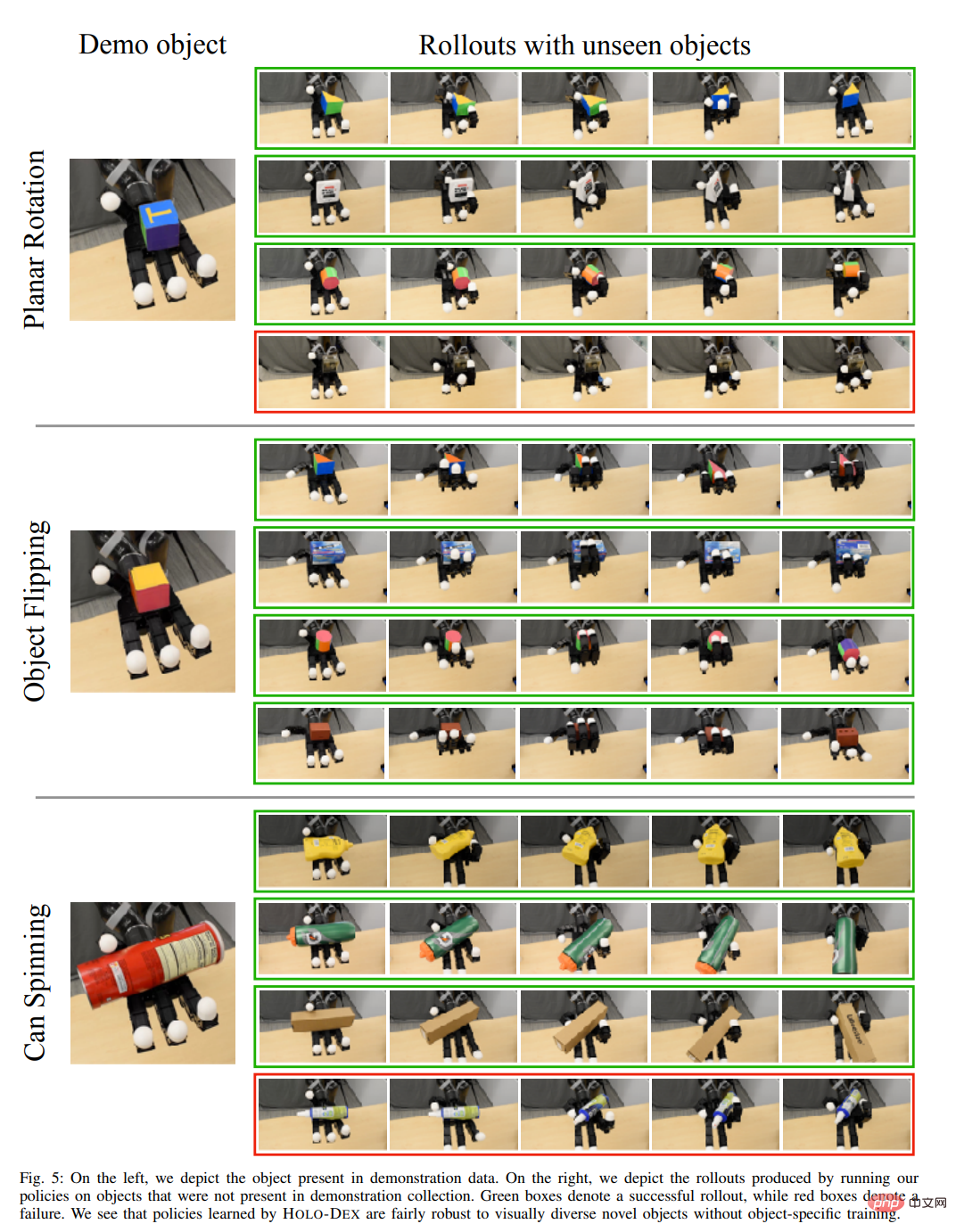

Since the policies proposed in this study are vision-based and do not require explicit estimation of object state, they are compatible with objects not seen during training. The study evaluated its in-hand manipulation policies, trained to perform the planar rotation, object flipping, and can spinning tasks, on objects with a variety of visual appearances and geometries, as shown in Figure 5 below.

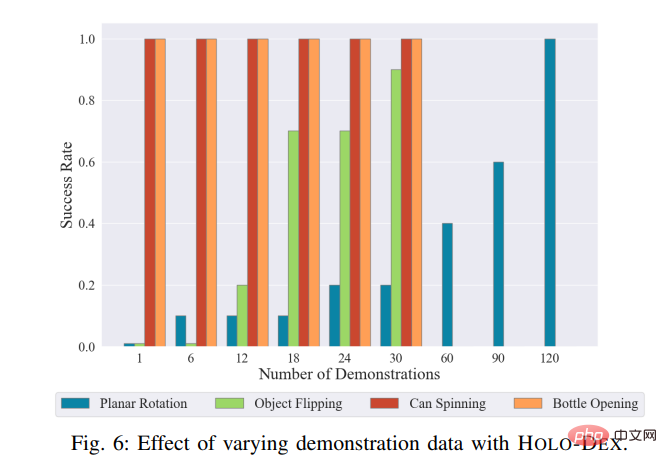

In addition, the study tested the performance of HOLO-DEX with data sets of different sizes for different tasks. The visualization results are shown in the figure below.
