An article explaining the testing technology of intelligent driving perception systems in detail


Foreword

With advances in artificial intelligence and its supporting software and hardware, autonomous driving has developed rapidly in recent years. Autonomous driving systems are now used in driver assistance systems for civilian vehicles, autonomous logistics robots, drones and other fields. The perception component is the core of an autonomous driving system: it enables the vehicle to analyze and understand information about the traffic environment inside and outside the vehicle. However, like other software systems, autonomous driving perception systems are plagued by software defects. Because these systems operate in safety-critical scenarios, such defects can have catastrophic consequences, and in recent years defects in autonomous driving systems have caused many fatalities and injuries. Testing technology for autonomous driving systems has therefore received widespread attention from academia and industry. Companies and research institutions have proposed a range of techniques and environments, including virtual simulation testing, real-world road testing and combined virtual-real testing. However, because of the particular input data types and the diversity of operating environments of autonomous driving systems, these testing techniques demand substantial resources and carry considerable risk. This article briefly analyzes the current state of research and application of testing methods for autonomous driving perception systems.

1 Autonomous Driving Perception System Test

Quality assurance of autonomous driving perception systems is becoming increasingly important. The perception system must help the vehicle automatically analyze and understand road conditions. Because the system is highly complex, its reliability and safety must be thoroughly tested across many traffic scenarios. Current autonomous driving perception tests fall into three main categories. Whatever the method, they share one feature that distinguishes them from traditional software testing: a strong dependence on test data.

The first category is based mainly on software engineering theory, formal methods and similar approaches, and takes the model structure and mechanisms of the perception system's implementation as its entry point. This approach relies on a high-level understanding of the operating mechanism and characteristics of autonomous driving perception. The purpose of this logic-oriented testing of the perception system is to discover design flaws in the perception module early in development, so that the model algorithms are shown to be effective in early system iterations. Based on the characteristics of autonomous driving algorithm models, researchers have proposed a series of techniques for test data generation, test verification metrics and test evaluation.

The second category, virtual simulation testing, uses computers to abstract the real traffic system in order to complete testing tasks, including system-level testing in a preset virtual environment and standalone testing of perception components. The effectiveness of virtual simulation testing depends on the realism of the virtual environment, the quality of the test data and the specific test execution techniques, so the method used to build the simulation environment, data quality assessment and test verification techniques all need careful consideration. Autonomous driving environment perception and scene analysis models rely on large-scale, valid traffic scene data for training, testing and verification. Researchers in China and abroad have studied traffic scenes and techniques for generating their data extensively. Methods such as data mutation, simulation engine generation and game engine rendering are used to construct virtual test scene data and obtain high-quality test data; the generated data is used both to test autonomous driving models and for data augmentation. Test scenario and data generation are the key technologies here. Test cases must be rich enough to cover the state space of the test samples, and test samples must also be generated under extreme traffic conditions to check whether the system's decision outputs remain safe in these boundary cases. Virtual testing often combines existing testing theories and techniques to build effective methods for evaluating and verifying test results.
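To make the idea of covering a scenario state space concrete, the sketch below enumerates a small, discretized scenario space and flags the boundary combinations in which every numeric parameter takes an extreme value. It is a minimal illustration under stated assumptions: the parameter names and values in `SCENARIO_SPACE` are hypothetical, and real scenario generators would typically sample continuous parameters rather than enumerate a full grid.

```python
import itertools
from typing import Dict, Iterator, List

# Hypothetical, discretized scenario parameters (illustrative values only).
SCENARIO_SPACE: Dict[str, List] = {
    "weather": ["clear", "rain", "fog"],
    "time_of_day": ["day", "dusk", "night"],
    "pedestrian_count": [0, 5, 20],
    "ego_speed_kmh": [10, 50, 120],
}


def enumerate_scenarios(space: Dict[str, List]) -> Iterator[Dict]:
    """Yield every combination of parameter values (full Cartesian product)."""
    keys = list(space)
    for values in itertools.product(*(space[k] for k in keys)):
        yield dict(zip(keys, values))


def is_boundary(scenario: Dict, space: Dict[str, List]) -> bool:
    """True if every numeric parameter sits at its minimum or maximum value."""
    for key, value in scenario.items():
        options = space[key]
        numeric = all(isinstance(v, (int, float)) for v in options)
        if numeric and value not in (min(options), max(options)):
            return False
    return True


scenarios = list(enumerate_scenarios(SCENARIO_SPACE))
boundary = [s for s in scenarios if is_boundary(s, SCENARIO_SPACE)]
print(len(scenarios), len(boundary))  # 81 36
```

A full Cartesian product grows exponentially with the number of parameters, so a realistic scenario space would need combinatorial or search-based selection; the exhaustive grid is used here only because it is easy to check by hand.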

The third category is road testing of real vehicles equipped with autonomous driving perception systems, including testing in preset closed scenarios and testing under actual road conditions. Its advantage is that testing in a real environment fully ensures the validity of the results. However, this approach faces several difficulties: test scenarios can hardly meet diverse needs, relevant traffic scene data samples are hard to obtain, manual annotation of data collected on real roads is expensive and of uneven quality, the required test mileage is excessive, and data collection cycles are too long. Manual driving in dangerous scenarios carries safety risks, and testers find these problems hard to solve in the real world. At the same time, traffic scene data often comes from a single source and lacks diversity, which is insufficient to meet the testing and verification needs of autonomous driving researchers in software engineering. Even so, road testing is an indispensable part of traditional vehicle testing and remains extremely important in autonomous driving perception testing.

From the perspective of test types, perception system testing covers different content at different stages of the vehicle development life cycle. Autonomous driving testing can be divided into model-in-the-loop (MiL), software-in-the-loop (SiL), hardware-in-the-loop (HiL) and vehicle-in-the-loop (ViL) testing, among others. This article focuses on the SiL- and HiL-related parts of autonomous driving perception testing. HiL testing includes the perception hardware, such as cameras, lidar and human-machine interaction perception modules, whereas SiL testing uses software simulation to replace the data generated by real hardware. Both aim to verify the functionality, performance, robustness and reliability of the autonomous driving system. For a given test object, different test types are combined with different testing techniques at each development stage of the perception system to meet the corresponding verification requirements. Perception information in current autonomous driving systems comes mainly from analyzing a few major data sources: images (cameras), point clouds (lidar) and the combined data of fusion perception systems. This article analyzes perception testing for these three categories.

2 Autonomous Driving Image System Test

Images captured by various types of cameras are one of the most important input data types for autonomous driving perception. While the vehicle is running, image data provides front-view, surround-view, rear-view and side-view environmental information, helping the autonomous driving system perform functions such as road ranging, target recognition and tracking, and automatic lane-change analysis. Image data comes in various formats, such as RGB images, semantic images and depth images, each with its own characteristics. For example, RGB images carry richer color information, depth images contain more scene depth information, and semantic images, obtained through pixel-level classification, are more useful for target detection and tracking tasks.

Testing image-based autonomous driving perception systems relies on large-scale, valid traffic scene images for training, testing and verification. However, manually labeling data collected on real roads is costly, data collection cycles are too long, laws and regulations governing manual driving in dangerous scenarios are incomplete, and labeling quality is uneven. In addition, traffic scene data often comes from a single source and lacks diversity, which is insufficient to meet the testing and verification needs of autonomous driving research.

Researchers in China and abroad have studied techniques for constructing and generating traffic scene data extensively, using methods such as data mutation, generative adversarial networks, simulation engine generation and game engine rendering to build virtual test scenario data. This yields high-quality test data, and the generated data is used both to test autonomous driving models and for data augmentation. Generating test images with hard-coded image transformations is an effective approach: a variety of mathematical transformations and image processing techniques can mutate the original image in order to expose potential erroneous behavior of the autonomous driving system under different environmental conditions.
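As a minimal sketch of hard-coded image transformations, the code below applies brightness, contrast and blur mutations to a grayscale image stored as nested lists, then reports which mutations change a model's prediction. This is a metamorphic check: a transformation that should not alter the scene's content should not alter the output either. `toy_model` is a hypothetical stand-in for the perception model under test, and the transformation parameters are arbitrary; a real pipeline would operate on RGB tensors with an image library.

```python
from typing import Callable, Dict, List

Image = List[List[int]]  # grayscale, pixel values in [0, 255]


def clamp(v: float) -> int:
    """Round to the nearest integer and clip to the valid pixel range."""
    return max(0, min(255, int(round(v))))


def adjust_brightness(img: Image, delta: int) -> Image:
    """Simulate a lighting change by adding a constant offset to every pixel."""
    return [[clamp(p + delta) for p in row] for row in img]


def adjust_contrast(img: Image, factor: float) -> Image:
    """Scale each pixel's deviation from mid-gray (128) by `factor`."""
    return [[clamp(128 + factor * (p - 128)) for p in row] for row in img]


def box_blur(img: Image) -> Image:
    """3x3 mean filter (border pixels average the neighbours that exist)."""
    h, w = len(img), len(img[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            vals = [img[j][i]
                    for j in range(max(0, y - 1), min(h, y + 2))
                    for i in range(max(0, x - 1), min(w, x + 2))]
            row.append(clamp(sum(vals) / len(vals)))
        out.append(row)
    return out


def metamorphic_violations(model: Callable[[Image], str], img: Image,
                           mutations: Dict[str, Callable[[Image], Image]]) -> List[str]:
    """Names of mutations whose prediction differs from the original one."""
    expected = model(img)
    return [name for name, mutate in mutations.items() if model(mutate(img)) != expected]


def toy_model(img: Image) -> str:
    """Hypothetical detector: reports an object when the image has strong contrast."""
    flat = [p for row in img for p in row]
    return "object" if max(flat) - min(flat) > 60 else "empty"


seed = [[60, 60, 160, 160] for _ in range(4)]
mutations = {
    "brighter": lambda im: adjust_brightness(im, 40),
    "reduce_contrast": lambda im: adjust_contrast(im, 0.5),
    "blur": box_blur,
}
print(metamorphic_violations(toy_model, seed, mutations))  # ['reduce_contrast']
```

Here the toy detector survives the brightness and blur mutations but misses the object once contrast is halved, which is exactly the kind of environment-dependent failure this style of testing is meant to surface.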

Zhang et al. used a generative adversarial network-based image style transfer method to simulate vehicle driving scenes under specified environmental conditions. Some studies perform autonomous driving tests in virtual environments, building traffic scenes from the 3D models of a physics simulation and rendering them into 2D images that serve as input to the perception system. Test images can also be produced by synthesis: modifiable content is sampled from a low-dimensional subspace of images and then composited into a new image. Compared with directly mutating images, synthesized scenes are richer and allow more freedom in perturbing the image. Fremont et al. used Scenic, a domain-specific programming language for autonomous driving scenarios, to design test scenarios in advance, generated the corresponding traffic scene images through a game engine interface, and used the rendered images to train and verify a target detection model.

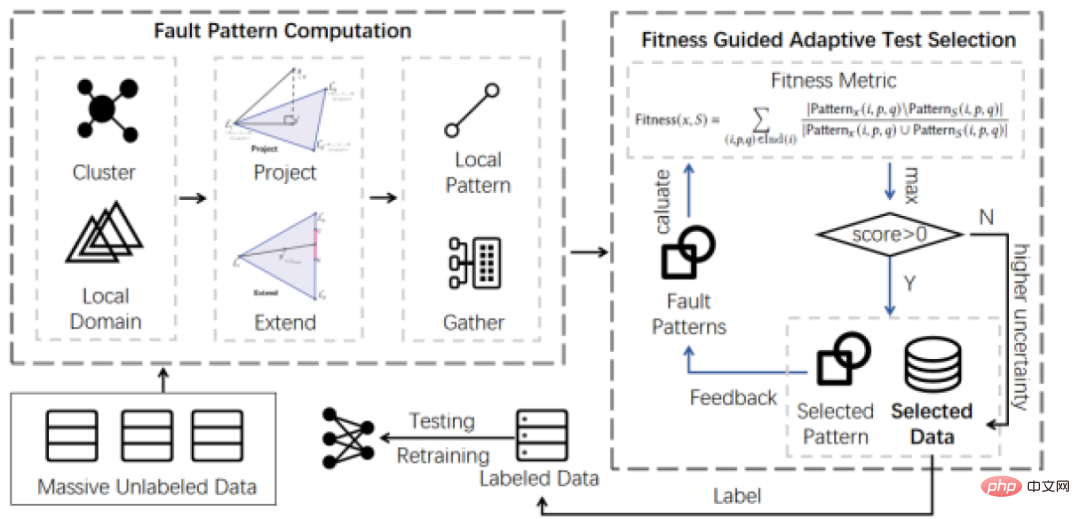

Pei et al. applied the idea of differential testing to find inconsistent outputs among autonomous driving steering models, and proposed neuron coverage, that is, the proportion of neurons in a neural network whose activation exceeds a preset threshold, as a measure of the effectiveness of test samples. Building on neuron coverage, researchers have proposed many further coverage criteria, such as neuron boundary coverage, strong neuron activation coverage and layer-level neuron coverage. Using heuristic search to find target test cases is another effective approach; its core difficulty lies in designing test evaluation metrics to guide the search. Autonomous driving image system testing commonly suffers from a lack of labeled data for special driving scenarios. To reduce the high labor cost of labeling test data for the deep neural networks in autonomous driving perception systems, our team proposed ATS, an adaptive test case selection method for deep neural networks inspired by adaptive random testing in software testing.
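The neuron coverage criterion reduces to a simple ratio, and the adaptive-random-testing idea behind test selection can be illustrated with a greedy max-min distance rule. The sketch below is a simplification under stated assumptions: activations are assumed to be already extracted and normalized to a common range, the input feature vectors are assumed given, and `adaptive_random_select` is a generic adaptive random selection heuristic, not the ATS algorithm itself.

```python
import math
from typing import List, Sequence


def neuron_coverage(activations: List[Sequence[float]], threshold: float) -> float:
    """Fraction of neurons activated above `threshold` by at least one test input.

    activations[i][j] is the (assumed normalized) activation of neuron j on input i.
    """
    n_neurons = len(activations[0])
    covered = sum(
        1 for j in range(n_neurons)
        if any(row[j] > threshold for row in activations)
    )
    return covered / n_neurons


def adaptive_random_select(features: List[Sequence[float]], k: int) -> List[int]:
    """Greedy max-min distance selection in the spirit of adaptive random testing.

    Returns indices of up to k inputs that are spread out in feature space, so
    that labelling effort goes to diverse cases rather than near-duplicates.
    """
    if not features or k <= 0:
        return []
    selected = [0]  # deterministic starting point for reproducibility
    while len(selected) < min(k, len(features)):
        best, best_dist = None, -1.0
        for i, f in enumerate(features):
            if i in selected:
                continue
            # Distance to the closest already-selected input.
            d = min(math.dist(f, features[s]) for s in selected)
            if d > best_dist:
                best, best_dist = i, d
        selected.append(best)
    return selected


acts = [[0.1, 0.8, 0.0], [0.3, 0.2, 0.0]]
print(neuron_coverage(acts, threshold=0.5))  # 1 of 3 neurons covered
feats = [(0.0, 0.0), (0.1, 0.0), (5.0, 5.0), (0.0, 5.0)]
print(adaptive_random_select(feats, k=3))  # [0, 2, 3]
```

The near-duplicate input at index 1 is skipped in favor of the two distant ones, which is the behavior that makes diversity-based selection attractive when every selected input must be labeled by hand.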

3 Autonomous Driving LiDAR System Test

Lidar is a crucial sensor for autonomous driving systems. It measures the propagation distance between the sensor's transmitter and the target object and can analyze information such as the amount of energy reflected from the target's surface and the amplitude, frequency and phase of the reflected wave spectrum. The point cloud data it collects accurately depicts the three-dimensional dimensions and reflection intensity of objects in the driving scene, compensating for the limitations of camera data in form and accuracy. Lidar plays an important role in tasks such as target detection, localization and mapping, and cannot be replaced by vision alone.
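For a pulsed time-of-flight lidar, the range follows from the round-trip time of the laser pulse, r = c·Δt / 2, and each return can be converted from the sensor's spherical coordinates (range, azimuth, elevation) to a Cartesian point. The sketch assumes a simple pulsed sensor with x forward, y left and z up, and it ignores the amplitude, frequency and phase information mentioned above.

```python
import math
from typing import Tuple

SPEED_OF_LIGHT = 299_792_458.0  # metres per second


def tof_range(round_trip_s: float) -> float:
    """Pulsed time-of-flight ranging: the pulse travels to the target and back."""
    return SPEED_OF_LIGHT * round_trip_s / 2.0


def to_cartesian(r: float, azimuth_rad: float, elevation_rad: float) -> Tuple[float, float, float]:
    """Convert one return from sensor-centred spherical coordinates to x, y, z in metres."""
    horizontal = r * math.cos(elevation_rad)
    return (horizontal * math.cos(azimuth_rad),
            horizontal * math.sin(azimuth_rad),
            r * math.sin(elevation_rad))


r = tof_range(200e-9)  # a 200 ns round trip corresponds to about 29.98 m
print(r, to_cartesian(r, math.radians(30), math.radians(-2)))
```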

As a typical complex intelligent software system, an autonomous driving system takes the surrounding environment information captured by lidar as input, makes judgments through the artificial intelligence models in its perception module, and finally completes various driving tasks through system planning and control. Although the high complexity of these AI models gives the system its perception capability, existing traditional testing techniques rely on manual collection and annotation of point cloud data, which is costly and inefficient. Moreover, point cloud data is unordered, lacks obvious color information, is easily disturbed by weather, and its signal attenuates easily, which makes the diversity of point cloud data particularly important during testing.

Testing of lidar-based autonomous driving systems is still at an early stage. Both real road tests and simulation tests suffer from high cost, low efficiency and no guarantee of test adequacy. Given the challenges facing autonomous driving systems, such as changeable test scenarios, large and complex software systems and huge testing costs, test data generation techniques based on domain knowledge are of great significance for assuring the quality of autonomous driving systems.

For lidar point cloud data generation, Sallab et al. modeled lidar point clouds with a cycle-consistent generative adversarial network and performed feature analysis on the simulated data to generate new point cloud data. Yue et al. proposed a point cloud data generation framework for autonomous driving scenes that precisely mutates point cloud data from game scenes based on annotated objects to obtain new data. Retraining the point cloud processing module of an autonomous driving system with this mutated data improved its accuracy.
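Annotation-driven point cloud mutation can be sketched as moving the points that fall inside an annotated bounding box and shifting the label by the same offset, so the mutated frame remains correctly labeled. This is a hypothetical simplification, not Yue et al.'s framework: boxes are axis-aligned (real annotations usually carry a yaw angle), and effects such as occlusion, ground contact and range-dependent point density are ignored.

```python
from typing import List, Tuple

Point = Tuple[float, float, float, float]             # x, y, z, intensity
Box = Tuple[float, float, float, float, float, float]  # xmin, ymin, zmin, xmax, ymax, zmax


def in_box(p: Point, box: Box) -> bool:
    """True if the point lies inside the axis-aligned box (inclusive)."""
    x, y, z, _ = p
    xmin, ymin, zmin, xmax, ymax, zmax = box
    return xmin <= x <= xmax and ymin <= y <= ymax and zmin <= z <= zmax


def translate_object(cloud: List[Point], box: Box, dx: float, dy: float) -> Tuple[List[Point], Box]:
    """Move one annotated object on the ground plane; return (new_cloud, new_box).

    Points outside the box are kept unchanged; the label moves with the object.
    """
    new_cloud = [
        (x + dx, y + dy, z, i) if in_box((x, y, z, i), box) else (x, y, z, i)
        for (x, y, z, i) in cloud
    ]
    xmin, ymin, zmin, xmax, ymax, zmax = box
    new_box = (xmin + dx, ymin + dy, zmin, xmax + dx, ymax + dy, zmax)
    return new_cloud, new_box


cloud = [(1.0, 1.0, 0.5, 0.9), (10.0, 0.0, 0.0, 0.1)]
mutated, label = translate_object(cloud, (0.0, 0.0, 0.0, 2.0, 2.0, 2.0), dx=5.0, dy=0.0)
print(mutated, label)
```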

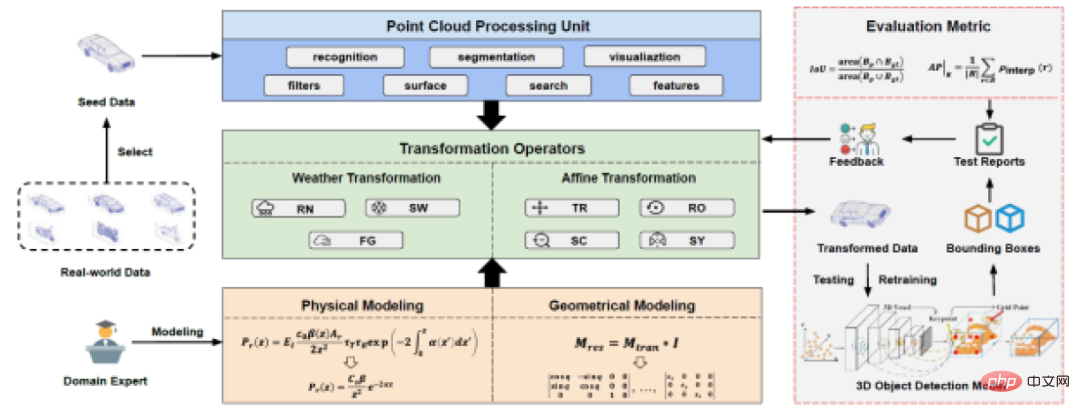

Our team designed and implemented LiRTest, an automated lidar testing tool used mainly for automated testing of target detection systems in autonomous vehicles; the generated data can also be used for retraining to improve system robustness. In LiRTest, domain experts first design physical and geometric models, from which transformation operators are constructed. Developers select point cloud seeds from real-world data, identify and process them with point cloud processing units, and apply a mutation algorithm based on the transformation operators to generate test data that evaluates the robustness of autonomous driving 3D target detection models. Finally, LiRTest produces a test report and feeds the results back into operator design, iteratively improving quality.
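The operator-based mutation loop can be illustrated with two toy operators: random point dropout as a crude stand-in for weather attenuation, and Gaussian range noise along each laser ray. The operator names, parameters and the `generate_tests` helper are hypothetical and are not LiRTest's actual operators or physical models; recording which operator produced each test is what makes per-operator feedback in a test report possible.

```python
import math
import random
from typing import Callable, Dict, List, Tuple

Point = Tuple[float, float, float]
Operator = Callable[[List[Point], random.Random], List[Point]]


def random_dropout(rate: float) -> Operator:
    """Drop each return with probability `rate` (crude stand-in for rain/fog attenuation)."""
    def op(cloud: List[Point], rng: random.Random) -> List[Point]:
        return [p for p in cloud if rng.random() >= rate]
    return op


def range_jitter(sigma: float) -> Operator:
    """Move each point along its ray by Gaussian noise with std `sigma` metres."""
    def op(cloud: List[Point], rng: random.Random) -> List[Point]:
        out = []
        for x, y, z in cloud:
            r = math.sqrt(x * x + y * y + z * z)
            if r == 0.0:
                out.append((x, y, z))
                continue
            scale = (r + rng.gauss(0.0, sigma)) / r
            out.append((x * scale, y * scale, z * scale))
        return out
    return op


# Hypothetical operator registry; parameter values are arbitrary.
OPERATORS: Dict[str, Operator] = {
    "rain_dropout": random_dropout(0.3),
    "range_noise": range_jitter(0.02),
}


def generate_tests(seed_cloud: List[Point], n_tests: int, seed: int = 0) -> List[Tuple[str, List[Point]]]:
    """Apply one randomly chosen operator per test and record its name for the report."""
    rng = random.Random(seed)
    tests = []
    for _ in range(n_tests):
        name = rng.choice(sorted(OPERATORS))
        tests.append((name, OPERATORS[name](seed_cloud, rng)))
    return tests


seed_cloud = [(float(i), 1.0, 0.0) for i in range(1, 101)]
for name, cloud in generate_tests(seed_cloud, 4, seed=42):
    print(name, len(cloud))
```

In a full loop, each mutated cloud would be run through the detector under test, and failure rates grouped by operator name would inform the next round of operator design.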

An autonomous driving system is a typical cyber-physical system: its operating state is determined not only by user input and the internal state of the software, but also by the physical environment. Although a small body of research addresses generating point cloud data affected by various environmental factors, the characteristics of point cloud data make it difficult for generated data to match the realism of road test data. Therefore, automatically generating point cloud data that reflects a variety of real environmental factors, without significantly increasing resource consumption, is a key problem to be solved.

In common autonomous driving software architectures, artificial intelligence models strongly influence driving decisions and system behavior, affecting functions such as object recognition, path planning and behavior prediction. The AI model most commonly used for point cloud processing is the target detection model, implemented with deep neural networks. Although this technology achieves high accuracy on specific tasks, its results lack interpretability, so users and developers cannot analyze and confirm its behavior, which makes developing testing techniques and evaluating test adequacy very difficult. These are challenges that future lidar model testers will need to face.

4 Autonomous Driving Fusion Perception System Test

Autonomous driving systems are usually equipped with multiple sensors to perceive environmental information, along with various software components and algorithms to complete autonomous driving tasks. Different sensors have different physical characteristics and suit different application scenarios. Fusion perception technology compensates for the poor environmental adaptability of any single sensor and, through the cooperation of multiple sensors, keeps the autonomous driving system operating normally under various environmental conditions.

Because they record information in different ways, different types of sensors are strongly complementary. Cameras are cheap to install, and the image data they collect has high resolution and rich visual information such as color and texture. However, cameras are sensitive to the environment and may be unreliable at night, in strong light and under other lighting changes. Lidar, by contrast, is largely unaffected by lighting changes and provides precise three-dimensional perception by day and night. However, lidar is expensive, and its point cloud data lacks color information, making targets without distinctive shapes hard to identify. How to exploit the strengths of each modality and mine deeper semantic information has become an important problem in fusion perception technology.

Researchers have proposed a variety of data fusion methods. Deep learning-based fusion of lidar and camera data has become a major research direction because of its high accuracy. Feng et al. grouped fusion methods into three types: early, middle and late fusion. Early fusion combines only raw or preprocessed data; middle fusion cross-fuses the features extracted by each branch; late fusion combines only the final outputs of each branch. Although deep learning-based fusion perception has shown great potential on existing benchmark datasets, such intelligent models may still exhibit incorrect and unexpected behavior in extreme cases in complex real-world environments, with potentially fatal consequences. To ensure the safety of autonomous driving systems, such fusion perception models need to be thoroughly tested.
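Of the three fusion stages, late fusion is the easiest to show in a few lines because it only combines each branch's final outputs. The sketch below matches camera and lidar detections by intersection over union (IoU) and averages the confidences of matched pairs. It assumes both branches report axis-aligned boxes in a common 2D frame (for example after projection into the image); the averaging rule and the 0.5 IoU threshold are illustrative choices, not a method from the cited work.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # x1, y1, x2, y2
Det = Tuple[Box, float]                  # box, confidence


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)

    def area(r: Box) -> float:
        return (r[2] - r[0]) * (r[3] - r[1])

    union = area(a) + area(b) - inter
    return inter / union if union > 0 else 0.0


def late_fusion(cam: List[Det], lidar: List[Det], iou_thr: float = 0.5) -> List[Det]:
    """Fuse the final detections of two branches.

    Each lidar detection is matched to the unused camera detection with the
    highest IoU (at least `iou_thr`); matched pairs keep the lidar box and
    average the confidences. Unmatched detections are kept as they are.
    """
    fused, used = [], set()
    for lbox, lscore in lidar:
        best_j, best_iou = None, iou_thr
        for j, (cbox, _) in enumerate(cam):
            overlap = iou(lbox, cbox)
            if j not in used and overlap >= best_iou:
                best_j, best_iou = j, overlap
        if best_j is None:
            fused.append((lbox, lscore))
        else:
            used.add(best_j)
            fused.append((lbox, (lscore + cam[best_j][1]) / 2))
    fused.extend(d for j, d in enumerate(cam) if j not in used)
    return fused


cam = [((0, 0, 10, 10), 0.6), ((50, 50, 60, 60), 0.7)]
lidar = [((1, 1, 11, 11), 0.9)]
print(late_fusion(cam, lidar))
```

Early and middle fusion would instead combine raw data or learned features inside the network, which is why they cannot be illustrated without a model.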

Fusion perception testing technology is still at an early stage. The test input domain is huge and data collection is expensive, so the main problem lies in automated test data generation, which has consequently received widespread attention. Wang et al. proposed a cross-modal data augmentation algorithm that inserts virtual objects into images and point clouds according to geometric consistency rules to generate test datasets. Zhang et al. proposed a multimodal data augmentation method that uses multimodal transformation flows to maintain the correct mapping between point clouds and image pixels, and on this basis further proposed a multimodal cut-and-paste augmentation method.
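Geometric consistency between modalities rests on the calibration that maps a lidar point to an image pixel. A minimal pinhole-camera sketch: the extrinsic rotation `R` and translation `t` move the point into the camera frame, and the intrinsics `fx`, `fy`, `cx`, `cy` project it onto the image plane. The parameter values in the example are made up and lens distortion is ignored; under this mapping, an inserted virtual object is consistent when its points project onto the pixels where it is drawn.

```python
from typing import List, Optional, Sequence, Tuple


def project_point(p: Sequence[float], R: List[List[float]], t: Sequence[float],
                  fx: float, fy: float, cx: float, cy: float,
                  width: int, height: int) -> Optional[Tuple[float, float]]:
    """Project a lidar point to pixel (u, v); None if behind the camera or off-image."""
    # Extrinsic step: lidar frame -> camera frame (camera z axis points forward).
    X = sum(R[0][k] * p[k] for k in range(3)) + t[0]
    Y = sum(R[1][k] * p[k] for k in range(3)) + t[1]
    Z = sum(R[2][k] * p[k] for k in range(3)) + t[2]
    if Z <= 0:
        return None
    # Intrinsic step: pinhole projection onto the image plane.
    u, v = fx * X / Z + cx, fy * Y / Z + cy
    if 0 <= u < width and 0 <= v < height:
        return (u, v)
    return None


# Identity extrinsics for illustration: lidar and camera frames coincide.
I3 = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
print(project_point((1.0, 0.0, 10.0), I3, (0.0, 0.0, 0.0), 500.0, 500.0, 320.0, 240.0, 640, 480))
```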

Considering how complex real-world environments affect sensors, our team designed a data amplification technique for multimodal fusion perception systems. In this method, domain experts define a set of mutation rules with realistic semantics for each data modality, and test data is generated automatically to simulate the various factors that interfere with sensors in real scenarios, helping software developers test and evaluate fusion perception systems. The mutation operators fall into three categories, signal noise operators, signal alignment operators and signal loss operators, which simulate different kinds of interference found in real scenes. Noise operators model the noise that environmental factors introduce into the data during sensor acquisition; for image data, for example, operators such as light spots and blur simulate the camera encountering strong light or shaking. Alignment operators simulate misalignment between modalities, covering both temporal and spatial misalignment. For temporal misalignment, one signal is randomly delayed to simulate transmission congestion or latency; for spatial misalignment, the calibration parameters of each sensor are adjusted slightly to simulate small shifts in sensor position caused by vehicle vibration and similar effects while driving. Signal loss operators simulate sensor failure: one signal is randomly discarded, and testers observe whether the fusion algorithm responds in time and continues to work normally.
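The three operator categories can be sketched as functions that take a sequence of multimodal frames and return a mutated copy. Everything here is hypothetical: the frame layout (a flat grayscale `image`, a `lidar` payload and an `extrinsic_t` calibration translation), the parameter values and the function names are illustrative assumptions, not the team's implementation.

```python
import copy
import random
from typing import Dict, List, Optional, Sequence

# Hypothetical frame layout used only for this sketch.
Frame = Dict[str, Optional[object]]


def noise_op(frames: List[Frame], rng: random.Random, sigma: float = 10.0) -> List[Frame]:
    """Signal noise: additive Gaussian noise on image pixels (flat list, 0-255)."""
    out = copy.deepcopy(frames)
    for f in out:
        f["image"] = [min(255, max(0, round(p + rng.gauss(0.0, sigma)))) for p in f["image"]]
    return out


def time_misalign_op(frames: List[Frame], rng: random.Random,
                     key: str = "lidar", max_delay: int = 3) -> List[Frame]:
    """Temporal misalignment: frame i receives the `key` signal from frame i - delay."""
    delay = rng.randint(1, max_delay)
    out = copy.deepcopy(frames)
    for i, f in enumerate(out):
        f[key] = copy.deepcopy(frames[max(0, i - delay)][key])
    return out


def space_misalign_op(frames: List[Frame], rng: random.Random, sigma: float = 0.01) -> List[Frame]:
    """Spatial misalignment: one small random offset (metres) on the calibration translation."""
    offset = [rng.gauss(0.0, sigma) for _ in range(3)]
    out = copy.deepcopy(frames)
    for f in out:
        f["extrinsic_t"] = [v + d for v, d in zip(f["extrinsic_t"], offset)]
    return out


def signal_loss_op(frames: List[Frame], rng: random.Random,
                   keys: Sequence[str] = ("image", "lidar")) -> List[Frame]:
    """Signal loss: one randomly chosen modality disappears from every frame."""
    lost = rng.choice(keys)
    out = copy.deepcopy(frames)
    for f in out:
        f[lost] = None
    return out


frames = [{"image": [100, 100], "lidar": [i], "extrinsic_t": [0.0, 0.0, 0.0]} for i in range(5)]
delayed = time_misalign_op(frames, random.Random(1))
print([f["lidar"] for f in delayed])
```

A test harness would feed each mutated sequence to the fusion system under test and compare its outputs with those on the unmutated frames; the signal loss case additionally checks whether the system degrades gracefully rather than failing outright.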

In short, multi-sensor fusion perception is an inevitable trend in the development of autonomous driving, and thorough testing is a necessary condition for ensuring that the system works normally in complex real environments. How to test adequately with limited resources remains a pressing issue.

Conclusion

Autonomous driving perception testing is becoming closely integrated with the autonomous driving software development process, and the various in-the-loop tests will gradually become a necessary part of autonomous driving quality assurance. In industrial applications, real road testing remains important, but its excessive cost, low efficiency and high safety risks leave it far from meeting the testing and verification needs of intelligent autonomous driving perception systems. Rapid progress in formal methods and virtual simulation testing across multiple research branches offers effective ways to improve testing, and researchers are exploring model testing metrics and techniques suited to intelligent driving to support virtual simulation testing. Our team is committed to researching methods for generating, evaluating and optimizing autonomous driving perception test data, with in-depth work on image-based, point cloud-based and fusion perception testing, to help ensure high-quality autonomous driving perception systems.


Hot AI Tools

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Undress AI Tool

Undress images for free

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1393

1393

52

52

1206

1206

24

24

How to solve the long tail problem in autonomous driving scenarios?

Jun 02, 2024 pm 02:44 PM

How to solve the long tail problem in autonomous driving scenarios?

Jun 02, 2024 pm 02:44 PM

Yesterday during the interview, I was asked whether I had done any long-tail related questions, so I thought I would give a brief summary. The long-tail problem of autonomous driving refers to edge cases in autonomous vehicles, that is, possible scenarios with a low probability of occurrence. The perceived long-tail problem is one of the main reasons currently limiting the operational design domain of single-vehicle intelligent autonomous vehicles. The underlying architecture and most technical issues of autonomous driving have been solved, and the remaining 5% of long-tail problems have gradually become the key to restricting the development of autonomous driving. These problems include a variety of fragmented scenarios, extreme situations, and unpredictable human behavior. The "long tail" of edge scenarios in autonomous driving refers to edge cases in autonomous vehicles (AVs). Edge cases are possible scenarios with a low probability of occurrence. these rare events

What do you think of furmark? - How is furmark considered qualified?

Mar 19, 2024 am 09:25 AM

What do you think of furmark? - How is furmark considered qualified?

Mar 19, 2024 am 09:25 AM

What do you think of furmark? 1. Set the "Run Mode" and "Display Mode" in the main interface, and also adjust the "Test Mode" and click the "Start" button. 2. After waiting for a while, you will see the test results, including various parameters of the graphics card. How is furmark qualified? 1. Use a furmark baking machine and check the results for about half an hour. It basically hovers around 85 degrees, with a peak value of 87 degrees and room temperature of 19 degrees. Large chassis, 5 chassis fan ports, two on the front, two on the top, and one on the rear, but only one fan is installed. All accessories are not overclocked. 2. Under normal circumstances, the normal temperature of the graphics card should be between "30-85℃". 3. Even in summer when the ambient temperature is too high, the normal temperature is "50-85℃

The Stable Diffusion 3 paper is finally released, and the architectural details are revealed. Will it help to reproduce Sora?

Mar 06, 2024 pm 05:34 PM

The Stable Diffusion 3 paper is finally released, and the architectural details are revealed. Will it help to reproduce Sora?

Mar 06, 2024 pm 05:34 PM

StableDiffusion3’s paper is finally here! This model was released two weeks ago and uses the same DiT (DiffusionTransformer) architecture as Sora. It caused quite a stir once it was released. Compared with the previous version, the quality of the images generated by StableDiffusion3 has been significantly improved. It now supports multi-theme prompts, and the text writing effect has also been improved, and garbled characters no longer appear. StabilityAI pointed out that StableDiffusion3 is a series of models with parameter sizes ranging from 800M to 8B. This parameter range means that the model can be run directly on many portable devices, significantly reducing the use of AI

Let's talk about end-to-end and next-generation autonomous driving systems, as well as some misunderstandings about end-to-end autonomous driving?

Apr 15, 2024 pm 04:13 PM

Let's talk about end-to-end and next-generation autonomous driving systems, as well as some misunderstandings about end-to-end autonomous driving?

Apr 15, 2024 pm 04:13 PM

In the past month, due to some well-known reasons, I have had very intensive exchanges with various teachers and classmates in the industry. An inevitable topic in the exchange is naturally end-to-end and the popular Tesla FSDV12. I would like to take this opportunity to sort out some of my thoughts and opinions at this moment for your reference and discussion. How to define an end-to-end autonomous driving system, and what problems should be expected to be solved end-to-end? According to the most traditional definition, an end-to-end system refers to a system that inputs raw information from sensors and directly outputs variables of concern to the task. For example, in image recognition, CNN can be called end-to-end compared to the traditional feature extractor + classifier method. In autonomous driving tasks, input data from various sensors (camera/LiDAR

FisheyeDetNet: the first target detection algorithm based on fisheye camera

Apr 26, 2024 am 11:37 AM

FisheyeDetNet: the first target detection algorithm based on fisheye camera

Apr 26, 2024 am 11:37 AM

Target detection is a relatively mature problem in autonomous driving systems, among which pedestrian detection is one of the earliest algorithms to be deployed. Very comprehensive research has been carried out in most papers. However, distance perception using fisheye cameras for surround view is relatively less studied. Due to large radial distortion, standard bounding box representation is difficult to implement in fisheye cameras. To alleviate the above description, we explore extended bounding box, ellipse, and general polygon designs into polar/angular representations and define an instance segmentation mIOU metric to analyze these representations. The proposed model fisheyeDetNet with polygonal shape outperforms other models and simultaneously achieves 49.5% mAP on the Valeo fisheye camera dataset for autonomous driving

nuScenes' latest SOTA | SparseAD: Sparse query helps efficient end-to-end autonomous driving!

Apr 17, 2024 pm 06:22 PM

nuScenes' latest SOTA | SparseAD: Sparse query helps efficient end-to-end autonomous driving!

Apr 17, 2024 pm 06:22 PM

Written in front & starting point The end-to-end paradigm uses a unified framework to achieve multi-tasking in autonomous driving systems. Despite the simplicity and clarity of this paradigm, the performance of end-to-end autonomous driving methods on subtasks still lags far behind single-task methods. At the same time, the dense bird's-eye view (BEV) features widely used in previous end-to-end methods make it difficult to scale to more modalities or tasks. A sparse search-centric end-to-end autonomous driving paradigm (SparseAD) is proposed here, in which sparse search fully represents the entire driving scenario, including space, time, and tasks, without any dense BEV representation. Specifically, a unified sparse architecture is designed for task awareness including detection, tracking, and online mapping. In addition, heavy

DualBEV: significantly surpassing BEVFormer and BEVDet4D, open the book!

Mar 21, 2024 pm 05:21 PM

DualBEV: significantly surpassing BEVFormer and BEVDet4D, open the book!

Mar 21, 2024 pm 05:21 PM

This paper explores the problem of accurately detecting objects from different viewing angles (such as perspective and bird's-eye view) in autonomous driving, especially how to effectively transform features from perspective (PV) to bird's-eye view (BEV) space. Transformation is implemented via the Visual Transformation (VT) module. Existing methods are broadly divided into two strategies: 2D to 3D and 3D to 2D conversion. 2D-to-3D methods improve dense 2D features by predicting depth probabilities, but the inherent uncertainty of depth predictions, especially in distant regions, may introduce inaccuracies. While 3D to 2D methods usually use 3D queries to sample 2D features and learn the attention weights of the correspondence between 3D and 2D features through a Transformer, which increases the computational and deployment time.

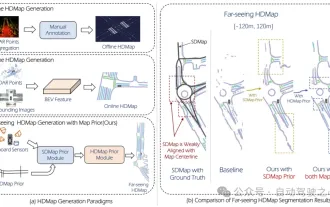

Mass production killer! P-Mapnet: Using the low-precision map SDMap prior, the mapping performance is violently improved by nearly 20 points!

Mar 28, 2024 pm 02:36 PM

Mass production killer! P-Mapnet: Using the low-precision map SDMap prior, the mapping performance is violently improved by nearly 20 points!

Mar 28, 2024 pm 02:36 PM

As written above, one of the algorithms used by current autonomous driving systems to get rid of dependence on high-precision maps is to take advantage of the fact that the perception performance in long-distance ranges is still poor. To this end, we propose P-MapNet, where the “P” focuses on fusing map priors to improve model performance. Specifically, we exploit the prior information in SDMap and HDMap: on the one hand, we extract weakly aligned SDMap data from OpenStreetMap and encode it into independent terms to support the input. There is a problem of weak alignment between the strictly modified input and the actual HD+Map. Our structure based on the Cross-attention mechanism can adaptively focus on the SDMap skeleton and bring significant performance improvements;