The right way to do building-block deep learning! National University of Singapore releases DeRy, a new transfer learning paradigm that turns knowledge transfer into movable-type printing

During the Qingli era of Emperor Renzong of the Northern Song Dynasty, some 980 years ago, a knowledge revolution was quietly taking place in China.

What set it off was not the pronouncements of sages at court, but clay type pieces, fired one by one and each inscribed with a neat character.

This revolution is "movable type printing".

The ingenuity of movable type printing lies in its "building-block" idea: the craftsman first makes a mirror-image type piece for each individual character, then selects and arranges the pieces according to the manuscript and prints them with ink. The same type pieces can be reused as many times as needed.

Compared with the cumbersome "one block per edition" process of woodblock printing, this modular, assemble-on-demand, reuse-many-times way of working raised printing efficiency dramatically and laid a foundation for the development and transmission of human civilization over the following thousand years.

Back in deep learning: with large pre-trained models now widely available, transferring the capabilities of a collection of large models to specific downstream tasks has become a key problem.

Previous knowledge transfer and reuse methods resemble woodblock printing: we usually need to train a complete new model for each task's requirements. These methods come with large training costs and are hard to scale to a large number of tasks.

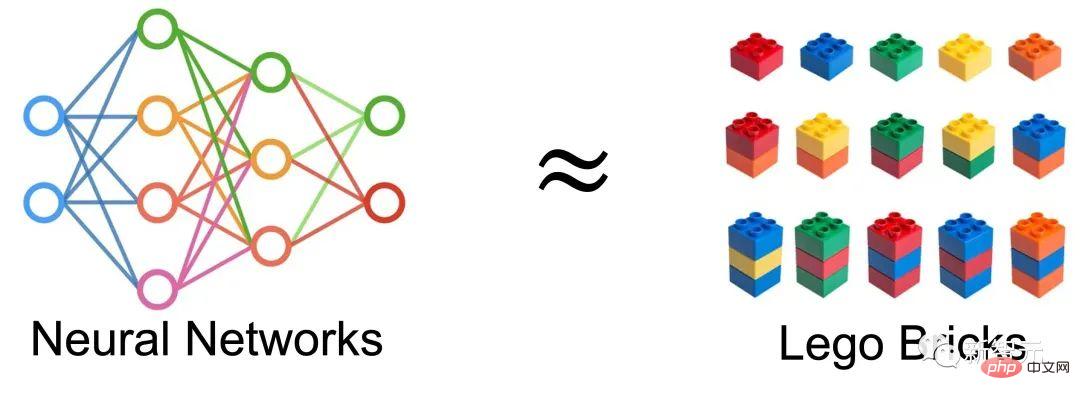

This raises a natural question: can we treat neural networks as assemblies of building blocks, obtain new networks by reassembling existing ones, and use them for transfer learning?

Paper link: https://arxiv.org/abs/2210.17409

Code link: https://github.com/Adamdad/DeRy

Project homepage: https://adamdad.github.io/dery/

OpenReview: https://openreview.net/forum?id=gtCPWaY5bNh

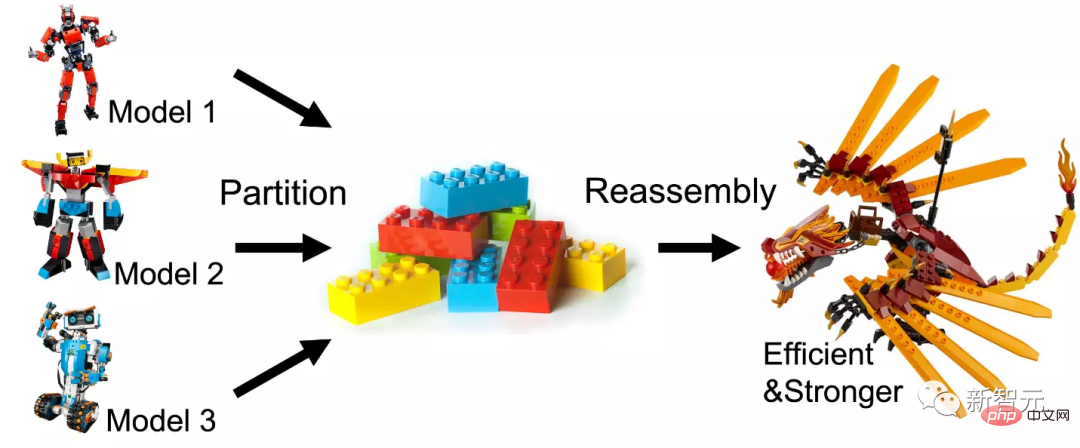

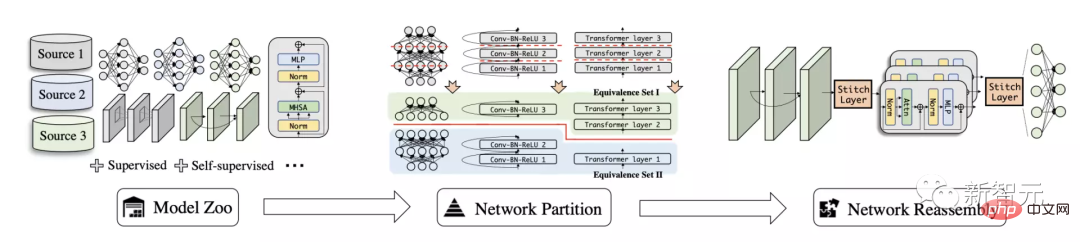

The authors first disassemble existing pre-trained models into sub-networks based on functional similarity, then reassemble these sub-networks to build efficient, easy-to-use models for specific tasks.

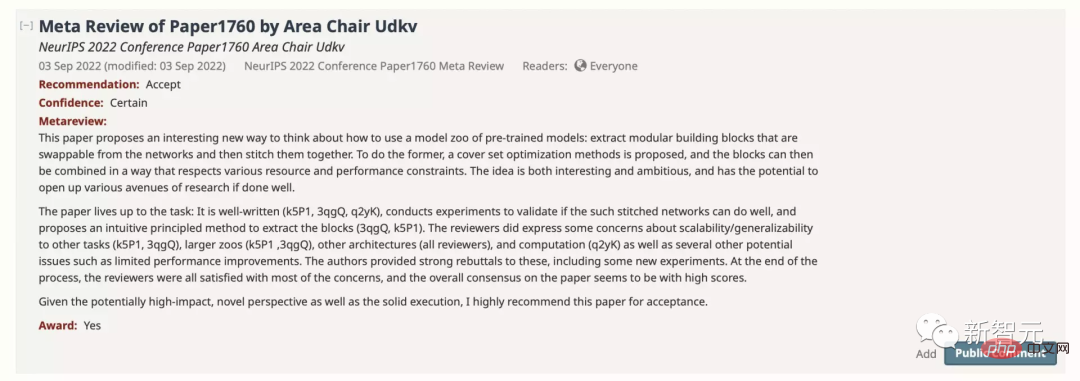

The paper was accepted at NeurIPS with review scores of 8, 8 and 6, and was recommended for a Paper Award nomination.

In this paper, the authors explore a new knowledge transfer task called Deep Model Reassembly (DeRy) for general-purpose model reuse.

Given a set of pre-trained models with heterogeneous architectures trained on different data, deep model reassembly first splits each model into independent blocks and then selectively reassembles these sub-model pieces under hardware and performance constraints.

This is like treating deep neural networks as building blocks: existing large assemblies are taken apart into small bricks, which are then put together as required. The assembled model should not only perform better; the assembly process should also change the structure and parameters of the original modules as little as possible, to keep it efficient.

Breaking up and reorganizing the deep model

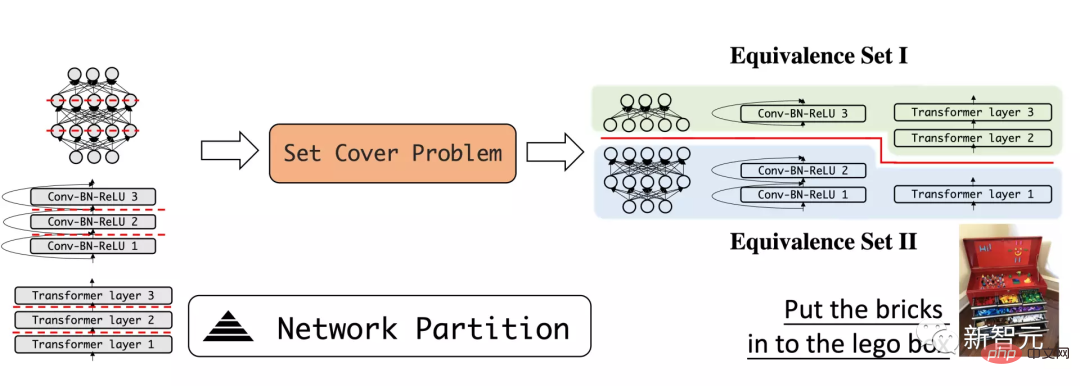

The method has two steps. First, DeRy solves a Set Cover Problem to split all pre-trained networks by functional level; second, DeRy formulates model assembly as a 0-1 integer programming problem so that the assembled model performs best on the specific task.

## Deep Model Reassembly

First, the authors define the deep model reassembly problem. We are given a collection of trained deep models, called the model library.

Each model is a chain of layers, i.e. a sequence of layer operations applied one after another. Different networks can have completely different structures and operations, as long as each model is connected layer by layer.

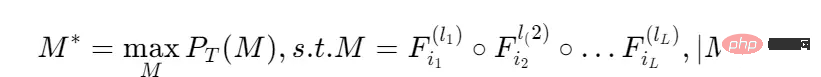

Given a downstream task, the goal is to find the layer-mixture model that performs best on it, subject to a limit on the model's computational cost:

Here, the objective is the model's performance on the task, and each layer term denotes a layer operation taken from one of the models in the library.

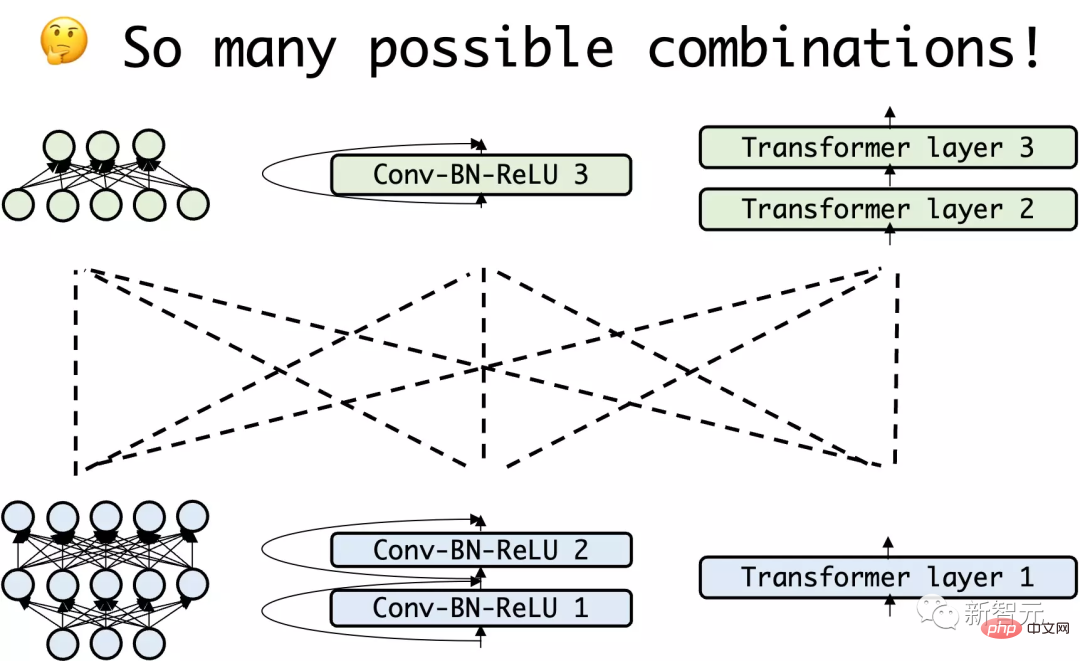

Solving this problem directly requires searching over all permutations of all layers in the library to maximize the gain. In essence, it is an extremely complex combinatorial optimization problem.

To cut the search cost, the paper first splits the models in the library along the depth dimension into shallower, smaller sub-networks, and then searches for how to stitch them together at the sub-network level.
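To get a feel for why splitting helps, the rough count below compares the two search spaces. It is a back-of-the-envelope sketch. The 30-model library size comes from the experiments described later in the article. The following are illustrative assumptions, not figures from the paper: 50 layers per model, a depth-50 target network, 4 equivalence sets, and each set holding exactly one block from every model.

```python
import math


def layer_level_log10(n_models: int, layers_per_model: int, depth: int) -> float:
    """log10 of the number of length-`depth` layer sequences drawn from the whole library.

    Counts sequences with repetition: a rough scale estimate, not an exact
    count of valid networks.
    """
    n_layers = n_models * layers_per_model
    return depth * math.log10(n_layers)


def block_level_count(n_models: int, n_sets: int) -> int:
    """Number of networks when position k must take one block from equivalence set k.

    Assumes (illustratively) that every set holds exactly one block per model.
    """
    return n_models ** n_sets


print(f"layer level: ~10^{layer_level_log10(30, 50, 50):.0f} candidates")
print(f"block level: {block_level_count(30, 4):,} candidates")
```

Even under these crude assumptions, the block-level space is small enough to enumerate, whereas the layer-level space is not.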

Split the network according to functional levels

DeRy's first step is to take deep learning models apart like building blocks. The authors split each deep model along its depth into several shallower, smaller models.

The goal is for the resulting sub-models to differ in function as much as possible. This is like taking building blocks apart and sorting them into toy boxes by category: similar bricks go into the same box, and different bricks are kept apart.

For example, a model might be split into lower and higher layers, with the lower layers mainly recognizing local patterns such as curves or shapes, and the higher layers judging the overall semantics of the sample.

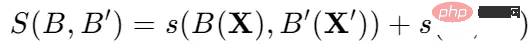

Using a general-purpose feature similarity index, the functional similarity between any two models can be measured quantitatively.

The key idea is that for similar inputs, neural networks with the same function can produce similar outputs.

So, given two networks and their respective input tensors X and X', their functional similarity is defined as:

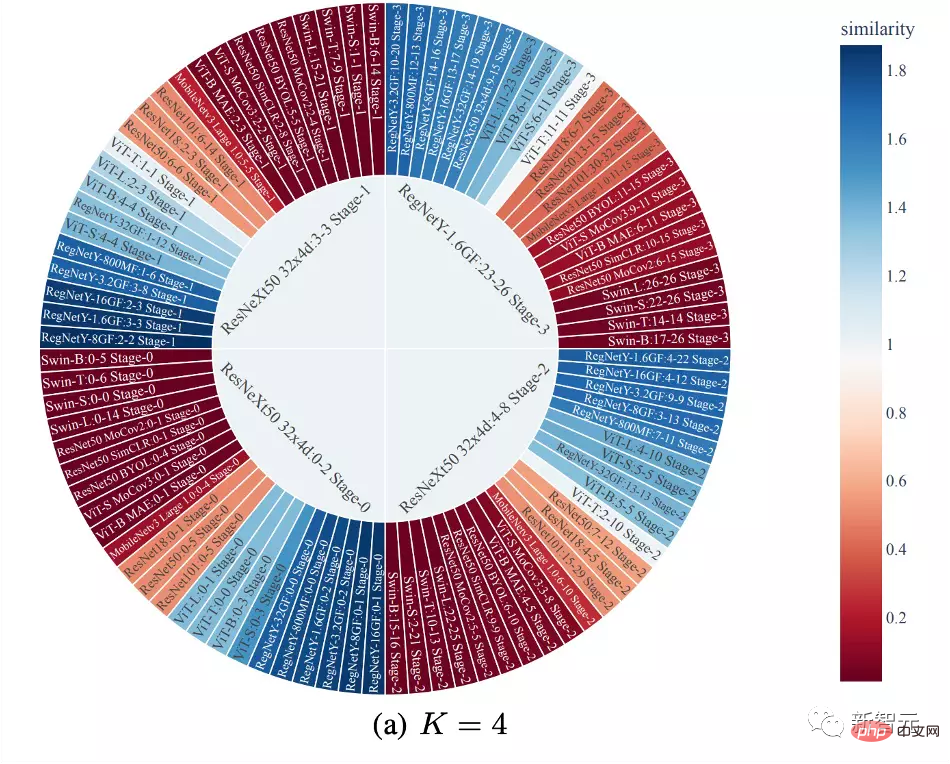
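The text does not spell out which similarity index is used. As an illustration, the sketch below assumes linear Centered Kernel Alignment (CKA), a common index for comparing the representations of two networks on the same inputs. The function name and the random "block outputs" are hypothetical. The point is the behavior: two blocks that compute the same function up to a rotation and rescaling score 1, while unrelated blocks score much lower.

```python
import numpy as np


def linear_cka(x: np.ndarray, y: np.ndarray) -> float:
    """Linear CKA between two feature matrices of shape (n_samples, n_features)."""
    # Center each feature over the samples
    x = x - x.mean(axis=0, keepdims=True)
    y = y - y.mean(axis=0, keepdims=True)
    # Cross-similarity term, normalized by each matrix's self-similarity
    cross = np.linalg.norm(y.T @ x, ord="fro") ** 2
    norm_x = np.linalg.norm(x.T @ x, ord="fro")
    norm_y = np.linalg.norm(y.T @ y, ord="fro")
    return float(cross / (norm_x * norm_y))


rng = np.random.default_rng(0)
feats_a = rng.normal(size=(100, 16))            # outputs of hypothetical block A on 100 inputs
q, _ = np.linalg.qr(rng.normal(size=(16, 16)))  # random orthogonal matrix
feats_b = 3.0 * feats_a @ q                     # same function up to rotation and scale
feats_c = rng.normal(size=(100, 16))            # an unrelated block

print(linear_cka(feats_a, feats_b))  # close to 1
print(linear_cka(feats_a, feats_c))  # much smaller than 1
```

CKA is invariant to orthogonal transformations and isotropic scaling of the features. That makes it a reasonable stand-in for "same function" across networks whose internal coordinates differ.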

Functional similarity then allows the model library to be partitioned into functional equivalence sets.

Sub-networks within each equivalence set have high functional similarity, and the way each model is divided keeps the model library separable.

A core benefit of this disassembly is that, because of their functional similarity, the sub-networks in each equivalence set can be regarded as approximately interchangeable: a network block can be replaced by another sub-network from the same equivalence set with little effect on the network's predictions.

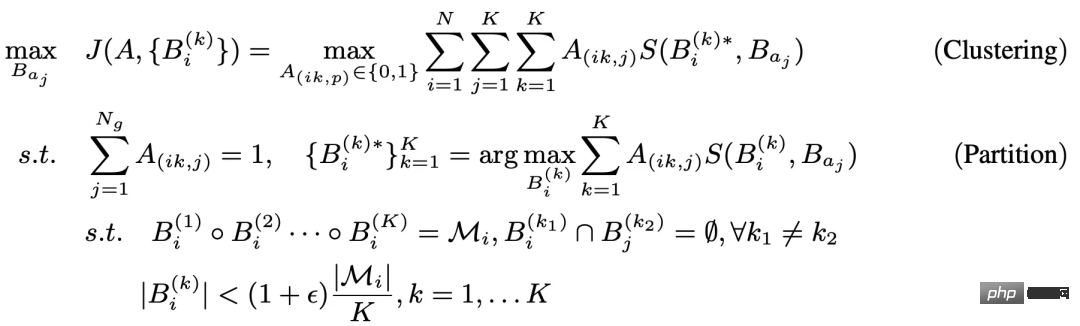

The above splitting problem can be formalized as a three-layer constrained optimization problem:

The inner-level optimization closely resembles the general set cover problem or a graph partitioning problem, so the authors use the heuristic Kernighan-Lin (KL) algorithm to solve it.

The general idea: starting from two randomly initialized sub-models, one layer is exchanged between them at a time. If the exchange increases the objective, it is kept; otherwise, it is discarded.
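The exchange rule just described can be illustrated with a deliberately small toy. This is a simplified greedy variant in the spirit of Kernighan-Lin. It is not the full KL algorithm, which evaluates a sequence of tentative swaps before committing, and it does not use the paper's objective. Instead, each layer is summarized by a single hypothetical scalar descriptor, and each block is scored by how close its layers are to an assumed center value. Because the models are chains, "exchanging a layer" between adjacent blocks means moving a cut point by one.

```python
from typing import List, Sequence, Tuple


def split_score(values: Sequence[float], centers: Sequence[float], cuts: Sequence[int]) -> float:
    """Higher is better: negative squared distance of each layer to its block's center."""
    total = 0.0
    for k, center in enumerate(centers):
        for v in values[cuts[k]:cuts[k + 1]]:
            total -= (v - center) ** 2
    return total


def greedy_swap_split(values: Sequence[float], centers: Sequence[float],
                      cuts: Sequence[int]) -> Tuple[List[int], float]:
    """Move one boundary layer at a time into the neighboring block; keep the move only if the score improves."""
    cuts = list(cuts)
    best = split_score(values, centers, cuts)
    improved = True
    while improved:
        improved = False
        for b in range(1, len(cuts) - 1):      # interior boundaries only
            for step in (-1, 1):
                new_cut = cuts[b] + step
                if not cuts[b - 1] < new_cut < cuts[b + 1]:
                    continue                    # every block keeps at least one layer
                trial = cuts[:b] + [new_cut] + cuts[b + 1:]
                score = split_score(values, centers, trial)
                if score > best:                # accept the exchange
                    cuts, best, improved = trial, score, True
    return cuts, best


# Hypothetical 6-layer network: 3 "low-level" layers followed by 3 "high-level" layers
layer_values = [0.1, 0.2, 0.15, 0.9, 1.0, 0.95]
cuts, score = greedy_swap_split(layer_values, centers=[0.0, 1.0], cuts=[0, 1, 6])
print(cuts)  # [0, 3, 6]
```

Starting from a poor split (one layer versus five), the accepted exchanges move the boundary until the low-level and high-level layers sit in separate blocks.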

The outer loop uses a K-Means clustering algorithm.

For each network division, every sub-network is assigned to the functional set whose center it is most similar to. Since the inner and outer loops are both iterative and come with a convergence guarantee, solving the problem above yields an optimized split of the sub-networks by functional level.
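Below is a minimal sketch of the outer clustering loop. It assumes a precomputed pairwise similarity matrix between sub-networks, for example from a feature-similarity index such as CKA. Sub-networks are functions rather than points in a vector space, so they cannot simply be averaged. This sketch therefore uses a k-medoids-style update in which each set's center is its most representative member. That update rule, the function name, and the similarity values are our assumptions, not the paper's exact procedure.

```python
import numpy as np


def cluster_subnetworks(sim: np.ndarray, medoids, n_iter: int = 20):
    """Assign sub-networks to functional sets given a pairwise similarity matrix.

    sim[i, j] is the functional similarity between sub-networks i and j.
    The diagonal is assumed to be 1 and strictly larger than off-diagonal
    entries, so every center always keeps at least itself as a member.
    """
    medoids = [int(m) for m in medoids]
    for _ in range(n_iter):
        # Assignment step: each sub-network joins the most similar center
        labels = np.argmax(sim[:, medoids], axis=1)
        # Update step: new center = member most similar to the rest of its set
        new_medoids = []
        for k in range(len(medoids)):
            members = np.flatnonzero(labels == k)
            within = sim[np.ix_(members, members)].sum(axis=1)
            new_medoids.append(int(members[np.argmax(within)]))
        if new_medoids == medoids:
            break
        medoids = new_medoids
    labels = np.argmax(sim[:, medoids], axis=1)
    return labels, medoids


# Hypothetical similarities: 0 and 1 are shallow blocks, 2 and 3 are deep blocks
sim = np.array([
    [1.00, 0.90, 0.20, 0.10],
    [0.90, 1.00, 0.15, 0.20],
    [0.20, 0.15, 1.00, 0.85],
    [0.10, 0.20, 0.85, 1.00],
])
labels, medoids = cluster_subnetworks(sim, medoids=[1, 3])
print(labels.tolist(), medoids)
```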

Network assembly based on integer optimization

Network splitting divides each network into sub-networks, each belonging to one equivalence set. These sets then serve as the search space for finding the best network stitching for a downstream task.

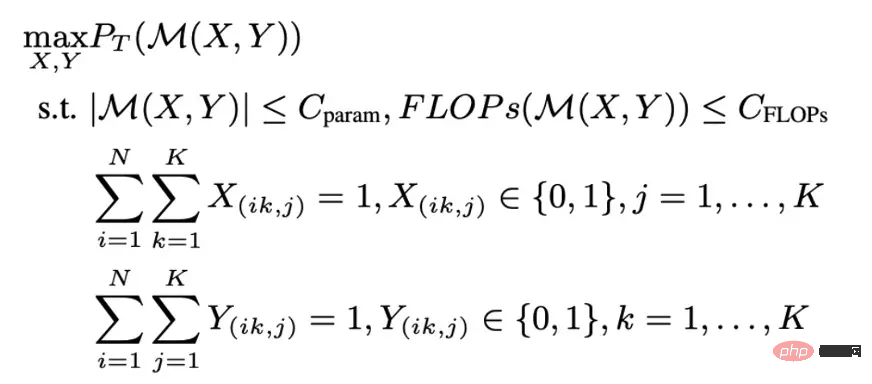

Because the sub-models are so diverse, network assembly is a combinatorial optimization problem with a large search space. The search is constrained as follows: each assembled network takes one block from each functional set and places it according to its position in the original network, and the resulting network must satisfy the computational budget. This is formulated as a 0-1 integer optimization problem.
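To make the 0-1 formulation concrete, introduce a binary variable for every (set, block) pair. The constraints require exactly one selected block per set and cap the total cost. On a toy library this small, exhaustively enumerating every feasible assignment finds the same optimum an integer-programming solver would; a real library would call for an ILP solver. The block names, GFLOP costs, and per-block scores are hypothetical. The additive score is also a simplifying assumption: the paper scores each assembled network as a whole with a training-free proxy.

```python
from itertools import product
from typing import Sequence, Tuple

# (name, cost in GFLOPs, proxy score): hypothetical fields for illustration
Block = Tuple[str, float, float]


def assemble(equiv_sets: Sequence[Sequence[Block]], budget: float):
    """Pick exactly one block per equivalence set (x_kj in {0,1}, sum_j x_kj = 1),
    keep the depth order of the sets, respect the budget, maximize the total score."""
    best_score, best_pick = float("-inf"), None
    for pick in product(*equiv_sets):             # every one-block-per-set assignment
        cost = sum(block[1] for block in pick)
        if cost > budget:                         # computational constraint
            continue
        score = sum(block[2] for block in pick)   # additive objective (toy assumption)
        if score > best_score:
            best_score, best_pick = score, pick
    return best_pick, best_score


# Equivalence sets ordered by depth; each holds one block from models A and B
sets = [
    [("A.stage1", 1.0, 0.5), ("B.stage1", 2.0, 0.6)],
    [("A.stage2", 1.5, 0.7), ("B.stage2", 3.0, 0.9)],
    [("A.stage3", 2.0, 0.8), ("B.stage3", 4.0, 1.2)],
]
pick, score = assemble(sets, budget=7.0)
print([name for name, _, _ in pick])  # ['A.stage1', 'A.stage2', 'B.stage3']
```

Under this budget, the best assembly mixes blocks from both models. Neither the all-A network (lower score) nor the all-B network (over budget) wins, which is the kind of cross-model reuse DeRy is after.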

To avoid the training cost of evaluating every candidate combination, the authors borrow a training-free proxy from neural architecture search (NAS) called NASWOT. With it, a network's true performance can be approximated using only the network's inference on a given dataset.
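NASWOT (Mellor et al., "Neural Architecture Search without Training") scores an untrained network by how differently it activates on the inputs of a mini-batch. Each input gets a binary code recording which ReLUs are active. The kernel entry for two inputs is the number of units minus the Hamming distance between their codes, and the score is the log absolute determinant of that kernel. The sketch below follows that definition on a hypothetical random ReLU MLP. How DeRy applies it to reassembled networks (which data, which layers) is not shown here.

```python
import numpy as np


def relu_codes(x: np.ndarray, weights) -> np.ndarray:
    """Binary activation pattern (True = ReLU active) of every unit for every input."""
    codes, h = [], x
    for w in weights:
        pre = h @ w
        codes.append(pre > 0)
        h = np.maximum(pre, 0.0)
    return np.concatenate(codes, axis=1)


def naswot_score(codes: np.ndarray) -> float:
    """log|det K_H| with K_H[i, j] = N_A - hamming(c_i, c_j)."""
    c = codes.astype(float)
    n_units = c.shape[1]
    # Hamming distance: positions where one code is 1 and the other is 0
    hamming = c @ (1.0 - c).T + (1.0 - c) @ c.T
    kernel = n_units - hamming
    _, logabsdet = np.linalg.slogdet(kernel)
    return float(logabsdet)


rng = np.random.default_rng(0)
batch = rng.normal(size=(8, 16))  # a mini-batch of 8 inputs
weights = [rng.normal(size=(16, 32)), rng.normal(size=(32, 32))]  # hypothetical untrained MLP
print(naswot_score(relu_codes(batch, weights)))
```

Inputs that produce very similar activation patterns make the kernel nearly singular and drive the score down. The intuition is that a network which separates inputs well is more likely to perform well once trained.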

Through the above split-recombine process, different pre-trained models can be spliced and fused to obtain a new and stronger model.

Experimental results

Model reassembly works for transfer learning

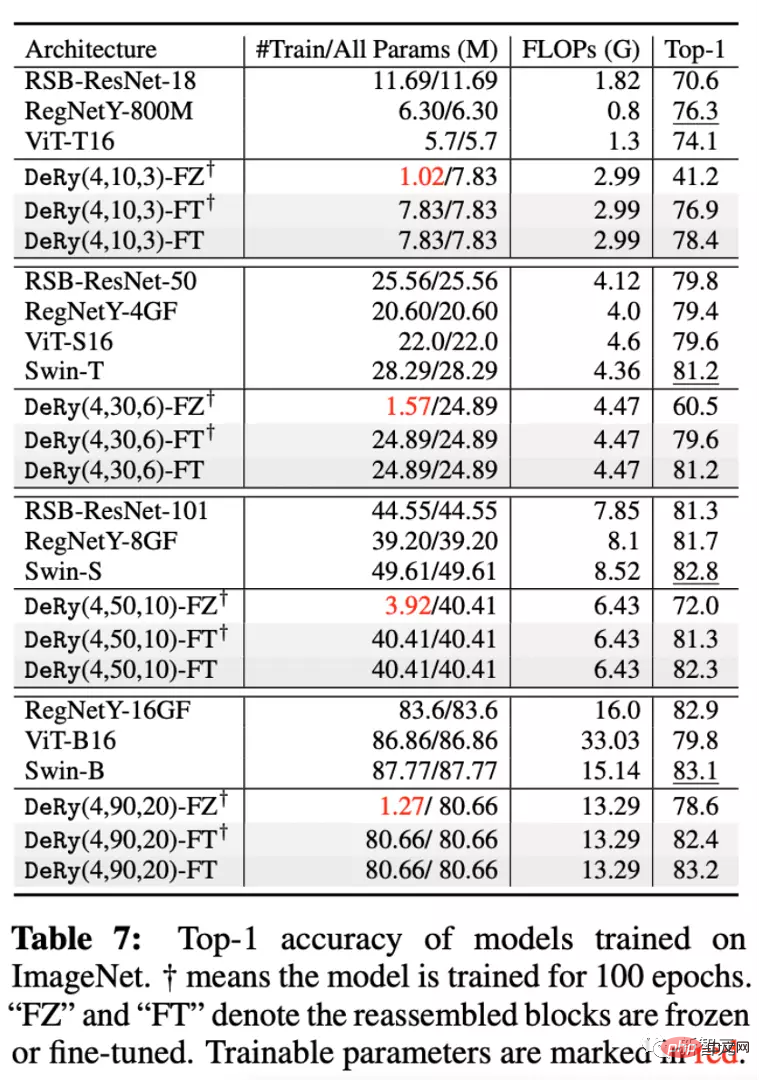

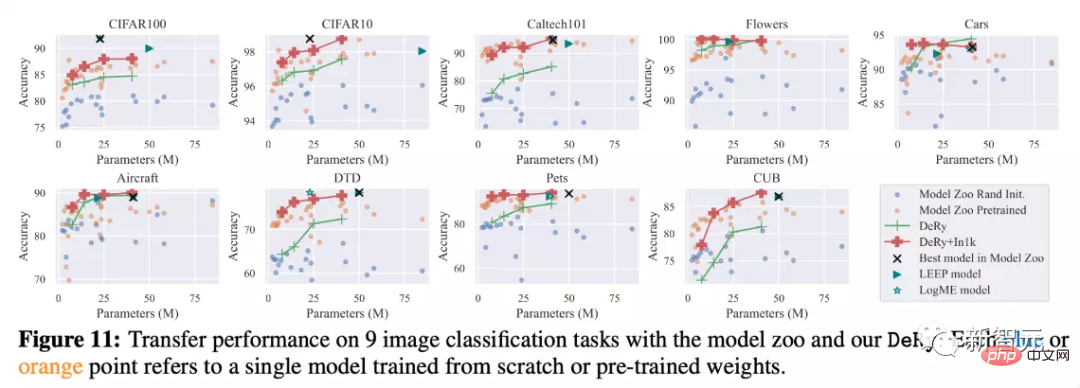

The authors disassembled and reassembled a model library containing 30 different pre-trained networks, and evaluated performance on ImageNet and 9 other downstream classification tasks.

Two training schemes were used in the experiments: Full-Tuning, in which all parameters of the stitched model are trained, and Freeze-Tuning, in which only the connection layers between stitched blocks are trained.

In addition, models at five different scales were selected and compared, denoted DeRy(·, ·, ·).

As the figure above shows, on ImageNet the DeRy models of different scales match or outperform models of similar size in the model library.

Even when only the parameters of the connection layers are trained, the models still achieve strong performance gains. For example, DeRy(4,90,20) reaches 78.6% Top-1 accuracy with only 1.27M trained parameters.

The nine transfer learning experiments also confirm DeRy's effectiveness. Without additional pre-training, DeRy models outperform the other models across a range of model sizes; continuing to pre-train the reassembled models improves performance substantially, reaching the red curve.

Compared with other methods that select transferable models from a model library, such as LEEP or LogME, DeRy can exceed the performance ceiling of the library itself, even outperforming the best model in the original library.

Exploring the properties of model reassembly

The authors were also curious about the properties of the proposed model reassembly, such as "By what pattern are models split?" and "By what rules are models reassembled?", and provide experiments to analyze them.

Functional similarity, reassembly location and reassembly performance

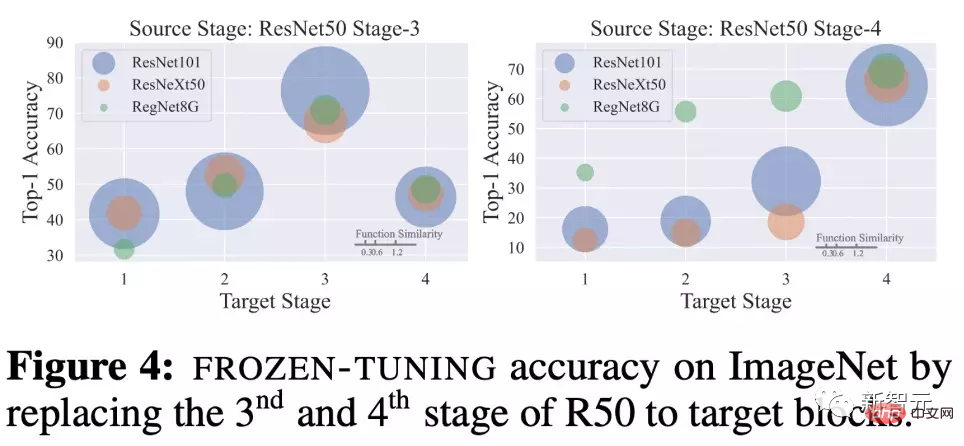

The authors examine how performance changes when the same network block is replaced by blocks with different functional similarity, comparing results after 20 epochs of Freeze-Tuning.

For a ResNet50 trained on ImageNet, the stage-3 and stage-4 blocks are replaced with different blocks from ResNet101, ResNeXt50 and RegNetY8G.

The replacement position has a large impact on performance.

For example, replacing the third stage with the third stage of another network yields particularly strong performance. Functional similarity is also positively correlated with reassembly performance.

Blocks at the same depth are more similar and produce stronger models after training. This points to a positive dependence linking similarity, reassembly position, and reassembly performance.

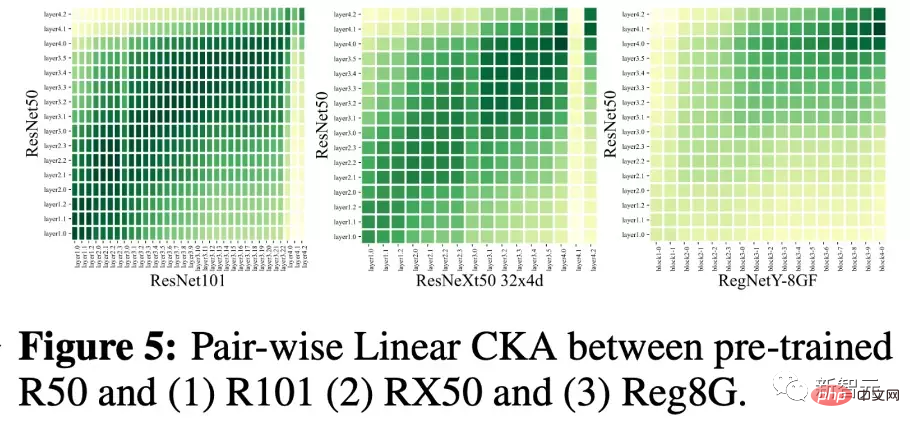

Observation of splitting results

In the figure below, the authors plot the result of the first splitting step. The color represents the similarity between each network block and the block at the center of the equivalence set it belongs to.

The proposed division tends to group sub-networks by depth. Meanwhile, the functional similarity between CNNs and Transformers is low, whereas the similarity between CNNs of different architectures is usually higher.

## Using NASWOT as a performance indicator

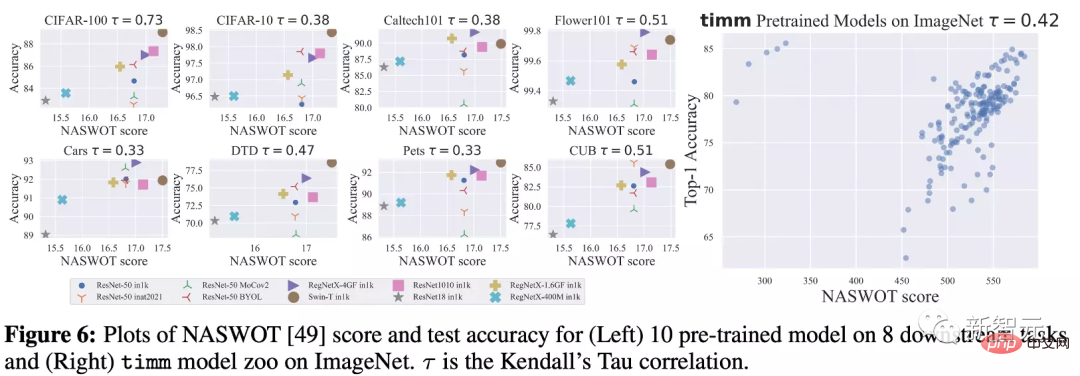

Since this paper is the first to apply NASWOT to training-free prediction of transfer performance, the authors also tested how reliable this indicator is.

In the figure below, the authors compute NASWOT scores for different models on different datasets and compare them with the models' transfer learning accuracy.

The NASWOT scores produce a fairly accurate performance ranking, as measured by Kendall's tau correlation. This shows that the training-free indicator used in the paper can effectively predict a model's performance on downstream data.
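Kendall's tau measures how well two rankings agree. It takes the number of concordant pairs minus discordant pairs and divides by the total number of pairs. Below is a minimal pure-Python sketch (tau-a, no tie correction). The scores and accuracies are made-up numbers, not results from the paper.

```python
from itertools import combinations
from typing import Sequence


def kendall_tau(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Kendall's tau-a between two equally long score lists (no tie correction)."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two equally long sequences with at least 2 items")
    concordant = discordant = 0
    for i, j in combinations(range(n), 2):
        s = (xs[i] - xs[j]) * (ys[i] - ys[j])
        if s > 0:
            concordant += 1   # both lists order the pair the same way
        elif s < 0:
            discordant += 1   # the lists disagree on this pair
    return (concordant - discordant) / (n * (n - 1) / 2)


# Hypothetical proxy scores vs. transfer accuracies for four models
naswot_scores = [1.0, 2.0, 3.0, 4.0]
transfer_acc = [70.0, 75.0, 82.0, 80.0]
print(f"Kendall's tau = {kendall_tau(naswot_scores, transfer_acc):.3f}")  # 0.667
```

A tau close to 1 means the proxy ranks models almost exactly as their real accuracy does. In practice, `scipy.stats.kendalltau` computes the tie-corrected tau-b variant.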

Summary

This paper proposes a new knowledge transfer task called Deep Model Reassembly (DeRy), which builds models adapted to downstream tasks by breaking up existing heterogeneous pre-trained models and reassembling the pieces.

The authors propose a simple two-stage implementation of this task. First, DeRy solves a set cover problem and splits all pre-trained networks by functional level; second, DeRy formulates model assembly as a 0-1 integer programming problem so that the assembled model performs optimally on the specific task.

This work not only achieved strong performance improvements, but also mapped the possible connectivity between different neural networks.
