Google's AI singer is coming! AudioLM can compose music and speech after hearing just a few seconds of audio.

Image generation models are locked in fierce competition, and so are video generation models!

Next up: audio generation models.

Recently, a Google Research team unveiled AudioLM, an AI model for audio generation.

Given a prompt of just a few seconds of audio, it can generate not only high-quality, coherent speech but also piano music.

Paper: https://www.php.cn/link/b6b3598b407b7f328e3129c74ca8ca94

AudioLM is a framework for high-quality audio generation with long-term consistency. It maps input audio to a sequence of discrete tokens, turning audio generation into a language modeling task.

Existing audio tokenizers force a trade-off between the quality of the generated audio and a stable long-term structure.

To resolve this tension, Google adopts a "hybrid tokenization" scheme: it uses discretized activations of a pre-trained masked language model to capture long-term structure, and discrete codes produced by a neural audio codec to achieve high-quality synthesis.

When trained on speech, without any transcripts or annotations, AudioLM learns to generate natural, coherent continuations from short prompts. The continuations are syntactically fluent and semantically plausible, while preserving the speaker's identity and intonation.

Beyond speech, AudioLM can also generate coherent piano music, without being trained on any symbolic representation of music.

From text to piano music: two major issues

In recent years, language models trained on massive text corpora have demonstrated excellent generative capabilities, enabling open-ended dialogue, machine translation and even common-sense reasoning. They can also model signals other than text, such as natural images.

The idea of AudioLM is to leverage these advances in language modeling to generate audio without training on annotated data.

But doing so means tackling two problems.

First, audio has a much higher data rate, so its sequences are far longer. A written sentence might be represented by a few dozen characters, but the same sentence as an audio waveform typically contains hundreds of thousands of values.
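To put rough numbers on this gap, the Python sketch below compares sequence lengths for one spoken sentence. Every constant in it is an assumption chosen for illustration: a 150-character sentence lasting 10 seconds, 16 kHz audio, and token rates (25 semantic tokens per second; 50 codec frames per second with 12 quantizer levels each) that match our reading of the AudioLM paper but are not stated in this article.

```python
"""Rough sequence-length comparison for one spoken sentence (illustrative numbers only)."""

# All constants are assumptions for illustration, not values measured in this article.
SAMPLE_RATE_HZ = 16_000      # assumed waveform sample rate
SEMANTIC_RATE_HZ = 25        # assumed semantic tokens per second
CODEC_FRAME_RATE_HZ = 50     # assumed acoustic-codec frames per second
NUM_QUANTIZERS = 12          # assumed acoustic tokens per codec frame


def sequence_lengths(num_chars: int, duration_s: float) -> dict[str, int]:
    """Return the number of values each representation needs for one sentence."""
    return {
        "text_characters": num_chars,
        "waveform_samples": round(duration_s * SAMPLE_RATE_HZ),
        "semantic_tokens": round(duration_s * SEMANTIC_RATE_HZ),
        "acoustic_tokens": round(duration_s * CODEC_FRAME_RATE_HZ * NUM_QUANTIZERS),
    }


if __name__ == "__main__":
    # Hypothetical sentence: 150 characters, about 10 seconds when spoken.
    for name, length in sequence_lengths(150, 10.0).items():
        print(f"{name:>16}: {length:,}")
```

Under these assumptions the raw waveform is roughly a thousand times longer than the text, and even the compressed acoustic token stream is about forty times longer, which is why compact discrete tokens matter for language-model-style generation.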

Second, the relationship between text and audio is one-to-many: the same sentence can be spoken by different speakers, in different styles, with different emotional content and in different contexts.

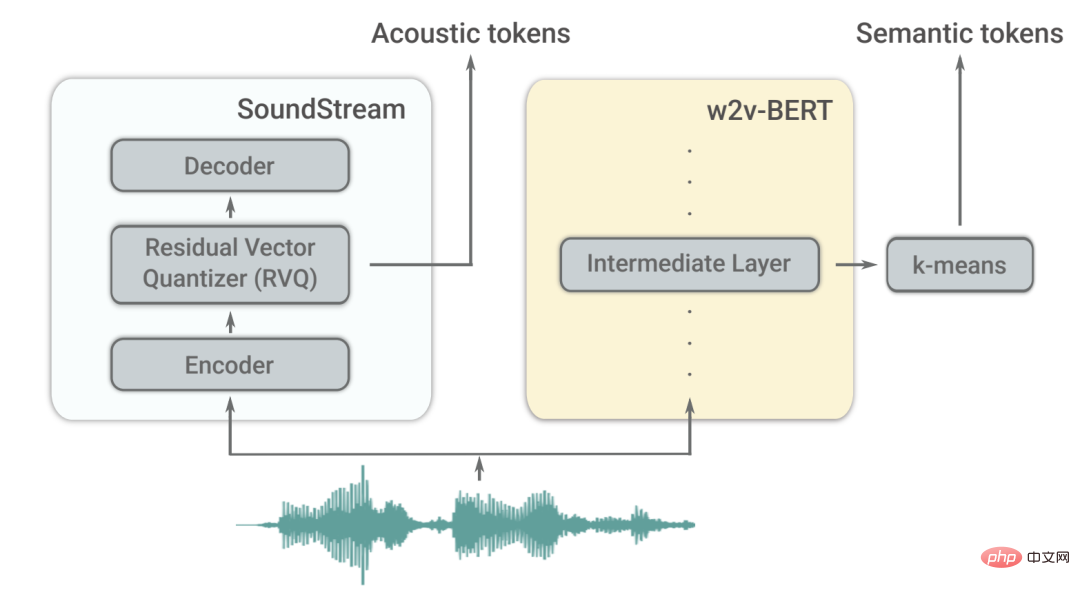

To overcome these two challenges, AudioLM uses two kinds of audio tokens.

First, semantic tokens are extracted from w2v-BERT, a self-supervised audio model.

These tokens capture both local dependencies (such as phonetics in speech or local melodies in piano music) and global long-term structure (such as syntax and semantic content in speech, or harmony and rhythm in piano music), while heavily downsampling the audio signal so that long sequences can be modeled.
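The article does not spell out how continuous model activations become discrete tokens. A common approach, and the one we understand AudioLM to use, is to cluster embedding frames with k-means and replace each frame by the index of its nearest centroid. The sketch below shows only that assignment step; the tiny 2-D embeddings and centroids are made-up stand-ins for real w2v-BERT activations and trained cluster centers, and the downsampling itself (which happens inside the audio model) is not shown.

```python
import math
from typing import Sequence

Vector = Sequence[float]


def nearest_centroid(frame: Vector, centroids: Sequence[Vector]) -> int:
    """Return the index of the centroid closest to `frame` (squared Euclidean distance)."""
    best_index, best_dist = 0, math.inf
    for index, centroid in enumerate(centroids):
        dist = sum((a - b) ** 2 for a, b in zip(frame, centroid))
        if dist < best_dist:
            best_index, best_dist = index, dist
    return best_index


def to_semantic_tokens(frames: Sequence[Vector], centroids: Sequence[Vector]) -> list[int]:
    """Replace each continuous embedding frame with the id of its nearest cluster."""
    return [nearest_centroid(frame, centroids) for frame in frames]


if __name__ == "__main__":
    # Toy stand-ins: real embeddings are high-dimensional and centroids come from k-means training.
    toy_centroids = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]
    toy_frames = [(0.1, 0.0), (0.9, 0.2), (0.1, 0.8), (0.0, 0.1)]
    print(to_semantic_tokens(toy_frames, toy_centroids))  # [0, 1, 2, 0]
```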

However, audio reconstructed from these tokens has low fidelity.

To improve audio quality, AudioLM complements the semantic tokens with acoustic tokens produced by the SoundStream neural codec. These capture the fine details of the waveform (such as speaker characteristics or recording conditions) needed for high-quality synthesis.
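SoundStream turns each frame embedding into several acoustic tokens using residual vector quantization (RVQ): the first codebook approximates the vector, and each later codebook quantizes whatever error the earlier levels left behind. The sketch below is a toy version with hand-picked one-dimensional codebooks; a real codec learns its codebooks during training and works on high-dimensional frame embeddings.

```python
from typing import Sequence

Vector = Sequence[float]
Codebook = Sequence[Vector]


def closest_code(vec: Vector, codebook: Codebook) -> int:
    """Index of the codeword nearest to `vec` (squared Euclidean distance)."""
    return min(
        range(len(codebook)),
        key=lambda i: sum((a - b) ** 2 for a, b in zip(vec, codebook[i])),
    )


def rvq_encode(vec: Vector, codebooks: Sequence[Codebook]) -> list[int]:
    """Residual vector quantization: each level quantizes what earlier levels missed."""
    residual = list(vec)
    codes = []
    for codebook in codebooks:
        index = closest_code(residual, codebook)
        codes.append(index)
        # Subtract the chosen codeword so the next level sees only the remaining error.
        residual = [r - c for r, c in zip(residual, codebook[index])]
    return codes


if __name__ == "__main__":
    # Hand-picked 1-D codebooks whose step sizes shrink level by level (toy values).
    toy_codebooks = [
        [(0.0,), (1.0,), (2.0,)],
        [(-0.25,), (0.0,), (0.25,)],
        [(-0.1,), (0.0,), (0.1,)],
    ]
    print(rvq_encode((1.32,), toy_codebooks))  # [1, 2, 2]
```

Because each level refines the previous one, dropping the later levels still leaves a usable, if rougher, approximation; this is what makes it possible to split acoustic tokens into coarse and fine groups.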

How is it trained?

AudioLM is an audio-only model trained without any symbolic representation of text or music.

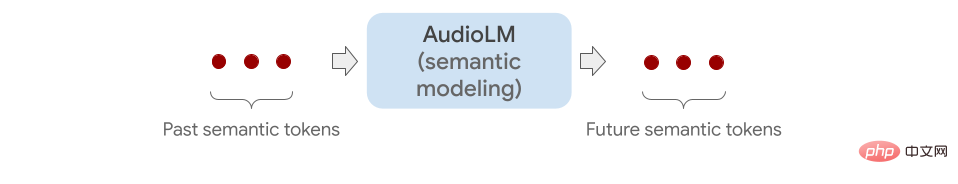

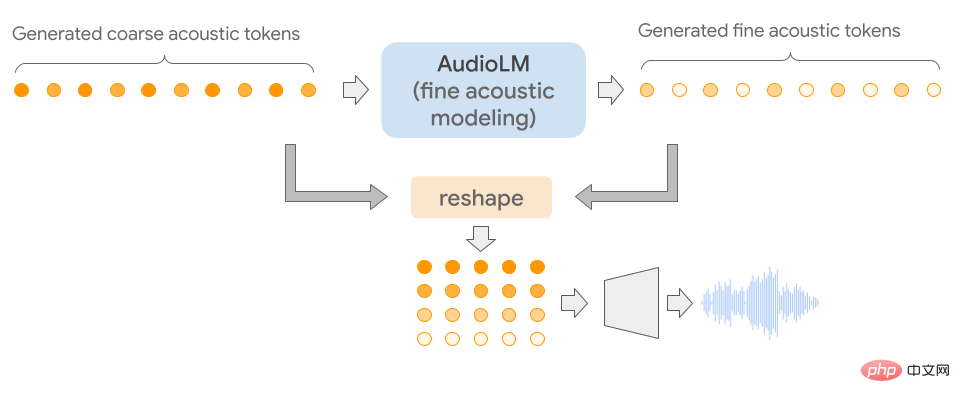

It models audio hierarchically, chaining several Transformer models, one per stage, that progress from semantic tokens to fine acoustic tokens.

Each stage is trained to predict the next token from the preceding tokens, exactly like a language model.
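The sketch below shows what "predict the next token" means as a training objective: one token sequence becomes many (prefix, next-token) examples. To keep it runnable without a deep-learning framework, a count-based bigram model stands in for the Transformer; unlike a Transformer, which attends to the whole prefix, this toy model looks only at the last token. `BigramModel` is a hypothetical illustration, not part of AudioLM.

```python
from collections import Counter, defaultdict
from typing import Optional, Sequence


def next_token_examples(tokens: Sequence[int]) -> list[tuple[list[int], int]]:
    """Turn one token sequence into (prefix, next-token) training pairs."""
    return [(list(tokens[:i]), tokens[i]) for i in range(1, len(tokens))]


class BigramModel:
    """Toy stand-in for a Transformer stage: it looks only at the last token of the prefix."""

    def __init__(self) -> None:
        self.counts: dict[int, Counter] = defaultdict(Counter)

    def fit(self, sequences: Sequence[Sequence[int]]) -> "BigramModel":
        for seq in sequences:
            for prefix, target in next_token_examples(seq):
                self.counts[prefix[-1]][target] += 1
        return self

    def predict(self, prefix: Sequence[int]) -> Optional[int]:
        """Most frequent token seen after the prefix's last token, or None if unseen."""
        options = self.counts.get(prefix[-1])
        if not options:
            return None
        return options.most_common(1)[0][0]


if __name__ == "__main__":
    print(next_token_examples([5, 7, 9]))  # [([5], 7), ([5, 7], 9)]
    model = BigramModel().fit([[1, 2, 3], [1, 2, 4], [1, 2, 3]])
    print(model.predict([1, 2]))  # 3
```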

The first stage performs this task on semantic tokens to model the high-level structure of the audio sequence.

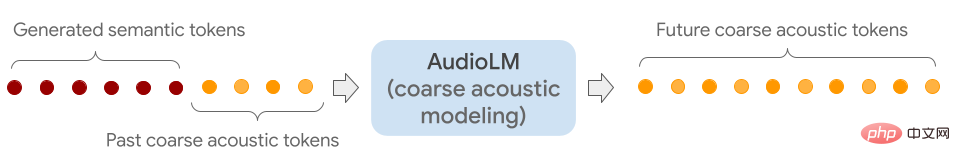

In the second stage, the entire semantic token sequence is concatenated with the past coarse acoustic tokens, and both are fed as conditioning to the coarse acoustic model, which then predicts the future coarse tokens.

This step models acoustic properties, such as speaker characteristics or timbre in music.
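A minimal sketch of how the second-stage training examples could be laid out, under stated assumptions: semantic and acoustic ids share one input vocabulary, with acoustic ids shifted past an assumed semantic vocabulary size of 1024. That layout, and the function names, are hypothetical; the article only says that the full semantic sequence and the past coarse tokens are both used as conditioning.

```python
from typing import Sequence

# Assumed vocabulary size for semantic tokens; acoustic ids are shifted past it
# so that both token types can share one input vocabulary (a hypothetical layout).
SEMANTIC_VOCAB = 1024


def coarse_stage_input(
    semantic: Sequence[int], coarse_prefix: Sequence[int], semantic_vocab: int = SEMANTIC_VOCAB
) -> list[int]:
    """Full semantic sequence followed by the (shifted) coarse acoustic tokens generated so far."""
    if any(not 0 <= t < semantic_vocab for t in semantic):
        raise ValueError("semantic token id out of range")
    return list(semantic) + [semantic_vocab + t for t in coarse_prefix]


def coarse_stage_examples(
    semantic: Sequence[int], coarse: Sequence[int], semantic_vocab: int = SEMANTIC_VOCAB
) -> list[tuple[list[int], int]]:
    """(context, target) pairs: each coarse token is predicted from all semantic tokens plus past coarse tokens."""
    return [
        (coarse_stage_input(semantic, coarse[:i], semantic_vocab), coarse[i])
        for i in range(len(coarse))
    ]


if __name__ == "__main__":
    # Tiny vocabulary so the shifted ids are easy to read.
    print(coarse_stage_examples([3, 1], [0, 5], semantic_vocab=10))
    # [([3, 1], 0), ([3, 1, 10], 5)]
```

Note that the whole semantic sequence is visible at every step, while only the coarse tokens before the target are, which matches the description above.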

In the third stage, a fine acoustic model processes the coarse acoustic tokens, adding further detail to the final audio.
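Which quantizer levels count as "coarse" and which as "fine" is a design choice. As we understand it, AudioLM treats the first few RVQ levels of each SoundStream frame as coarse tokens and the remaining levels as fine tokens. The sketch below, with a hypothetical `num_coarse` parameter, shows how per-frame codes can be split and flattened frame by frame into the two sequences that the second and third stages model.

```python
from typing import Sequence


def split_coarse_fine(
    frames: Sequence[Sequence[int]], num_coarse: int
) -> tuple[list[int], list[int]]:
    """Split per-frame RVQ codes into coarse and fine streams, flattened frame by frame.

    `num_coarse` (hypothetical parameter) is how many leading quantizer levels count as coarse.
    """
    coarse: list[int] = []
    fine: list[int] = []
    for codes in frames:
        coarse.extend(codes[:num_coarse])  # leading levels: rough acoustic detail
        fine.extend(codes[num_coarse:])    # remaining levels: fine detail
    return coarse, fine


if __name__ == "__main__":
    # Two frames with four quantizer levels each (toy ids).
    print(split_coarse_fine([[1, 2, 3, 4], [5, 6, 7, 8]], num_coarse=2))
    # ([1, 2, 5, 6], [3, 4, 7, 8])
```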

Finally, the acoustic tokens are fed into the SoundStream decoder to reconstruct the waveform.
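Turning tokens back into audio happens in two parts. As we understand SoundStream, each frame's RVQ codes are first mapped back to an embedding by summing the selected codeword from every level, and a learned neural decoder then converts those embeddings into waveform samples. Only the first, purely arithmetic part is sketched here, using toy one-dimensional codebooks; the neural decoder is out of scope.

```python
from typing import Sequence

Vector = Sequence[float]


def rvq_decode(codes: Sequence[int], codebooks: Sequence[Sequence[Vector]]) -> list[float]:
    """Rebuild a frame embedding by summing the selected codeword from each level.

    If fewer codes than codebooks are given, only those leading levels are used,
    which yields a coarser approximation.
    """
    dim = len(codebooks[0][0])
    embedding = [0.0] * dim
    for index, codebook in zip(codes, codebooks):
        embedding = [e + c for e, c in zip(embedding, codebook[index])]
    return embedding


if __name__ == "__main__":
    toy_codebooks = [
        [(0.0,), (1.0,), (2.0,)],
        [(-0.25,), (0.0,), (0.25,)],
        [(-0.1,), (0.0,), (0.1,)],
    ]
    print(rvq_decode([1], toy_codebooks))        # coarse only: [1.0]
    print(rvq_decode([1, 2, 2], toy_codebooks))  # all levels: approximately [1.35]
```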

Once trained, AudioLM can be conditioned on a few seconds of audio and generate a continuation of it.
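Continuation works like text generation: the prompt audio is tokenized, and the model repeatedly samples a next token and appends it. The sketch below shows that loop for a single stage. `next_token_probs` is a hypothetical stand-in for a trained stage model that returns a probability for every token id; in the usage example it is a deterministic toy function so the output is predictable.

```python
import random
from typing import Callable, Sequence

# Hypothetical interface: given the tokens so far, return one probability per token id.
NextTokenFn = Callable[[Sequence[int]], Sequence[float]]


def continue_tokens(
    prompt: Sequence[int], next_token_probs: NextTokenFn, num_new: int, rng: random.Random
) -> list[int]:
    """Autoregressively extend `prompt` by `num_new` sampled tokens."""
    tokens = list(prompt)
    for _ in range(num_new):
        probs = next_token_probs(tokens)
        # Sample one token id in proportion to the model's probabilities.
        next_tok = rng.choices(range(len(probs)), weights=probs, k=1)[0]
        tokens.append(next_tok)
    return tokens


def toy_model(tokens: Sequence[int]) -> list[float]:
    """Deterministic toy: always predicts (last token + 1) mod 4."""
    probs = [0.0] * 4
    probs[(tokens[-1] + 1) % 4] = 1.0
    return probs


if __name__ == "__main__":
    print(continue_tokens([0, 1], toy_model, num_new=3, rng=random.Random(0)))
    # [0, 1, 2, 3, 0]
```

In AudioLM this loop would run once per stage, with each later stage conditioned on the tokens produced by the earlier ones.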

To demonstrate AudioLM's general applicability, the researchers tested it on two tasks from different audio domains.

The first is speech continuation: the model preserves the speaker characteristics and prosody of the prompt while producing new content that is syntactically correct and semantically consistent.

The second is piano continuation, which generates piano music consistent with the prompt in melody, harmony and rhythm.

In the examples below, everything you hear after the gray vertical line was generated by AudioLM.

To evaluate the results, the researchers asked human raters to listen to short audio clips and decide whether each was an original recording of human speech or a continuation generated by AudioLM.

According to the collected ratings, the raters' success rate was only 51.2%, close to the 50% expected from random guessing. In other words, ordinary listeners find it hard to distinguish the speech generated by this AI model from real speech.

Rupal Patel, who studies information and language science at Northeastern University, said that previous work using artificial intelligence to generate audio could capture these nuances only if they were explicitly annotated in the training data.

In contrast, AudioLM learns these features automatically from the input data while still achieving high-fidelity results.

With the emergence of generative ML models such as GPT-3 and BLOOM (text generation), DALL-E and Stable Diffusion (image generation), and RunwayML and Make-A-Video (video generation), content creation and creative work are changing.

The future world is a world generated by artificial intelligence.

References:

https://www.php.cn/link/c11cb55c3d8dcc03a7ab7ab722703e0a

https://www.php.cn/link/b6b3598b407b7f328e3129c74ca8ca94

https://www.php.cn/link/c5f7756d9f92a8954884ec415f79d120

https://www.php.cn/link/9b644ca9f37e3699ddf2055800130aa9
