Data closed-loop research: The development of autonomous driving shifts from technology-driven to data-driven

Zuosi Auto R&D has released the "2022 China Autonomous Driving Data Closed-Loop Research Report".

1. The development of autonomous driving gradually shifts from technology-driven to data-driven

Autonomous driving sensor solutions and computing platforms have become increasingly homogeneous, and the technology gap between suppliers is narrowing. In the past two years, autonomous driving technology has iterated rapidly and mass production has accelerated. According to Zuosi Data Center, the cumulative number of domestic passenger vehicles equipped with L2 assisted driving reached 4.79 million in 2021, a year-on-year increase of 58.0%. From January to June 2022, the penetration rate of L2 assisted driving in China's new passenger car market climbed to 32.4%.

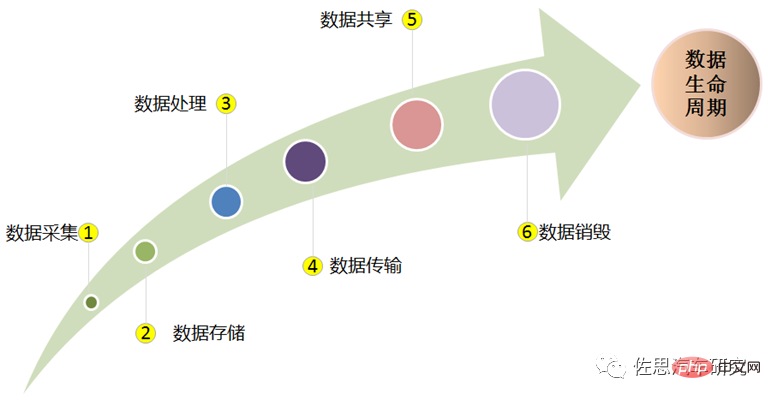

For autonomous driving, data runs through the entire life cycle of research and development, testing, mass production, and operation and maintenance. As the number of sensors on intelligent connected vehicles rises rapidly, the amount of data generated by ADAS and autonomous vehicles is growing exponentially, from GB to TB, PB, and EB, and eventually to ZB. Only car companies that use data to drive vehicle evolution and meet users' personalized needs will stay competitive over the long term.

According to the "Safety Guidelines for Automobile Collection Data Processing", automobile collection data refers to the data collected by automobile sensing equipment and control units, as well as the data generated after processing. It can be further divided into off-vehicle data, cockpit data, operating data, location trajectory data, and so on.

The "Several Regulations on Automobile Data Security Management (Trial)", promulgated by the Cyberspace Administration of China in August 2021, specify in detail the entire process of handling automobile data, including collection, analysis, storage, transmission, query, application, and deletion. Automobile data processing should adhere to the principles of "in-car processing", "no collection by default", "applicable accuracy range", and "desensitization processing" to reduce the disorderly collection and illegal abuse of car data. In developing autonomous driving technology, data collection and processing must first of all be legal and compliant.

Data collection/cleaning

The large amounts of unstructured data (images, video, speech) collected by vehicle cameras, millimeter-wave radar, lidar, and ultrasonic radar can be raw and chaotic. To make the data meaningful, it needs to be cleaned, structured, and organized. Data from multiple sources is first imported into the appropriate repository, standardized in format, and aggregated according to the relevant rules. The data is then checked for corrupted, duplicate, or missing data points, and unnecessary data that could lower the overall quality of the data set is discarded. Finally, labels are used to classify videos captured under different conditions, such as day, night, sunny, or rainy. This step yields clean, structured data for training and validation.
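The cleaning steps above can be sketched in a few lines. This is a minimal illustration only: the `Clip` schema, the checksum-based duplicate check, and the 06:00 to 18:00 definition of "day" are assumptions made for the example, not something specified in the report.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Clip:
    """One recorded camera clip (hypothetical schema, for illustration only)."""
    clip_id: str
    checksum: str                # content hash used to spot duplicates
    duration_s: Optional[float]  # None models a missing field
    hour: int                    # local hour of day, 0-23
    weather: str                 # free-text weather string from the logger


def clean_and_tag(clips):
    """Drop corrupted, duplicate, or incomplete clips, then tag the rest."""
    seen = set()
    cleaned = []
    for clip in clips:
        if clip.duration_s is None or clip.duration_s <= 0:
            continue  # missing or corrupted duration
        if clip.checksum in seen:
            continue  # duplicate content
        seen.add(clip.checksum)
        weather = clip.weather.strip().lower()  # standardize the format
        time_of_day = "day" if 6 <= clip.hour < 18 else "night"  # assumed cutoff
        cleaned.append({"clip_id": clip.clip_id, "tags": (time_of_day, weather)})
    return cleaned


raw = [
    Clip("a", "h1", 12.0, 9, " Sunny "),
    Clip("b", "h1", 12.0, 9, "sunny"),   # duplicate of "a"
    Clip("c", "h2", None, 22, "rainy"),  # missing duration
    Clip("d", "h3", 8.5, 23, "Rainy"),
]
result = clean_and_tag(raw)
```

Here only clips "a" and "d" survive, tagged as day/sunny and night/rainy respectively. A production pipeline would add many more checks (timestamp gaps, sensor synchronization, file integrity), but the order of operations is the same.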

Data annotation

The structured data produced by collection and cleaning then needs to be labeled. Annotation is the process of assigning coded values to raw data. Coded values include, but are not limited to, class labels, bounding boxes, and object boundaries. High-quality annotations are needed both to teach supervised learning models what objects are and to measure the performance of trained models.
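To make the idea of a bounding-box annotation concrete, the sketch below represents a box with a class label and compares a human annotation with a model prediction using intersection over union (IoU), a common overlap measure. The report does not name a specific metric, so IoU and the `Box` structure are assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Box:
    """Axis-aligned 2D bounding box with a class label (pixel coordinates)."""
    label: str
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    def area(self) -> float:
        return max(0.0, self.x_max - self.x_min) * max(0.0, self.y_max - self.y_min)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two boxes; 0.0 when they do not overlap."""
    inter_w = min(a.x_max, b.x_max) - max(a.x_min, b.x_min)
    inter_h = min(a.y_max, b.y_max) - max(a.y_min, b.y_min)
    if inter_w <= 0 or inter_h <= 0:
        return 0.0
    inter = inter_w * inter_h
    return inter / (a.area() + b.area() - inter)


annotated = Box("pedestrian", 0, 0, 10, 10)  # human-drawn label
predicted = Box("pedestrian", 5, 0, 15, 10)  # model output
score = iou(annotated, predicted)
```

The two boxes share a 5 by 10 region out of a combined area of 150, so `score` is one third. A perfect prediction gives 1.0 and a disjoint one gives 0.0, which is why the quality of the annotated box directly limits how well a model can be evaluated.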

In the field of autonomous driving, the scenarios covered by data annotation usually include lane changing and overtaking, passing through intersections, and unprotected left and right turns without traffic light control, as well as complex long-tail scenes such as vehicles running red lights, pedestrians crossing the road, and vehicles parked illegally on the roadside.

Commonly used annotation tools include general image drawing, lane line annotation, driver face annotation, 3D point cloud annotation, 2D/3D fusion annotation, panoramic semantic segmentation, etc. Due to the development of big data and the increase in the number of large data sets, the use of data annotation tools continues to expand rapidly.

Data transmission

Data is now collected at millisecond-level frequency, and what is required is high-precision data across thousands of signal dimensions (such as bus signals, internal sensor states, software tracking points, user behavior, and environment perception data). Data loss, disorder, jumps, and delays must be avoided, and transmission and storage costs must be cut substantially while maintaining high accuracy and high quality. The uplink and downlink paths for Internet of Vehicles data are relatively long (from the vehicle MCU, DCU, and gateway through 4G/5G to the cloud), so transmission quality must be ensured at every node along the link.

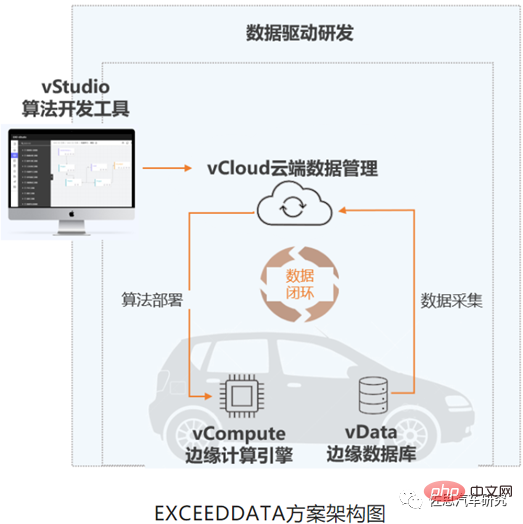

In response to these changes in data transmission, some companies now provide efficient data collection and integrated vehicle-cloud transmission solutions. One example is the EXCEEDDATA flexible data acquisition platform from Zhixiehuitong. In the vehicle's edge computing environment, it runs real-time computation on live data at the 10-millisecond level to trigger flexible data collection and upload. Because the uploaded data has already been computed and filtered, the upload volume is significantly reduced. In addition, the original vehicle signals are stored with lossless compression at ratios of 100 to 300 times. The cloud management platform stores these high-quality vehicle signals losslessly and at a high compression ratio. It supports pushing data acquisition algorithms to vehicles, triggering multiple acquisition modes, and uploading collected data in real time. Data can be downloaded to the business desktop with one click and filtered flexibly by vehicle, event, time period, and so on. With storage separated from computation, the solution forms a closed loop of collection, computation, upload, and processing on an architecture shared by vehicle and cloud. In 2021, the first domestic mass-produced model equipped with the EXCEEDDATA solution, the HiPhi X, was launched.
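The two ideas in this paragraph, uploading only around trigger events and storing signals with lossless compression, can be sketched generically as follows. This is not the EXCEEDDATA implementation: the threshold trigger, the ring buffer size, the record layout, and the use of `zlib` are all assumptions made for the example, and a general-purpose compressor on toy data makes no attempt to reproduce the 100 to 300 times ratio quoted above.

```python
import struct
import zlib
from collections import deque


def collect_on_trigger(samples, threshold, pre_samples=3):
    """Return only the windows around samples whose |value| exceeds threshold.

    A small ring buffer keeps the most recent samples so that each upload
    also carries some context from just before the trigger fired.
    """
    ring = deque(maxlen=pre_samples)
    uploads = []
    for t_ms, value in samples:
        if abs(value) > threshold:
            uploads.append(list(ring) + [(t_ms, value)])
            ring.clear()
        else:
            ring.append((t_ms, value))
    return uploads


def pack_and_compress(window):
    """Serialize (timestamp, value) pairs and compress them losslessly."""
    raw = b"".join(struct.pack("<If", t_ms, value) for t_ms, value in window)
    return raw, zlib.compress(raw, 9)


# Hypothetical signal sampled every 10 ms: flat, with one hard-braking spike.
signal = [(t * 10, 0.0) for t in range(100)]
signal[50] = (500, -8.0)

uploads = collect_on_trigger(signal, threshold=5.0)
raw, packed = pack_and_compress(signal)
restored = zlib.decompress(packed)
```

Out of 100 samples, only one four-sample window (three samples of context plus the spike at 500 ms) is selected for upload, and decompressing `packed` returns the original bytes exactly. Filtering at the edge reduces how much is sent, while lossless compression reduces the cost of what is kept.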

Source: Zhixiehuitong

Data storage

To perceive the surrounding environment more clearly, self-driving cars are equipped with more sensors and generate large amounts of data. Some high-level autonomous driving systems carry more than 40 sensors of various types to accurately perceive the 360° environment around the vehicle. Developing an autonomous driving system involves multiple steps, such as data collection, data aggregation, cleaning and labeling, model training, simulation, and big data analysis. This process involves aggregating and storing massive data, moving data between different systems at different stages, and reading and writing massive amounts of data during model training. Storage thus becomes a new bottleneck.

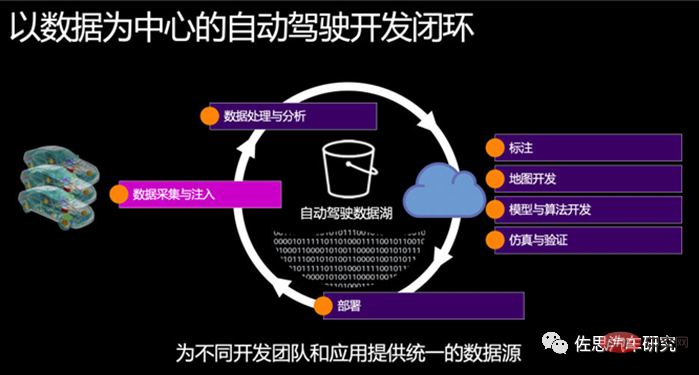

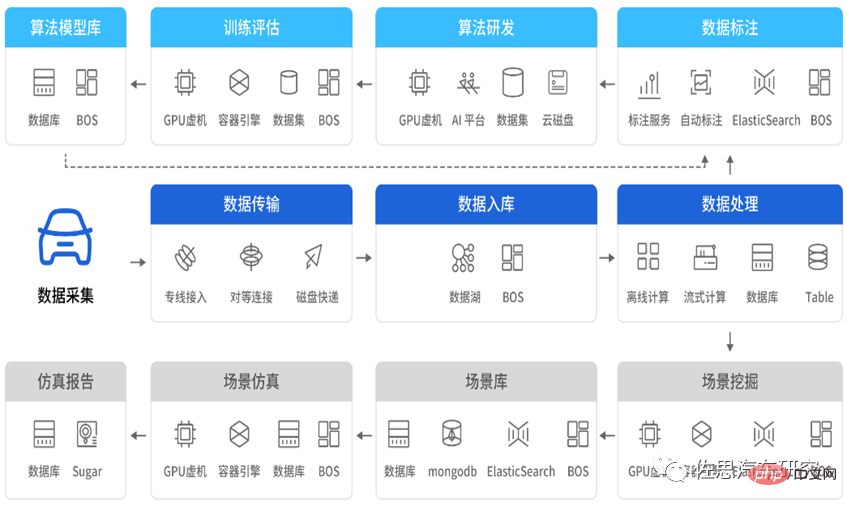

To this end, the technologies and capabilities of cloud service providers in this area have become key to helping car companies succeed. For example, AWS (Amazon Cloud Technology) helps car companies build an end-to-end autonomous driving data closed loop centered on an autonomous driving data lake. The data lake is built on Amazon Simple Storage Service (Amazon S3, a cloud object storage service) and supports data collection, data management and analysis, data annotation, model and algorithm development, simulation verification, map development, DevOps, and MLOps, so that car companies can more easily carry out the entire process of autonomous driving development, testing, and application.

Source: AWS

Among domestic technology giants, Baidu's data closed-loop solution is one example. Its data storage layer provides a retrieval service for multi-source roadside and vehicle data, used for massive data search on the business platform. Its advantages include multi-dimensional retrieval (vehicle information, mileage, autonomous driving duration, etc.), management of the entire data life cycle from production to destruction, a panoramic data view, data traceability, and open data sharing.

Baidu autonomous driving data closed-loop solution architecture

Source: Baidu

2. Efficient development of autonomous driving requires the construction of a data closed-loop system

The development of autonomous driving has shifted from technology-driven to data-driven, but data-driven business models face many difficulties.

Massive data is difficult to process: A high-level autonomous driving test vehicle collects TB-level data every day, and the development team needs PB-level storage space, yet the valuable data that can actually be used for training accounts for less than 5% of the total. In addition, there are strict safety compliance requirements for data collected by sensors such as vehicle cameras, lidar, and high-precision positioning, which poses great challenges to the access, storage, desensitization, and processing of massive data.

The cost of data annotation is high: Data annotation takes up a lot of manpower and time costs. With the development of high-level autonomous driving capabilities, the complexity of scenarios continues to increase, and more difficult scenarios will appear. Improving the accuracy of the vehicle perception model places higher requirements on the scale and quality of the training data set. Traditional manual annotation has been unable to meet the demand for massive data sets for model training in terms of efficiency and cost.

Simulation test efficiency is low: Virtual simulation is an effective means of accelerating the training of autonomous driving algorithms, but simulation scenarios are difficult to construct and reproduce reality with low fidelity, especially complex and dangerous scenarios. In addition, parallel simulation capability is insufficient, so simulation testing is inefficient and the algorithm iteration cycle is too long.

High-precision map coverage is low: High-precision maps are mainly self-collected and self-produced, and cover only designated roads in the experimental stage. Commercial expansion to urban streets in major cities across the country will face prominent challenges in coverage, dynamic updates, cost, and efficiency.

In order to solve various difficulties and problems, efficient development of autonomous driving requires the construction of an efficient data closed-loop system.

Source: Freetech

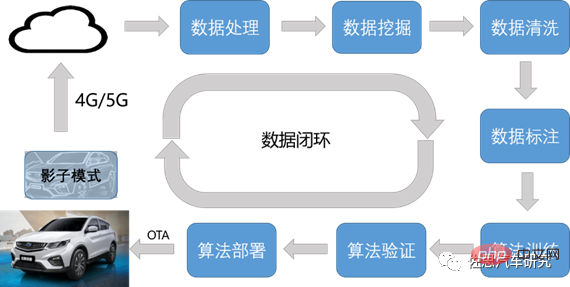

As far as the autonomous driving data closed loop is concerned, corner cases need to be solved continuously as autonomous driving is deployed. This requires sufficient data samples and convenient vehicle-side verification methods. Shadow mode is one of the best solutions for corner cases.

Shadow mode was proposed by Tesla in April 2019. It runs on the vehicle, compares the relevant decisions, and triggers data upload. The self-driving software on sold vehicles continuously records the data detected by the sensors and selectively transmits it back at appropriate times for machine learning and improvement of the original self-driving algorithm.
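The "compare decisions, then trigger upload" idea can be illustrated with a small sketch. It is not Tesla's implementation: it assumes that the comparison is between the human driver's steering and the steering the algorithm would have chosen, and the `Frame` fields and the 5-degree threshold are hypothetical values picked for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Frame:
    """One time step (hypothetical fields, for illustration only)."""
    t_ms: int
    driver_steer_deg: float  # what the human driver actually did
    shadow_steer_deg: float  # what the algorithm would have done


def shadow_mode_triggers(frames, max_diff_deg=5.0):
    """Return timestamps where the shadow decision diverges from the driver."""
    return [
        f.t_ms
        for f in frames
        if abs(f.driver_steer_deg - f.shadow_steer_deg) > max_diff_deg
    ]


drive = [
    Frame(0, 0.0, 0.5),
    Frame(100, 2.0, 1.0),
    Frame(200, 15.0, 1.0),  # driver swerves, algorithm would not have
    Frame(300, 3.0, 2.5),
]
to_upload = shadow_mode_triggers(drive)
```

Only the frame at 200 ms is flagged, so only the sensor data around that moment would be sent back. Moments where the algorithm and the driver agree carry little new information, which is how this kind of trigger keeps upload volume low while concentrating on likely corner cases.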

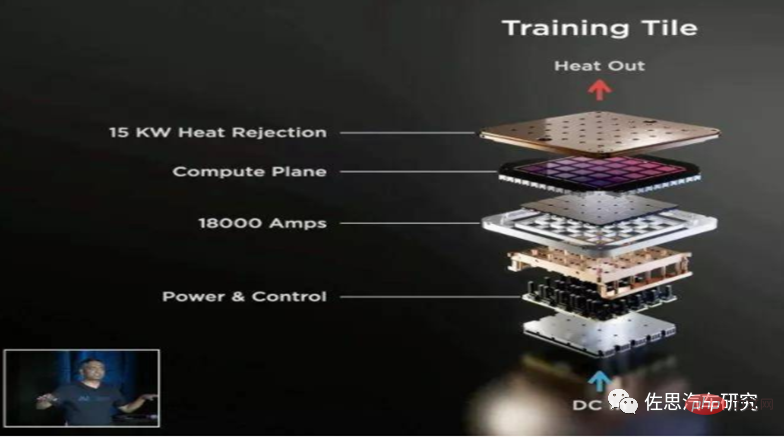

The Dojo supercomputer can use massive video data for unsupervised annotation and training.

In 2021, Tesla delivered 936,200 vehicles globally, of which 484,100 were delivered by the Chinese factory. In the first half of 2022, it delivered 560,000 vehicles. Tesla takes advantage of mass production and continuously optimizes its algorithms through shadow mode. With shadow mode, millions of sold vehicles serve as test vehicles that capture their surroundings and special road conditions, continuously strengthening the ability to predict, avoid, and learn from uncertain events. Because millions of sold vehicles support it, the coverage of corner cases and extreme working conditions is more comprehensive. The high-quality data collected through flexible triggering produces better algorithms, and the quality of algorithm iteration determines the value of the software. In software upgrade subscription services, the explosive power of the data closed loop has only just begun to show.

3. Data closed loop becomes the core of iterative upgrade of autonomous driving

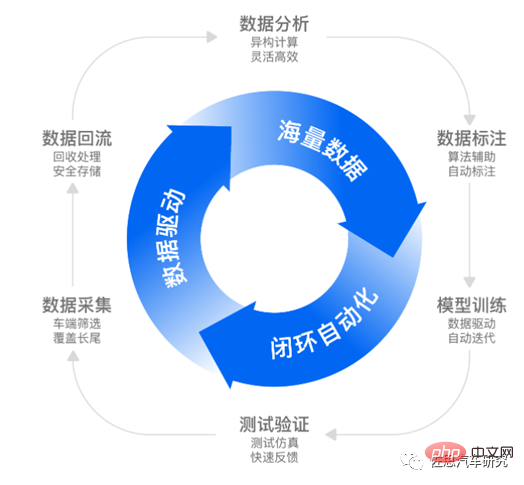

Continuous iteration of an autonomous driving system depends on continuous optimization of its algorithms, and the quality of the algorithms depends on the efficiency of the data closed-loop system. The efficient flow of data through every scenario of autonomous driving development is crucial. Data intelligence will become the key to accelerating the mass production of autonomous driving.

In December 2021, Haomo Zhixing officially released MANA Xuehu, China's first autonomous driving data intelligence system, which accelerates the evolution of autonomous driving technology through five major capabilities: perception, cognition, annotation, simulation, and calculation. Over the next three years, its assisted driving system is expected to be installed on more than 1 million passenger cars. Relying on its fully self-developed autonomous driving system, Haomo Zhixing has built significant advantages in accumulating, processing, and applying data. Massive data speeds up technological iteration, and the benefits in cost reduction and efficiency are clear.

As another example, Momenta has built leading full-process data-driven technical capabilities: algorithm modules such as perception, fusion, prediction, and control can all be iterated and updated efficiently in a data-driven manner. Its Closed Loop Automation (CLA) is a tool chain that lets the data flow drive the automatic iteration of data-driven algorithms. CLA can automatically filter golden data out of massive amounts of data, drive the automatic iteration of algorithms, and make the autonomous driving flywheel spin faster and faster.

Source: Momenta

In the era of software-defined cars, data, algorithms, and computing power are the troika of autonomous driving development. Car companies' research and development cycles are shortening and function iterations are accelerating. In the future, the ability to collect data continuously at low cost and high efficiency, iterate algorithms on real data, and ultimately form both a data closed loop and a business closed loop will be key to the sustainable development of autonomous driving companies.
