For the first time, AI edits images from a single sentence without relying on a generative model!

2022 was the breakout year for AI-generated content (AIGC). One popular direction is editing images through text descriptions (text prompts). Existing methods usually rely on generative models trained on large-scale datasets, which brings high data-acquisition and training costs as well as large model sizes. These factors raise the barrier to developing and applying the technology in practice and limit the growth and creativity of AIGC.

To address these pain points, NetEase Interactive Entertainment AI Lab, in collaboration with Shanghai Jiao Tong University, proposed CLIPVG, a solution based on a differentiable vector renderer that, for the first time, achieves text-guided image editing without relying on any generative model. The solution uses the characteristics of vector elements to constrain the optimization process, so it avoids massive data requirements and high training overhead while still reaching state-of-the-art generation quality. The corresponding paper, "CLIPVG: Text-Guided Image Manipulation Using Differentiable Vector Graphics", has been accepted to AAAI 2023.

- Paper address: https://arxiv.org/abs/2212.02122

- Open source code: https://github.com/NetEase-GameAI/clipvg

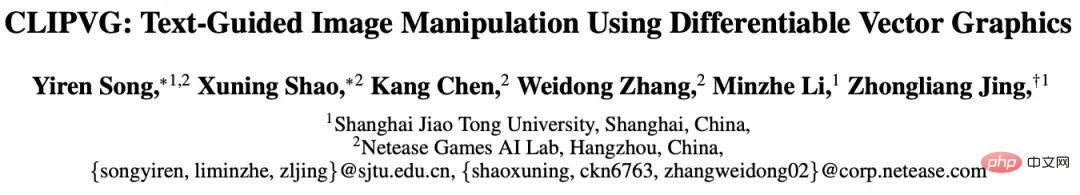

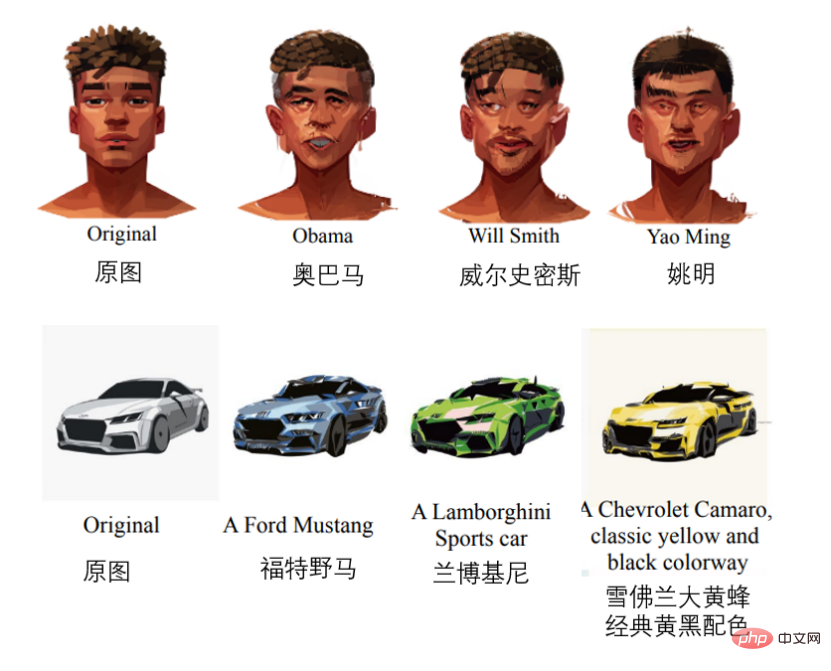

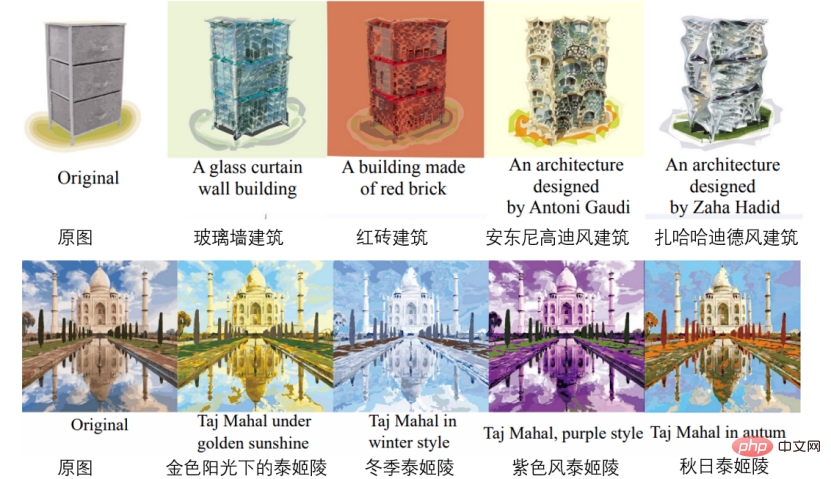

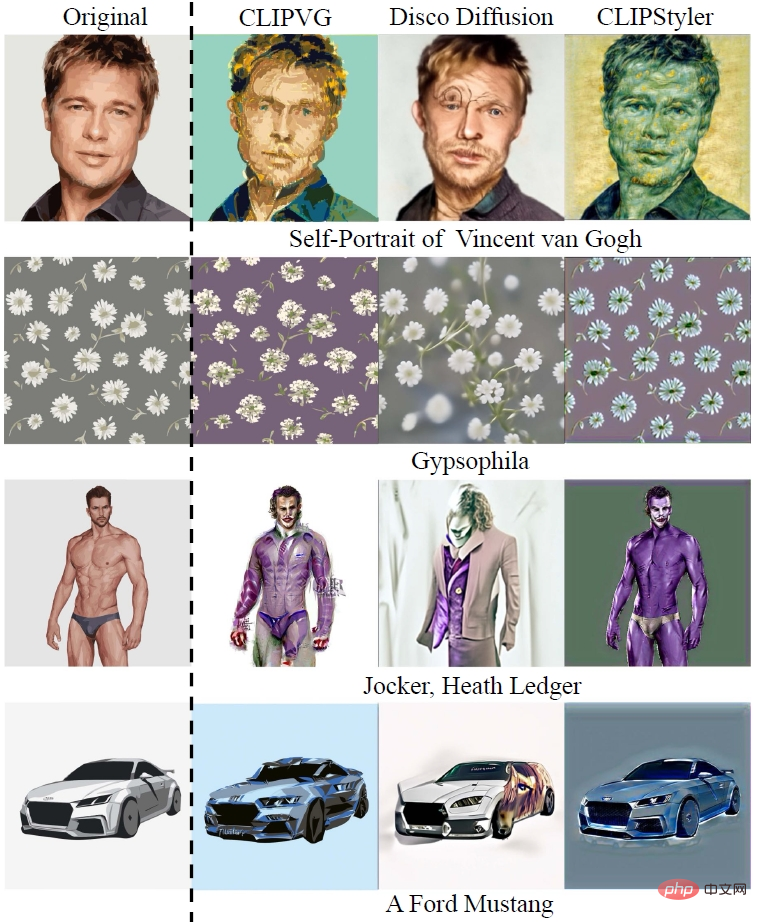

Some of the effects are as follows (in order: face editing, car model modification, building generation, color change, pattern modification, font modification).

Ideas and technical background

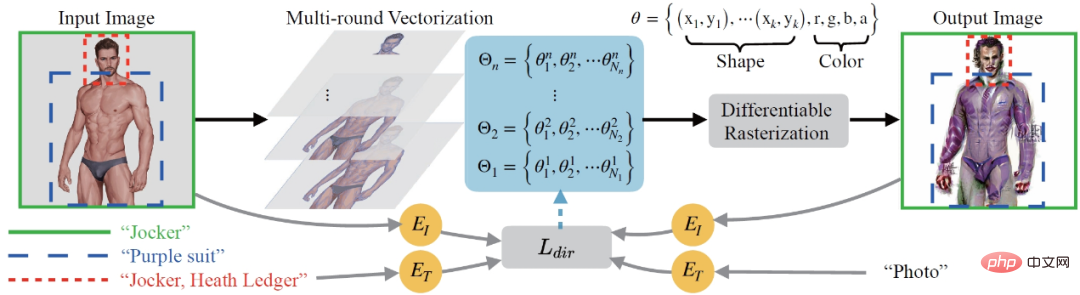

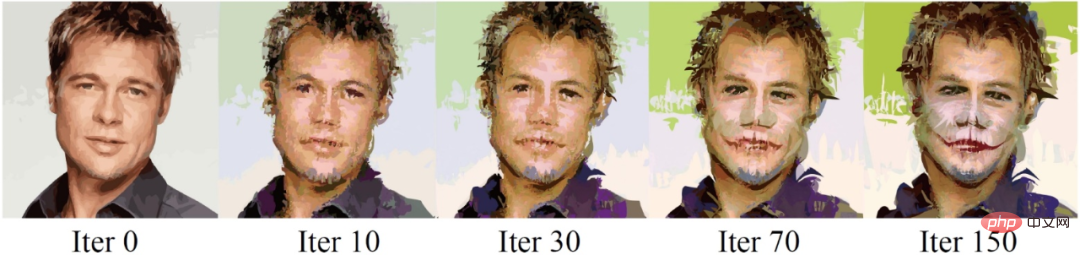

In terms of the overall pipeline, CLIPVG first proposes a multi-round vectorization method that robustly converts a pixel image into the vector domain and adapts it to subsequent editing needs. An ROI CLIP loss is then defined as the loss function, supporting guidance with a different text for each region of interest (ROI). The entire optimization process uses a differentiable vector renderer to compute gradients with respect to the vector parameters (such as fill colors and control points).

CLIPVG combines techniques from two fields: text-guided image editing in the pixel domain, and the generation of vector images. The relevant technical background for each is introduced in turn below.

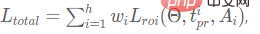

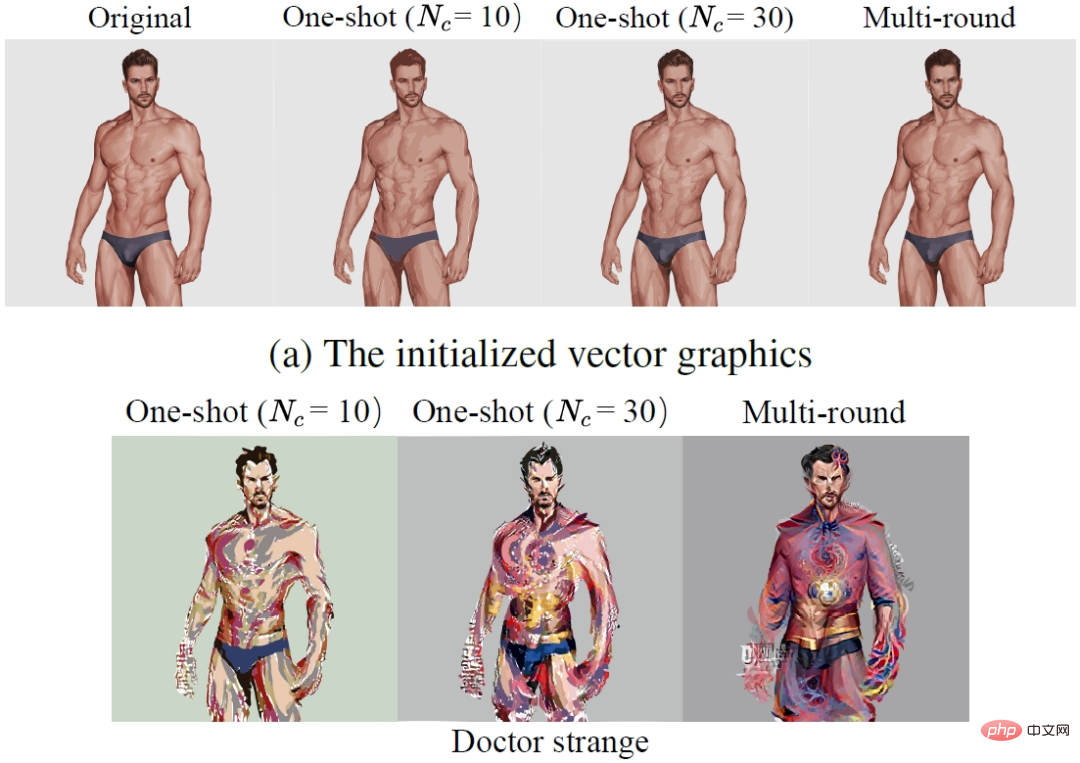

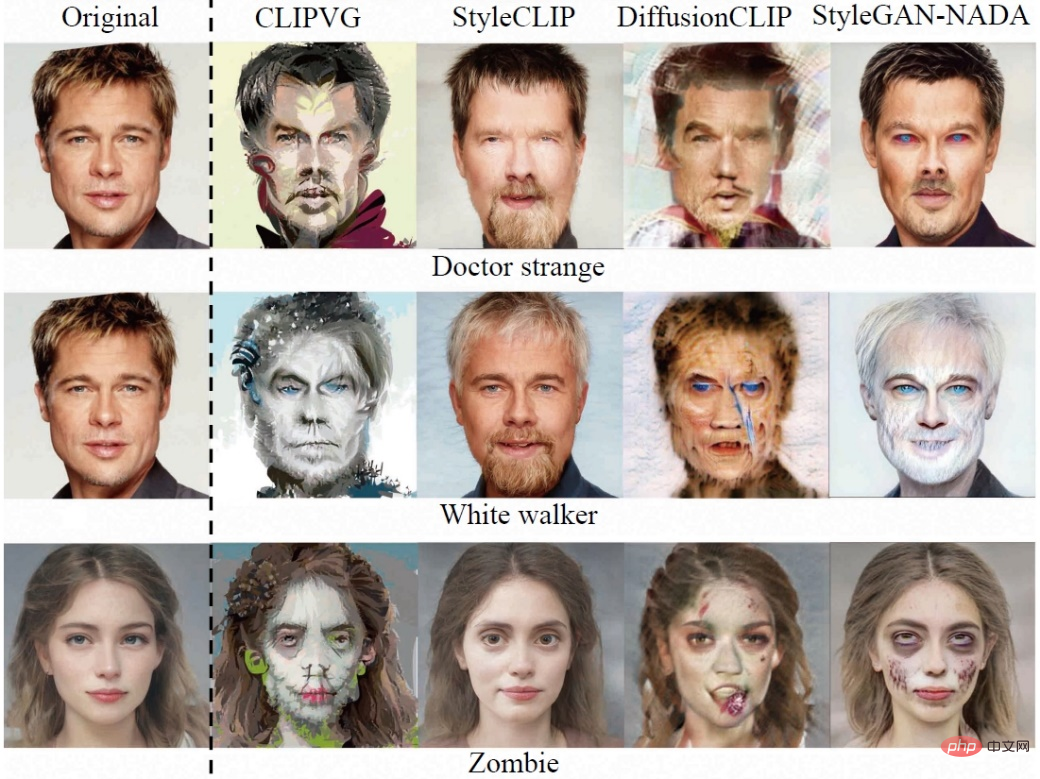

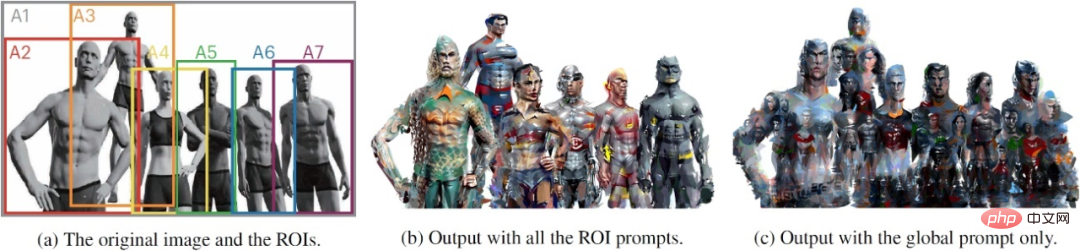

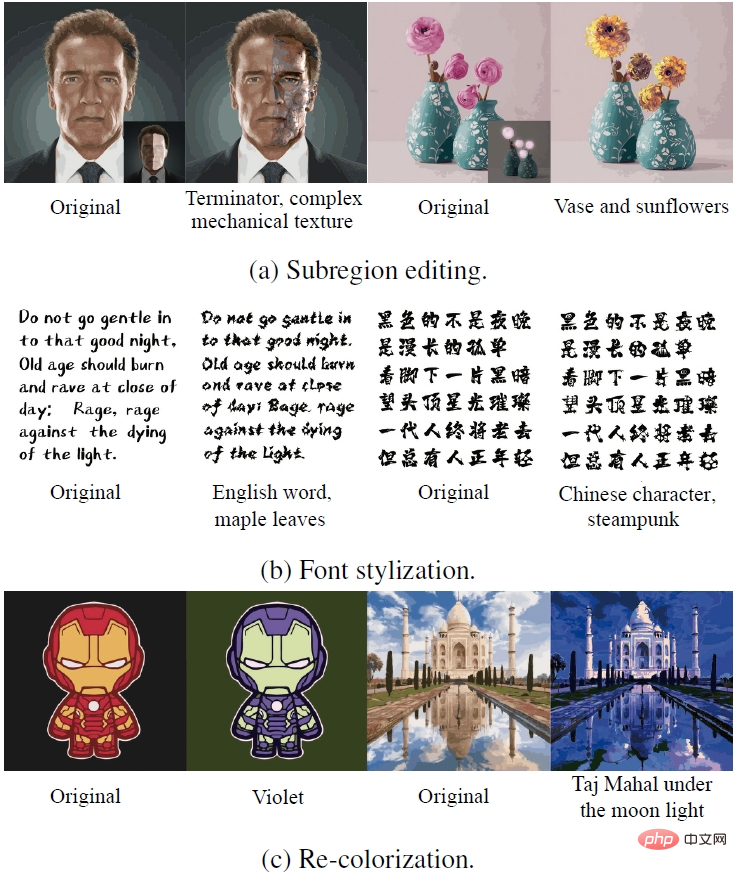

Text-guided image translation

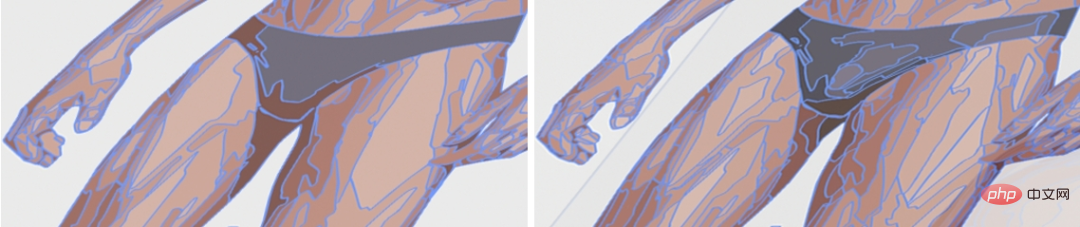

The typical way to let AI "understand" text guidance during image editing is to use the Contrastive Language-Image Pre-Training (CLIP) model. CLIP encodes text and images into a shared, comparable latent space and provides cross-modal similarity information about whether an image matches a text description, thereby establishing a semantic connection between text and images. In practice, however, it is difficult to guide image editing effectively with CLIP alone. CLIP mainly attends to the high-level semantics of an image and lacks constraints on pixel-level details, so the optimization easily falls into a local minimum or an adversarial solution.

The common existing approach is to combine CLIP with a pixel-domain generative model based on a GAN or diffusion, such as StyleCLIP (Patashnik et al. 2021), StyleGAN-NADA (Gal et al. 2022), Disco Diffusion (alembics 2022), DiffusionCLIP (Kim, Kwon, and Ye 2022), and DALL·E 2 (Ramesh et al. 2022). These schemes use the generative model to constrain image details, making up for the shortcomings of using CLIP alone. At the same time, these generative models depend heavily on training data and computing resources, and the effective range of image editing becomes limited by the training images. Constrained by the capacity of their generative models, methods such as StyleCLIP, StyleGAN-NADA, and DiffusionCLIP can only restrict a single model to a specific domain, such as face images. Methods such as Disco Diffusion and DALL·E 2 can edit arbitrary images, but they require massive data and computing resources to train the corresponding generative models.

Very few current solutions do not rely on a generative model; one example is CLIPstyler (Kwon and Ye 2022). During optimization, CLIPstyler divides the image to be edited into random patches and applies CLIP guidance to each patch to strengthen the constraints on image details.
The problem is that each patch will independently reflect the semantics defined by the input text. As a result, this solution can only perform style transfer, but cannot perform overall high-level semantic editing of the image. Different from the above pixel domain methods, the CLIPVG solution proposed by NetEase Interactive Entertainment AI Lab uses the characteristics of vector graphics to constrain image details to replace the generative model. CLIPVG can support any input image and can perform general-purpose image editing. Its output is a standard svg format vector graphic, which is not limited by resolution. Some existing works consider text-guided vector graphics generation, such as CLIPdraw (Frans, Soros, and Witkowski 2021), StyleCLIPdraw (Schaldenbrand, Liu, and Oh 2022) et al. A typical approach is to combine CLIP with a differentiable vector renderer, and start from randomly initialized vector graphics and gradually approximate the semantics represented by the text. The differentiable vector renderer used is Diffvg (Li et al. 2020), which can rasterize vector graphics into pixel images through differentiable rendering. CLIPVG also uses Diffvg to establish the connection between vector images and pixel images. Different from existing methods, CLIPVG focuses on how to edit existing images rather than directly generating them. Since most of the existing images are pixel images, they need to be vectorized before they can be edited using the characteristics of vector graphics. Existing vectorization methods include Adobe Image Trace (AIT), LIVE (Ma et al. 2022), etc., but these methods do not consider subsequent editing needs. CLIPVG introduces multiple rounds of vectorization enhancement methods based on existing methods to specifically improve the robustness of image editing. Technical implementation The overall process of CLIPVG is shown in the figure below. 
First, the input pixel image is subjected to multi-round vectorization (Multi-round Vectorization) with different precisions, where the set of vector elements obtained in the i-th round is marked as Θi. The results obtained in each round will be superimposed together as an optimization object, and converted back to the pixel domain through differentiable vector rendering (Differentiable Rasterization). The starting state of the output image is the vectorized reconstruction of the input image, and then iterative optimization is performed in the direction described in the text. The optimization process will calculate the ROI CLIP loss ( The entire iterative optimization process can be seen in the following example, in which the guide text is "Jocker, Heath Ledger" (Joker, Heath Ledger) . Vectorization Vector graphics can be defined as a collection of vector elements, where each vector element is controlled by a series of parameters. The parameters of the vector element depend on its type. Taking a filled curve as an example, its parameters are , where is the control Point parameters, are parameters for RGB color and opacity. There are some natural constraints when optimizing vector elements. For example, the color inside an element is always consistent, and the topological relationship between its control points is also fixed. These features make up for CLIP's lack of detailed constraints and can greatly enhance the robustness of the optimization process. Theoretically, CLIPVG can be vectorized using any existing method. But research has found that doing so can lead to several problems with subsequent image editing. First of all, the usual vectorization method can ensure that the adjacent vector elements of the image are perfectly aligned in the initial state, but each element will move with the optimization process, causing "cracks" to appear between the elements. 
Secondly, sometimes the input image is relatively simple and only requires a small number of vector elements to fit, while the effect of text description requires more complex details to express, resulting in the lack of necessary raw materials (vector elements) during image editing. In response to the above problems, CLIPVG proposed a multi-round vectorization strategy. In each round, existing methods will be called to obtain a vectorized result, which will be superimposed in sequence. Each round improves accuracy relative to the previous round, i.e. vectorizes with smaller blocks of vector elements. The figure below reflects the difference in different precisions during vectorization. The set of vector elements obtained by the i-th round of vectorization can be expressed as Loss function Similar to StyleGAN-NADA and CLIPstyler, CLIPVG uses a directional CLIP loss to Measures the correspondence between generated images and description text, which is defined as follows, where Ai is the i-th ROI area, which is The total loss is the sum of ROI CLIP losses in all areas, that is, Here is the one The region can be a ROI, or a patch cropped from the ROI. CLIPVG will optimize the vector parameter set Θ based on the above loss function. When optimizing, you can also target only a subset of Θ, such as shape parameters, color parameters, or some vector elements corresponding to a specific area. In the experimental part, CLIPVG first verified the effectiveness of multiple rounds of vectorization strategies and vector domain optimization through ablation experiments, and then compared it with the existing baseline A comparison was made, and unique application scenarios were finally demonstrated. Ablation experiment The study first compared the multi-round vectorization (Multi-round) strategy and only one-round vectorization (One- shot) effect. The first line in the figure below is the initial result after vectorization, and the second line is the edited result. 
where Nc represents the accuracy of vectorization. It can be seen that multiple rounds of vectorization not only improve the reconstruction accuracy of the initial state, but also effectively eliminate the cracks between vector elements after editing and enhance the performance of details. #In order to further study the characteristics of vector domain optimization, the paper compares CLIPVG (vector domain method) and CLIPstyler (pixel domain method) using different patch sizes The effect of enhancement. The first line in the figure below shows the effect of CLIPVG using different patch sizes, and the second line shows the effect of CLIPstyler. Its textual description is "Doctor Strange". The resolution of the entire image is 512x512. It can be seen that when the patch size is small (128x128 or 224x224), both CLIPVG and CLIPstyler will display the representative red and blue colors of "Doctor Strange" in small local areas, but the semantics of the entire face do not change significantly. . This is because the CLIP guidance at this time is not applied to the entire image. When CLIPVG increases the patch size to 410x410, you can see obvious changes in character identity, including hairstyles and facial features, which are effectively edited according to text descriptions. If patch enhancement is removed, the semantic editing effect and detail clarity will be reduced, indicating that patch enhancement still has a positive effect. Unlike CLIPVG, CLIPstyler still cannot change the character's identity when the patch is larger or the patch is removed, but only changes the overall color and some local textures. The reason is that the method of enlarging the patch size in the pixel domain loses the underlying constraints and falls into a local optimum. 
This set of comparisons shows that CLIPVG can effectively utilize the constraints on details in the vector domain and achieve high-level semantic editing combined with the larger CLIP scope (patch size), which is difficult to achieve with pixel domain methods. Comparative experiment In the comparative experiment, the study first used CLIPVG and two methods to edit any picture. The pixel domain methods were compared, including Disco Diffusion and CLIPstyler. As you can see in the figure below, for the example of "Self-Portrait of Vincent van Gogh", CLIPVG can edit the character identity and painting style at the same time, while the pixel domain method only can achieve one of them. For "Gypsophila", CLIPVG can edit the number and shape of petals more accurately than the baseline method. In the examples of "Jocker, Heath Ledger" and "A Ford Mustang", CLIPVG can also robustly change the overall semantics. Relatively speaking, Disco Diffusion is prone to local flaws, while CLIPstyler generally only adjusts the texture and color. (Top down: Van Gogh painting, gypsophila, Heath Ledger Joker , Ford Mustang) #The researchers then compared pixel domain methods for images in specific fields (taking human faces as an example), including StyleCLIP, DiffusionCLIP and StyleGAN-NADA. Due to the restricted scope of use, the generation quality of these baseline methods is generally more stable. In this set of comparisons, CLIPVG still shows that the effect is not inferior to existing methods, especially the degree of consistency with the target text is often higher. (Top to bottom: Doctor Strange, White Walkers, Zombies) Using the characteristics of vector graphics and ROI-level loss functions, CLIPVG can support a series of innovative gameplay that are difficult to achieve with existing methods. For example, the editing effect of the multi-person picture shown at the beginning of this article is achieved by defining different ROI level text descriptions for different characters. 
The left side of the picture below is the input, the middle is the editing result of the ROI level text description, and the right side is the result of the entire picture having only one overall text description. The descriptions corresponding to areas A1 to A7 are 1. "Justice League Six", 2. "Aquaman", 3. "Superman", 4. "Wonder Woman" ), 5. "Cyborg" (Cyborg), 6. "Flash, DC Superhero" (The Flash, DC) and 7. "Batman" (Batman). It can be seen that the description at the ROI level can be edited separately for each character, but the overall description cannot generate effective individual identity characteristics. Since the ROIs overlap with each other, it is difficult for existing methods to achieve the overall coordination of CLIPVG even if each character is edited individually. CLIPVG can also achieve a variety of special editing effects by optimizing some vector parameters. The first line in the image below shows the effect of editing only a partial area. The second line shows the font generation effect of locking the color parameters and optimizing only the shape parameters. The third line is the opposite of the second line, achieving the purpose of recoloring by optimizing only the color parameters. (Top-down: sub-area editing, font stylization, image color change)Vector image generation

in the figure below) based on the area range and associated text of each ROI, and optimize each vector element according to the gradient, including color parameters and shape parameters.

in the figure below) based on the area range and associated text of each ROI, and optimize each vector element according to the gradient, including color parameters and shape parameters.

, and the results produced by all rounds The set of vector elements obtained after superposition is denoted as

, and the results produced by all rounds The set of vector elements obtained after superposition is denoted as  , which is the total optimization object of CLIPVG.

, which is the total optimization object of CLIPVG.

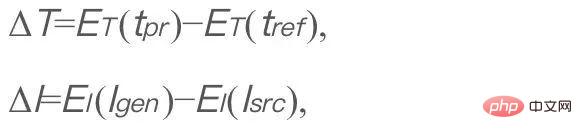

represents the input text description.

represents the input text description.  is a fixed reference text, set to "photo" in CLIPVG, and

is a fixed reference text, set to "photo" in CLIPVG, and  is the generated image (the object to be optimized).

is the generated image (the object to be optimized).  is the original image.

is the original image.  and

and  are the text and image codecs of CLIP respectively. ΔT and ΔI represent the latent space directions of text and image respectively. The purpose of optimizing this loss function is to make the semantic change direction of the image after editing conform to the description of the text. The fixed t_ref is ignored in subsequent formulas. In CLIPVG, the generated image is the result of differentiable rendering of vector graphics. In addition, CLIPVG supports assigning different text descriptions to each ROI. At this time, the directional CLIP loss will be converted into the following ROI CLIP loss,

are the text and image codecs of CLIP respectively. ΔT and ΔI represent the latent space directions of text and image respectively. The purpose of optimizing this loss function is to make the semantic change direction of the image after editing conform to the description of the text. The fixed t_ref is ignored in subsequent formulas. In CLIPVG, the generated image is the result of differentiable rendering of vector graphics. In addition, CLIPVG supports assigning different text descriptions to each ROI. At this time, the directional CLIP loss will be converted into the following ROI CLIP loss,

The associated text description. R is a differentiable vector renderer, and R(Θ) is the entire rendered image.

The associated text description. R is a differentiable vector renderer, and R(Θ) is the entire rendered image.  is the entire input image.

is the entire input image.  represents a cropping operation, which means cropping the area

represents a cropping operation, which means cropping the area  from image I. CLIPVG also supports a patch-based enhancement scheme similar to that in CLIPstyler, that is, multiple patches can be further randomly cropped from each ROI, and the CLIP loss is calculated for each patch based on the text description corresponding to the ROI.

from image I. CLIPVG also supports a patch-based enhancement scheme similar to that in CLIPstyler, that is, multiple patches can be further randomly cropped from each ROI, and the CLIP loss is calculated for each patch based on the text description corresponding to the ROI.

is the loss weight corresponding to each area.

is the loss weight corresponding to each area. Experimental results
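Because the formulas in this article survive only as images, the directional CLIP loss, the ROI CLIP loss, and the total loss described above can be written out roughly as follows. This is a reconstruction from the prose definitions, not a copy of the paper's equations. The symbols $t_{ref}$, $\Delta T$, $\Delta I$, $A_i$, $R$, and $\Theta$ come from the text; the names $t$, $I_{gen}$, $I_{src}$, $E_T$, $E_I$, $C$, $t_i$, and $w_i$, and the cosine-distance form of the directional loss (the form commonly used with StyleGAN-NADA), are assumptions.

```latex
\begin{aligned}
\Delta T &= E_T(t) - E_T(t_{ref}), \qquad
\Delta I = E_I(I_{gen}) - E_I(I_{src}), \\
\mathcal{L}_{dir}(I_{gen}, I_{src}, t) &= 1 - \frac{\Delta I \cdot \Delta T}{\lVert \Delta I \rVert \, \lVert \Delta T \rVert}, \\
\mathcal{L}_{ROI}(\Theta, A_i, t_i) &= \mathcal{L}_{dir}\bigl( C(R(\Theta), A_i),\; C(I_{src}, A_i),\; t_i \bigr), \\
\mathcal{L}_{total}(\Theta) &= \sum_i w_i \, \mathcal{L}_{ROI}(\Theta, A_i, t_i).
\end{aligned}
```

Here $C(I, A_i)$ stands for cropping area $A_i$ from image $I$, and the sum runs over all areas, each of which may be an ROI or a patch cropped from an ROI.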

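As a rough illustration of how the ROI-level loss described above fits together, the sketch below computes a weighted sum of per-ROI directional losses in plain Python. It is not the authors' implementation: the function names, the `(top, left, height, width)` ROI layout, and the small epsilon in the cosine are all assumptions made for the example, and the CLIP encoders are replaced by caller-supplied functions (`encode_image`, `encode_text`) so the code runs without any model. In CLIPVG the rendered image would come from the differentiable renderer and the loss would be backpropagated to the vector parameters, which this sketch does not attempt.

```python
import math


def sub(a, b):
    """Element-wise difference of two equal-length vectors."""
    return [x - y for x, y in zip(a, b)]


def cosine(a, b, eps=1e-8):
    """Cosine similarity; eps (an assumption) avoids division by zero."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b + eps)


def directional_loss(e_gen, e_src, e_text, e_ref):
    """1 - cos(delta_I, delta_T), computed on precomputed embeddings."""
    delta_i = sub(e_gen, e_src)    # image direction in latent space
    delta_t = sub(e_text, e_ref)   # text direction in latent space
    return 1.0 - cosine(delta_i, delta_t)


def crop(image, roi):
    """Cut roi = (top, left, height, width) out of a row-major 2D list."""
    top, left, height, width = roi
    return [row[left:left + width] for row in image[top:top + height]]


def total_loss(rendered, source, rois, encode_image, encode_text,
               ref_text="photo"):
    """Weighted sum of per-ROI directional losses.

    rois is a list of (roi, text, weight) triples; encode_image and
    encode_text are hypothetical stand-ins for the CLIP encoders.
    """
    e_ref = encode_text(ref_text)
    loss = 0.0
    for roi, text, weight in rois:
        e_gen = encode_image(crop(rendered, roi))
        e_src = encode_image(crop(source, roi))
        loss += weight * directional_loss(e_gen, e_src,
                                          encode_text(text), e_ref)
    return loss
```

Passing the encoders in as arguments keeps the sketch independent of any particular CLIP package. Patch enhancement could be layered on top by adding randomly sampled sub-rectangles of each ROI to `rois` with the same text.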
