DAMO Academy's open source low-cost large-scale classification framework FFC

Paper link: https://arxiv.org/pdf/2105.10375.pdf

Application & Code:

- https://www.php.cn/link/c42af2fa7356818e0389593714f59b52

- https://www.php.cn/link/60a6c4002cc7b29142def8871531281a

Background

Image classification is one of the most successful practical applications of AI today and has become part of everyday life. It is used across most computer vision tasks, such as image classification, image search, OCR, content moderation, and identity recognition and authentication. There is a general consensus that the larger the dataset and the more IDs it contains, the better a properly trained classifier performs. However, with tens of millions or even hundreds of millions of IDs, today's popular deep learning frameworks struggle to train such ultra-large-scale classifiers directly at low cost.

The most intuitive solution is to add more GPUs through clustering, but even then, classification over massive numbers of IDs still faces the following problems:

1) Cost: with massive data, a distributed training framework consumes substantial resources for memory, multi-machine communication, and data storage and loading.

2) Long tail: in real-world scenarios, when a dataset reaches hundreds of millions of IDs, most IDs contain very few image samples, so the data follows a long-tail distribution. Training directly on such data rarely achieves good results.

The rest of this article reviews existing solutions for ultra-large-scale classification and then describes the principles and tricks of the low-cost classification framework FFC.

Method

Before introducing the method, this article first reviews the main challenges of current ultra-large-scale classification:

Challenge 1: Cost remains high

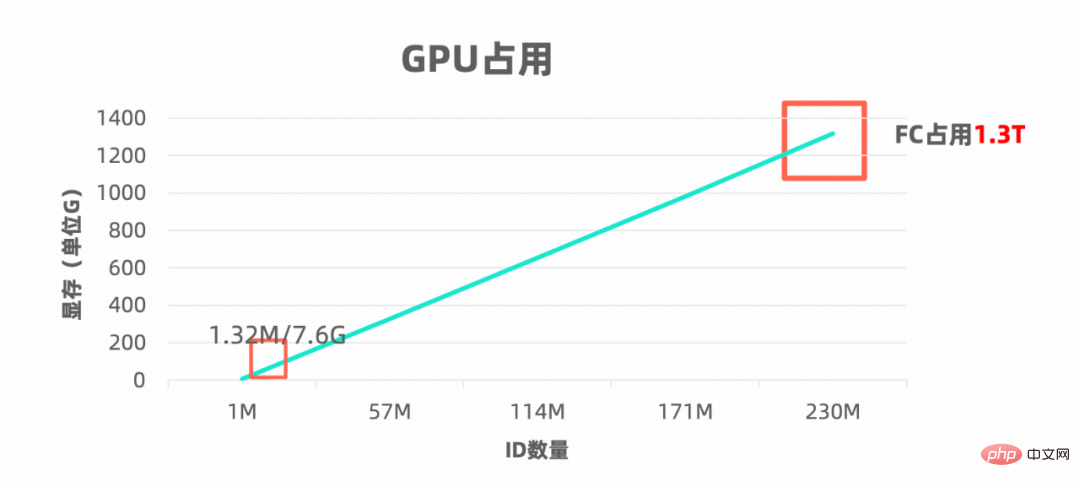

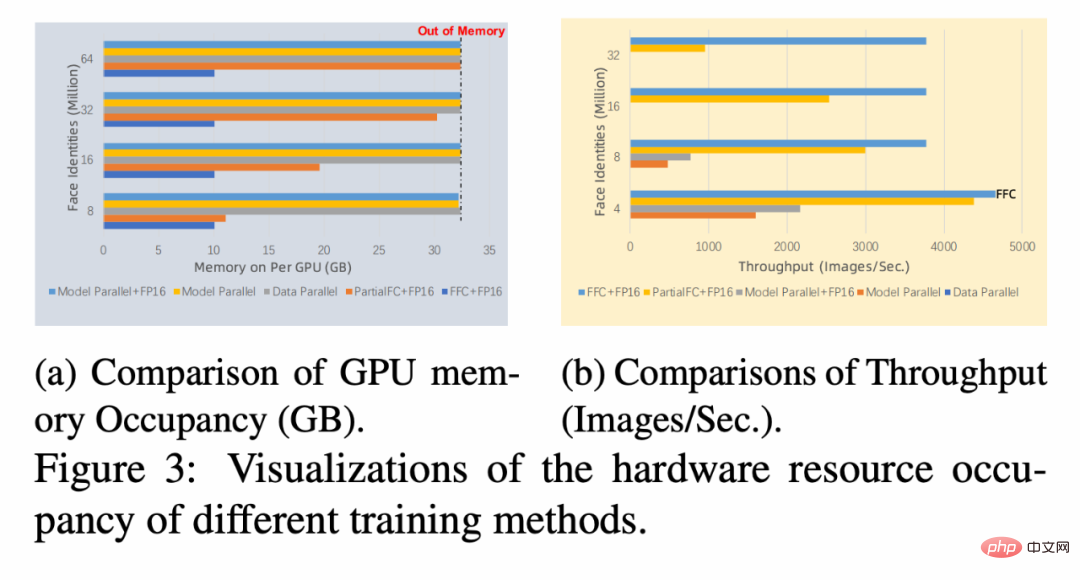

The more IDs there are, the more memory the classifier requires, as the figures illustrate.

More GPU memory means more GPUs and higher cost, and the hardware infrastructure for multi-machine collaboration is more expensive as well. Moreover, once the number of classification IDs reaches an extremely large scale, most of the computation is spent in the final classifier layer, and the time consumed by the backbone becomes negligible.
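To make the scaling concrete, the sketch below estimates the memory held by the FC classifier alone. It assumes a 512-dimensional embedding, fp32 parameters, and an optimizer that keeps one extra buffer per parameter (for example SGD with momentum); these are illustrative assumptions, not figures from the paper.

```python
def fc_weight_memory_gb(num_ids: int, embed_dim: int = 512,
                        bytes_per_param: int = 4) -> float:
    """Memory of the FC weight matrix (num_ids x embed_dim) in GB (1e9 bytes)."""
    return num_ids * embed_dim * bytes_per_param / 1e9


def fc_training_memory_gb(num_ids: int, embed_dim: int = 512,
                          bytes_per_param: int = 4, copies: int = 3) -> float:
    """Weights + gradients + one optimizer buffer (e.g. SGD momentum) = 3 copies."""
    return copies * fc_weight_memory_gb(num_ids, embed_dim, bytes_per_param)


for n in (1_000_000, 10_000_000, 100_000_000):
    print(f"{n:>11,} IDs: weights {fc_weight_memory_gb(n):6.1f} GB, "
          f"training state {fc_training_memory_gb(n):6.1f} GB")
```

Under these assumptions, 100 million IDs already need about 205 GB for the classifier weights alone, before counting activations, logits, or the backbone.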

Challenge 2: Long-tail learning is difficult

In real-world scenarios, the vast majority of hundreds of millions of IDs have very few image samples. The long-tail distribution is pronounced, which makes direct training hard to converge. If all samples are weighted equally, long-tail samples are overwhelmed and insufficiently learned, so techniques for imbalanced samples are generally used. There are many methods on this research topic to draw on; the question is which one is best suited to integrate into a simple ultra-large-scale classification framework.

With these two challenges in mind, let's first look at the feasible solutions that already exist and whether they address both challenges well.

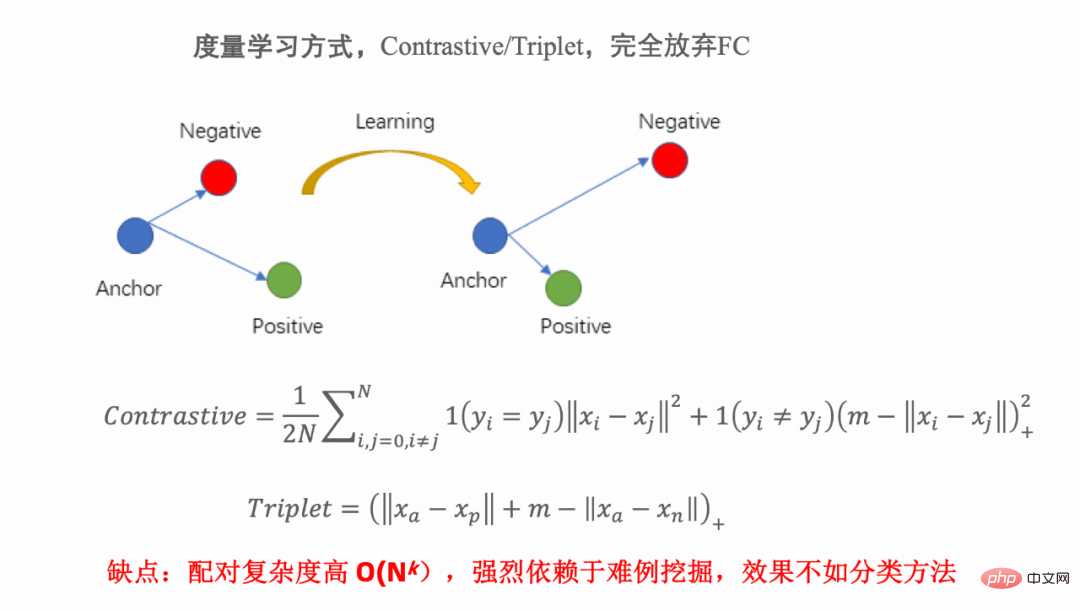

Feasible method 1: metric learning

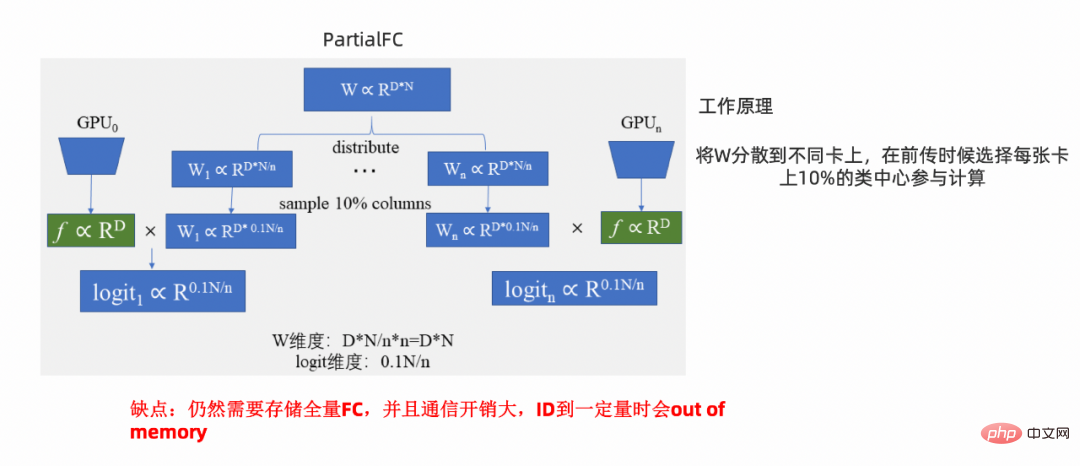

Feasible method 2: PFC framework

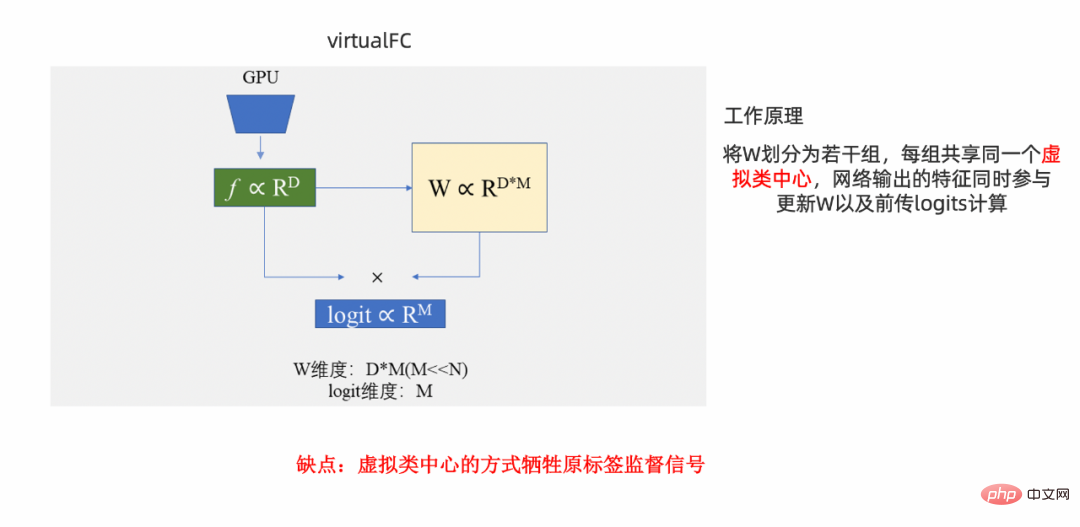

Feasible method 3: VFC framework

Method of this paper: FFC framework

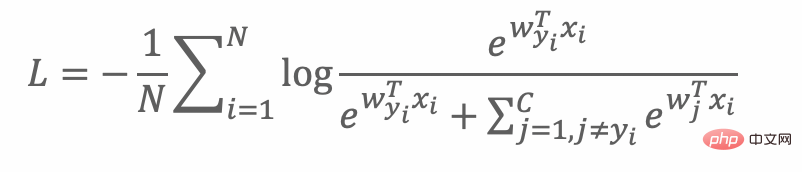

When an FC layer is used to train large-scale classification, the loss function is as follows:

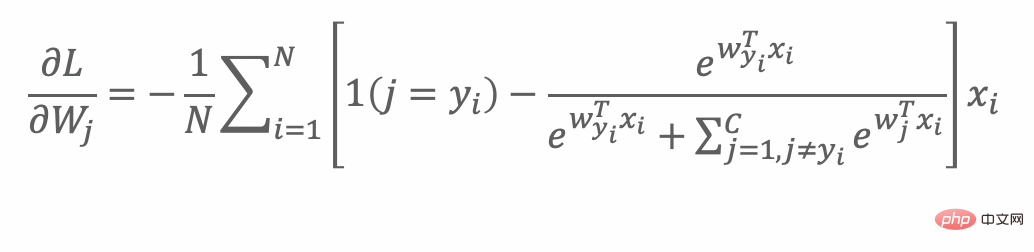
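For reference, a standard softmax cross-entropy over an FC classifier can be written as below. The notation is an assumption chosen to match the V_j used later: x_i is the feature of sample i, y_i its label, V_j the class center (FC weight row) of class j, C the number of classes, and N the batch size. The loss in the figure may additionally normalize features or add a margin, which this form omits.

```latex
\mathcal{L} = -\frac{1}{N}\sum_{i=1}^{N}\log\frac{\exp\left(V_{y_i}^{\top}x_i\right)}{\sum_{j=1}^{C}\exp\left(V_j^{\top}x_i\right)}
```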

In each backpropagation step, all class centers are updated:

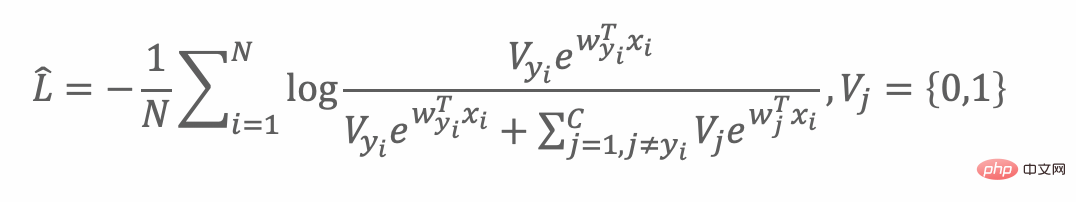
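With a plain softmax loss, the gradient for class center V_j is (1/N) Σ_i (p_ij - 1[j = y_i]) x_i, where p_ij is the softmax probability of class j for sample i. Since p_ij is never exactly zero, every center almost surely receives a nonzero gradient, including classes absent from the batch. The NumPy sketch below checks this on toy sizes with random data; it omits any normalization or margin a production loss may add.

```python
import numpy as np


def softmax_ce_grad_wrt_centers(x, V, y):
    """Gradient of mean softmax cross-entropy w.r.t. the class-center matrix V.

    x: (N, d) features, V: (C, d) class centers, y: (N,) integer labels.
    dL/dV_j = (1/N) * sum_i (p_ij - 1[j == y_i]) * x_i
    """
    logits = x @ V.T                                # (N, C)
    logits -= logits.max(axis=1, keepdims=True)     # numerical stability
    p = np.exp(logits)
    p /= p.sum(axis=1, keepdims=True)               # softmax probabilities
    p[np.arange(len(y)), y] -= 1.0                  # subtract one-hot labels
    return p.T @ x / len(y)                         # (C, d)


rng = np.random.default_rng(0)
N, d, C = 4, 8, 10
x = rng.standard_normal((N, d))
V = rng.standard_normal((C, d))
y = np.array([0, 1, 2, 3])                          # classes 4..9 are not in the batch
grad = softmax_ce_grad_wrt_centers(x, V, y)
# Count class centers whose gradient row is nonzero.
print(np.count_nonzero(np.abs(grad).sum(axis=1)))
```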

However, the FC layer is too large. The intuitive idea is to select a reasonable proportion of the class centers, so that only that subset of the V_j is updated.
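One way to realize this idea is class-center sampling in the spirit of Partial FC (feasible method 2): keep the centers of every class present in the batch and fill the remaining budget with randomly chosen negative classes. The sketch below is a hypothetical NumPy illustration of that sampling step, not FFC's own mechanism; `ratio` and the function name are assumptions.

```python
import numpy as np


def sample_class_centers(batch_labels, num_classes, ratio=0.1, rng=None):
    """Pick the class-center indices used (and updated) in this step.

    Keeps every class in the batch (positives) and fills the rest of the
    budget with uniformly sampled negative classes.
    """
    rng = np.random.default_rng() if rng is None else rng
    positives = np.unique(batch_labels)
    budget = max(int(num_classes * ratio), len(positives))
    mask = np.ones(num_classes, dtype=bool)
    mask[positives] = False
    negatives = rng.choice(np.flatnonzero(mask), size=budget - len(positives),
                           replace=False)
    selected = np.concatenate([positives, negatives])
    # Remap labels to positions within `selected`, so the loss can use V[selected].
    remap = {int(c): k for k, c in enumerate(selected)}
    new_labels = np.array([remap[int(c)] for c in batch_labels])
    return selected, new_labels


rng = np.random.default_rng(0)
labels = np.array([3, 7, 3, 42])
selected, new_labels = sample_class_centers(labels, num_classes=1000, ratio=0.05, rng=rng)
print(len(selected), new_labels)  # 50 [0 1 0 2]
```

Only the rows V[selected] enter the softmax, so each step touches roughly ratio × C centers instead of all C.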

This motivation leads to the following preliminary scheme:

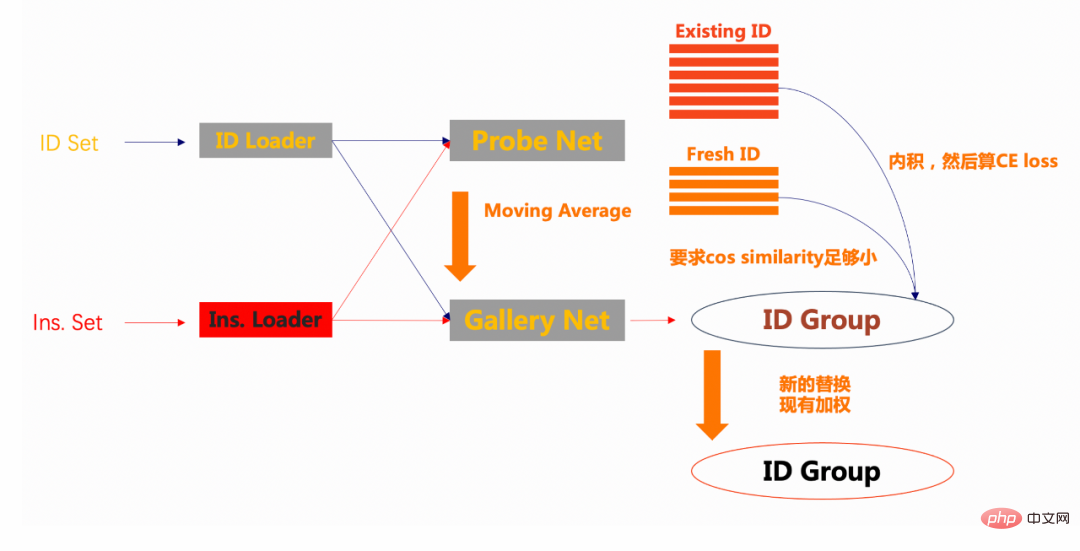

First, to mitigate the long tail, this article introduces two loaders: id_loader, which samples by ID, and instance_loader, which samples by instance. In each epoch, both classes with many samples and classes with few samples (few-shot) get a chance to be trained.
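The difference between the two sampling schemes shows up clearly on a toy long-tailed dataset. In the sketch below (pure Python; function names are hypothetical), instance_loader draws samples uniformly, while id_loader draws IDs uniformly and then one sample per ID. How FFC mixes the two loaders within a step is not specified here; taking half a batch from each would be one assumption.

```python
import random
from collections import defaultdict


def build_index(labels):
    """Map each ID to the indices of the samples that carry it."""
    by_id = defaultdict(list)
    for idx, label in enumerate(labels):
        by_id[label].append(idx)
    return by_id


def instance_loader(labels, batch_size, rng):
    """Instance-based sampling: every sample equally likely, so head IDs dominate."""
    return rng.sample(range(len(labels)), batch_size)


def id_loader(by_id, batch_size, rng):
    """ID-based sampling: pick IDs uniformly, then one sample per ID."""
    ids = rng.sample(sorted(by_id), batch_size)
    return [rng.choice(by_id[i]) for i in ids]


rng = random.Random(0)
labels = [0] * 900 + list(range(1, 101))   # one head ID, 100 one-shot tail IDs
by_id = build_index(labels)
tail_inst = sum(labels[i] != 0 for _ in range(100)
                for i in instance_loader(labels, 20, rng))
tail_id = sum(labels[i] != 0 for _ in range(100)
              for i in id_loader(by_id, 20, rng))
print(f"tail hits out of 2000 draws: instance {tail_inst}, id {tail_id}")
```

Here about 10% of instance_loader draws hit tail IDs, versus about 99% for id_loader.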

Second, before training starts, part of the samples are sent to the ID group; here, 10% of the IDs' samples are assumed to be placed in the group. At this point, the gallery net uses random parameters.
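A minimal sketch of the ID group, assuming it is a fixed-capacity store of one L2-normalized feature per ID with first-in-first-out eviction. The eviction policy, the class name, and the single random linear layer standing in for the randomly initialized gallery net are all assumptions. It seeds the group with 10% of the IDs, as described above.

```python
from collections import OrderedDict

import numpy as np


class IDGroup:
    """Fixed-capacity store of one class center per ID (hypothetical sketch).

    Assumption: when full, the ID that was inserted first is evicted (FIFO).
    """

    def __init__(self, capacity=30_000):
        self.capacity = capacity
        self.centers = OrderedDict()          # id -> L2-normalized feature

    def __contains__(self, identity):
        return identity in self.centers

    def __len__(self):
        return len(self.centers)

    def put(self, identity, feature):
        # Reassigning an existing key keeps its original insertion position.
        self.centers[identity] = feature / np.linalg.norm(feature)
        while len(self.centers) > self.capacity:
            self.centers.popitem(last=False)  # drop the oldest inserted ID


rng = np.random.default_rng(0)
num_ids, in_dim, feat_dim = 1000, 256, 128
gallery_w = rng.standard_normal((in_dim, feat_dim))  # stand-in for random gallery weights


def gallery_net(image):
    """Hypothetical gallery net: a single random linear projection."""
    return image @ gallery_w


group = IDGroup(capacity=300)
for identity in rng.choice(num_ids, size=num_ids // 10, replace=False):  # 10% of IDs
    image = rng.standard_normal(in_dim)      # placeholder for one sample of this ID
    group.put(int(identity), gallery_net(image))
print(len(group))  # 100
```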

Then, once training starts, batches enter the probe net one by one. Each sample in a batch falls into one of two cases: 1.) the group already contains a feature with the same ID as the sample, or 2.) the group contains no feature of the same ID. These two cases are called existing IDs and fresh IDs, respectively. For an existing sample, take the inner product of its feature with the features in the group, compute the cross-entropy loss against the label, and backpropagate. For a fresh sample, minimize its cosine similarity with the features in the group.
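The two per-sample cases can be sketched as follows. This NumPy version only computes the loss values (a real implementation would use an autograd framework). The `temperature` scale and the use of the mean cosine similarity as the fresh-ID penalty are assumptions, since the text does not specify them.

```python
import numpy as np


def l2n(a):
    """L2-normalize along the last axis."""
    return a / np.linalg.norm(a, axis=-1, keepdims=True)


def group_losses(probe_feats, labels, group_ids, group_centers, temperature=0.1):
    """Loss values for existing-ID and fresh-ID samples in one batch (sketch).

    group_ids: IDs currently in the group; group_centers: (G, d), L2-normalized.
    """
    labels = [int(lab) for lab in labels]
    index = {g: k for k, g in enumerate(group_ids)}
    sims = l2n(probe_feats) @ group_centers.T          # (B, G) cosine similarities
    existing = np.array([lab in index for lab in labels])

    ce = 0.0
    if existing.any():
        # Existing IDs: softmax over group centers, target = the sample's own center.
        logits = sims[existing] / temperature
        logits -= logits.max(axis=1, keepdims=True)
        log_p = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
        targets = [index[lab] for lab, e in zip(labels, existing) if e]
        ce = float(-log_p[np.arange(len(targets)), targets].mean())

    fresh = 0.0
    if (~existing).any():
        # Fresh IDs have no positive center: penalize similarity to all centers.
        fresh = float(sims[~existing].mean())
    return ce, fresh


rng = np.random.default_rng(0)
group_ids = [10, 11, 12, 13, 14]
centers = l2n(rng.standard_normal((5, 16)))
feats = rng.standard_normal((4, 16))
ce, fresh = group_losses(feats, [10, 11, 99, 12], group_ids, centers)  # ID 99 is fresh
print(f"existing-ID CE {ce:.3f}, fresh-ID mean cosine {fresh:.3f}")
```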

Finally, the features in the group are updated and replaced with new class centers, weighted together with the existing class centers. The gallery net's parameters are gradually updated from the probe net with a moving-average strategy.
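The weighted center update might look like the sketch below: an existing ID's center is blended with the new gallery feature and renormalized, while a fresh ID is inserted directly. The blending weight and the function names are hypothetical.

```python
import numpy as np


def update_center(old_center, new_feature, weight=0.9):
    """Blend an existing class center with a new gallery feature, then renormalize.

    weight: hypothetical share kept from the existing center.
    """
    new_feature = new_feature / np.linalg.norm(new_feature)
    blended = weight * old_center + (1.0 - weight) * new_feature
    return blended / np.linalg.norm(blended)


def refresh_group(group, ids, gallery_feats, weight=0.9):
    """Existing IDs are blended with their old center; fresh IDs are inserted directly."""
    for identity, feat in zip(ids, gallery_feats):
        if identity in group:
            group[identity] = update_center(group[identity], feat, weight)
        else:
            group[identity] = feat / np.linalg.norm(feat)
    return group


group = {1: np.array([1.0, 0.0])}
refresh_group(group, [1, 2], np.array([[0.0, 1.0], [3.0, 4.0]]))
print(group[1], group[2])  # ID 1 tilts slightly toward [0, 1]; ID 2 becomes [0.6, 0.8]
```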

Method of this paper: tricks

1.) The size of the ID group is an adjustable parameter, which defaults to 30,000.

2.) To stabilize training, a moving average is introduced, following MoCo-style methods. The corresponding convergence conditions are:
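A MoCo-style moving average updates each gallery parameter as θ_g ← m·θ_g + (1 - m)·θ_p. The sketch below holds the probe parameters fixed to show that the gallery approaches them geometrically, as 1 - m^k after k steps. The momentum m and the parameter dictionary are illustrative, and the sketch does not reproduce the paper's convergence conditions. In PyTorch the same loop would typically run under `torch.no_grad()` over `zip(gallery.parameters(), probe.parameters())`.

```python
import numpy as np


def momentum_update(gallery_params, probe_params, m=0.999):
    """MoCo-style EMA, in place: gallery <- m * gallery + (1 - m) * probe."""
    for name, p in probe_params.items():
        gallery_params[name] *= m
        gallery_params[name] += (1.0 - m) * p


probe = {"w": np.ones(3)}
gallery = {"w": np.zeros(3)}
for _ in range(50):
    momentum_update(gallery, probe, m=0.9)
print(gallery["w"])  # each entry is 1 - 0.9**50, about 0.995
```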

Experimental results

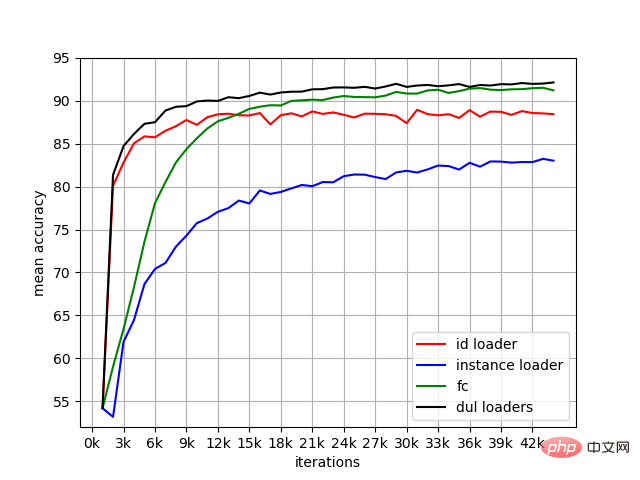

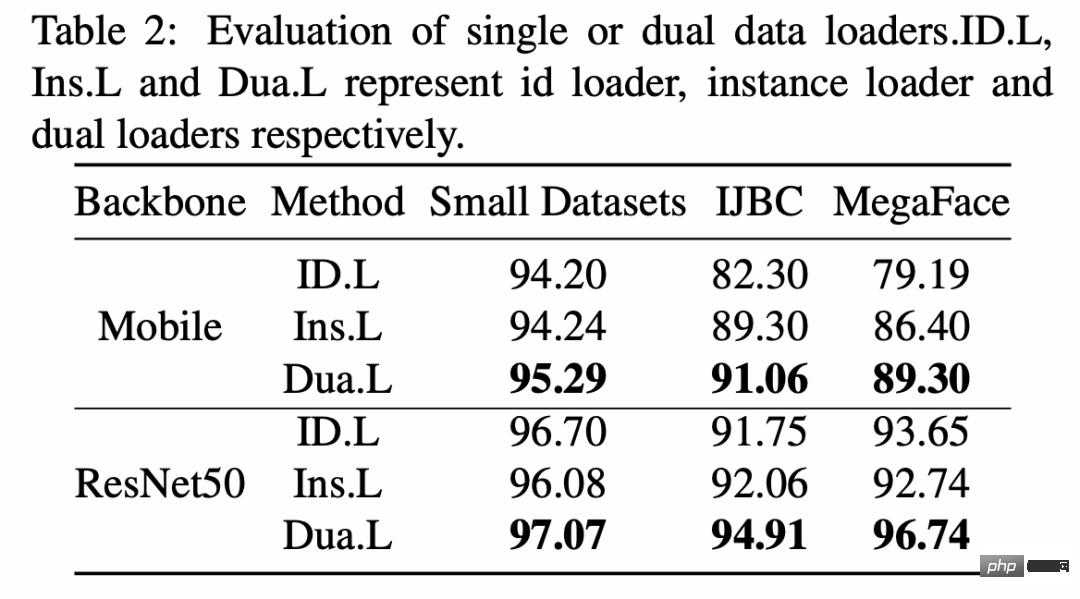

1. Dual-loader ablation experiment

2. Comparison with SOTA methods

3. Comparison of GPU memory and sample throughput
