ChatGPT is racing ahead; where are our seat belts?

Since its release on November 30 last year, OpenAI’s ChatGPT has swept through many areas of society in just three months: ordinary people use it as a search engine, office workers use it to write copy and take meeting minutes, and programmers use it to write code.

More worryingly, students are also using it to do their homework. A recent survey reported that nearly 90% of students in the United States are already using ChatGPT for homework, and one paper generated by ChatGPT even ranked first in its class. For teachers, the change has come too suddenly; before they could work out countermeasures, some schools simply banned it.

Some companies have banned ChatGPT as well, including Wall Street financial institutions such as Citigroup, Goldman Sachs, and JPMorgan Chase, which worry that employees will leak sensitive company information when using it.

These rushed responses reflect a problem: our society is not yet ready for generative AI models such as ChatGPT, and discussion of the related issues needs to be put on the agenda. Perhaps this is also why the "Youzhizhi: AI Safety and Ethical Insights Theme Forum" at the recently held Global Artificial Intelligence Developer Pioneer Conference was packed.

Although the theme of the forum was "AI Safety and Ethical Insights", the guests' discussion was not limited to it. The topics ranged from the impact of generative AI models such as ChatGPT on basic research, to issues in applying them, to ideas for training talent in the new era. Through this forum, the participants tried to outline an answer to one question: now that the AIGC era has arrived, how should we respond?

What inspiration and challenges does ChatGPT bring to scientific research?

In January this year, Turing Award winner Yann LeCun became a trending topic because of his harsh evaluation of ChatGPT. In his view, "as far as the underlying technology is concerned, ChatGPT has no special innovation," nor is it "something revolutionary." It can only be regarded as a decent piece of engineering.

"Whether ChatGPT is revolutionary" is a controversial topic. But there is no doubt that it is indeed an engineering masterpiece. At the scene, Academician of the Chinese Academy of Sciences E Weinan also pointed out this point.

He also emphasized that this masterpiece is the result of OpenAI’s step-by-step verification and concentrated investment. The "concentrated investment" is especially important: OpenAI’s success shows that the earlier "small workshop, project-based" approach to AI empowerment is becoming history, and that "AI engineering and platformization" are becoming an important foundation for unlocking the dividends of AI technology. If we can adapt to this change, the success of AI in natural language can be expected to be replicated in basic scientific research. This is the work Academician E Weinan is engaged in under the banner of AI for Science.

Of course, this work is not easy. It requires a concentrated effort to build platform infrastructure for basic scientific research, including data, basic software tools, computing platforms, and intelligent research platforms. Over the past few years, Academician E has led his team in this direction and released important results such as a pre-trained model of the interatomic potential energy function.

However, their work still faces many challenges: the accumulated data is noisy and comes in inconsistent formats, the software lacks a basic testing system, and the computing infrastructure cannot keep up. In Academician E’s view, this is the “darkness before dawn.” Only by daring to pool resources for original innovation can we usher in the dawn.

Beyond the natural sciences, the emergence of ChatGPT also holds lessons for researchers in the social sciences. On this point, Wu Guanjun, Dean of the School of Politics and International Relations at East China Normal University, offered valuable ideas.

Dean Wu pointed out that social science researchers should feel some sense of crisis in the face of ChatGPT. First, behind it lies a huge amount of data that anthropologists may never be able to collect through traditional fieldwork, and it can process that data in a short time. Second, it has "read" more books than a person could finish in a lifetime.

"We are facing a (technological) singularity, but many of our scholars are unaware of it," Wu Guanjun said.

The technological singularity here refers to a view, drawn from the history of technological development, that an inevitable event will occur in the future: technology will make enormous, near-infinite progress within a very short time. By then, all the knowledge, values, and rules we are familiar with will become invalid.

Therefore, he called on social scientists to work with other scientists to advance research on the era of the technological singularity. Such research should focus on "practical knowledge" (such as ethics and politics) in Aristotle's classification of knowledge, because in the other two types (productive knowledge and theoretical knowledge) AI has already begun to learn, and even to learn better than humans. In fields of practical knowledge such as politics, AI can only retell what exists and cannot create a new world. Dean Wu therefore believes that humans still have great potential in this field.

The AIGC market is about to explode; how can the application side prepare?

A few days ago, OpenAI released the long-awaited ChatGPT API at a very low price: generating one million tokens costs only $2. For the application side, this is exciting news. Meanwhile, research and development of domestic ChatGPT-like products is also accelerating, with new products due for release this month. It is foreseeable that over the next year, applications built on AIGC models will boom at home and abroad.
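To make the pricing concrete, the arithmetic can be sketched as follows. Only the rate of $2 per million tokens comes from the text above; the function name `estimate_cost_usd` and the sample token counts are illustrative assumptions, and actual billing may depend on details the article does not cover.

```python
# Rate quoted in the article: about $2 per one million tokens.
PRICE_PER_MILLION_TOKENS_USD = 2.0


def estimate_cost_usd(
    num_tokens: int,
    price_per_million: float = PRICE_PER_MILLION_TOKENS_USD,
) -> float:
    """Return the estimated cost in USD for num_tokens tokens (hypothetical helper)."""
    if num_tokens < 0:
        raise ValueError("num_tokens must be non-negative")
    return num_tokens / 1_000_000 * price_per_million


if __name__ == "__main__":
    # Sample workloads chosen purely for illustration.
    for tokens in (1_000, 50_000, 1_000_000):
        print(f"{tokens:>9,} tokens -> ${estimate_cost_usd(tokens):.4f}")
```

At this rate, cost scales linearly with volume, which is why even token-heavy applications can be prototyped cheaply.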

Yet investment on the application side does not seem to be keeping up. This is a phenomenon observed by Jiang Ying, Chairman of Deloitte China.

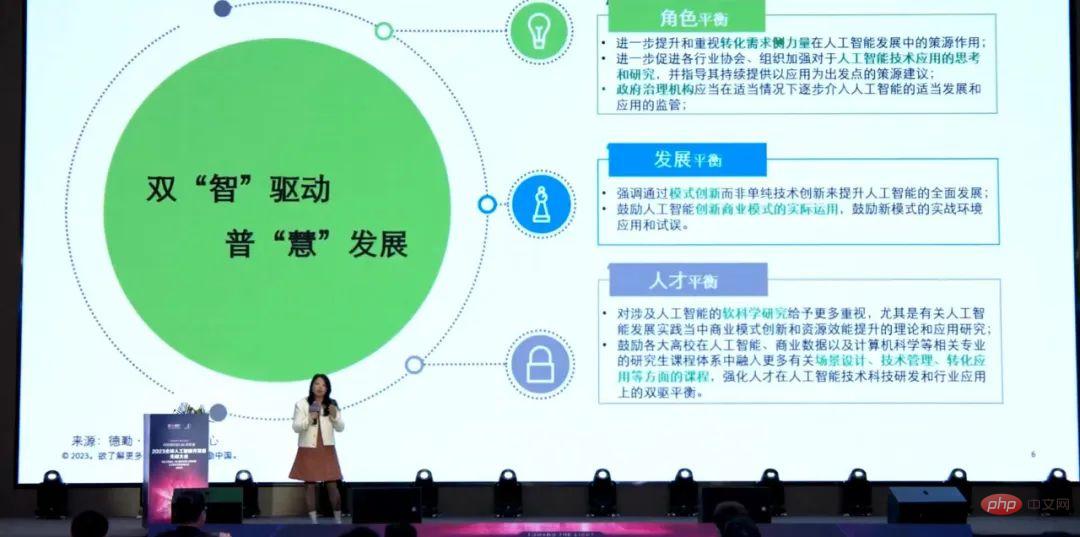

Specifically, she found that in China's AI development, investment is unbalanced between the R&D supply side and the application demand side. This imbalance shows up in three ways.

First, in terms of roles, many current decision-making bodies consist mainly of research institutions and technology companies, while parties representing application scenarios, such as industry associations, are underrepresented. Jiang Ying therefore believes we need to build a multi-party alliance that strengthens the role of demand-side forces in AI development.

Second, in terms of development, advancing AI through technological innovation has been widely discussed and well supported, but the power of business model innovation has not been fully valued. Jiang Ying therefore proposed encouraging the practical application of innovative AI business models and increasing research in this area.

Finally, in terms of talent training, many universities now offer AI-related majors, but most of them are limited to science and engineering knowledge and skills, so people who combine technical knowledge with management and application knowledge are relatively scarce. In real application scenarios, for AI to deliver economic benefits it is not enough to use it for the work of a single production line; the entire process and management structure need to be transformed. People who can meet such needs are currently very scarce.

At the application level, Tian Feng, director of SenseTime's Intelligent Industry Research Institute and deputy director of the Shanghai Artificial Intelligence Research Institute, also shared his views, but he was more concerned with the risks that arise when AI is applied, including model risks, data risks, and more.

Specifically, Tian Feng advocates using technical and management tools to improve companies' AI ethics governance capabilities. Over the past few years, SenseTime has developed an AI ethical risk governance toolbox, including digital watermarking, data desensitization, a data sandbox, and a model health-check platform, covering the complete product life cycle of design, development, deployment, and launch.

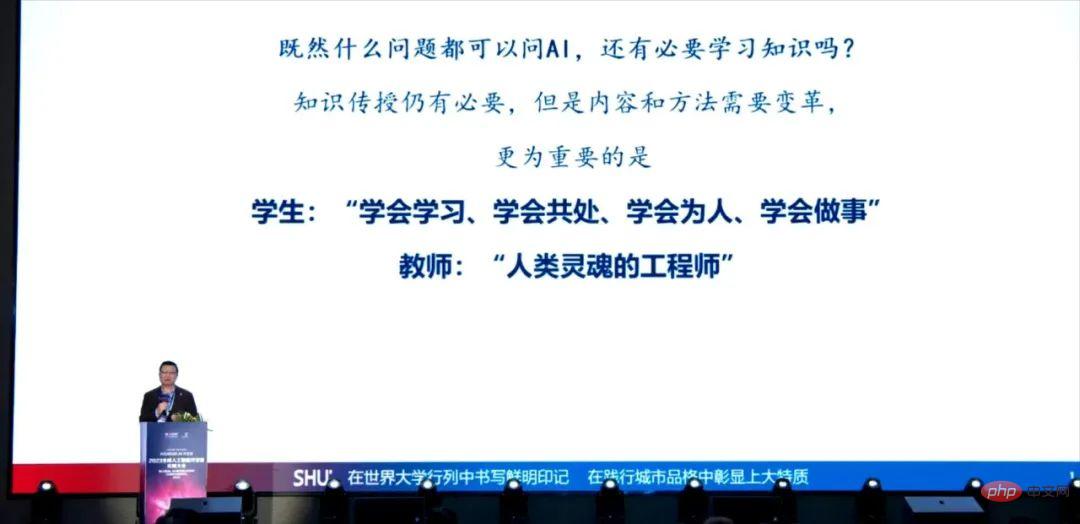

Students are using ChatGPT; what should teachers do?

At the forum, Wang Xiaofan, Vice President of Shanghai University, was asked: will Shanghai University ban students from using ChatGPT? Vice President Wang replied that he does not advocate banning it.

In his view, the emergence of AI tools such as ChatGPT has its positive side.

In teaching practice, it can give students immediate feedback and help them progress faster, much as AlphaGo helps human Go players improve. Thirty years ago, we were still looking up literature item by item in the library; 20 years ago, we began searching for it quickly online; today, ChatGPT can already help us condense literature into abstracts or reviews. This undoubtedly makes learning far more efficient.

In terms of teaching content and methods, it will force educators to reflect: what kind of knowledge should our education impart? How can we use tools like ChatGPT to make teaching and learning in higher education more efficient? After this round of reflection and change, higher education may come closer to its essence. As Einstein said, "The value of a college education is not to memorize many facts, but to train the brain to think... The top priority should always be the cultivation of the overall ability of independent thinking and judgment, rather than the acquisition of specific knowledge."

Of course, the problems ChatGPT brings to higher education cannot be avoided. In this regard, Vice President Wang proposed three tasks: first, rules to follow, that is, formulating guiding principles for the use of AIGC in teaching and research and ensuring that students and teachers understand and follow them; second, fair use, that is, supporting the reasonable use of AIGC in teaching, learning, and research while taking effective measures against academic misconduct such as plagiarism; third, teaching by example, that is, teachers acting as role models in teaching and research and integrating the fair use of AI technologies such as AIGC into courses on academic integrity and ethics.

The development of artificial intelligence cannot follow the old path of automobiles

In addition to the guests' talks, the forum produced two important results.

The first result is that the core expert members of the working group for the Shanghai standard "Information Technology Artificial Intelligence System Life Cycle Governance Guidelines" received their letters of appointment. The guidelines start from the conceptual design of the AI algorithm model and run through the complete life cycle of development, testing and evaluation, deployment, operation monitoring, and retirement, providing guidance for the governance of AI systems. This is the country's first local standard on AI system governance. It aims to promote development through standards and rules, to hold the bottom line of safety and ethics through technical means and regulatory measures, to ensure that technologies such as AIGC can fully deliver their social and economic value, and to establish the "Shanghai experience" in AI development through practical results.

The second result is the release and signing of the "2023 Shanghai Artificial Intelligence Safety Ethics Initiative". Guided by the "Shanghai Regulations on Promoting the Development of the Artificial Intelligence Industry", the Initiative calls on AI developers to "move toward the light" and seeks to ensure that the AI industry keeps advancing toward fairness and justice, content security, privacy protection, interconnection, and jointly built and shared governance. Representatives from the Shanghai Artificial Intelligence Industry Association, Shanghai Young and Middle-aged Intellectuals Association, Shanghai Lingang Group, East China Branch of the Academy of Information and Communications Technology, and the Municipal Software Development Center jointly signed the Initiative.

Why do these two things? In his speech, Vice President Wang mentioned a piece of history: the automobile was invented at the end of the 19th century, but it was not until the second half of the 20th century that countries introduced regulations requiring people to wear seat belts. One can imagine how many people died needlessly in between.

If you think about it, we are a bit like the people who first encountered cars in the 19th century: the emerging AIGC is accelerating, but there are no regulations to restrain the drivers and no seat belts for the passengers to fasten. These issues are urgent.

"Today's development of artificial intelligence obviously cannot follow the path of cars," Principal Wang said earnestly. Taking the forum as a starting point, all parties in society are exploring a new path so that we can calmly cope with the arrival of the AIGC era.
