Li Feifei's views on AIGC | Stanford HAI Perspectives Report

Stanford HAI, the institute co-directed by Li Feifei, recently released a perspectives report on generative AI.

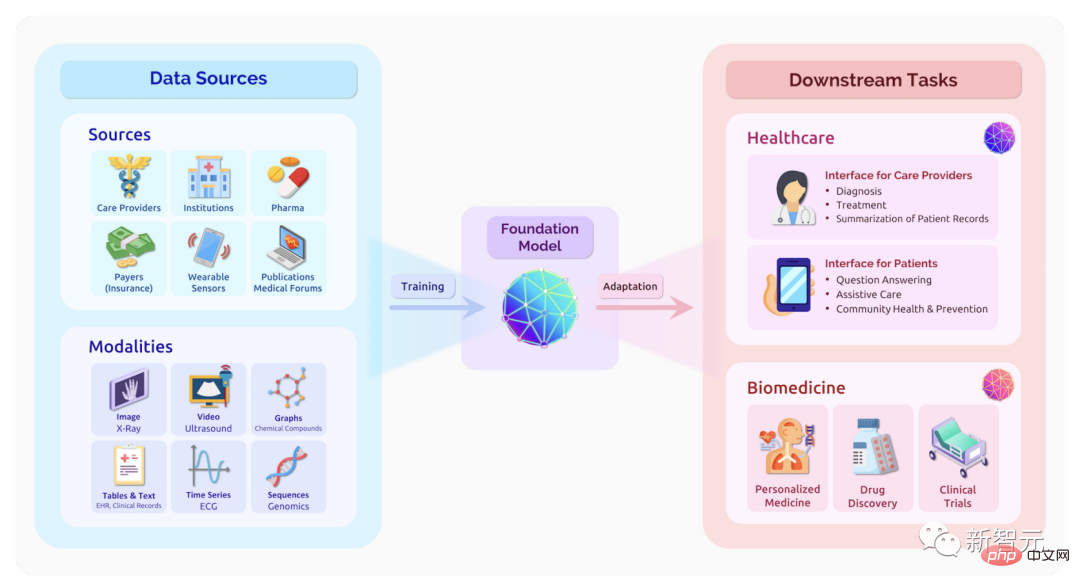

The report points out that most current generative AI is powered by foundation models.

The opportunities these models bring to our lives, communities, and societies are enormous, but so are the risks.

On the one hand, generative AI can make humans more productive and creative. On the other hand, it can amplify social biases and even undermine our trust in information.

The report's authors believe that collaboration across disciplines is essential to ensuring these technologies benefit us all. In the report, leaders in medicine, science, engineering, the humanities, and the social sciences describe how generative AI will affect their fields and our world.

This article presents the views of Li Feifei and Percy Liang on current generative AI.

For the complete report, see:

https://hai.stanford.edu/generative-ai-perspectives-stanford-hai

Li Feifei: The great turning point of artificial intelligence

Li Feifei, co-director of Stanford HAI, contributed an essay titled "The great turning point of artificial intelligence."

The human brain can recognize patterns of all kinds in the world, then build models of them or generate concepts from them. Giving machines this generative ability has been the dream of generations of artificial intelligence scientists, who have worked for a long time on developing generative model algorithms.

In 1966, researchers at MIT launched the "Summer Vision Project", which aimed to use technology to effectively build an important part of a visual system. This was the beginning of research in computer vision and image generation.

Recently, thanks to the combination of deep learning and big data, we seem to have reached an important turning point: machines are about to gain the ability to generate language, images, audio, and more.

Computer vision was originally inspired by the goal of building AI that can see what humans see, but the discipline's goal now goes far beyond that. The AI built in the future should see things that humans cannot.

How can generative AI be used to enhance human vision?

For example, deaths caused by medical errors are a concerning issue in the United States. Generative AI can help healthcare providers see potential problems.

If the error occurs in rare circumstances, generative AI can create simulated versions of similar data to further train AI models or provide training for medical personnel.

Before you start developing a new generative tool, you should focus on what people want to gain from the tool.

In a recent project to benchmark robotic tasks, the research team conducted a large-scale user study before starting work, asking people how much they would benefit from robots completing certain tasks. The tasks that would benefit people the most became the focus of the project.

To seize the significant opportunities created by generative AI, the associated risks also need to be properly assessed.

Joy Buolamwini led a study called "Gender Shades," which found that AI systems often have trouble identifying women and people of color. Similar biases against underrepresented groups will continue to appear in generative AI.

The ability to determine whether an image was generated by AI is also very important. Human society is built on trust among its citizens, and without this ability that trust will erode.

Advances in machine generation are extremely exciting, as is the potential for AI to see things humans cannot.

However, we need to be alert to the ways in which these capabilities can disrupt our daily lives, our environment, and our role as global citizens.

Percy Liang: "The New Cambrian Era: Excitement and Anxiety of Science"

Percy Liang, director of the Center for Research on Foundation Models at Stanford HAI and associate professor of computer science, contributed an essay titled "The New Cambrian Era: Excitement and Anxiety of Science."

Throughout human history, creating new things has been difficult, an ability held almost exclusively by experts.

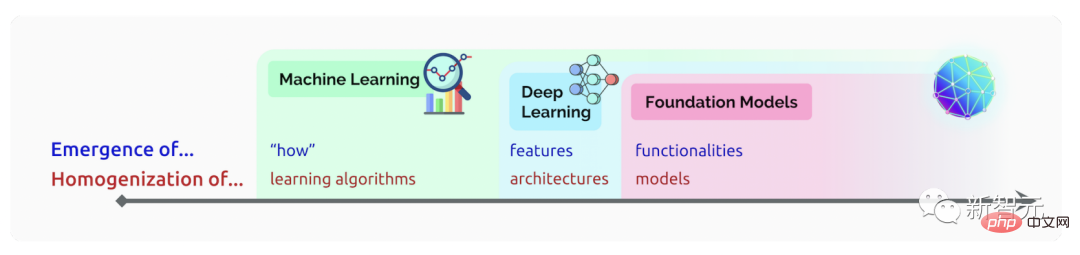

But with recent advances in foundation models, a "Cambrian explosion" of artificial intelligence is taking place, and AI will be able to create almost anything, from videos to proteins to code.

This ability lowers the barrier to creation, but it also deprives us of the ability to discern what is real.

Foundation models are based on deep neural networks and self-supervised learning, techniques that have been around for decades. Recently, however, the sheer volume of data these models can be trained on has led to rapid advances in their capabilities.

A paper released in 2021 details the opportunities and risks of foundation models. It notes that their emergent capabilities will be "a source of excitement for the scientific community" but can also lead to "unintended consequences."

The paper also discusses the issue of homogenization. The same few models are reused as the basis for many applications, which lets researchers focus on a small set of models. But this centralization also makes those models a single point of failure, with potential harms affecting many downstream applications.

It is also very important to benchmark foundation models, so that researchers can better understand their capabilities and shortcomings and formulate more reasonable development strategies.

HELM (Holistic Evaluation of Language Models) was developed for this purpose. HELM evaluates more than 30 well-known language models across a series of scenarios, using a range of metrics such as accuracy, robustness, and fairness.
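As a rough illustration of what evaluating models across scenarios and metrics can look like, here is a minimal Python sketch. It does not use HELM's actual code or API, and the model names, scenario names, and scores are all hypothetical values invented for this example rather than real results.

```python
from statistics import mean

# Hypothetical scores in [0, 1], invented for illustration only.
# Layout: model -> scenario -> metric -> score. These are NOT real HELM results.
SCORES = {
    "model_a": {
        "question_answering": {"accuracy": 0.80, "robustness": 0.70, "fairness": 0.75},
        "summarization": {"accuracy": 0.60, "robustness": 0.55, "fairness": 0.65},
    },
    "model_b": {
        "question_answering": {"accuracy": 0.70, "robustness": 0.72, "fairness": 0.80},
        "summarization": {"accuracy": 0.66, "robustness": 0.60, "fairness": 0.70},
    },
}


def metric_averages(scores):
    """Average each metric over all scenarios, separately for every model."""
    result = {}
    for model, scenarios in scores.items():
        collected = {}
        for per_metric in scenarios.values():
            for name, value in per_metric.items():
                collected.setdefault(name, []).append(value)
        result[model] = {name: mean(values) for name, values in collected.items()}
    return result


def best_model_per_metric(averages):
    """Return, for each metric, the model with the highest average score."""
    metric_names = next(iter(averages.values())).keys()
    return {
        metric: max(averages, key=lambda model: averages[model][metric])
        for metric in metric_names
    }


if __name__ == "__main__":
    averages = metric_averages(SCORES)
    for model, metrics in averages.items():
        print(model, {name: round(value, 3) for name, value in metrics.items()})
    print(best_model_per_metric(averages))
```

With these made-up numbers, one model leads on accuracy while the other leads on robustness and fairness, which is the kind of trade-off that a single-metric leaderboard would hide.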

New models, new application scenarios, and new evaluation metrics will continue to appear, and everyone is welcome to contribute to the development of HELM.
