Yesterday, the International Conference on Machine Learning (ICML) issued a call for papers for 2023.

The paper submission period is from January 9th to January 26th.

However, the conference's "code of ethics" requirements have caused considerable dissatisfaction.

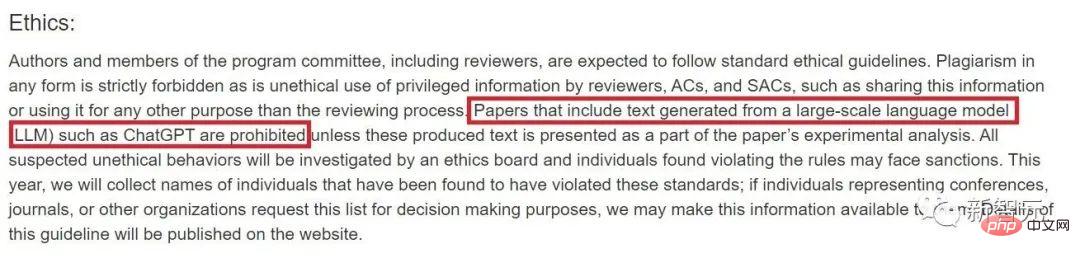

According to the conference's policy, all authors and program committee members, including reviewers, are expected to follow standard ethical guidelines.

Any form of plagiarism is strictly prohibited. So is any unethical use of privileged information by reviewers, Area Chairs (ACs), and Senior Area Chairs (SACs), such as sharing this information or using it for any purpose other than the review process.

Papers that include text generated by a large-scale language model (LLM) such as ChatGPT are prohibited, unless the generated text is presented as part of the paper's experimental analysis.

All suspected unethical behavior will be investigated by the Ethics Committee, and individuals found to have violated the rules may face sanctions. This year we will collect the names of individuals found to have violated these standards; we may provide this information to individuals representing conferences, journals, or other organizations if they request this list for decision-making purposes.

Of these rules, the ban on using large language models to write papers has sparked the most discussion among netizens.

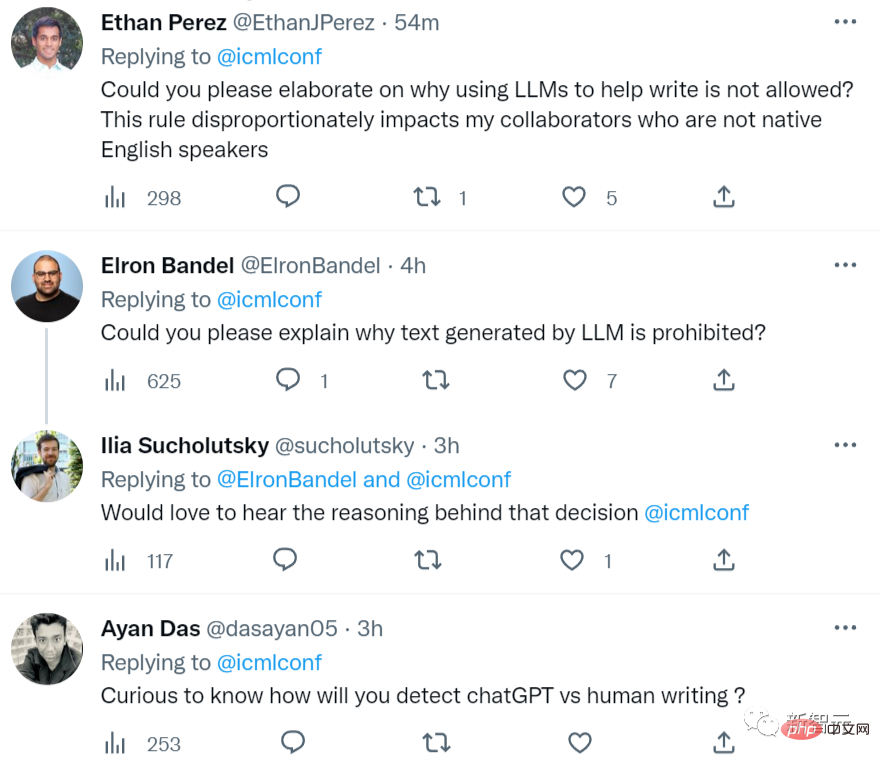

As soon as the news was released, netizens replied to ICML on Twitter: "Why can't large language models be used?"

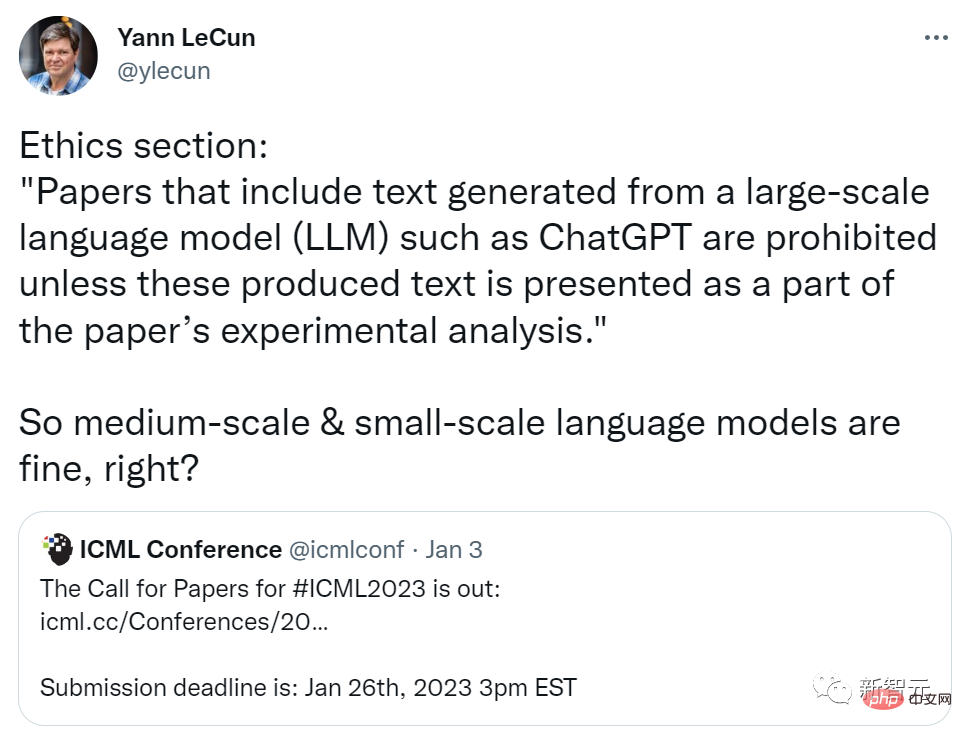

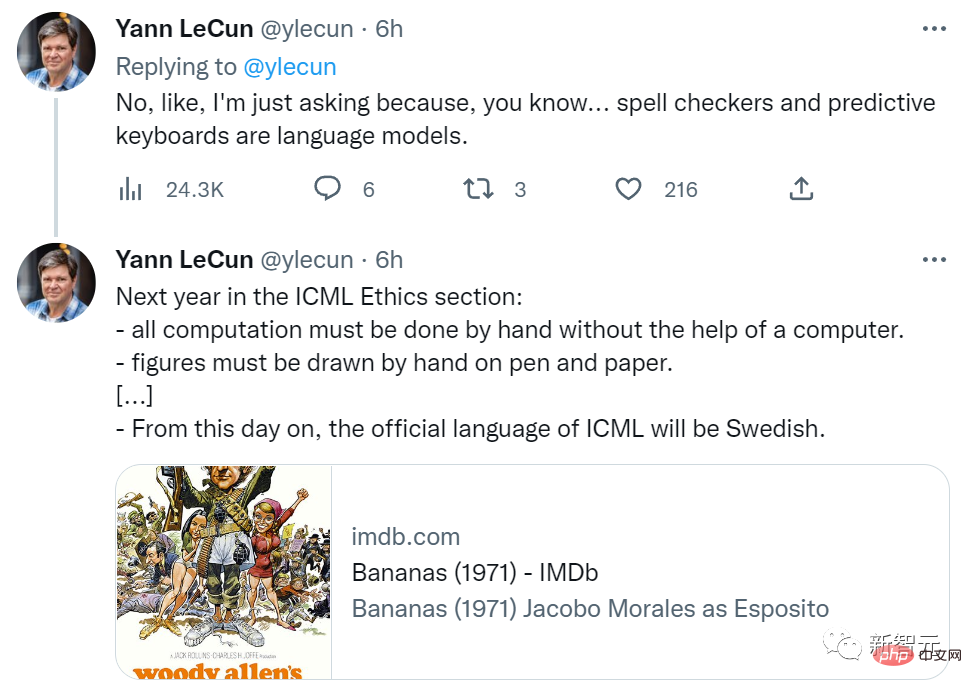

Yann LeCun retweeted the announcement and commented: "Large language models cannot be used. That means small and medium-sized language models can still be used."

He explained: "Because spell checkers and text prediction are also language models."

LeCun went on: "Next year's ICML Code of Ethics should read: Researchers must complete all calculations by hand, without the help of computers; all figures must be drawn by hand with pen and paper; and from today on, the official language of ICML is Swedish." (Sweden: ?)

Finally, he also shared a movie still captioned "Crazy" to sum up his verdict on the ICML policy.

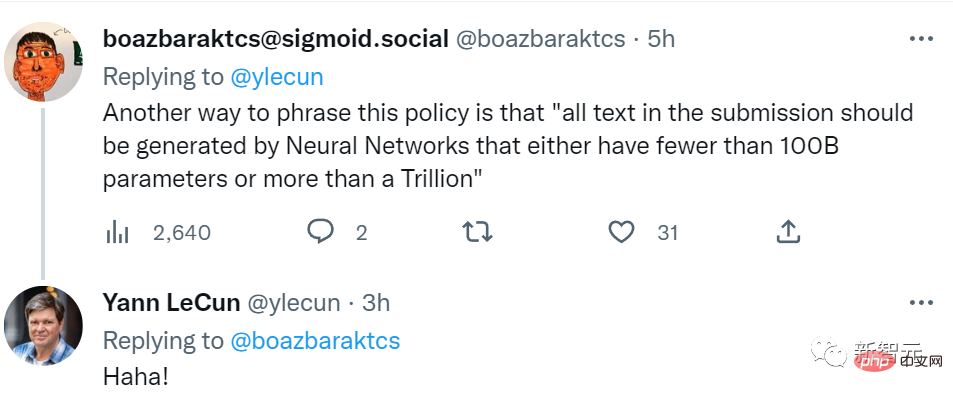

Under LeCun's post, netizens showed off their wit and joined in.

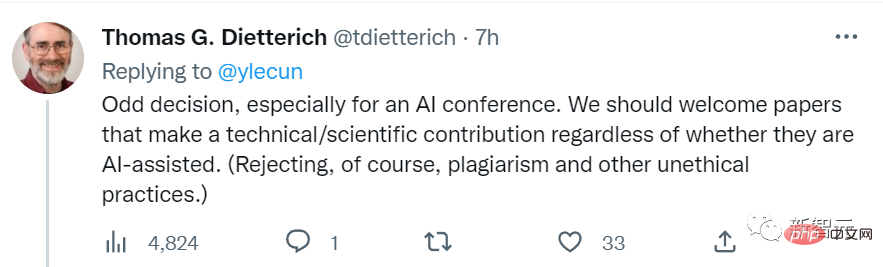

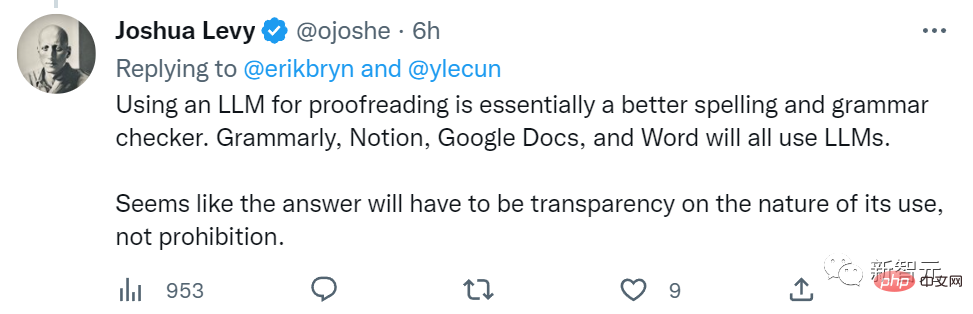

One netizen offered ICML a new way to phrase the rule: "Another way to state this policy is that 'all text in the submission should be generated by neural networks with fewer than 100B or more than 1 trillion parameters'."

Others posed as ICML reviewers and promoted ChatGPT: "As a reviewer for ICML and other conferences, I appreciate authors using ChatGPT and other tools to polish their articles. It makes their papers clearer and easier to read. (This post has been edited by ChatGPT.)"

MIT professor Erik Brynjolfsson summed up the rule simply: "This is a losing battle."

Beyond the jokes, some people also shared serious views on the ICML rules. Thomas Dietterich, former president of AAAI, said: "This rule is strange, especially for an artificial intelligence conference. We should welcome all contributions to science and technology, regardless of whether the authors received AI assistance."

Some people also suggested improvements to ICML. Large language models are already used to proofread spelling and grammar: Grammarly, Notion, Google Docs, and even Word all rely on them. The better solution seems to be to require authors to clearly explain why and how they used large language models, rather than banning them outright.

It turns out I'm not the only one who uses Grammarly to check spelling and grammar (doge).

Of course, some netizens were sympathetic and believed the move was meant to protect the authority of reviewers. Netizen Anurag Ghosh commented: "I think ICML's requirement is meant to prevent the publication of papers that only appear to be correct, such as the five fabricated machine learning / AI-generated papers that were published. Papers like these would expose the flaws of peer review."

Others argued: "Large language models are just tools, so what if they can help produce higher-quality papers? The main contribution of these studies comes not from the large language models but from the researchers. Are we going to ban researchers from accepting any kind of help, such as Google Search, or from discussing their work with people who have no stake in it?"

Because large models have remained popular in recent years, the heated debate among scholars and netizens triggered by ICML's ban will probably continue for some time.

So far, though, one problem seems to have gone undiscussed. How do you determine whether a passage in a paper was generated by a large language model? How would it be verified, through a plagiarism check? After all, no one who uses machine-generated text would label it "This article was automatically generated by a large language model."

What's more, research papers, with their clear logic, clear structure, and highly formulaic language, are exactly where large language models excel. The raw generated text may be slightly stiff. But when a model is used only as an auxiliary tool, its output may be hard to tell apart from human writing. That makes it difficult to set clear standards for enforcing the ban.

It seems ICML reviewers will carry a much heavier burden this time.
