Crawling web pages is actually to obtain web page information through URL. The essence of web page information is a piece of HTML code with JavaScript and CSS added. Python provides a third-party module, requests, for capturing web page information. The requests module calls itself "HTTP for Humans", which literally means an HTTP module designed specifically for humans. This module supports sending requests and obtaining responses.

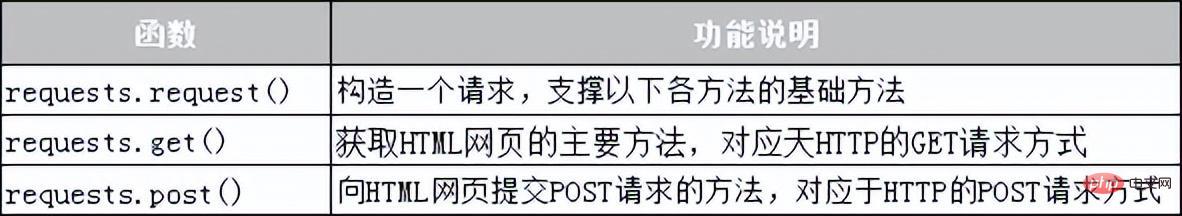

The requests module provides many functions for sending HTTP requests. The commonly used request functions are shown in Table 10-1.

Table 10-1 Request function of the requests module

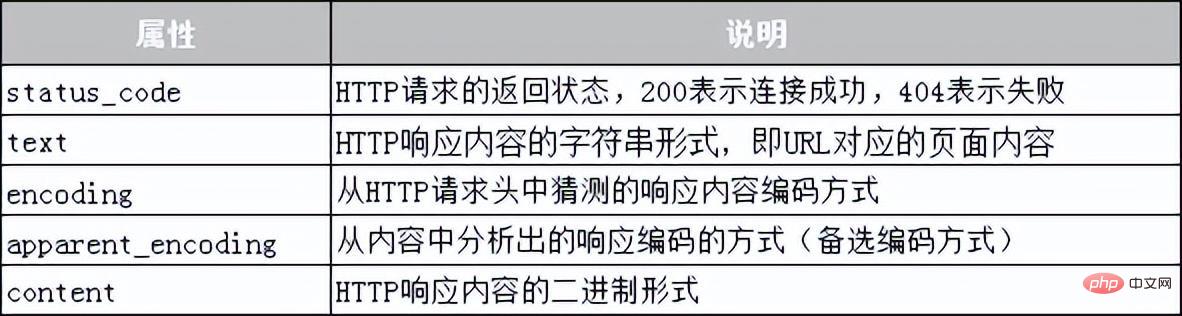

The Response class object provided by the requests module is used for dynamic Respond to client requests, control the information sent to the user, and dynamically generate responses, including status codes, web page content, etc. Next, a table is used to list the information that the Response class can obtain, as shown in Table 10-2.

Table 10-2 Common attributes of the Response class

Next, we will use a case to demonstrate how to use the requests module to crawl Baidu web pages. The specific code is as follows:

# 01 requests baidu

import requests

base_url = 'http://www.baidu.com'

#发送GET请求

res = requests.get (base_url)

print("响应状态码:{}".format(res.status_code))#获取响应状态码

print("编码方式:{}".format(res.encoding))#获取响应内容的编码方式

res.encoding = 'utf-8'#更新响应内容的编码方式为UIE-8

print("网页源代码:n{}".format(res.text)) #获取响应内容In the above code, line 2 uses import to import the requests module; lines 3 to 4 of the code send a GET request to the server based on the URL, and use the variable res to receive the response content returned by the server; line 5 ~The 6th line of code prints the status code and encoding method of the response content; the 7th line changes the encoding method of the response content to "utf-8"; the 8th line of code prints the response content. Run the program. The output of the program is as follows:

响应状态码:200 编码方式:ISO-8859-1 网页源代码: <!DOCTYPE html> <!–-STATUS OK--><html> <head><meta http-equiv=content-type content=text/html; charset=utf-8><meta http-equiv=X-UA-Compatible content=IE=Edge><meta content= always name=referrer><link rel=stylesheet type=text/css href=http://s1.bdstatic. com/r/www/cache/bdorz/baidu.min.css><title>百度一下,你就知道</title></head> <body link=#0000cc>…省略N行…</body></html>

It is worth mentioning that when using the requests module to crawl web pages, various exceptions may occur due to reasons such as no network connection, server connection failure, etc. The most common The two exceptions are URLError and HTTPError. These network exceptions can be captured and handled using the try...except statement.

The above is the detailed content of How to use the Requests module to crawl web pages?. For more information, please follow other related articles on the PHP Chinese website!