New word discovery algorithm based on CNN

Author | mczhao, senior R&D manager of Ctrip, focuses on the field of natural language processing technology.

Overview

With the continuous emergence of new consumer trends and Internet memes, NLP tasks on e-commerce platforms often encounter words that have never been seen before. These words are not in the system's existing vocabulary and are called "unregistered words" (out-of-vocabulary words).

On the one hand, missing words in the lexicon degrade the segmentation quality of lexicon-based word segmenters, which indirectly affects text recall and highlight prompts, that is, the accuracy of users' text searches and the interpretability of the search results.

On the other hand, mainstream NLP deep learning models such as BERT and Transformer often use character vectors instead of word vectors when processing Chinese. In theory, word vectors should work better, but because of unregistered words, character vectors work better in practice. If the vocabulary were more complete, word vectors would outperform character vectors.

To sum up, new word discovery is a problem we need to solve at the moment.

1. Traditional unsupervised method

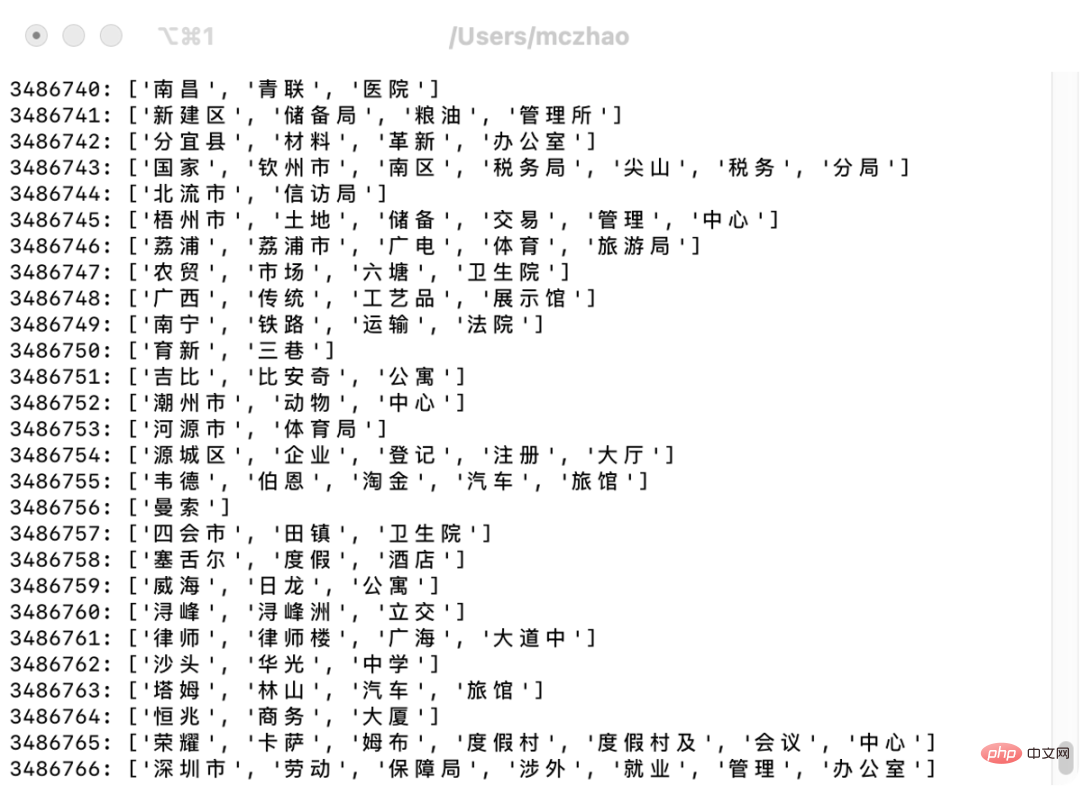

The industry has a relatively mature solution for Chinese new word discovery. The input is a corpus; the texts are split into n-grams to generate candidate fragments. Statistical features are then computed for each fragment, and these features are used to decide whether the fragment is a word.

The mainstream approach in the industry is to measure three indicators: popularity, cohesion, and the richness of left and right adjacent characters. Many articles online describe these three indicators, so only a brief introduction is given here. For details, refer to the new word discovery articles by Hello NLP and Smooth NLP.

1.1 Popularity

Popularity is expressed by word frequency. Count the occurrences of every fragment across the whole corpus; high-frequency fragments are often words.
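As a rough illustration of the counting step, the sketch below enumerates every contiguous character fragment up to a maximum length and tallies it. The function name `count_fragments`, the `max_len` cutoff, and the toy corpus are assumptions made for this example, not part of the original system.

```python
from collections import Counter

def count_fragments(sentences, max_len=4):
    """Count every contiguous character fragment of length 1..max_len."""
    counts = Counter()
    for s in sentences:
        for i in range(len(s)):
            # j is the exclusive end index of the fragment starting at i
            for j in range(i + 1, min(i + max_len, len(s)) + 1):
                counts[s[i:j]] += 1
    return counts

# Toy corpus (hypothetical); a real corpus would be far larger.
corpus = ["浦东机场华美达酒店", "浦东机场", "上海浦东"]
counts = count_fragments(corpus)
print(counts["浦东"], counts["浦东机场"])
```

Frequency alone is not enough to decide word-hood, since very common single characters and arbitrary sub-fragments of frequent words also score highly.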

1.2 Cohesion

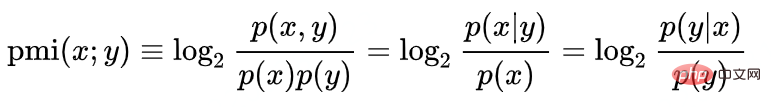

Cohesion is measured with pointwise mutual information (PMI):

For example, to judge whether "Hanting" is a word, we compute log(P("Hanting") / (P("Han") P("Ting"))). The likelihood that "Hanting" is a word increases with the popularity of "Hanting" and decreases with the popularity of the single characters "Han" and "Ting". This is easy to understand. For example, the most common Chinese character is "的". Almost any Chinese character co-occurs with "的" very often, but that does not mean "x的" or "的x" is a word. Here the popularity of the single character "的" acts as a suppressor.
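The formula can be tried on a toy corpus. In this sketch, probabilities are estimated as relative frequencies among fragments of the same length, which is one common choice and an assumption here; the helper names and the corpus are hypothetical. Unseen fragments would need smoothing, which is omitted.

```python
import math
from collections import Counter

def ngram_counts(sentences, n):
    """Count all contiguous fragments of exactly n characters."""
    c = Counter()
    for s in sentences:
        for i in range(len(s) - n + 1):
            c[s[i:i + n]] += 1
    return c

def pmi(pair, unigrams, bigrams):
    """log(P(ab) / (P(a) * P(b))) for a two-character fragment seen in the corpus."""
    p_pair = bigrams[pair] / sum(bigrams.values())
    p_a = unigrams[pair[0]] / sum(unigrams.values())
    p_b = unigrams[pair[1]] / sum(unigrams.values())
    return math.log(p_pair / (p_a * p_b))

# Toy corpus (hypothetical) in which "的" is the most frequent character.
corpus = ["汉庭酒店的早餐", "汉庭的房间", "我的汉庭", "酒店的位置"]
uni, bi = ngram_counts(corpus, 1), ngram_counts(corpus, 2)

pmi_word = pmi("汉庭", uni, bi)      # a real word
pmi_nonword = pmi("的汉", uni, bi)   # "的" followed by an unrelated character
print(round(pmi_word, 3), round(pmi_nonword, 3))
```

The high frequency of "的" enlarges the denominator for "的汉", so its score ends up lower than that of "汉庭".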

1.3 The richness of left and right adjacent characters

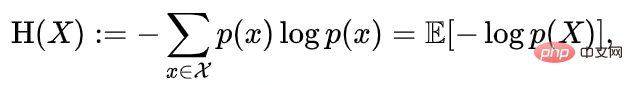

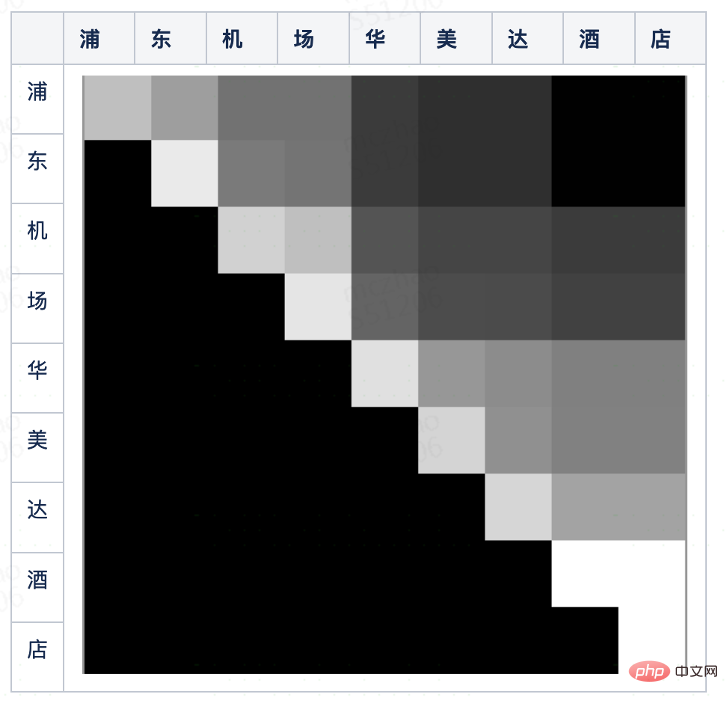

Left and right adjacency entropy represents the richness of the left and right neighboring characters. It measures the randomness of the distribution of characters that appear immediately to the left or right of the candidate fragment. The left entropy and right entropy can be used separately or combined into a single indicator.

For example, the fragment "Shangri-La" (香格里拉) has very high popularity and cohesion, and its sub-fragment "Shangri" (香格里) also has high popularity and cohesion. However, because "Shangri" is followed by the character "La" (拉) in most cases, its right adjacency entropy is very low, which suppresses its word formation. We can therefore judge that "Shangri" is not a word on its own.
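The Shangri-La example can be reproduced with a small entropy calculation. This is a minimal sketch: the function `neighbor_entropy` and the toy corpus are assumptions for illustration, and occurrences at a sentence boundary are simply skipped rather than treated as a special neighbor.

```python
import math
from collections import Counter

def neighbor_entropy(fragment, sentences, side="right"):
    """Shannon entropy (natural log) of the characters adjacent to a fragment."""
    neighbors = Counter()
    for s in sentences:
        start = s.find(fragment)
        while start != -1:
            idx = start + len(fragment) if side == "right" else start - 1
            if 0 <= idx < len(s):          # skip occurrences at the sentence edge
                neighbors[s[idx]] += 1
            start = s.find(fragment, start + 1)
    total = sum(neighbors.values())
    if total == 0:
        return 0.0
    return -sum(c / total * math.log(c / total) for c in neighbors.values())

# Toy corpus (hypothetical).
corpus = ["香格里拉酒店", "香格里拉大酒店", "去香格里拉玩", "香格里拉的风景"]
right_sub = neighbor_entropy("香格里", corpus)     # always followed by "拉"
right_full = neighbor_entropy("香格里拉", corpus)  # followed by four different characters
print(right_sub, right_full)
```

The sub-fragment has zero right entropy because its right neighbor never varies, while the full word has a varied right context.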

2. Limitations of the classic method

The problem with the classic method is that threshold parameters must be set manually. After an NLP expert understands the probability distribution of the fragments in the current corpus, the expert combines these indicators through formulas or uses them independently, and then sets a threshold as the decision criterion. Decisions made with such a criterion can achieve high accuracy.

However, the probability distribution, or word frequency, is not static. As the corpus grows, or as the weighted popularity of the corpus (usually the popularity of the corresponding products) fluctuates, the parameters and thresholds in the expert's formulas must be adjusted constantly. This wastes a lot of manpower and turns artificial intelligence engineers into mere parameter tuners.

3. New word discovery based on deep learning

3.1 Word frequency probability distribution chart

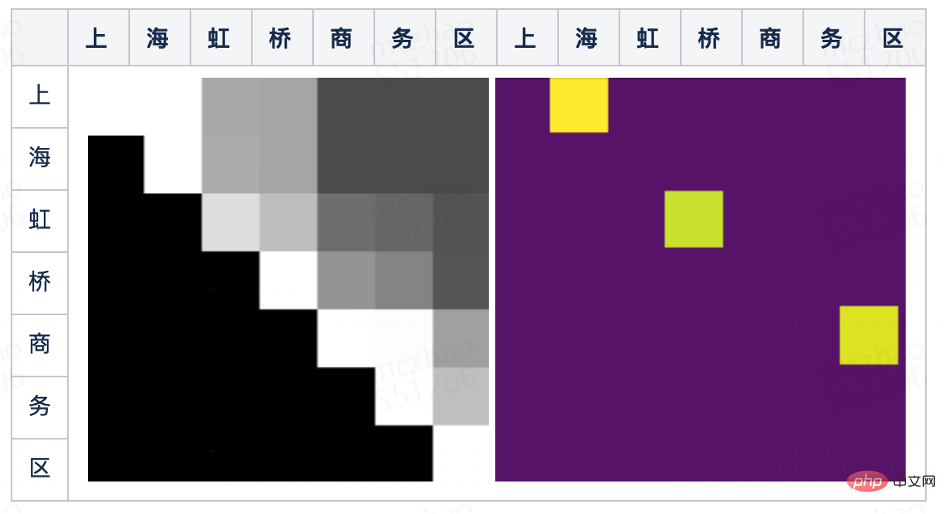

The three indicators used by the algorithms above have only one underlying source feature: word frequency. In statistics, simple but key quantities are usually displayed as pictures, such as histograms and box plots. Even without a model, people can make the right judgment at a glance. We can cut the corpus into all fragments of limited length, normalize the fragment frequencies to 0-255, and map them into a two-dimensional matrix. Rows represent the starting character and columns represent the ending character. Each pixel is a fragment, and the brightness of the pixel is the popularity of that candidate fragment.

The picture above is the word frequency probability distribution map of the short sentence "Pudong Airport Ramada Hotel". We were pleasantly surprised to find that, with the naked eye, we can roughly pick out some brighter blocks shaped like isosceles right triangles, such as "Pudong", "Pudong Airport", "Airport", and "Ramada Hotel". These blocks indicate that the corresponding fragments are the words we need.
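A minimal sketch of how such a map could be built is shown below. The linear scaling by the maximum count, the function names, and the toy corpus are assumptions; the original does not state the exact normalization.

```python
import numpy as np
from collections import Counter

def fragment_counts(sentences):
    """Count every contiguous fragment of every sentence."""
    counts = Counter()
    for s in sentences:
        for i in range(len(s)):
            for j in range(i + 1, len(s) + 1):
                counts[s[i:j]] += 1
    return counts

def frequency_map(sentence, counts):
    """Cell (i, j) holds the scaled frequency of the fragment from character i to character j."""
    n = len(sentence)
    raw = np.zeros((n, n))
    for i in range(n):            # row: starting character
        for j in range(i, n):     # column: ending character
            raw[i, j] = counts.get(sentence[i:j + 1], 0)
    peak = raw.max()
    scaled = raw / peak * 255 if peak > 0 else raw
    return np.round(scaled).astype(np.uint8)

# Toy corpus (hypothetical).
corpus = ["浦东机场华美达酒店", "浦东机场", "上海浦东", "华美达酒店"]
fmap = frequency_map("浦东机场华美达酒店", fragment_counts(corpus))
print(fmap)
```

Only the upper triangle is populated, because a fragment cannot end before it starts. This is why the bright regions appear as right triangles along the diagonal.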

3.2 Classic image segmentation algorithm

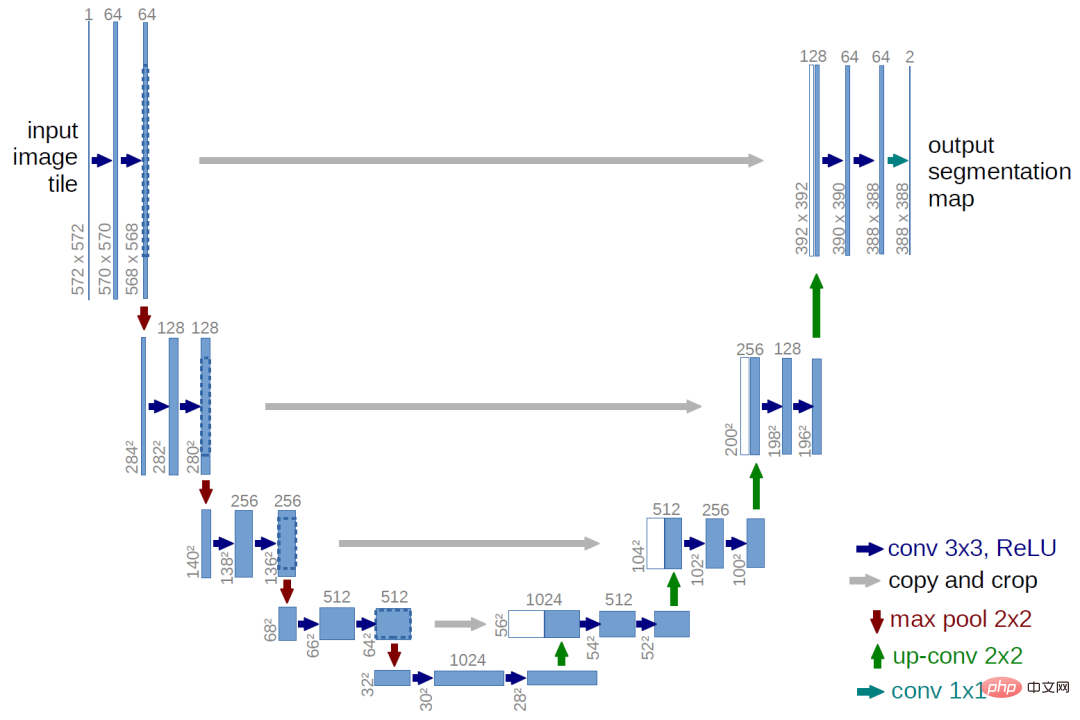

By observing the word frequency probability distribution map, we can turn the problem of segmenting a short sentence into an image segmentation problem. Early image segmentation algorithms resemble the new word discovery algorithms described above: they are also threshold-based and detect grayscale changes at edges. As the technology developed, deep learning algorithms became the norm, and one of the best known is the U-Net image segmentation algorithm.

The first half of U-Net uses convolution and downsampling to extract multiple layers of features at different granularities. The second half upsamples, concatenates these features at matching resolutions, and finally produces pixel-level classification results through a final convolution layer and softmax.

3.3 New word discovery algorithm based on convolutional network

Segmenting the word frequency probability distribution map is similar to segmenting an image. Both cut out regions that are adjacent in position and similar in gray level. Therefore, short sentence segmentation can borrow from image segmentation and use a fully convolutional network. Convolution is used because, whether we are cutting short sentences or images, we care mostly about local information, that is, the pixels close to the cutting edge. Multiple layers are used because stacked layers can express threshold decisions on features at different levels. For example, when cutting map terrain, we must consider not only the slope (first derivative/difference) but also the change in slope (second derivative/difference). The two are thresholded separately, and they are combined not by simple linear weighting but by a serial network.
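The slope and change-of-slope analogy can be made concrete with finite differences. The brightness values below are made up for illustration; `np.diff` computes the first difference and, with `n=2`, the second difference.

```python
import numpy as np

# Hypothetical brightness values along one row of a frequency map.
brightness = np.array([10, 10, 12, 40, 200, 205, 205], dtype=float)

slope = np.diff(brightness)            # first-order difference
curvature = np.diff(brightness, n=2)   # second-order difference

# The largest jump marks the most likely cutting edge.
edge = int(np.argmax(np.abs(slope)))
print(slope, curvature, edge)
```

A single 3x3 convolution can express something like the first difference, while a second stacked layer can respond to how that difference itself changes.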

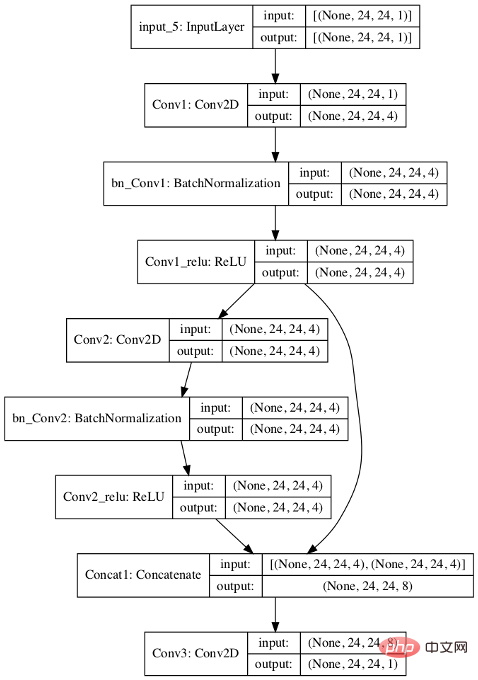

For the new word discovery scenario, we design the following algorithm:

- First, zero-pad the word frequency distribution map of the short sentence to 24x24;

- Then apply two 3x3 convolution layers, each outputting 4 channels;

- Concatenate the outputs of the two convolution layers, apply another 3x3 convolution, and output a single channel;

- The loss function takes logits as input, so the last layer can be used for classification without a softmax output.
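The steps above can be sketched as a NumPy forward pass. Several details are assumptions, because the original does not specify them: the two 3x3 layers are applied in sequence and their outputs concatenated, ReLU is used as the activation, weights are random rather than trained, and the loss is binary cross-entropy on logits. All function names are hypothetical.

```python
import numpy as np

def conv3x3(x, kernels, bias):
    """'Same' 3x3 convolution. x: (H, W, C_in); kernels: (3, 3, C_in, C_out)."""
    h, w, _ = x.shape
    padded = np.pad(x, ((1, 1), (1, 1), (0, 0)))
    out = np.zeros((h, w, kernels.shape[-1]))
    for dy in range(3):
        for dx in range(3):
            # Shifted view of the input times the weights at this kernel offset
            out += padded[dy:dy + h, dx:dx + w, :] @ kernels[dy, dx]
    return out + bias

def pad_to(freq_map, size=24):
    """Zero-pad an n x n frequency map to size x size (requires n <= size)."""
    out = np.zeros((size, size))
    n = freq_map.shape[0]
    out[:n, :n] = freq_map
    return out

def forward(freq_map, params):
    x = freq_map[..., None] / 255.0                               # (24, 24, 1)
    c1 = np.maximum(conv3x3(x, *params["conv1"]), 0)              # 4 channels, ReLU (assumed)
    c2 = np.maximum(conv3x3(c1, *params["conv2"]), 0)             # 4 channels, ReLU (assumed)
    merged = np.concatenate([c1, c2], axis=-1)                    # 8 channels
    return conv3x3(merged, *params["conv3"])[..., 0]              # (24, 24) logits

def bce_with_logits(logits, labels):
    """Numerically stable binary cross-entropy computed directly on logits."""
    return np.mean(np.maximum(logits, 0) - logits * labels
                   + np.log1p(np.exp(-np.abs(logits))))

rng = np.random.default_rng(0)

def init(c_in, c_out):
    return rng.normal(0, 0.1, (3, 3, c_in, c_out)), np.zeros(c_out)

params = {"conv1": init(1, 4), "conv2": init(4, 4), "conv3": init(8, 1)}

toy_map = np.triu(rng.integers(0, 256, (9, 9)))   # stand-in for a real frequency map
logits = forward(pad_to(toy_map), params)
probs = 1 / (1 + np.exp(-logits))                 # per-pixel probability of "is a word"
print(logits.shape, bce_with_logits(logits, np.zeros((24, 24))))
```

Because the loss works on logits, the network itself ends with a plain convolution, and the sigmoid is applied only when probabilities are needed at prediction time.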

Compared with U-Net, there are the following differences:

1) Downsampling and upsampling are dropped. The reason is that the short sentences to be segmented are generally short, so the resolution of the word frequency distribution map is low, and the model is simplified accordingly.

2) U-Net is a three-class model (block 1, block 2, on the edge), while this algorithm needs only two classes (whether the pixel is a word). The final outputs therefore differ as well. U-Net outputs continuous blocks and dividing lines, whereas we only need to know whether a given point is positive.

The picture below shows the model's predictions after training. In the output, the pixels corresponding to the three words "Shanghai" (row "上", column "海"), "Hongqiao", and "Business District" have been identified.

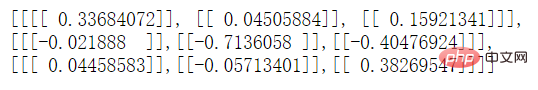

If you want to explore how the model works, you can inspect the convolution kernels of the intermediate layers. We first reduce the number of convolution kernels in each convolutional layer from 4 to 1. After training, we inspect the intermediate layer through TensorFlow's API: model.get_layer('Conv2').__dict__. We found that the convolution kernel of the Conv2 layer is as follows:

The first and second rows have opposite effects on the model: the kernel takes the (weighted) difference between the row above the pixel and the pixel's own row. The larger the grayscale difference, the more likely the string represented by this pixel is a word.

You can also see that the value in the first row, second column, 0.04505884, has a relatively small absolute value. This may be because the positive weight from "first row minus second row" and the negative weight from "third column minus second column" cancel each other out.

5. Optimization space

This article describes a fully convolutional network model with a very simple structure, and there is still a lot of room for improvement.

The first is to expand the range of input features. For example, the only input feature in this article is word frequency. If left and right adjacency entropy were also included as input features, the segmentation would be more accurate.

The second is to increase the depth of the network. Through model analysis, we found that the first convolution layer mainly handles cases caused by zero-padded pixels, so only one convolution layer actually attends to the real popularity values. A 3x3 convolution kernel can only see first-order differences; the rows and columns two steps away from the current pixel are not taken into account. You can enlarge the kernel size or deepen the network appropriately to widen the model's field of view. However, deepening the network also brings the risk of overfitting.

Finally, this model can be used not only to supplement the vocabulary and improve word segmentation, but also directly as a reference during word segmentation itself. Its predictions can be applied in both the candidate word recall step and the segmentation path scoring step.
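The trade-off between kernel size and depth can be checked with the standard receptive-field formula for stacked stride-1 convolutions: each layer with kernel size k widens the field by k - 1. The function name below is hypothetical.

```python
def receptive_field(kernel_sizes):
    """Receptive field width of stacked stride-1 convolutions without dilation."""
    field = 1
    for k in kernel_sizes:
        field += k - 1
    return field

print(receptive_field([3]))        # one 3x3 layer
print(receptive_field([3, 3]))     # two stacked 3x3 layers
print(receptive_field([5]))        # one 5x5 layer
```

Two stacked 3x3 layers cover the same 5x5 neighborhood as a single 5x5 kernel, so either change lets the model see the second row and column around the current pixel.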
