Parameters increase slightly, and performance explodes! Google: Large language models hide "mysterious skills"

Because they can do things they were not trained to do, large language models seem to have a kind of magic, and have therefore become a focus of hype and attention from the media and researchers.

When a large language model is scaled up, new capabilities occasionally appear that are not present in smaller models. This property, which resembles "creativity", is called "emergent" ability, and is said to represent a giant step towards general artificial intelligence.

Now, researchers from Google, Stanford, DeepMind and the University of North Carolina are exploring the "emergent" abilities of large language models.

DALL-E prompted by the decoder

Magical "emergent" ability

Natural language processing (NLP) has been revolutionized by language models trained on large amounts of text data. Scaling up language models often improves performance and sample efficiency on a range of downstream NLP tasks.

In many cases, we can predict the performance of a large language model by extrapolating the performance trends of smaller models. For example, the effect of scale on language model perplexity has been demonstrated across more than seven orders of magnitude.
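The kind of extrapolation described above can be sketched as a straight-line fit in log-log space. This is only a minimal illustration: the data points are made up to follow an exact power law, and the function names `fit_power_law` and `predict_loss` are hypothetical, not taken from the paper.

```python
import math


def fit_power_law(scales, losses):
    """Least-squares fit of log(loss) = a + b * log(scale); returns (a, b)."""
    xs = [math.log(s) for s in scales]
    ys = [math.log(v) for v in losses]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    b = sxy / sxx
    a = mean_y - b * mean_x
    return a, b


def predict_loss(a, b, scale):
    """Evaluate the fitted power law at a new scale."""
    return math.exp(a + b * math.log(scale))


# Made-up measurements from "small" models: loss = 10 * scale^-0.05.
small_scales = [1e6, 1e7, 1e8, 1e9]
small_losses = [10 * s ** -0.05 for s in small_scales]

a, b = fit_power_law(small_scales, small_losses)
predicted = predict_loss(a, b, 1e11)  # extrapolate to a much larger model
print(f"fitted exponent: {b:.3f}, predicted loss at 1e11: {predicted:.3f}")
```

Extrapolation of this kind works only when the metric really does follow a smooth trend; the tasks discussed next are the ones where it breaks down.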

However, performance on some other tasks did not improve in a predictable way.

For example, the GPT-3 paper shows that the language model's ability to perform multi-digit addition has a flat scaling curve for models from 100M to 13B parameters, where performance is approximately random, and then jumps sharply at a certain point.

Given the increasing use of language models in NLP research, it is important to better understand these capabilities that may arise unexpectedly.

In a recent paper, "Emergent Abilities of Large Language Models", published in Transactions on Machine Learning Research (TMLR), researchers present dozens of examples of "emergent" abilities produced by scaling up language models.

The existence of this "emergent" capability raises the question of whether additional scaling can further expand the range of capabilities of language models.

Certain prompting and fine-tuning methods only produce improvements in larger models

"Emergent" prompted tasks

First, we discuss the "emergent" abilities that may appear in prompted tasks.

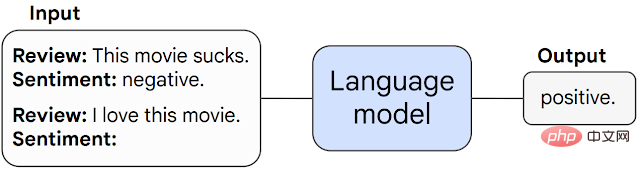

In this type of task, a pre-trained language model is given a prompt and performs the task by completing the response through next-word prediction.

Without any further fine-tuning, language models can often perform tasks not seen during training.

We call a task an "emergent" task when its performance unpredictably surges from random to above-random at a specific scale threshold.
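As a rough illustration of this definition, the sketch below scans a set of (scale, accuracy) measurements and reports the first scale at which accuracy rises clearly above chance. The numbers, the `margin` parameter, and the function name `emergence_threshold` are assumptions made for the example; this is a deliberately simple check, not the procedure used in the paper.

```python
def emergence_threshold(points, random_baseline, margin=0.05):
    """Return the smallest scale whose accuracy exceeds chance by `margin`.

    points: iterable of (scale, accuracy) pairs, in any order.
    Returns None if no model beats random_baseline + margin.
    """
    for scale, accuracy in sorted(points):
        if accuracy > random_baseline + margin:
            return scale
    return None


# Hypothetical results on a 4-way multiple-choice task (chance = 0.25),
# indexed by training compute in FLOPs.
results = [(1e20, 0.24), (1e21, 0.26), (1e22, 0.25), (1e23, 0.41), (1e24, 0.58)]

threshold = emergence_threshold(results, random_baseline=0.25)
print(threshold)
```

With these made-up numbers, the models at the three smallest scales are indistinguishable from chance and the fourth is the first to clear the margin, which is the flat-then-jump shape the definition describes.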

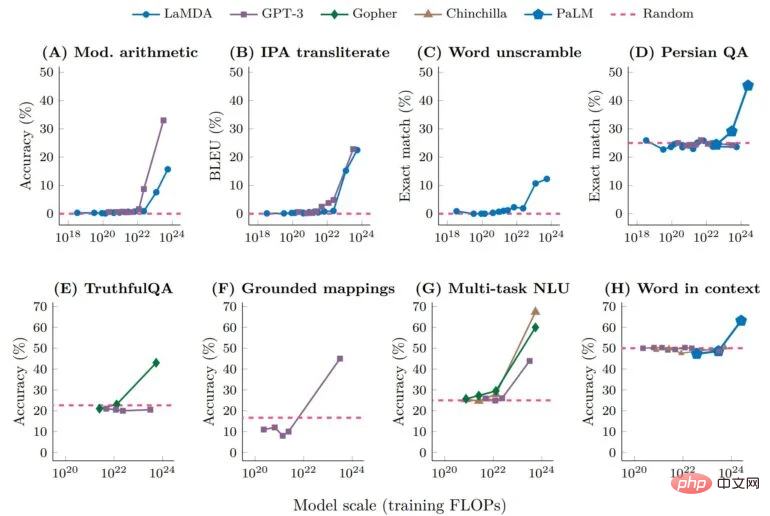

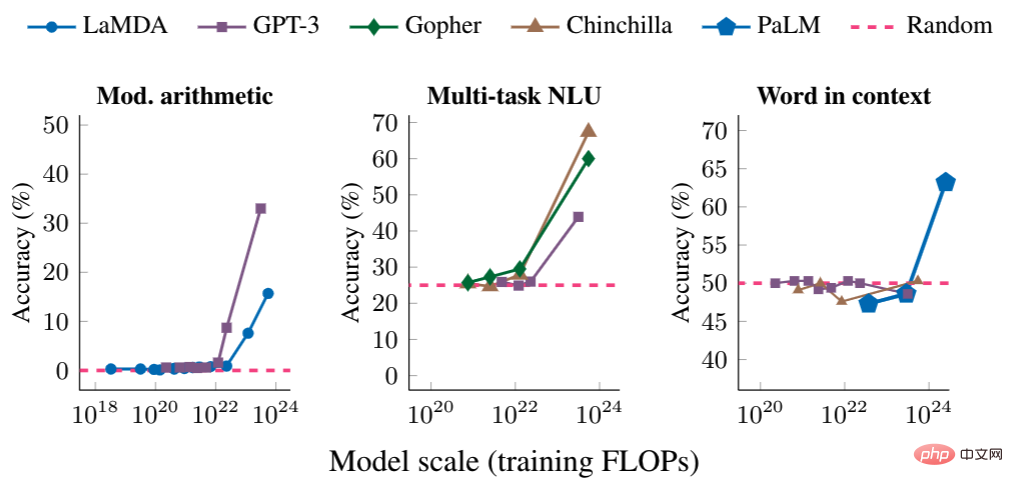

Below we present three examples of prompted tasks with "emergent" performance: multi-step arithmetic, taking a college-level exam, and identifying the intended meaning of a word.

In each case, language models perform poorly, with little dependence on model size, until a certain threshold is reached - where their performance spikes.

Performance on these tasks only becomes non-random for models of sufficient scale: for example, when training compute, measured in floating-point operations (FLOPs), exceeds 10^22 for the arithmetic and multi-task NLU tasks, and exceeds 10^24 for the word-in-context task.

"Emergent" prompting strategies
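To get a feel for these compute thresholds, the sketch below uses a common rule of thumb that training compute is roughly 6 FLOPs per parameter per training token. That approximation is an assumption brought in for illustration and is not stated in the article; the model size and token count are hypothetical as well.

```python
# Thresholds quoted in the text above (total training FLOPs).
ARITHMETIC_NLU_THRESHOLD = 1e22
WORD_IN_CONTEXT_THRESHOLD = 1e24


def approx_training_flops(num_params, num_tokens):
    """Rule-of-thumb estimate (an assumption): ~6 FLOPs per parameter per token."""
    return 6 * num_params * num_tokens


# Hypothetical model: 10 billion parameters trained on 300 billion tokens.
flops = approx_training_flops(num_params=10e9, num_tokens=300e9)

print(f"estimated training compute: {flops:.1e} FLOPs")
print("past arithmetic/NLU threshold:", flops > ARITHMETIC_NLU_THRESHOLD)
print("past word-in-context threshold:", flops > WORD_IN_CONTEXT_THRESHOLD)
```

Under these assumptions the hypothetical model lands between the two thresholds, so it would be expected to show above-random arithmetic and NLU performance but not yet the word-in-context ability.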

The second category of "emergent" capabilities includes prompt strategies that enhance language model capabilities.

Prompting strategies are a broad paradigm for prompting that can be applied to a range of different tasks. They are considered "emergent" when they fail for small models and can only be used by sufficiently large models.

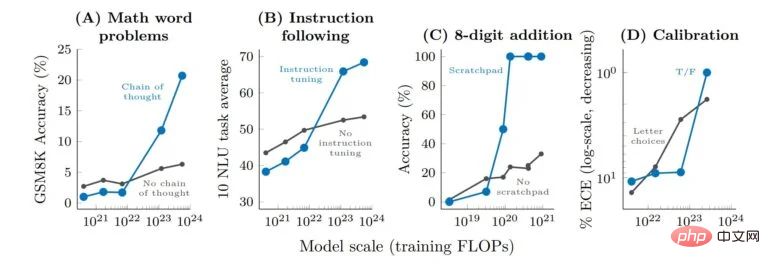

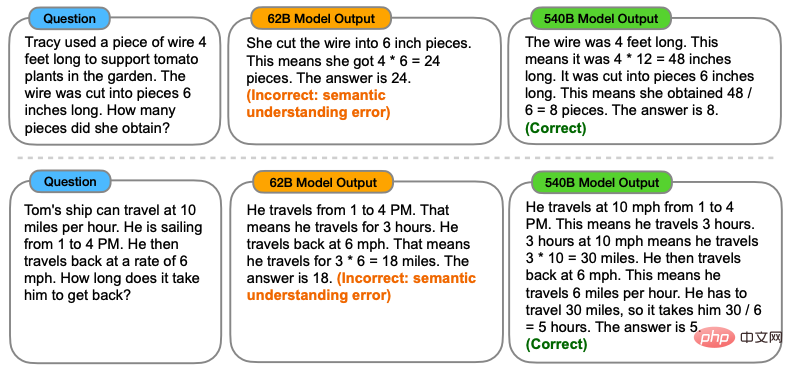

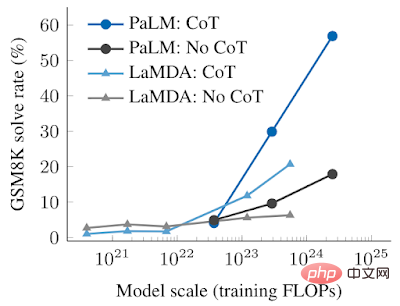

Chain-of-thought prompting is a typical example of an "emergent" prompting strategy, in which the model is prompted to generate a series of intermediate steps before giving the final answer.

Chain-of-thought prompting enables language models to perform tasks that require complex reasoning, such as multi-step math word problems.

It is worth mentioning that the model can acquire the ability of chain-of-thought reasoning without explicit training. The figure below shows an example of a chain-of-thought prompt.
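In text form, the difference between a standard few-shot prompt and a chain-of-thought prompt can be sketched as follows. The exemplar, the question, and the helper `build_prompt` are invented for illustration; the only point is that the chain-of-thought version includes the worked reasoning in each example answer.

```python
def build_prompt(exemplars, question, chain_of_thought):
    """Assemble a few-shot prompt.

    exemplars: list of (question, reasoning, answer) tuples.
    chain_of_thought: if True, include the reasoning before each answer.
    """
    parts = []
    for q, reasoning, answer in exemplars:
        if chain_of_thought:
            parts.append(f"Q: {q}\nA: {reasoning} The answer is {answer}.")
        else:
            parts.append(f"Q: {q}\nA: The answer is {answer}.")
    # The final question is left open for the model to complete.
    parts.append(f"Q: {question}\nA:")
    return "\n\n".join(parts)


# Made-up exemplar and question, for illustration only.
exemplars = [(
    "A shelf holds 4 books. Two boxes of 5 books each are added. "
    "How many books are on the shelf now?",
    "The shelf starts with 4 books. 2 boxes of 5 books is 10 books. 4 + 10 = 14.",
    "14",
)]
question = "A baker has 12 rolls and bakes 3 trays of 8 rolls each. How many rolls are there now?"

standard_prompt = build_prompt(exemplars, question, chain_of_thought=False)
cot_prompt = build_prompt(exemplars, question, chain_of_thought=True)
print(cot_prompt)
```

Either string would then be sent to a language model as-is; no fine-tuning is involved, which is why the strategy counts as a prompting technique.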

The empirical results of chain-of-thought prompting are as follows.

For smaller models, chain-of-thought prompting performs no better than standard prompting, for example on GSM8K, a challenging benchmark of math word problems.

However, for large models, chain-of-thought prompting achieved a 57% solve rate on GSM8K, a significant improvement in the researchers' tests.

The significance of studying "emergent" abilities

So what is the significance of studying "emergent" abilities?

Identifying “emergent” capabilities in large language models is the first step in understanding this phenomenon and its potential impact on future model capabilities.

For example, because “emergent” few-shot prompting abilities and strategies are not explicitly encoded in pre-training, researchers may not know the full range of few-shot prompting abilities of current language models.

In addition, the question of whether further scaling will give larger models new "emergent" capabilities is also very important.

- Why do "emergent" abilities appear?

- When certain capabilities emerge, will new real-world applications of language models be unlocked?

- Since computing resources are expensive, can emergent capabilities be unlocked through other means (such as better model architectures or training techniques) without increasing scale?

Researchers say the answers to these questions are not yet known.

However, as the field of NLP continues to develop, it is very important to analyze and understand the behavior of language models, including the "emergent" capabilities produced by scaling.
