ConvNeXt V2 is here: with only the simplest convolutional architecture, it performs on par with Transformers

After decades of foundational research, visual recognition has entered a new era of large-scale visual representation learning. Pretrained large-scale vision models have become an essential tool for feature learning and for vision applications. The performance of a visual representation learning system depends largely on three factors: the neural network architecture, the method used to train the network, and the training data. An improvement in any one of these factors contributes to better overall performance.

Innovation in neural network architecture design has always played an important role in representation learning. Convolutional neural networks (ConvNets) have had a major impact on computer vision research: they made it possible to apply general-purpose feature learning to a wide range of visual recognition tasks without hand-crafted feature engineering. In recent years, the transformer architecture, originally developed for natural language processing, has also been widely adopted in other areas of deep learning because it scales well across model and dataset sizes.

The ConvNeXt architecture modernized the traditional ConvNet and showed that pure convolutional models can also scale with model and dataset size. However, the most common way to explore the design space of neural network architectures is still to benchmark supervised learning performance on ImageNet.

An alternative is to shift the focus of visual representation learning from supervised learning with labels to self-supervised pre-training. Self-supervised methods brought the idea of masked language modeling into vision, and masked image modeling quickly became a popular approach to visual representation learning. However, self-supervised learning typically reuses an architecture designed for supervised learning and treats that architecture as fixed. For example, the Masked Autoencoder (MAE) uses the Vision Transformer (ViT) architecture.

One option is to combine these architectures with a self-supervised learning framework, but this raises specific problems. Combining ConvNeXt with MAE is a case in point. MAE has an encoder-decoder design tailored to the transformer's ability to process sequences: the compute-heavy encoder processes only the visible patches, which reduces the pre-training cost. This design may be incompatible with a standard ConvNet, which slides dense windows over the whole input. Moreover, if the relationship between the architecture and the training objective is ignored, it is unclear whether optimal performance can be achieved. In fact, prior work has found that ConvNets are difficult to train with mask-based self-supervised learning, and experimental evidence suggests that transformers and ConvNets may learn different features, which affects the quality of the final representation.

To address this, researchers from KAIST, Meta, and New York University (including Liu Zhuang, first author of ConvNeXt, and Xie Saining, first author of ResNeXt) proposed co-designing the network architecture and the masked autoencoder within the same framework. The goal is to make mask-based self-supervised learning work for ConvNeXt models and to achieve results comparable to transformers.

Paper address: https://arxiv.org/pdf/2301.00808v1.pdf

When designing the masked autoencoder, this research treats the masked input as a set of sparse patches and uses sparse convolutions to process only the visible parts. The idea is inspired by the use of sparse convolutions for processing large-scale 3D point clouds. Specifically, the study implements ConvNeXt with sparse convolutions; at fine-tuning time, the weights can be converted back into standard dense layers without any special handling. To further improve pre-training efficiency, the study replaces the transformer decoder with a single ConvNeXt block, making the whole design fully convolutional. The researchers observed that with these changes the learned features were useful and improved on the baseline, but fine-tuning performance was still worse than that of transformer-based models.
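The article describes the sparse-convolution idea only at a high level. Below is a minimal sketch, assuming single-channel 2-D inputs: if masked inputs are zero, running an ordinary dense kernel and then re-applying the mask gives the same outputs at visible sites as a submanifold-style sparse convolution, while masked sites stay empty. This dense emulation is our assumption for illustration (it saves no compute, unlike a real sparse-convolution library), and `conv2d_same` and `masked_conv` are hypothetical names.

```python
import numpy as np

def conv2d_same(x, k):
    """Single-channel 2-D cross-correlation with zero 'same' padding.
    x: (H, W); k: (kh, kw) with odd kh, kw."""
    kh, kw = k.shape
    ph, pw = kh // 2, kw // 2
    xp = np.pad(x, ((ph, ph), (pw, pw)))
    H, W = x.shape
    out = np.zeros_like(x, dtype=float)
    for i in range(kh):
        for j in range(kw):
            out += k[i, j] * xp[i:i + H, j:j + W]
    return out

def masked_conv(x, k, visible):
    """Emulate a sparse conv: zero masked inputs, apply the ordinary dense
    kernel, then zero the outputs at masked sites again."""
    return conv2d_same(x * visible, k) * visible

rng = np.random.default_rng(0)
x = rng.normal(size=(8, 8))
k = rng.normal(size=(3, 3))
visible = np.ones((8, 8))
visible[2:6, 2:6] = 0  # hypothetical masked block

y_sparse = masked_conv(x, k, visible)
y_dense = conv2d_same(x * visible, k)

# Masked sites stay empty, so the mask pattern does not fill in layer by layer.
print(np.abs(y_sparse[visible == 0]).max())      # 0.0
# A naive dense conv writes nonzero values into the masked region.
print(np.abs(y_dense[visible == 0]).max() > 0)   # True
# At visible sites the two agree: the same dense kernel is reused unchanged.
print(np.allclose(y_sparse[visible == 1], y_dense[visible == 1]))  # True
```

Because `k` is an ordinary dense array throughout, nothing needs converting for fine-tuning in this sketch: drop the mask and call `conv2d_same` directly.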

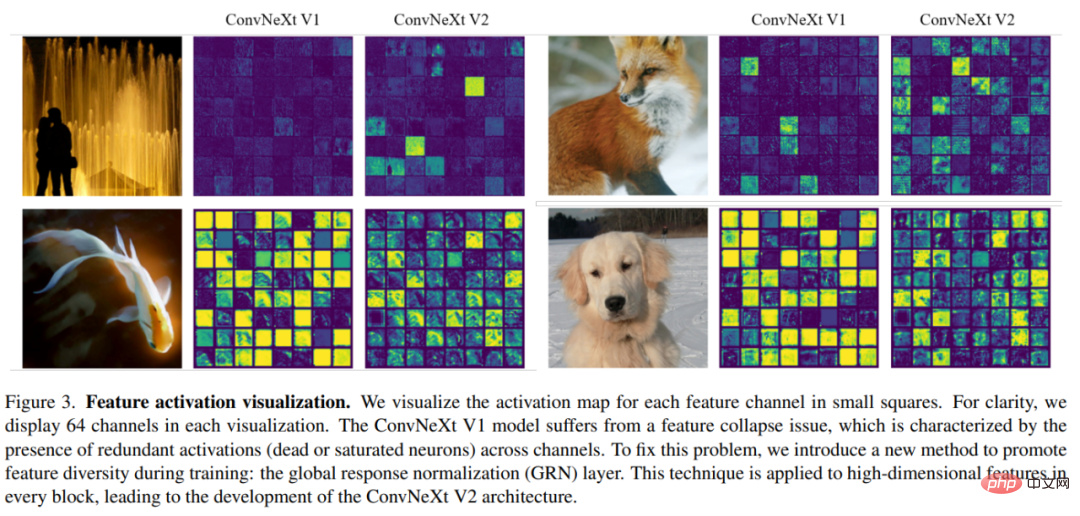

The study then analyzes the feature space of ConvNeXt under different training configurations. When ConvNeXt is trained directly on masked inputs, the researchers found a potential feature collapse problem in the MLP layers. To solve it, the study adds a Global Response Normalization (GRN) layer that enhances competition between channels. This change is most effective when the model is pretrained with a masked autoencoder, which suggests that reusing a fixed architecture designed for supervised learning may not be the best approach.

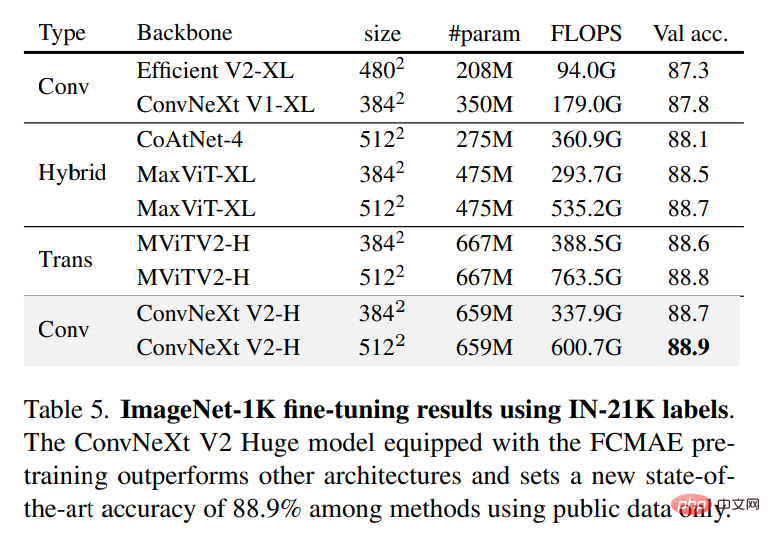

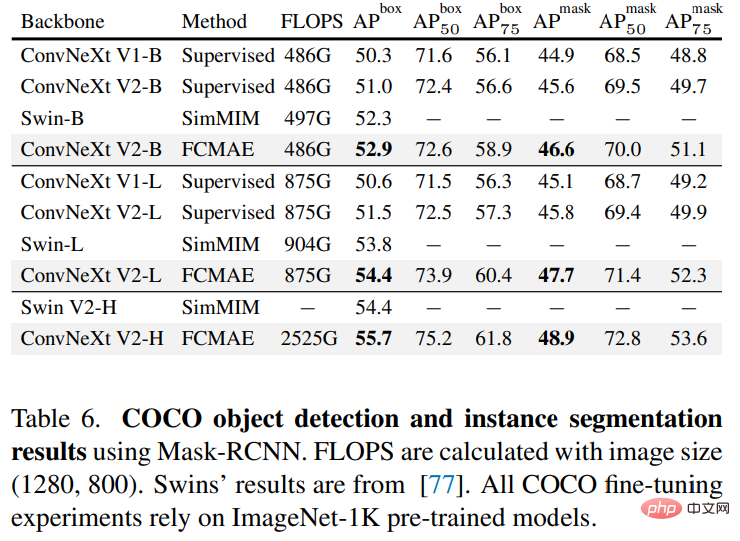

Building on these improvements, the study proposes ConvNeXt V2, which performs better when combined with masked autoencoders. The researchers also found that ConvNeXt V2 significantly improves the performance of pure ConvNets on a range of downstream tasks, including ImageNet classification, object detection on COCO, and semantic segmentation on ADE20K.

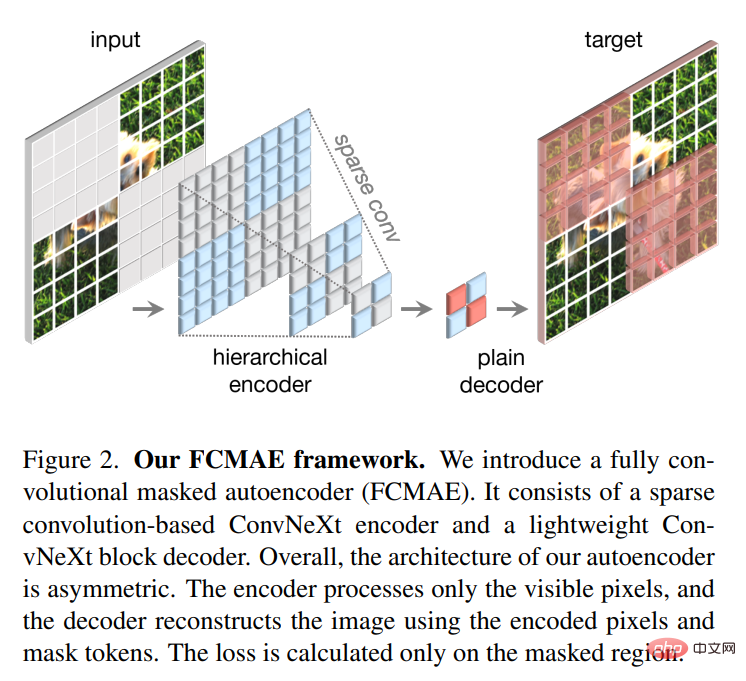

Fully Convolutional Masked Autoencoder

The proposed method is conceptually simple and runs in a fully convolutional manner. The learning signal is generated by randomly masking the raw visual input with a high masking ratio and having the model predict the missing parts from the remaining context. The overall framework is shown in the figure below.

The framework consists of a ConvNeXt encoder built on sparse convolutions and a lightweight ConvNeXt decoder, so the autoencoder is asymmetric. The encoder processes only the visible pixels, while the decoder reconstructs the image from the encoded pixels and mask tokens. The loss is computed only on the masked regions.
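The masking and the masked-only loss can be sketched in a few lines. The article does not state the exact settings, so the 224×224 image, 32×32 masking patches, and 0.6 masking ratio below are assumptions (we believe they match the paper's defaults, but treat them as illustrative). The loss is plain per-pixel MSE; the paper's exact reconstruction target may differ. `random_patch_mask` and `masked_mse` are hypothetical names.

```python
import numpy as np

def random_patch_mask(img_size=224, patch=32, mask_ratio=0.6, rng=None):
    """(img_size, img_size) mask with 1 = masked, 0 = visible, chosen per patch."""
    rng = np.random.default_rng() if rng is None else rng
    n = img_size // patch                          # patches per side
    num_masked = int(round(n * n * mask_ratio))
    flat = np.zeros(n * n, dtype=np.int64)
    flat[rng.permutation(n * n)[:num_masked]] = 1
    # Nearest-neighbour upsample: each pixel inherits its patch's mask bit.
    return np.kron(flat.reshape(n, n), np.ones((patch, patch), dtype=np.int64))

def masked_mse(pred, target, mask):
    """MSE over masked pixels only. pred, target: (H, W, C); mask: (H, W)."""
    per_pixel = ((pred - target) ** 2).mean(axis=-1)   # average over channels
    return float((per_pixel * mask).sum() / mask.sum())

rng = np.random.default_rng(0)
img = rng.normal(size=(224, 224, 3))
mask = random_patch_mask(rng=rng)
encoder_input = img * (1 - mask)[..., None]   # masked pixels zeroed out

# A "prediction" that is exact on masked pixels but wrong on visible ones
# still scores zero loss: only the masked region is evaluated.
pred = img + 5.0 * (1 - mask)[..., None]
print(masked_mse(pred, img, mask))   # 0.0
```

With 7×7 = 49 patches and a ratio of 0.6, 29 patches are masked in this sketch, so the encoder sees roughly 40% of the image.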

Global response normalization

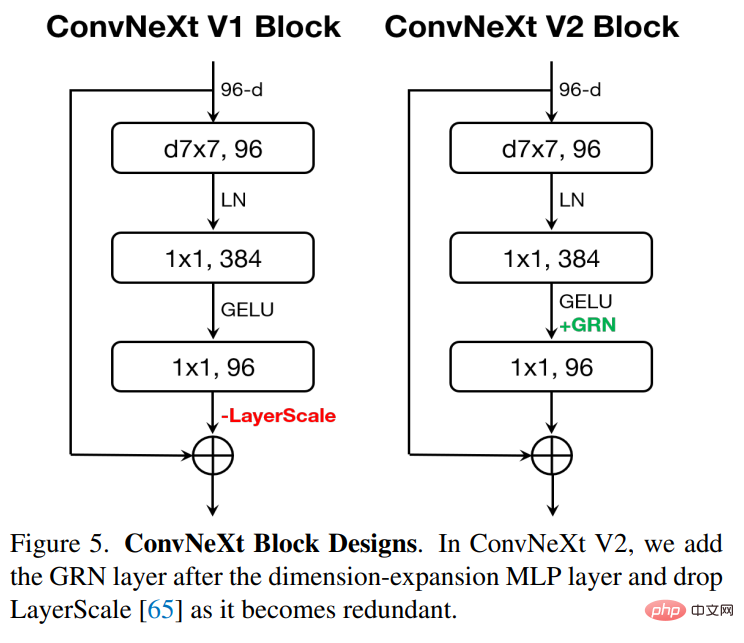

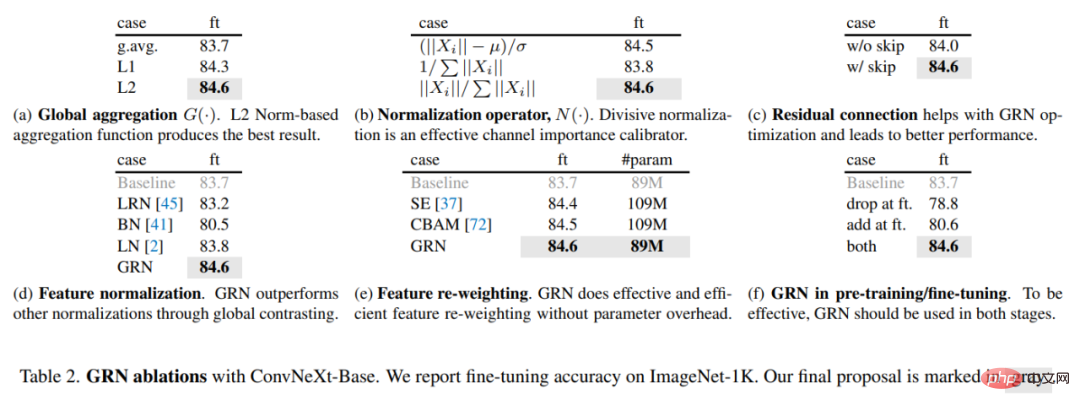

The brain has many mechanisms that promote neuronal diversity. For example, lateral inhibition can help enhance the response of activated neurons, increasing the contrast and selectivity of individual neurons to stimuli while also increasing the response diversity of the neuron population as a whole. In deep learning, this form of lateral inhibition can be implemented through response normalization. This study introduces a new response normalization layer, Global Response Normalization (GRN), which aims to increase contrast and selectivity between channels. A GRN unit has three steps: 1) global feature aggregation, 2) feature normalization, and 3) feature calibration. As shown in the figure below, GRN layers can be incorporated into the original ConvNeXt block.
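The three GRN steps map onto a few array operations. This minimal NumPy sketch follows the formulation in the official ConvNeXt V2 repository as we understand it: a per-channel L2 norm over spatial positions, division by the mean norm across channels, then learnable γ and β with a residual path. The channels-last layout, the `eps` value, and the function name are assumptions for illustration.

```python
import numpy as np

def grn(x, gamma, beta, eps=1e-6):
    """Global Response Normalization on a channels-last tensor.
    x: (N, H, W, C); gamma, beta: (C,) learnable parameters."""
    # 1) Global feature aggregation: L2 norm of each channel's spatial map.
    gx = np.sqrt((x ** 2).sum(axis=(1, 2), keepdims=True))    # (N, 1, 1, C)
    # 2) Feature normalization: divide by the mean norm across channels,
    #    so each channel's response is measured relative to the others.
    nx = gx / (gx.mean(axis=-1, keepdims=True) + eps)         # (N, 1, 1, C)
    # 3) Feature calibration: rescale x, add a bias and a residual path.
    return gamma * (x * nx) + beta + x

rng = np.random.default_rng(0)
x = rng.normal(size=(2, 5, 5, 8))
C = x.shape[-1]
# With gamma = beta = 0 (a zero initialization), GRN is the identity.
print(np.allclose(grn(x, np.zeros(C), np.zeros(C)), x))   # True
# With gamma = 1, channel c is scaled by (1 + N_c): channels with
# above-average energy are amplified more than weak ones.
y = grn(x, np.ones(C), np.zeros(C))
```

The residual term means GRN can start as an identity mapping and learn how strongly to apply inter-channel competition.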

The researchers found experimentally that when GRN is used, LayerScale is no longer necessary and can be removed. Using this new block design, the study built a family of models with different efficiencies and capacities, called the ConvNeXt V2 model family, ranging from lightweight (Atto) to compute-intensive (Huge).
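To show where GRN sits and what dropping LayerScale means in practice, here is a simplified single-image NumPy sketch of one ConvNeXt V2 block: 7×7 depthwise conv, LayerNorm, pointwise expansion to 4C, GELU, GRN, pointwise projection back to C, and a plain residual add with no LayerScale. It reflects our reading of the block design; biases, LayerNorm's learnable affine, and stochastic depth are omitted, GELU uses the tanh approximation, and the parameter-dictionary keys are hypothetical.

```python
import numpy as np

def depthwise_conv7x7(x, k):
    """Depthwise 7x7 conv with zero 'same' padding. x: (H, W, C); k: (7, 7, C)."""
    H, W, _ = x.shape
    xp = np.pad(x, ((3, 3), (3, 3), (0, 0)))
    out = np.zeros_like(x)
    for i in range(7):
        for j in range(7):
            out += xp[i:i + H, j:j + W, :] * k[i, j, :]
    return out

def layer_norm(x, eps=1e-6):
    # Normalize over channels (learnable affine omitted for brevity).
    mu = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mu) / np.sqrt(var + eps)

def gelu(x):
    # tanh approximation of GELU
    return 0.5 * x * (1 + np.tanh(np.sqrt(2 / np.pi) * (x + 0.044715 * x ** 3)))

def grn(x, gamma, beta, eps=1e-6):
    # x: (H, W, C'); no batch axis here, so spatial axes are 0 and 1.
    gx = np.sqrt((x ** 2).sum(axis=(0, 1), keepdims=True))
    nx = gx / (gx.mean(axis=-1, keepdims=True) + eps)
    return gamma * (x * nx) + beta + x

def convnextv2_block(x, p):
    """x: (H, W, C). p: dict with hypothetical parameter names."""
    y = depthwise_conv7x7(x, p["dw"])
    y = layer_norm(y)
    y = gelu(y @ p["w1"])                 # pointwise expand C -> 4C
    y = grn(y, p["gamma"], p["beta"])     # GRN follows the activation
    y = y @ p["w2"]                       # pointwise project 4C -> C
    return x + y                          # residual add; no LayerScale

C = 8
rng = np.random.default_rng(0)
p = {
    "dw": rng.normal(size=(7, 7, C)) * 0.1,
    "w1": rng.normal(size=(C, 4 * C)) * 0.1,
    "w2": rng.normal(size=(4 * C, C)) * 0.1,
    "gamma": np.zeros(4 * C),
    "beta": np.zeros(4 * C),
}
x = rng.normal(size=(14, 14, C))
out = convnextv2_block(x, p)
print(out.shape)  # (14, 14, 8)
```

In a V1-style block the last line would instead be `x + ls * y` with a learnable per-channel `ls` vector; the V2 design simply omits it.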

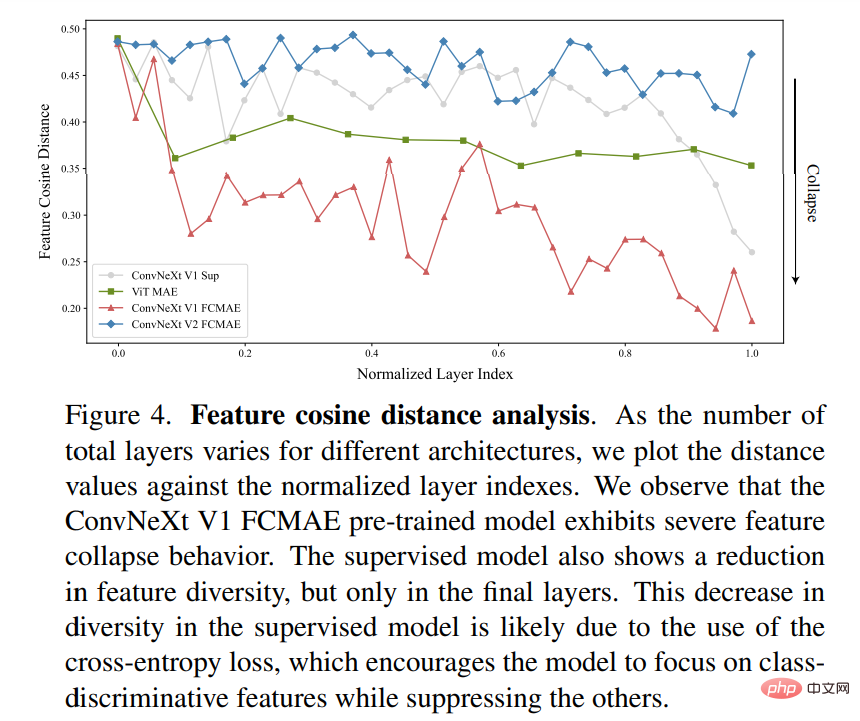

To evaluate the role of GRN, the study pre-trained ConvNeXt V2 with the FCMAE (fully convolutional masked autoencoder) framework. The visualization in Figure 3 below and the cosine distance analysis in Figure 4 show that ConvNeXt V2 effectively alleviates the feature collapse problem. The cosine distance values stay consistently high, indicating that feature diversity is preserved across layers. This resembles the behavior of a ViT pretrained with MAE, suggesting that ConvNeXt V2 learns similarly to ViT under a comparable masked image pre-training framework.
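The cosine-distance analysis can be sketched as follows. Assumption: for an activation map X of shape H×W×C, each channel is flattened into an HW-dimensional vector and the score is (1/C^2) * sum_i sum_j (1 - cos(X_i, X_j)) / 2, which is how we understand the paper's metric. Values near 0 mean the channels have collapsed onto the same pattern. The function name and the synthetic data are illustrative only.

```python
import numpy as np

def mean_pairwise_cosine_distance(x, eps=1e-12):
    """x: (H, W, C) activations. Flatten each channel to an HW vector and
    return (1 / C^2) * sum_ij (1 - cos(X_i, X_j)) / 2, a value in [0, 1]."""
    feats = x.reshape(-1, x.shape[-1]).T                   # (C, HW)
    feats = feats / (np.linalg.norm(feats, axis=1, keepdims=True) + eps)
    cos = feats @ feats.T                                  # (C, C) cosine similarities
    return float(((1.0 - cos) / 2.0).mean())

rng = np.random.default_rng(0)
base = rng.normal(size=(7, 7, 1))
collapsed = np.repeat(base, 16, axis=-1)    # every channel identical
diverse = rng.normal(size=(7, 7, 16))       # independent channels
print(mean_pairwise_cosine_distance(collapsed))  # close to 0: collapsed features
print(mean_pairwise_cosine_distance(diverse))    # noticeably larger
```

Applied layer by layer to a real network, a curve that stays high corresponds to the "consistently high" cosine distance described above.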

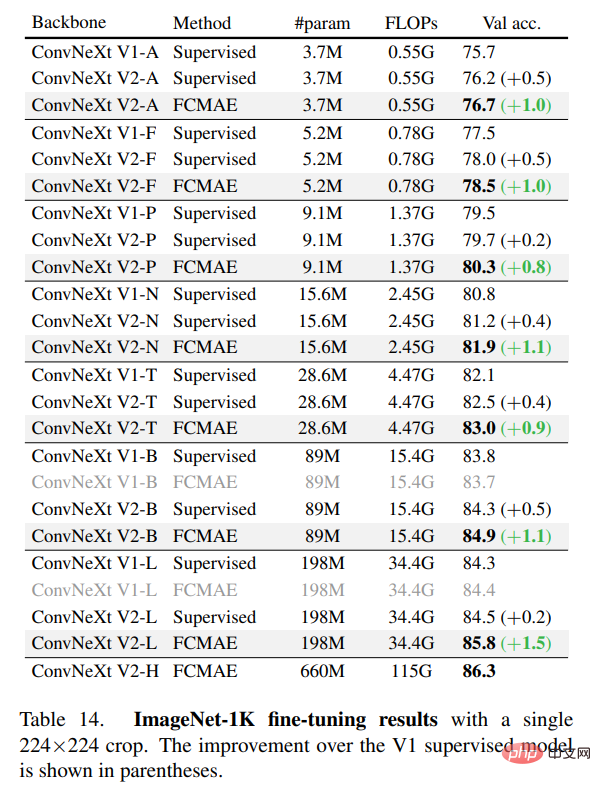

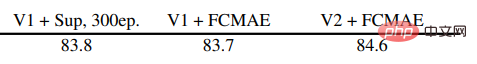

The study further evaluated the fine-tuning performance, and the results are shown in the table below.

With GRN, the FCMAE-pretrained model significantly outperforms its supervised counterpart trained for 300 epochs. GRN improves representation quality by enhancing feature diversity, which is crucial for mask-based pre-training and which ConvNeXt V1 lacks. Notably, this improvement comes with negligible parameter overhead and no meaningful increase in FLOPs.
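To put GRN's footprint in perspective, the sketch below counts the parameters of a single ConvNeXt block of width C under simple assumptions: a 7×7 depthwise conv with bias, one LayerNorm, two pointwise layers (C → 4C → C) with biases, and either LayerScale (C values, V1) or GRN's γ and β on the 4C hidden channels (V2). The stem, downsampling layers, and classification head are ignored, and the helper name is hypothetical. Under these assumptions a V2 block has 7C more parameters than a V1 block, under one percent of the block.

```python
def block_params(C, v2):
    """Rough parameter count for one ConvNeXt block of width C."""
    dw = 7 * 7 * C + C              # depthwise 7x7 kernel + bias
    ln = 2 * C                      # LayerNorm weight + bias
    pw1 = C * 4 * C + 4 * C         # pointwise expand + bias
    pw2 = 4 * C * C + C             # pointwise project + bias
    extra = 2 * 4 * C if v2 else C  # V2: GRN gamma/beta on 4C; V1: LayerScale
    return dw + ln + pw1 + pw2 + extra

for C in (96, 384, 768):
    v1, v2 = block_params(C, False), block_params(C, True)
    print(C, v1, v2, f"{100 * (v2 - v1) / v1:.2f}% more")
```

Because the pointwise layers grow as C², the relative overhead shrinks further in the wider stages.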

Finally, the study examines the importance of GRN in pre-training and fine-tuning. As shown in Table 2(f) below, performance drops significantly both when GRN is removed at fine-tuning time and when a freshly initialized GRN is added only at fine-tuning, indicating that GRN matters in both pre-training and fine-tuning.

Interested readers can read the original text of the paper to learn more about the research details.
